
Literature searching explained

Develop a search strategy

A search strategy is an organised structure of key terms used to search a database. The search strategy combines the key concepts of your search question in order to retrieve accurate results.

Your search strategy should account for all of the following:

- possible search terms

- keywords and phrases

- truncated and wildcard variations of search terms

- subject headings (where applicable)

Each database works differently so you need to adapt your search strategy for each database. You may wish to develop a number of separate search strategies if your research covers several different areas.

It is a good idea to test your strategies and refine them after you have reviewed the search results.

How a search strategy looks in practice

Take a look at this example literature search in PsycINFO (PDF) about self-esteem.

The example shows the subject heading and keyword searches that have been carried out for each concept within our research question and how they have been combined using Boolean operators. It also shows where keyword techniques like truncation, wildcards and adjacency searching have been used.

Search strategy techniques

The next sections show some techniques you can use to develop your search strategy.

Skip straight to:

- Choosing search terms

- Searching with keywords

- Searching for exact phrases

- Using truncated and wildcard searches

- Searching with subject headings

- Using Boolean logic

- Citation searching

Choose search terms

Concepts can be expressed in different ways; for example, “self-esteem” might be referred to as “self-worth”. Your aim is to consider each of your concepts and list the different ways it could be expressed.

To find alternative keywords or phrases for your concepts try the following:

- Use a thesaurus to identify synonyms.

- Search for your concepts on a search engine like Google Scholar, scanning the results for alternative words and phrases.

- Examine relevant abstracts or articles for alternative words, phrases and subject headings (if the database uses subject headings).

When you've done this, you should have lists of words and phrases for each concept as in this completed PICO model (PDF) or this example concept map (PDF).

As you search and scan articles and abstracts, you may discover different key terms to enhance your search strategy.

Truncation and wildcards can save you time and effort by retrieving variant forms of a keyword in a single search.

Search with keywords

Keywords are free text words and phrases. Database search strategies use a combination of free text and subject headings (where applicable).

A keyword search usually looks for your search terms in the title and abstract of a reference. You may wish to search in title fields only if you want a small number of specific results.

Some databases search only for the exact word or phrase you type, so make sure your spelling is accurate or you will miss references.

Search for the exact phrase

If you want words to appear next to each other in an exact phrase, use quotation marks, eg “self-esteem”.

Phrase searching decreases the number of results you get and makes your results more relevant. Most databases allow you to search for phrases, but check the database guide if you are unsure.

Truncation and wildcard searches

You can use truncated and wildcard searches to find variations of your search term. Truncation is useful for finding singular and plural forms of words and variant endings.

Many databases use an asterisk (*) as their truncation symbol; for example, “therap*” will find therapy, therapies, therapist or therapists. Check the database help section if you are not sure which symbol to use.

A wildcard finds variant spellings of words. Use it to search for a single character, or no character. Check the database help section to see which symbol to use as a wildcard.

Wildcards are useful for finding British and American spellings, for example: “behavio?r” in Medline will find both behaviour and behavior.

Some databases use a separate symbol to match exactly one character. For example, in the Medline database, “wom#n” will find woman and also women.
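The symbols differ between databases, but the matching logic is similar. The sketch below is a minimal illustration, not any database's actual implementation: it assumes Ovid-style meanings (* for truncation, ? for zero or one character, # for exactly one character) and translates a search term into a Python regular expression so you can see which words it would retrieve. The function names are hypothetical.

```python
import re

def to_regex(term: str) -> str:
    """Translate a search term into a regular expression.

    Assumed (Ovid-style) symbol meanings; check your database's help pages:
      *  truncation: any number of further letters
      ?  optional wildcard: zero or one letter
      #  mandatory wildcard: exactly one letter
    """
    symbols = {"*": "[a-z]*", "?": "[a-z]?", "#": "[a-z]"}
    # Any other character (letters, hyphens) must match literally
    return "".join(symbols.get(ch, re.escape(ch)) for ch in term.lower())

def matches(term: str, word: str) -> bool:
    """True if the whole word would be retrieved by the search term."""
    return re.fullmatch(to_regex(term), word.lower()) is not None

print(matches("behavio?r", "behavior"))   # True
print(matches("behavio?r", "behaviour"))  # True
print(matches("wom#n", "women"))          # True
```

Note that a very short root such as ill* also matches unrelated words like illustrate, which is why short stems can flood your results.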

Use adjacency searching for more accurate results

You can specify how close two words appear together in your search strategy. This can make your results more relevant; generally the closer two words appear to each other, the closer the relationship is between them.

Commands for adjacency searching differ among databases, so make sure you consult database guides.

In OvidSP databases (like Medline), searching for “physician ADJ3 relationship” will find both physician and relationship within two major words of each other, in any order. This finds more papers than "physician relationship".

Using this adjacency retrieves papers with phrases like "physician patient relationship", "patient physician relationship", "relationship of the physician to the patient" and so on.
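To make adjacency concrete, here is a simplified model of an ADJn search. It is a sketch under stated assumptions, not Ovid's implementation: words are taken to be runs of letters, every word counts (real databases may handle stop words and fields differently), and ADJ3 is treated as "the two words are at most three word positions apart, in either order". The function name within_n_words is hypothetical.

```python
import re

def within_n_words(text: str, a: str, b: str, n: int) -> bool:
    """Simplified model of 'a ADJn b': True if a and b occur at most
    n word positions apart, in either order."""
    words = re.findall(r"[a-z]+", text.lower())
    positions_a = [i for i, w in enumerate(words) if w == a.lower()]
    positions_b = [i for i, w in enumerate(words) if w == b.lower()]
    # abs() makes the order irrelevant; 0 < ... rules out the same position
    return any(0 < abs(i - j) <= n for i in positions_a for j in positions_b)

title = "The relationship of the physician to the patient"
print(within_n_words(title, "physician", "relationship", 3))  # True
```

Here "physician" is three positions after "relationship", so the ADJ3-style check succeeds, while a plain phrase search for "physician relationship" would not match this title.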

Search with subject headings

Database subject headings are controlled vocabulary terms that a database uses to describe what an article is about.

Watch our 3-minute introduction to subject headings video. You can also view the video using Microsoft Stream (available to University members only).

Using appropriate subject headings enhances your search and will help you to find more results on your topic. This is because subject headings find articles according to their subject, even if the article does not use your chosen keywords.

You should combine both subject headings and keywords in your search strategy for each of the concepts you identify. This is particularly important if you are undertaking a systematic review or an in-depth piece of work.

Subject headings may vary between databases, so you need to investigate each database separately to find the subject headings they use. For example, for Medline you can use MeSH (Medical Subject Headings) and for Embase you can use the EMTREE thesaurus.

SEARCH TIP: In Ovid databases, search for a known key paper by title, select the "complete reference" button to see which subject headings the database indexers have given that article, and consider adding relevant ones to your own search strategy.

Use Boolean logic to combine search terms

Boolean operators (AND, OR and NOT) allow you to try different combinations of search terms or subject headings.

Databases often show Boolean operators as buttons or drop-down menus that you can click to combine your search terms or results.

The main Boolean operators are:

OR is used to find articles that mention either (or both) of the topics you search for.

AND is used to find articles that mention both of the searched topics.

NOT excludes a search term or concept. It should be used with caution as you may inadvertently exclude relevant references.

For example, searching for “self-esteem NOT eating disorders” finds articles that mention self-esteem but removes any articles that mention eating disorders.
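Conceptually, each search returns a set of records, and the three operators behave like set operations. The sketch below uses Python sets with made-up record numbers (illustration only) to show why OR widens, AND narrows and NOT removes. Record 102 mentions both self-esteem and eating disorders, so NOT drops it even though it may be relevant.

```python
# Made-up record numbers returned by three separate searches
self_esteem = {101, 102, 103, 104}
self_worth = {103, 105}
eating_disorders = {102, 106}

# OR: records in either set (or both) -> union, the result grows
self_esteem_or_worth = self_esteem | self_worth           # {101, 102, 103, 104, 105}
# AND: records in both sets -> intersection, the result shrinks
self_esteem_and_eating = self_esteem & eating_disorders   # {102}
# NOT: records in the first set but not the second -> difference
self_esteem_not_eating = self_esteem - eating_disorders   # {101, 103, 104}
```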

Citation searching

Citation searching is a method to find articles that have been cited by other publications.

Use citation searching (or cited reference searching) to:

- find out whether articles have been cited by other authors

- find more recent papers on the same or similar subject

- discover how a known idea or innovation has been confirmed, applied, improved, extended, or corrected

- help make your literature review more comprehensive.

You can use cited reference searching in:

- OvidSP databases

- Google Scholar

- Web of Science

Cited reference searching can complement your literature search. However, be careful not to rely on citation searching in isolation: a robust literature search is also needed to limit publication bias.

Literature Review: Developing a search strategy


From research question to search strategy

Keeping a record of your search activity

Good search practice could involve keeping a search diary or document detailing your search activities (Phelps et al. 2007, pp. 128-149). This helps you keep track of effective search terms and helps others reproduce your steps and get the same results.

This record could be a document, table or spreadsheet with:

- the names of the sources you search and the provider you accessed them through, eg Medline (Ovid), Web of Science (Clarivate), as well as any other literature sources you used

- how you searched (keyword and/or subject headings)

- which search terms you used (which words and phrases)

- any search techniques you employed (truncation, adjacency, etc)

- how you combined your search terms (AND/OR). Check out the Database Help guide for more tips on Boolean searching.

- the number of search results from each source and each strategy used. This can provide evidence of a gap in the literature and help confirm the importance of your research question.
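If you keep your search record as a spreadsheet, one row per search works well. The sketch below is one possible layout, assuming the columns listed above; the class name, function name and column names are hypothetical. It writes the rows as CSV text that you can save and open in any spreadsheet program.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

@dataclass
class SearchLogEntry:
    """One row of a search record (column names are illustrative)."""
    source: str        # database and provider, e.g. "Medline (Ovid)"
    search_type: str   # keyword and/or subject headings
    terms: str         # words and phrases used
    techniques: str    # truncation, adjacency, etc.
    combination: str   # how terms were combined (AND/OR)
    results: int       # number of results retrieved

def log_to_csv(entries: list[SearchLogEntry]) -> str:
    """Return the entries as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(SearchLogEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buffer.getvalue()
```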

A search planner may help you to organise your thoughts before you conduct your search. If you have any problems organising your thoughts before, during or after searching, please contact your Library Faculty Team for individual help.

- Literature search: a librarian's handout to introduce tools, terms and techniques. Created by Elsevier librarian Katy Kavanagh Web, this document outlines tools, terms and techniques to think about when conducting a literature search.

- Search planner

Literature search cycle

Diagram text description

This diagram illustrates the literature search cycle as a circle divided into four quarters, each with a rectangle describing that step:

- Top left: identify main concepts, by identifying controlled vocabulary terms, synonyms, keywords and spellings.

- Top right: select library resources to search, such as the library catalogue, relevant journal articles and other resources.

- Bottom right: search the resources, considering Boolean searching, proximity searching and truncation.

- Bottom left: review and refine the results by evaluating them, rethinking keywords and creating alerts.

Have a search framework

Search frameworks are mnemonics which can help you focus your research question. They are also useful in helping you to identify the concepts and terms you will use in your literature search.

PICO is a search framework commonly used in the health sciences to focus clinical questions. For example, suppose you work in an aged care facility and are interested in whether cranberry juice might help reduce the common occurrence of urinary tract infections. The PICO framework would look like this:

Now that the issue has been broken down into its elements, it is easier to turn it into an answerable research question: “Does cranberry juice help reduce urinary tract infections in people living in aged care facilities?”

Other frameworks may be helpful, depending on your question and your field of interest. PICO can be adapted to PICOT (which adds Time), PICOS (which adds Study design) or PICOC (which adds Context).

For qualitative questions you could use:

- SPIDER: Sample, Phenomenon of Interest, Design, Evaluation, Research type

For questions about causes or risk:

- PEO: Population, Exposure, Outcomes

For evaluations of interventions or policies:

- SPICE: Setting, Population or Perspective, Intervention, Comparison, Evaluation, or

- ECLIPSE: Expectation, Client group, Location, Impact, Professionals, Service

See the University of Notre Dame Australia’s examples of some of these frameworks.

You can also try some PICO examples in the National Library of Medicine's PubMed training site: Using PICO to frame clinical questions.

Contact Your Faculty Team Librarian

Faculty librarians are here to provide assistance to students, researchers and academic staff by providing expert searching advice, research and curriculum support.

- Faculty of Arts & Education team

- Faculty of Business, Justice & Behavioural Science team

- Faculty of Science team

Further reading


- Last Updated: May 12, 2024 12:18 PM

- URL: https://libguides.csu.edu.au/review

Charles Sturt University is an Australian University, TEQSA Provider Identification: PRV12018. CRICOS Provider: 00005F.

Trinity College Dublin, The University of Dublin


Writing a Literature Review


Getting your search right

Keyword searches, widening your search: truncation and wildcards, combining your terms: search operators, being more specific: phrase and proximity searching, subject headings, combining keyword and subject heading searches, using methodological search filters.


Test your strategy!

- Search the database for each of the test records and make a note of the unique record number for each one - in Medline this is in the UI field.

- Run your search strategy.

- Run a search for all the record numbers for your test set using 'OR' in between each one.

- Next, combine the result of your search strategy with the test set using 'OR'.

- If the number of records retrieved is the same as for your search strategy alone, then the strategy has identified all the test records. If it isn't, combine the test set with the result of your search strategy, this time using 'NOT' (test set NOT search strategy). This will identify the records in your test set which are not being retrieved. Work out why these weren't retrieved and adjust your search strategy accordingly.
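The same check can be expressed with sets of record numbers. This is a minimal sketch with made-up record numbers: the union mirrors the 'OR' step, and the set difference mirrors 'test set NOT search strategy'. The function name missed_records is hypothetical.

```python
def missed_records(strategy: set[str], test_set: set[str]) -> set[str]:
    """Return the test-set records that the search strategy fails to retrieve."""
    combined = strategy | test_set      # strategy OR test set
    if len(combined) == len(strategy):  # count unchanged: every test record found
        return set()
    return test_set - strategy          # test set NOT strategy

strategy_results = {"1001", "1002", "1003", "1004"}
print(missed_records(strategy_results, {"1002", "1005"}))  # {'1005'}
```

Any record numbers returned are the ones to examine: look at their titles, abstracts and subject headings to see which terms your strategy lacks.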

Think about...

- abbreviations

- related terms

- UK/US spellings

- singular/plural forms of words

- thesaurus terms (where available)

Your search is likely to be complex, involving multiple steps for different subjects; these are often called the "strands" or "strings" of the search. Look at the appendices of existing reviews for an idea of what's involved in creating a comprehensive search.

Most people should start by finding all the articles on Topic A, then move on to Topic B, then Topic C (and so on), and finally combine those strands using AND (see Combining your terms: search operators below). This will give you results that mention all of those topics.

You will then need to adapt (or "translate") your strategy for each database depending on the searching options available on each one. A core of terms is used across multiple databases - this is the "systematic" part - BUT with additions and subtractions as necessary. While the words in the title and abstract might remain the same, it's highly likely the thesaurus terms (if they exist) will be different across the databases. You may need to leave out some strings completely; for example, let's say you are doing a study that needs to find Randomised Controlled Trials (RCTs) on a particular disease and its treatment. You will be looking in multiple databases for words to do with the condition, and also words that are used for RCTs. But when you are looking in databases that are composed entirely of RCTs (trials registers), the part of a search looking for RCTs doesn’t need to be included as it's redundant.

The techniques described below will help ensure you cover everything. Contact your Subject Librarian if you would like guidance on constructing your search.

This video from the University of Reading gives a good overview of literature searching tips and tricks:

Jump to 01:45 for truncation and 05:46 for wildcards.

- Contact your Subject Librarian

- How to translate searches between certain databases: a fantastic crib sheet from Karolinska Institutet University Library, showing how to translate searches between Medline (Ovid), PsycInfo (Ovid), Embase (Elsevier), Web of Science, Cinahl (Ebsco), Cochrane (Wiley), and PubMed.

Keyword searches

Most searches have two elements for each topic: the "keywords" part and the "subject headings" part. When you are initially constructing your search and trialling it in a database, you are likely to just add your keywords, click Search, and see how many results that retrieves. After that, for any type of comprehensive search, you should consider limiting your keywords to specific search fields.

A field in this context is where the database only looks at one aspect of the information about the article. Common examples are the Author, Title, Abstract, and Journal Name. More esoteric ones could be fields like the CAS Registry Entry or Corporate Author.

In complex reviews like systematic, scoping and rapid reviews, the accepted wisdom is to limit these "keyword" searches to the Title and Abstract fields, plus (if available, and the search is looking to be comprehensive) any available "Author Keyword" or "Contributed Indexing" fields. It is vital that the keywords you use in these fields are identical (you are using the same words in the Title, Abstract and any related fields) and that you combine them using OR (see Combining your terms: search operators below).

Using keyword searching limited to the Title/Abstract/Keywords fields should reduce the number of results which are retrieved in error or are only on the periphery of your subject. If you do this, please be aware that you will need to ensure that you have definitely also included all relevant subject headings in your search strategy (in databases that use controlled vocabulary) otherwise you risk missing out on useful results. It *is* quite possible that there will be no relevant subject headings in a particular search.

Widening your search: truncation and wildcards

Although some databases will automatically search for variant spellings, mostly they will just search for the exact letters you type in. Use wildcard and truncation symbols to take control of your search and include variations to widen your search and ensure you don't miss something relevant.

A truncation symbol (*) retrieves any number of letters, which is useful for finding different word endings based on the root of a word:

- africa* will find africa, african, africans

- agricultur* will find agriculture, agricultural, agriculturalist

A wildcard symbol (?) replaces a single letter (in some databases, zero or one letter). It's useful for retrieving alternative spellings (e.g. British vs American English) and simple plurals:

- wom?n will find woman or women

- behavio?r will find behaviour or behavior

Hint: Not all databases use the * and ? symbols - some may use different ones (! instead of *, for example), or not have the feature at all, so check the online help section of the database before you start.

Combining your terms: search operators

Search operators (also called Boolean operators) allow you to include multiple words and concepts in your searches. This means you can search for all of your terms at once rather than carrying out multiple searches for each concept.

There are three main operators:

- OR - for combining alternative words for your concepts and widening your results e.g. women OR gender

- AND - for combining your concepts giving more specific results e.g. women AND Africa

- NOT - to exclude specific terms from your search - use this with caution as you might exclude relevant results accidentally!

women OR female

Using OR will bring you back records containing either of your search terms. It will return items that include both terms, but will also return items that contain only one of the terms.

This will give you a broader range of results.

OR can be used to link together synonyms. These are then placed in brackets to show that they are all the same concept.

- (cat OR kitten OR feline)

- (women OR female)

women AND Africa

Using AND will find items that contain both of your search terms, giving you a more specific set of results.

If you're getting too many results, using AND can be a good way to narrow your search.

women NOT Africa

Using NOT will find articles containing a particular term, but will exclude articles containing your second term.

Use this with caution - by excluding results you might miss out on key resources.

Being more specific: phrase and proximity searching

Phrase searching

Sometimes your search may contain common words (e.g. development, communication) which will retrieve too many irrelevant records, even when using an AND search. On many databases, including Google, you can search for a specific phrase by putting it in inverted commas:

- "agricultural development"

- "foot and mouth"

Your search will only bring back items containing these exact phrases.

Some databases automatically perform a phrase search if you do not use any search operators. For example, a search for agriculture africa may be treated as the phrase "agriculture africa", which is not used in English, so you may not find any items on the subject. Use AND between your search words to avoid this.

On Scopus to search for an exact phrase use { } e.g. {agricultural development}. Using quotes on Scopus will find your words in the same field (e.g., title) but not necessarily next to one another. In this database, you need to be very careful with those brackets - {heart-attack} and {heart attack} will return different results because the dash is included. Wildcards are searched as actual characters, e.g. {health care?} returns results such as: Who pays for health care?

Proximity searching

Some databases use proximity operators, which are a more advanced search function. You can use these to tell the database how close one word must be to another and, in some cases, in what order. This makes a search more specific and excludes irrelevant records.

For instance, if you were searching for references about women in Africa, you might retrieve irrelevant records, such as items about women that were published in Africa. A proximity search, for example one that requires both words to appear in the same sentence, makes your search more accurate.

Each database has its own way of proximity searching, often with multiple ways of doing it, so it's important to check the online help before you start. Here are some examples of the variety of possible searches:

- Web of Science: women same Africa - retrieves records where the words 'women' and 'Africa' appear in the same sentence

- JSTOR: "agricultural development"~5 - retrieves records where the words 'agricultural' and 'development' are within five words of one another

- Scopus: agricultural W/2 development - retrieves records where the word 'agricultural' is within two words of the word 'development'.

Subject headings

After completing your keyword search on a topic, you can move on to looking for appropriate subject headings.

Most databases have this controlled vocabulary feature (standardised subject headings or thesaurus terms - a bit like standard tags) which can help ensure you capture all the relevant studies; for example, MEDLINE, CENTRAL and PubMed use the exact same headings, which are called MeSH (Medical Subject Headings). Some of these headings will be the same in other related databases like CINAHL, but many of them will be slightly different, could be the same but have subtly different meanings, or not be there at all.

Not all databases have these types of subject headings - Web of Science and JSTOR don't allow you to search for subject headings like these, although you can of course search for subjects in them.

The easiest way to search for a subject heading is to go to the relevant area in the database that searches specifically for them; this might be called something like Thesaurus, Subject Headings, or similar. Then search for some of the words to do with your topic - not all of them at once, just a word on its own or a very simple phrase. Does this bring anything up? When you read the description, are you talking about the same thing?

You can then tell it to search for everything listed under that subject heading, then move on to looking for another subject heading. It's quite common for one topic to have several relevant headings.

Once you have found all the relevant headings, and made the database run searches for them, you will then combine them together using OR.

Combining keyword and subject heading searches

After you have found all the title/abstract/keywords for Topic A, and then all the relevant subject headings, you then combine those together using OR. You may need to go into the Search History section of the database to do this, and work out whether you can tick boxes next to your various searches to combine them, or have to type out something like "#1 OR #2".

This gives you a "super search" with everything in the database on that topic. It's likely to be a lot!

It might be that combining them gives no more results than either the keywords or the subject headings on their own. This is unusual, but not impossible.

You now go back to the start and for Topic B do the same title/abstract/keywords searches, then the relevant subject headings searches, then combine them as above. Then Topic C, and so on. Again, each of these super searches may have very high numbers - possibly millions.

Finally, combine all these super searches together, but this time using AND, because the results need to mention all the topics. It's possible that there are no articles in that database that mention all those things; the more subjects you AND together, the fewer results you are likely to find. However, it's also possible to end up with zero results because there is a mistake in your search, and in most cases having zero results won't allow you to write your paper or thesis. So contact us if you think you have too few or too many results, and we can advise.
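The whole process produces a numbered search history. The sketch below generates one for two topics taken from the cranberry juice example: a keyword line and a subject heading line per topic, an OR line for each topic's super search, and a final AND line. The Ovid-style syntax and headings are shown for illustration only (check them in your own database), and the function name build_history is hypothetical.

```python
def build_history(topics: list[tuple[str, str]]) -> list[str]:
    """Number search lines the way a database search history does.

    Each topic is a (keyword line, subject heading line) pair. For each topic
    the two lines are ORed into a 'super search'; the final line ANDs every
    super search together.
    """
    lines: list[str] = []
    super_searches: list[int] = []
    for keywords, headings in topics:
        lines.append(keywords)
        keyword_line = len(lines)
        lines.append(headings)
        heading_line = len(lines)
        lines.append(f"#{keyword_line} OR #{heading_line}")
        super_searches.append(len(lines))
    lines.append(" AND ".join(f"#{n}" for n in super_searches))
    return [f"{i}. {line}" for i, line in enumerate(lines, start=1)]

history = build_history([
    ("cranberr*.ti,ab.", "Vaccinium macrocarpon/"),
    ("(urinary tract infection* or UTI*).ti,ab.", "exp Urinary Tract Infections/"),
])
print("\n".join(history))
```

Adding a third topic simply adds three more lines and one more term to the final AND line, which mirrors the Topic A, Topic B, Topic C approach described above.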

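Using methodological search filters

Methodological search filters are search terms or strategies that identify records on a particular topic or aspect, such as study type, age group or language. They are predefined, tried and tested filters which can be applied to a search.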

Study types: 'systematic reviews', 'Randomised Controlled Trials'

Age groups: 'children', 'elderly'

Language: 'English'

They are available to select via the results filters displayed alongside your results and are normally applied at the very end of your search. For instance, on PubMed, after running your search, you can limit by 'Ages', which gives predefined groupings such as 'Infant: birth-23 months'. These limits and filters are not always the same across the databases, so do be careful.


- Last Updated: Oct 10, 2023 1:52 PM

- URL: https://libguides.tcd.ie/literature-reviews


Researching for your literature review: Develop a search strategy


Identify key terms and concepts

Start developing a search strategy by identifying the key words and concepts within your research question. The aim is to identify the words likely to have been used in the published literature on this topic.

For example: What are the key infection control strategies for preventing the transmission of Meticillin-resistant Staphylococcus aureus (MRSA) in aged care homes?

Treat each component as a separate concept so that your topic is organised into separate blocks (concepts).

For each concept block, list the key words derived from your research question, as well as any other relevant terms or synonyms that you have found in your preliminary searches. Also consider singular and plural forms of words, variant spellings, acronyms and relevant index terms (subject headings).

As part of the process of developing a search strategy, it is recommended that you keep a master list of search terms for each key concept. This will make it easier when it comes to translating your search strategy across multiple database platforms.

Concept map template for documenting search terms

Combine search terms and concepts

Boolean operators are used to combine the different concepts in your topic to form a search strategy. The main operators used to connect your terms are AND and OR. See an explanation below:

- Link keywords related to a single concept with OR

- Linking with OR broadens a search (increases the number of results) by searching for any of the alternative keywords

Example: nursing home OR aged care home

- Link different concepts with AND

- Linking with AND narrows a search (reduces the number of results) by retrieving only those records that include all of your specified keywords

Example: nursing home AND infection control

- Using NOT narrows a search by excluding results that contain certain search terms

- Most searches do not require the use of the NOT operator

Example: aged care homes NOT residential homes will retrieve all the results that include the words aged care homes but don't include the words residential homes. So if an article discussed both concepts, it would not be retrieved, as it would be excluded on the basis of the words residential homes.
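The "OR within a concept, AND between concepts" pattern can be automated from your master list of terms. This minimal sketch (function names hypothetical) puts multi-word terms in quote marks, ORs the terms in each concept block, brackets the block, and ANDs the blocks together. It produces generic syntax only; the exact syntax your database accepts may differ, so check its help pages.

```python
def quote(term: str) -> str:
    """Put multi-word terms in quote marks so they are searched as phrases."""
    return f'"{term}"' if " " in term else term

def build_query(concepts: list[list[str]]) -> str:
    """OR the terms within each concept block, then AND the blocks together."""
    blocks = []
    for terms in concepts:
        block = " OR ".join(quote(t) for t in terms)
        # Brackets keep each OR group together when the blocks are ANDed
        blocks.append(f"({block})" if len(terms) > 1 else block)
    return " AND ".join(blocks)

query = build_query([
    ["nursing home", "aged care home"],
    ["infection control"],
    ["MRSA", "meticillin-resistant staphylococcus aureus"],
])
print(query)
```

Keeping the terms as data rather than as a typed-out string also makes it easier to translate the same concepts into another database's syntax later.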

See the website for Venn diagrams demonstrating the function of AND/OR/NOT:

Combine the search terms using Boolean

Advanced search operators - truncation and wildcards

By using a truncation symbol you can capture all of the various endings possible for a particular word. This may increase the number of results and reduce the likelihood of missing something relevant. Some tips about truncation:

- The truncation symbol is generally an asterisk symbol * and is added at the end of a word.

- It may be added to the root of a word that is a word in itself. Example: prevent* will retrieve prevent, preventing, prevention, preventative etc. It may also be added to the root of a word that is not a word in itself. Example: strateg* will retrieve strategy, strategies, strategic, strategize etc.

- If you don't want to retrieve all possible variations, an easy alternative is to use the OR operator instead, e.g. strategy OR strategies. Always use OR instead of truncation where the root word is very short, e.g. ill OR illness instead of ill*, which would also retrieve unrelated words such as illustrate or illegal.

There are also wildcard symbols that function like truncation but are often used in the middle of a word to replace zero, one or more characters.

- Unlike the truncation symbol, which is usually an asterisk, wildcards vary across database platforms

- Common wildcard symbols are the question mark ? and hash #.

- Example: wom#n finds woman or women, p?ediatric finds pediatric or paediatric.

See the Database search tips for details of these operators, or check the Help link in any database.

Phrase searching

For words that you want to keep as a phrase, place two or more words in "inverted commas" or "quote marks". This will ensure word order is maintained and that you only retrieve results that have those words appearing together.

Example: “nursing homes”

A few databases, such as Ovid Medline and other databases in the Ovid suite, don't require the use of quote marks. The Database search tips guide provides details on phrase searching in key databases, or you can check the Help link in any database.

Subject headings (index terms)

Identify appropriate subject headings (index terms).

Many databases use subject headings to index content. These are selected from a controlled list and describe what the article is about.

A comprehensive search strategy is often best achieved by using a combination of keywords and subject headings where possible.

You don't need in-depth knowledge of subject headings to benefit from the improved search performance they offer.

Advantages of subject searching:

- Helps locate articles that use synonyms, variant spellings and plurals

- Search terms don’t have to appear in the title or abstract

Note: Subject headings are often unique to a particular database, so you will need to look for appropriate subject headings in each database you intend to use.

Subject headings are not available for every topic, and it is best to select them only if they relate closely to your area of interest.

MeSH (Medical Subject Headings)

The MeSH thesaurus provides standard terminology, imposing uniformity and consistency on the indexing of biomedical literature. In PubMed/MEDLINE, each record is tagged with MeSH (Medical Subject Headings) terms.

The MeSH vocabulary includes:

- Main Headings (Descriptors): represent concepts found in the biomedical literature

- Check Tags: headings that are routinely considered for every article (eg. Species (including humans), Sex, Age groups (for humans), Historical time periods)

- Qualifiers (Subheadings): attached to MeSH headings to describe a specific aspect of a concept

- Publication Types: describe the type of publication being indexed, i.e. what the item is, not what the article is about (eg. Letter, Review, Randomized Controlled Trial)

- Supplementary Concept Records: terms in a separate thesaurus, primarily substance terms

Create a 'gold set'

It is useful to build a ‘sample set’ or ‘gold set’ of relevant references before you develop your search strategy.

Sources for a 'gold set' may include:

- key papers recommended by subject experts or supervisors

- citation searching - looking at a reference list to see who has been cited, or using a citation database (eg. Scopus, Web of Science) to see who has cited a known relevant article

- results of preliminary scoping searches.

The papers in your 'gold set' can then be used to help you identify relevant search terms:

- Look up your 'gold set' articles in a database that you will use for your literature review. For the articles indexed in the database, look at the records to see what keywords and/or subject headings are listed.

The 'gold set' will also provide a means of testing your search strategy:

- If an article in your gold set is indexed in the database but is not retrieved by your search, revise your search strategy so that it is retrieved (see which concepts or keywords could be incorporated into your search strategy).

- If your search strategy is retrieving a lot of irrelevant results, look at the irrelevant records to determine why they are being retrieved. What keywords or subject headings are causing them to appear? Can you change these without losing any relevant articles from your results?

- Information on the process of testing your search strategy using a gold set can be found in the systematic review guide.
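The gold-set check described above amounts to comparing sets of records. Here is a minimal Python sketch. The record identifiers are hypothetical placeholders; in practice you might use PMIDs or DOIs. "Recall" here means the share of indexed gold-set articles that your search retrieves. The function name is illustrative only.

```python
def evaluate_against_gold_set(retrieved, gold_set, indexed):
    """Check a search against a gold set of known relevant records.

    Only gold-set records that the database indexes can be retrieved, so
    recall is measured against that subset. Returns None for recall if no
    gold-set record is indexed in this database.
    """
    retrievable = gold_set & indexed
    found = retrievable & retrieved
    missed = retrievable - retrieved
    recall = len(found) / len(retrievable) if retrievable else None
    return {"recall": recall, "missed": sorted(missed)}


# Hypothetical record identifiers (in practice, e.g. PMIDs or DOIs).
gold_set = {"A1", "A2", "A3", "A4"}
indexed = {"A1", "A2", "A3", "B9"}    # A4 is not in this database
retrieved = {"A1", "A3", "C5", "C6"}  # results of the current search
print(evaluate_against_gold_set(retrieved, gold_set, indexed))
# {'recall': 0.6666666666666666, 'missed': ['A2']}
```

The "missed" list is where to start. Look up those records and compare their keywords and subject headings with your strategy.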

Example search strategy

A search strategy is the planned and structured organisation of terms used to search a database.

An example search strategy incorporating all three concepts (infection control, MRSA and aged care homes), which could be applied to different databases, is shown below.

You will use a combination of search operators to construct a search strategy, so it’s important to keep your concepts grouped together correctly. This can be done with parentheses (round brackets), or by searching for each concept separately on its own line.

In a nested format (combined into a single line using parentheses), the search strategy looks like this:

("infection control*" OR "infection prevention") AND ("methicillin resistant staphylococcus aureus" OR "meticillin resistant staphylococcus aureus" OR MRSA) AND ("aged care home*" OR "nursing home*")
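If you keep your concepts and their alternative terms in a list, you can generate the nested line automatically. Below is a minimal Python sketch, assuming that any term containing a space should be quoted as a phrase. The function names are hypothetical, and the output still needs adapting to each database's syntax.

```python
def quote_if_phrase(term):
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_nested_search(concepts):
    """Combine synonyms with OR inside parentheses, then join concepts with AND."""
    groups = []
    for synonyms in concepts:
        groups.append("(" + " OR ".join(quote_if_phrase(t) for t in synonyms) + ")")
    return " AND ".join(groups)


concepts = [
    ["infection control*", "infection prevention"],
    ["methicillin resistant staphylococcus aureus",
     "meticillin resistant staphylococcus aureus", "MRSA"],
    ["aged care home*", "nursing home*"],
]
print(build_nested_search(concepts))
```

Run on these three concepts, it reproduces the nested line above. In Ovid, where quote marks are not required, you could drop the quoting step.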


Literature Reviews

- 3. Search the literature


Creating a search strategy


When conducting a literature review, it is imperative to brainstorm a list of keywords related to your topic. Examining the titles, abstracts, and author-provided keywords of pertinent literature is a great starting point.

Things to keep in mind:

- Alternative spellings (e.g., behavior and behaviour)

- Variants and truncation (e.g., environ* = environment, environments, environmental, environmentally)

- Synonyms (e.g., alternative fuels >> electricity, ethanol, natural gas, hydrogen fuel cells)

- Phrases and double quotes (e.g., "food security" versus food OR security)

One way to visually organize your thoughts is to create a table where each column represents one concept in your research question. For example, if your research question is...

Does social media play a role in the number of eating disorder diagnoses in college-aged women?

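...then your table would have one column for each concept (social media, eating disorders, and college-aged women), with synonyms and related terms listed under each.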

Generative AI tools, such as chatbots, are actually quite helpful at this stage when it comes to brainstorming synonyms and other related terms. You can also look at author-provided keywords from benchmark articles (key papers related to your topic), databases' controlled vocabularies, or do a preliminary search and look through abstracts from relevant papers.

Generative AI tools: ChatGPT, Google Gemini (formerly Bard), Claude, Microsoft Copilot

For more information on how to incorporate AI tools into your research, check out the section on AI Tools.

Boolean searching can yield more effective and precise search results. Boolean operators include AND, OR, and NOT. These are logic-based words that help search engines narrow down or broaden search results.

Using the Operators

The Boolean operator AND tells a search engine that you want to find information about two (or more) search terms. For example, sustainability AND plastics. This will narrow down your search results because the search engine will only bring back results that include both search terms.

The Boolean operator OR tells the search engine that you want to find information about either search term you've entered. For example, sustainability OR plastics. This will broaden your search results because the search engine will bring back any results that have either search term in them.

The Boolean operator NOT tells the search engine that you want to find information about the first search term, but nothing about the second. For example, sustainability NOT plastics. This will narrow down your search results because the search engine will bring back only resources about the first search term (sustainability) and exclude any resources that include the second search term (plastics).
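The three operators behave like set operations on the records that contain each term. Here is a minimal Python sketch using a hypothetical index that maps each term to the IDs of the records containing it. Real databases do this at scale, but the logic is the same.

```python
# Hypothetical index: each search term maps to the set of record IDs containing it.
index = {
    "sustainability": {1, 2, 3, 4},
    "plastics": {3, 4, 5},
}

sustainability = index["sustainability"]
plastics = index["plastics"]

print(sorted(sustainability & plastics))  # AND: [3, 4]
print(sorted(sustainability | plastics))  # OR: [1, 2, 3, 4, 5]
print(sorted(sustainability - plastics))  # NOT: [1, 2]
```

AND keeps only the overlap, OR takes everything, and NOT removes every record containing the second term. Records 3 and 4 are dropped even if they are mainly about sustainability.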

Some databases offer a thesaurus, controlled vocabulary, or list of available subject headings that are assigned to each of their records, either by an indexer or by the original author. Using controlled vocabularies is a highly effective, efficient, and deliberate way of comprehensively discovering the material within a field of study.

- APA Thesaurus of Psychological Index Terms (via PsycInfo database)

- Medical Subject Headings (MeSH) (via PubMed)

- List of ProQuest database thesauri

Web of Science's Core Collection offers a list of subject categories that are searchable by the Web of Science Categories field.

Reach out to a Duke University Libraries librarian by email or use the chat function.


While not essential for traditional literature reviews, documenting your search can help you:

- Keep track of what you've done so that you don't repeat unproductive searches

- Reuse successful search strategies for future papers

- Describe your search process for manuscripts

- Justify your search process

Documenting your search will help you stay organized and save time when tweaking your search strategy. This is a critical step for rigorous review papers, such as systematic reviews.

One of the easiest ways to document your search strategy is to use a table.

If you find that you're receiving too many results, try the following tips:

- Use more AND operators to connect keywords/concepts in order to narrow down your search.

- Use more specific keywords rather than an umbrella term (e.g., "formaldehyde" instead of "chemical").

- Use quotation marks (" ") to search an entire phrase.

- Use filters such as date, language, document type, etc.

- Examine your research question to see if it's still too broad.

On the other hand, if you're not receiving enough results:

- Use more OR operators to connect related terms and bring in additional results.

- Use more generic terms (e.g., "acetone" instead of "dimethyl ketone") or fewer keywords altogether.

- Use truncation (*) to expand your results (e.g., toxi* retrieves toxic, toxin, toxins).

- Examine your research question to see if it's too narrow.

Grey (or gray) literature refers to research materials and publications that are not commercially published or widely distributed through traditional academic channels. If you are tasked with doing an intensive type of review or evidence synthesis, or you are involved in research related to policy-making, you will likely want to include searching for grey literature. This type of literature includes:

- working papers

- government documents

- conference proceedings

- theses and dissertations

- white papers, etc.

For more information on grey literature, please see our Grey Literature guide.

- Public policy

- Health/medicine

- Statistics/data

- Thesis/dissertation

- ProQuest Central Search for articles from thousands of scholarly journals.

- OpenDOAR OpenDOAR is the quality-assured, global Directory of Open Access Repositories. We host repositories that provide free, open access to academic outputs and resources.

- OAIster A catalog of millions of records for open-access resources, harvested from repositories worldwide and searchable through WorldCat.

- GreySource An index of repository hyperlinks across all disciplines.

- Pew Research Center Pew Research Center is a nonpartisan fact tank that informs the public about the issues, attitudes and trends shaping the world. We conduct public opinion polling, demographic research, content analysis and other data-driven social science research.

- The World Bank The World Bank is a vital source of financial and technical assistance to developing countries around the world.

- World Health Organization (WHO): IRIS IRIS is the Institutional Repository for Information Sharing, a digital library of WHO's published material and technical information in full text produced since 1948.

- PolicyArchive PolicyArchive is a comprehensive digital library of public policy research containing over 30,000 documents.

- Kaiser Family Foundation KFF is the independent source for health policy research, polling, and journalism. Our mission is to serve as a nonpartisan source of information for policymakers, the media, the health policy community, and the public.

- MedNar Mednar is a free, medically-focused deep web search engine that uses Explorit Everywhere!, an advanced search technology by Deep Web Technologies. As an alternative to Google, Mednar accelerates your research with a search of authoritative public and deep web resources, returning the most relevant results to one easily navigable page.

- Global Index Medicus The Global Index Medicus (GIM) provides worldwide access to biomedical and public health literature produced by and within low-middle income countries. The main objective is to increase the visibility and usability of this important set of resources. The material is collated and aggregated by WHO Regional Office Libraries on a central search platform allowing retrieval of bibliographical and full text information.

For more in-depth information related to grey literature searching in medicine, please visit Duke Medical Center Library's guide.

- Education Resources Information Center (ERIC) ERIC is a comprehensive, easy-to-use, searchable, Internet-based bibliographic and full-text database of education research and information. It is sponsored by the Institute of Education Sciences within the U.S. Department of Education.

- National Institute for Occupational Safety and Health (NIOSHTIC-2) NIOSHTIC-2 is a searchable bibliographic database of occupational safety and health publications, documents, grant reports, and other communication products supported in whole or in part by NIOSH (CDC).

- National Technical Information Service (NTIS) The National Technical Information Service acquires, indexes, abstracts, and archives the largest collection of U.S. government-sponsored technical reports in existence. Its National Technical Reports Library (NTRL) offers online, free and open access to these authenticated government technical reports.

- Science.gov Science.gov provides access to millions of authoritative scientific research results from U.S. federal agencies.

- GovInfo GovInfo is a service of the United States Government Publishing Office (GPO), which is a Federal agency in the legislative branch. GovInfo provides free public access to official publications from all three branches of the Federal Government.

- CQ Press Library Search for analysis of Congressional actions and US political issues. Includes CQ Weekly and CQ Researcher.

- Congressional Research Service (CRS) This collection provides the public with access to research products produced by the Congressional Research Service (CRS) for the United States Congress.

Please see the Data Sets and Collections page from our Statistical Sciences guide.

- arXiv arXiv is a free distribution service and an open-access archive for nearly 2.4 million scholarly articles in the fields of physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering and systems science, and economics. Materials on this site are not peer-reviewed by arXiv.

- OSF Preprints OSF Preprints is an open access option for discovering multidisciplinary preprints as well as postprints and working papers.


- Last Updated: May 17, 2024 8:42 AM

- URL: https://guides.library.duke.edu/litreviews


Literature Search Basics

Develop a search strategy.


- A search strategy includes a combination of keywords, subject headings, and limiters (language, date, publication type, etc.)

- A search strategy should be planned out and practiced before executing the final search in a database.

- A search strategy and search results should be documented throughout the searching process.

What is a search strategy?

A search strategy is an organized combination of keywords, phrases, subject headings, and limiters used to search a database.

Your search strategy will include:

- keywords

- Boolean operators

- variations of search terms (synonyms, suffixes)

- subject headings

Your search strategy may include:

- truncation (where applicable)

- phrases (where applicable)

- limiters (date, language, age, publication type, etc.)

A search strategy usually requires several iterations. You will need to test the strategy along the way to ensure that you are finding relevant articles. It's also a good idea to review your search strategy with your co-authors. They may have ideas about terms or concepts you may have missed.

Additionally, each database you search is developed differently, so you will need to adjust your strategy for each database you search. For instance, Embase is a European database, and many of its medical terms are slightly different from those used in MEDLINE and PubMed.

Choose search terms

Start by writing down as many terms as you can think of that relate to your question. You might try cited reference searching to find a few good articles that you can review for relevant terms.

Remember that most terms or concepts can be expressed in different ways. A few things to consider:

- synonyms: "cancer" may be referred to as "neoplasms", "tumors", or "malignancy"

- abbreviations: search for the spelled-out term, not just the abbreviation

- generic vs. trade names of drugs

Search for the exact phrase

If you want words to appear next to each other in an exact phrase, use quotation marks, eg “self-esteem”.

Phrase searching decreases the number of results you get. Most databases allow you to search for phrases, but check the database guide if you are unsure.

Truncation and wildcards

Many databases use an asterisk (*) as their truncation symbol to find various word endings like singulars and plurals. Check the database help section if you are not sure which symbol to use.

For example, therap* retrieves therapy, therapies, therapist or therapists.

Use a wildcard (?) to find different spellings like British and American spellings.

"Behavio?r" retrieves behaviour and behavior.

Searching with subject headings

Database subject headings are controlled vocabulary terms that a database uses to describe what an article is about.

Using appropriate subject headings enhances your search and will help you to find more results on your topic. This is because subject headings find articles according to their subject, even if the article does not use your chosen key words.

You should combine both subject headings and keywords in your search strategy for each of the concepts you identify. This is particularly important if you are undertaking a systematic review or an in-depth piece of work.

Subject headings may vary between databases, so you need to investigate each database separately to find the subject headings they use. For example, for MEDLINE you can use MeSH (Medical Subject Headings) and for Embase you can use the EMTREE thesaurus.

SEARCH TIP: In Ovid databases, search for a known key paper by title, select the "complete reference" button to see which subject headings the database indexers have given that article, and consider adding relevant ones to your own search strategy.

Use Boolean logic to combine search terms

Boolean operators (AND, OR and NOT) allow you to try different combinations of search terms or subject headings.

Databases often show Boolean operators as buttons or drop-down menus that you can click to combine your search terms or results.

The main Boolean operators are:

OR is used to find articles that mention either of the topics you search for.

AND is used to find articles that mention both of the searched topics.

NOT excludes a search term or concept. It should be used with caution as you may inadvertently exclude relevant references.

For example, searching for “self-esteem NOT eating disorders” finds articles that mention self-esteem but removes any articles that mention eating disorders.

Adjacency searching

Use adjacency operators to search by phrase or for two or more words in relation to one another. Adjacency searching commands differ among databases. Check the database help section if you are not sure which searching commands to use.

In Ovid Medline

"breast ADJ3 cancer" finds the word breast within three words of cancer, in any order.

This includes breast cancer or cancer of the breast.
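An adjacency search can be modelled as a check on word positions. This minimal Python sketch assumes Ovid-style counting, where ADJ1 means directly next to each other, and it accepts the two terms in either order. Other platforms' proximity operators (N, NEAR, W) may count differently. The function name and sample titles are hypothetical.

```python
import re


def within_n_words(text, term_a, term_b, n):
    """True if term_a and term_b occur within n word positions of each other, in either order.

    Assumes Ovid-style ADJn counting, where n=1 means directly adjacent.
    """
    words = re.findall(r"[a-z0-9]+", text.lower())
    positions_a = [i for i, w in enumerate(words) if w == term_a.lower()]
    positions_b = [i for i, w in enumerate(words) if w == term_b.lower()]
    return any(abs(i - j) <= n for i in positions_a for j in positions_b)


print(within_n_words("Cancer of the breast in older women", "breast", "cancer", 3))              # True
print(within_n_words("Breast cancer screening", "breast", "cancer", 3))                          # True
print(within_n_words("Breast tissue was examined for signs of cancer", "breast", "cancer", 3))  # False
```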

Cited Reference Searching

Cited reference searching is a method to find articles that have been cited by other publications.

Use cited reference searching to:

- find keywords or terms you may need to include in your search strategy

- find pivotal papers in the same or similar subject area

- find pivotal authors in the same or similar subject area

- track how a topic has developed over time

Cited reference searching is available through these tools:

- Web of Science

- Google Scholar


- Last Updated: Nov 29, 2022 3:34 PM

- URL: https://mdanderson.libguides.com/literaturesearchbasics

Best Practice for Literature Searching


Creating a search strategy

Once you have determined what your research question is and where you think you should search, you need to translate your question into a usable search. Doing so will:

- Make it much more likely that you will find the relevant research and minimise false hits (irrelevant results)

- Save you time in the long run

- Help you to stay objective throughout your searching and stick to your plan

- Help you replicate and update your results (where needed)

- Help future researchers build on your research.

If you need to explore a topic first, your search strategy can initially be quite loose. You can then revisit search terms and update your search strategy accordingly. Record your search strategy as you develop it and capture the final version for each place that you search.

Remember that information retrieval in the area of food is complex because of the broadness of the field and the way in which content is indexed. As a result, there is often a high level of ‘noise’ when searching food topics in a database not designed for food content. Creating successful search strategies involves knowledge of a database, its scope, indexing and structure.

A successful search strategy also needs:

- Key concepts and meaningful terms

- Keywords or subject headings

- Alternative keywords

- Care in linking concepts correctly

- Regular evaluation of search results, to ensure that your search is focused

- A detailed record of your final strategy. You will need to re-run your search at the end of the review process to catch any new literature published since you began.


Using a search matrix helps you brainstorm and collect words to include in your search. To populate a search matrix:

- Identify the main concepts in your search

- Run initial searches with your terms, scanning abstract and subject terms (sometimes called descriptors, keywords, MeSH headings, or thesaurus terms, depending on which database you are using) of relevant results for words to add to the matrix.

- Explore a database thesaurus hierarchy for suitable broader and narrower terms.

Note: You don’t need to fill all of the boxes in a search matrix.

You will need to run some test searches as you experiment with your matrix, and these will help you refine your search strategy. For the search on the example question:

- Some of the broader terms turned out to be too broad, introducing a host of irrelevant results about pork and chicken

- Some of the narrower terms were unnecessary, as any result containing “beef extract” is captured by just using the term beef.


The revised matrix shows both the adjustments made to terms and how the terms are connected with Boolean operators. Different forms of the same concept (within each column) are connected with OR, and the different concepts are connected with AND.

Search tools

- Boolean operators

- Phrases and proximity searching

- Truncation and wildcards

Boolean operators tell a database or search engine how the terms you type are related to each other.

Use OR to connect variations representing the same concept. In many search interfaces you will want to put your OR components inside parentheses like this: (safe OR “food safety” OR decontamination OR contamination OR disinfect*). These are now lumped together into a single food safety concept for your search.

Use AND to link different concepts. By typing (safe OR “food safety” OR decontamination OR contamination OR disinfect*) AND (beef OR “cattle carcasses”)—you are directing the database to display results containing both concepts.

NOT eliminates all results containing a specific word. Use NOT with caution. The term excluded might be used in a way you have not anticipated, and you will not know because you will not see the missing results.

Learn more about using Boolean operators: Research Basics: Using Boolean Operators to Build a Search (ifis.org)

The search from the revised matrix would look like this in a database:

("food safety" OR safety OR decontamination OR contamination OR disinfection) AND (thaw* OR defrost* OR "thawing medium") AND ("sensory quality attributes" OR "sensory perception" OR quality OR aroma OR appearance OR "eating quality" OR juiciness OR mouthfeel OR texture OR "mechanical properties" OR "sensory analysis" OR "rheological properties") AND (beef OR "cattle carcasses")

Thesaurus terms will help you capture variations in words and spellings that researchers might use to refer to the same concept, but you can and should also use other mechanisms utilised by databases to do the same. This is especially important for searches in databases where the thesaurus is not specialised for food science.

- Phrase searching, putting two or more words inside quotation marks like “food safety”, will ensure that those words appear together as the phrase in a single field (i.e. title or abstract or subject heading). Phrase searching can eliminate false hits where the words used separately do not represent the needed concept.

- Some databases allow you to use proximity searching to specify that words need to be near each other. For instance, if you type ripening N5 cheese you will get results with a maximum of five words between ripening and cheese. You would get results containing cheese ripening as well as results containing ripening of semi-hard goat cheese.

Learn how to test if a phrase search or a proximity search is the better choice for your search: Proximity searching, phrase searching, and Boolean AND: 3 techniques to focus your literature search (ifis.org)

Note: Proximity symbols vary from database to database. Some use N plus a number, while others use NEAR, ADJ or W. Always check the database help section to be sure that you are using the right symbols for that database.

Truncating a word means typing the start of a word, followed by a symbol, usually an asterisk (*). This symbol tells the database to return the letters you have typed followed by any number of further letters, including none. It is an easy way to capture a concept that might be expressed with a variety of endings.

Sometimes you need to adjust where you truncate to avoid irrelevant results. Compare the results for nutri* and nutrit*: nutri* also retrieves words such as nutrient and nutrients, which nutrit* excludes.

Inserting wildcard symbols into words covers spelling variations. In some databases, typing organi?ation would return results with organisation or organization, and flavo#r would bring back results with flavor or flavour.

Note: While the truncation symbol is often *, it can also be $ or !. Wildcard symbols also vary from database to database; $ or ? are sometimes used. Always check the database help section to be sure that you are using the right symbols for that database.

In building a search you can combine all the tools available to you. “Brewer* yeast”, which uses both phrase searching and truncation, will bring back results for brewer yeast, brewer’s yeast and brewers yeast, three variations which are all used in the literature.

Best Practice!

BEST PRACTICE RECOMMENDATION: Always check a database's help section to be sure that you are using the correct proximity, truncation or wildcard symbols for that database.

Handsearching

It is good practice to supplement your database searches with handsearching. This is the process of manually looking through the table of contents of journals and conferences to find studies that your database searches missed. A related activity is looking through the reference lists of relevant articles found through database searches. There are three reasons why doing both these things is a good idea:

- If, through handsearching, you identify additional articles which are in the database you used but weren’t included in the results from your searches, you can look at the article records to consider if you need to adjust your search strategy. You may have omitted a useful variation of a concept from your search string.

- Even when your search string is excellent, some relevant abstracts and records don’t contain terms that allow them to be easily identified in a search.

- References might point to research published before the indexing began for the databases you are using.

For handsearching, target journals or conference proceedings that are clearly in the area of your topic and look through tables of contents. Sometimes valuable information within supplements or letters is not indexed within databases.

Academic libraries might subscribe to tools which can speed the process such as Zetoc (which includes conference and journal contents) or Browzine (which only covers journals). You can also see past and current issues’ tables of contents on a journal’s webpage.

Handsearching is a valuable but labour-intensive activity, so think carefully about where to invest your time.

Best practice!

BEST PRACTICE RECOMMENDATION: Ask a colleague, lecturer, or librarian to review your search strategy. This can be very helpful, especially if you are new to a topic. It adds credibility to your literature search and will help ensure that you are running the best search possible.

BEST PRACTICE RECOMMENDATION: Remember to save a detailed record of your searches so that you can run them shortly before you are ready to submit your project to see if any new relevant research has been published since you embarked on your project. A good way to do this is to document:

- Where the search was run

- The exact search

- The date it was run

- The number of results

Keeping all this information will make it easy to see if your search picks up new results when you run it again.
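The four items above map naturally onto a small record type that you can save as a CSV file alongside your project. Here is a minimal Python sketch. The class and function names, the database name "ExampleDB", and the result counts are all hypothetical.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date


@dataclass
class SearchRecord:
    """One row of a search log: where, what, when and how many results."""
    database: str
    search: str
    date_run: date
    results: int


def new_results(previous, rerun):
    """Difference in result counts between two runs of the same search.

    Only a rough signal: records can also be removed or re-indexed, so compare
    the exported results to identify genuinely new items.
    """
    return rerun.results - previous.results


def to_csv(records):
    """Write search records to CSV text so they can be kept with the project."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(SearchRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


# Hypothetical log entries for the same search run twice.
first_run = SearchRecord("ExampleDB", "beef AND thaw*", date(2024, 1, 15), 120)
rerun = SearchRecord("ExampleDB", "beef AND thaw*", date(2024, 6, 1), 132)
print(new_results(first_run, rerun))  # 12
print(to_csv([first_run, rerun]))
```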

BEST PRACTICE RECOMMENDATION: If you are publishing your research, take note of journals that appear frequently in your search results, as an indication of where to publish research on your topic for good impact.


- Last Updated: May 17, 2024 5:48 PM

- URL: https://ifis.libguides.com/literature_search_best_practice

- Open access

- Published: 14 August 2018

Defining the process to literature searching in systematic reviews: a literature review of guidance and supporting studies

Chris Cooper (ORCID: orcid.org/0000-0003-0864-5607), Andrew Booth, Jo Varley-Campbell, Nicky Britten and Ruth Garside

BMC Medical Research Methodology, volume 18, Article number: 85 (2018)


Systematic literature searching is recognised as a critical component of the systematic review process. It involves a systematic search for studies and aims for a transparent report of study identification, leaving readers clear about what was done to identify studies, and how the findings of the review are situated in the relevant evidence.

Information specialists and review teams appear to work from a shared and tacit model of the literature search process. How this tacit model has developed and evolved is unclear, and it has not been explicitly examined before.

The purpose of this review is to determine if a shared model of the literature searching process can be detected across systematic review guidance documents and, if so, how this process is reported in the guidance and supported by published studies.

A literature review.

Two types of literature were reviewed: guidance and published studies. Nine guidance documents were identified, including the Cochrane and Campbell Handbooks. Published studies were identified through ‘pearl growing’, citation chasing, a search of PubMed using the systematic review methods filter, and the authors’ topic knowledge.
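‘Pearl growing’ and citation chasing can be pictured as a breadth-first traversal of a citation network: start from known relevant studies, look backwards at what each one cites and forwards at what cites it, and treat any relevant new study as a further starting point until nothing new turns up. The Python sketch below illustrates that idea only; it is not the authors' procedure. It assumes the citation links have already been compiled into two dictionaries, and the `is_relevant` callback stands in for human screening. All names are hypothetical.

```python
from __future__ import annotations

from collections import deque
from typing import Callable


def chase_citations(
    pearls: set[str],
    references: dict[str, set[str]],  # study -> studies it cites (backward chasing)
    cited_by: dict[str, set[str]],    # study -> studies that cite it (forward chasing)
    is_relevant: Callable[[str], bool],
) -> set[str]:
    """Snowball out from the seed 'pearls' until no new relevant studies appear."""
    found = set(pearls)     # relevant studies collected so far
    screened = set(pearls)  # every study already judged, relevant or not
    queue = deque(pearls)
    while queue:
        study = queue.popleft()
        candidates = references.get(study, set()) | cited_by.get(study, set())
        for candidate in candidates - screened:
            screened.add(candidate)
            if is_relevant(candidate):   # stands in for manual screening
                found.add(candidate)
                queue.append(candidate)  # a relevant find becomes a new pearl
    return found
```

Tracking `screened` separately from `found` means a study judged irrelevant is not screened again when it is reached from a different starting point.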

The relevant sections within each guidance document were then read and re-read, with the aim of determining key methodological stages. Methodological stages were identified and defined. This data was reviewed to identify agreements and areas of unique guidance between guidance documents. Consensus across multiple guidance documents was used to inform selection of ‘key stages’ in the process of literature searching.

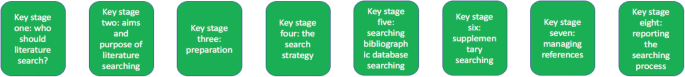

Eight key stages were determined relating specifically to literature searching in systematic reviews. They were: who should literature search, aims and purpose of literature searching, preparation, the search strategy, searching databases, supplementary searching, managing references and reporting the search process.

Conclusions

Eight key stages to the process of literature searching in systematic reviews were identified. These key stages are consistently reported in the nine guidance documents, suggesting consensus on the key stages of literature searching, and therefore the process of literature searching as a whole, in systematic reviews. Further research to determine the suitability of using the same process of literature searching for all types of systematic review is indicated.


Systematic literature searching is recognised as a critical component of the systematic review process. It involves a systematic search for studies and aims for a transparent report of study identification, leaving review stakeholders clear about what was done to identify studies, and how the findings of the review are situated in the relevant evidence.

Information specialists and review teams appear to work from a shared and tacit model of the literature search process. How this tacit model has developed and evolved is unclear, and it has not been explicitly examined before. This is in contrast to the information science literature, which has developed information processing models as an explicit basis for dialogue and empirical testing. Without an explicit model, research in the process of systematic literature searching will remain immature and potentially uneven, and the development of shared information models will be assumed but never articulated.

One way of developing such a conceptual model is by formally examining the implicit “programme theory” as embodied in key methodological texts. The aim of this review is therefore to determine if a shared model of the literature searching process in systematic reviews can be detected across guidance documents and, if so, how this process is reported and supported.

Identifying guidance

Key texts (henceforth referred to as “guidance”) were identified based upon their accessibility to, and prominence within, United Kingdom systematic reviewing practice. The United Kingdom occupies a prominent position in the science of health information retrieval. This is quantified by objective measures such as the authorship of papers; the number of Cochrane groups based in the UK; membership and leadership of groups such as the Cochrane Information Retrieval Methods Group and the HTA-I Information Specialists’ Group; and historic association with centres such as the UK Cochrane Centre, the NHS Centre for Reviews and Dissemination, the Centre for Evidence Based Medicine and the National Institute for Clinical Excellence (NICE). Together with the linguistic dominance of English within medical and health science, and within the science of systematic reviews more generally, this offers a justification for a purposive sample that favours UK, European and Australian guidance documents.

Nine guidance documents were identified. These documents provide guidance for different types of reviews, namely: reviews of interventions, reviews of health technologies, reviews of qualitative research studies, reviews of social science topics, and reviews to inform guidance.

Whilst these guidance documents occasionally offer additional guidance on other types of systematic reviews, we have focused on the core and stated aims of these documents as they relate to literature searching. Table 1 sets out, for each guidance document, the version audited, its core stated focus, and a bibliographical pointer to the main guidance relating to literature searching.

Once a list of key guidance documents was determined, it was checked by six senior information professionals based in the UK for relevance to current literature searching in systematic reviews.

Identifying supporting studies

In addition to identifying guidance, the authors sought to populate an evidence base of supporting studies (henceforth referred to as “studies”) that contribute to existing search practice. Studies were first identified by the authors from their knowledge of this topic area and, subsequently, through systematic citation chasing of key studies (‘pearls’ [1]) located within each key stage of the search process. These studies are identified in Additional file 1: Appendix Table 1. Citation chasing was conducted by analysing the bibliography of references for each study (backwards citation chasing) and through Google Scholar (forward citation chasing). A search of PubMed using the systematic review methods filter was undertaken in August 2017 (see Additional file 1). The search used was (literature search*[Title/Abstract]) AND sysrev_methods[sb], and it returned 586 results. CC sifted these results for relevance to the key stages in Fig. 1.
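The PubMed search reported above can, in principle, be rerun programmatically through NCBI's E-utilities ESearch service, which returns a hit count for a query. The Python sketch below uses only the standard library: it builds the request URL and reads the `count` field from the JSON reply. Treat it as an illustration under assumptions: today's count will differ from the 586 results reported for August 2017 because PubMed has grown, and the current PubMed may interpret the `sysrev_methods[sb]` filter and the truncated phrase differently from the version searched in 2017. Check NCBI's usage guidelines before sending many requests. The helper names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

# The search string as reported in the review (run in August 2017).
QUERY = "(literature search*[Title/Abstract]) AND sysrev_methods[sb]"


def esearch_url(term: str) -> str:
    """Build an ESearch URL asking PubMed for the hit count only, as JSON."""
    # retmax=0 asks for no record IDs, just the total count.
    params = {"db": "pubmed", "term": term, "retmode": "json", "retmax": 0}
    return f"{ESEARCH_URL}?{urlencode(params)}"


def hit_count(response: dict) -> int:
    """Read the total number of hits from a decoded ESearch JSON reply."""
    # ESearch reports the count as a string, so convert it to an int.
    return int(response["esearchresult"]["count"])


def fetch_count(term: str) -> int:
    """Send the request (needs network access) and return the hit count."""
    with urlopen(esearch_url(term), timeout=30) as reply:
        return hit_count(json.load(reply))
```

With network access, `fetch_count(QUERY)` returns the current count; logging it alongside the date gives exactly the kind of record recommended for rerunning searches.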

Fig. 1: The key stages of literature search guidance as identified from nine key texts

Extracting the data

To reveal the implicit process of literature searching within each guidance document, the relevant sections (chapters) on literature searching were read and re-read, with the aim of determining key methodological stages. We defined a key methodological stage as a distinct step in the overall process for which specific guidance is reported, and action is taken, that collectively would result in a completed literature search.

The chapter or section sub-heading for each methodological stage was extracted into a table using the exact language as reported in each guidance document. The lead author (CC) then read and re-read these data, and the paragraphs of the document to which the headings referred, summarising section details. This table was then reviewed, using comparison and contrast to identify agreements and areas of unique guidance. Consensus across multiple guidelines was used to inform selection of ‘key stages’ in the process of literature searching.
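Once each document's section headings have been mapped, by reading, onto shared stage labels, the comparison step reduces to a tally: a stage reported by several documents indicates consensus, while a stage reported by only one is unique guidance. The Python sketch below shows only that counting step, under those assumptions; the mapping of differently worded headings onto shared labels is the judgement-heavy part and is not automated here. `classify_stages` and the default threshold of two documents are illustrative choices, not the authors' stated rule.

```python
from __future__ import annotations

from collections import Counter


def classify_stages(
    stages_by_document: dict[str, set[str]], min_documents: int = 2
) -> tuple[set[str], set[str]]:
    """Split stage labels into consensus stages and unique guidance.

    consensus: labels reported in at least min_documents documents.
    unique:    labels reported in exactly one document.
    With min_documents > 2, labels reported in 2..min_documents-1
    documents fall into neither set.
    """
    if min_documents < 2:
        raise ValueError("consensus needs at least two documents")
    # Each document contributes a set, so a label counts once per document.
    counts = Counter(label for labels in stages_by_document.values() for label in labels)
    consensus = {label for label, n in counts.items() if n >= min_documents}
    unique = {label for label, n in counts.items() if n == 1}
    return consensus, unique
```

Differently worded headings for the same stage must be merged before counting; otherwise one stage is split across several labels and may wrongly appear as unique guidance.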

Having determined the key stages of literature searching, we then read and re-read the sections relating to literature searching again, extracting specific detail relating to the methodological process of literature searching within each key stage. The guidance was then read and re-read once more, first on a document-by-document basis and, secondly, across all the documents above, to identify both commonalities and areas of unique guidance.

Results and discussion

Our findings.

We were able to identify consensus across the guidance on literature searching for systematic reviews, suggesting a shared implicit model within the information retrieval community. Whilst the structure of the guidance varies between documents, the same key stages are reported, even where the core focus of each document is different. We were able to identify specific areas of unique guidance, where a document reported guidance not summarised in other documents, together with areas of consensus across guidance.

Unique guidance

Only one document provided guidance on when to stop searching [2]. This guidance from 2005 anticipates a topic of increasing importance given the current interest in time-limited (i.e. “rapid”) reviews. Quality assurance (or peer review) of literature searches was covered in only two guidance documents [3, 4]. This topic has become increasingly important, as indicated by the development of the PRESS instrument [5]. Text mining was discussed in four guidance documents [4, 6, 7, 8], where the automation of some manual review work may offer efficiencies in literature searching [8].

Agreement between guidance: Defining the key stages of literature searching

Where there was agreement on the process, we determined that this constituted a key stage in the process of literature searching to inform systematic reviews.

From the guidance, we determined eight key stages that relate specifically to literature searching in systematic reviews. These are summarised in Fig. 1. The data extraction table that informs Fig. 1 is reported in Table 2. Table 2 reports the areas of common agreement and demonstrates that the language used to describe key stages and processes varies significantly between guidance documents.

For each key stage, we set out the specific guidance, followed by discussion on how this guidance is situated within the wider literature.

Key stage one: Deciding who should undertake the literature search

The guidance.

Eight documents provided guidance on who should undertake literature searching in systematic reviews [2, 4, 6, 7, 8, 9, 10, 11]. The guidance affirms that people with relevant expertise in literature searching should ‘ideally’ be included within the review team [6]. Information specialists (or information scientists), librarians or trial search co-ordinators (TSCs) are indicated as appropriate researchers in six guidance documents [2, 7, 8, 9, 10, 11].

How the guidance corresponds to the published studies

The guidance is consistent with studies that call for the involvement of information specialists and librarians in systematic reviews [12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26] and which demonstrate how their training as ‘expert searchers’ and ‘analysers and organisers of data’ can be put to good use [13] in a variety of roles [12, 16, 20, 21, 24, 25, 26]. These arguments make sense in the context of the aims and purposes of literature searching in systematic reviews, explored below. The need for ‘thorough’ and ‘replicable’ literature searches was fundamental to the guidance and recurs in key stage two. Studies have found poor reporting, and a lack of replicable literature searches, to be a weakness in systematic reviews [17, 18, 27, 28], and they argue that involving information specialists/librarians would be associated with better reporting and better-quality literature searching. Indeed, Meert et al. [29] demonstrated that involving a librarian as a co-author of a systematic review correlated with a higher score for the literature searching component of the review. As ‘new styles’ of rapid and scoping reviews emerge, where decisions on how to search are more iterative and creative, there is a clear role for information specialists and librarians here too [30].

Knowing where to search for studies was noted as important in the guidance, although there was no agreement on the appropriate number of databases to be searched [2, 6]. Database selection (and resource selection more broadly) is acknowledged as a key skill of information specialists and librarians [12, 15, 16, 31].

Whilst arguments for including information specialists and librarians in the process of systematic review might be considered self-evident, Koffel and Rethlefsen [31] have questioned whether the necessary involvement is actually happening.