Literature Review Basics


About the Research and Synthesis Tables

Research Tables and Synthesis Tables are useful tools for organizing and analyzing your research as you assemble your literature review. They represent two different parts of the review process: assembling relevant information and synthesizing it. Use a Research table to compile the main info you need about the items you find in your research -- it's a great thing to have on hand as you take notes on what you read! Then, once you've assembled your research, use the Synthesis table to start charting the similarities/differences and major themes among your collected items.

We've included an Excel file with templates for you to use below; the examples pictured on this page are snapshots from that file.

- Research and Synthesis Table Templates: This Excel workbook includes simple templates for creating research tables and synthesis tables. Feel free to download and use!

Using the Research Table

This is an example of a research table, in which you provide a basic description of the most important features of the studies, articles, and other items you discover in your research. The table identifies each item according to its author/date of publication, its purpose or thesis, what type of work it is (systematic review, clinical trial, etc.), the level of evidence it represents (which tells you a lot about its impact on the field of study), and its major findings. Your job, when you assemble this information, is to develop a snapshot of what the research shows about the topic of your research question and assess its value (both for the purpose of your work and for general knowledge in the field).

Think of your work on the research table as the foundational step for your analysis of the literature, in which you assemble the information you'll be analyzing and lay the groundwork for thinking about what it means and how it can be used.
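If you prefer to keep your research table in a plain file rather than the Excel template, the same columns can be sketched in a few lines of Python. This is only an illustration: the `ResearchRow` class, its field names, and the entries are hypothetical placeholders that mirror the columns described above, not part of the template.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields


@dataclass
class ResearchRow:
    """One row of a research table; fields mirror the columns described above."""
    author_date: str
    purpose: str
    work_type: str
    level_of_evidence: str
    major_findings: str


def to_csv(rows):
    """Serialize research-table rows to CSV text that a spreadsheet can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ResearchRow)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()


# Placeholder entries for illustration only; these are not real studies.
rows = [
    ResearchRow("Author A (2020)", "Placeholder purpose", "Systematic review",
                "Placeholder level", "Placeholder finding"),
    ResearchRow("Author B (2018)", "Placeholder purpose", "Clinical trial",
                "Placeholder level", "Placeholder finding"),
]

print(to_csv(rows))
```

Keeping one row per item and one column per feature makes it easy to sort or filter later, for example by type of work or level of evidence.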

Using the Synthesis Table

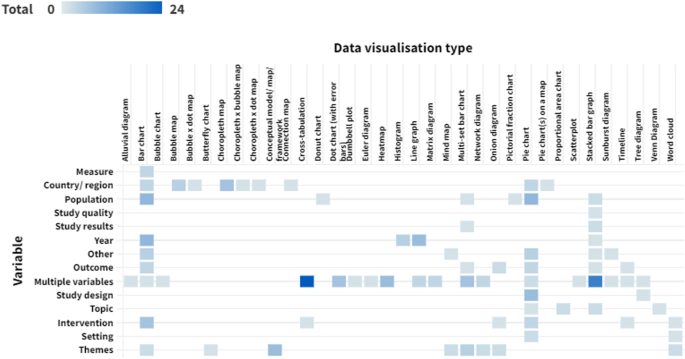

This is an example of a synthesis table or synthesis matrix, in which you organize and analyze your research by listing each source and indicating whether a given finding or result occurred in a particular study or article (each row lists an individual source, and each finding has its own column, in which X = yes, blank = no). You can also add or alter the columns to look for shared study populations, sort by level of evidence or source type, etc. The key here is to use the table to provide a simple representation of what the research has found (or not found, as the case may be). Think of a synthesis table as a tool for making comparisons, identifying trends, and locating gaps in the literature.

How do I know which findings to use, or how many to include? Your research question tells you which findings are of interest in your research, so work from your research question to decide what needs to go in each Finding header, and how many findings are necessary. The number is up to you; again, you can alter this table by adding or deleting columns to match what you're actually looking for in your analysis. You should also, of course, be guided by what's actually present in the material your research turns up!
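The X/blank layout described above can also be sketched in code, which makes it easy to count how often each finding appears and to spot findings that no source reports. This is a minimal sketch with hypothetical source and finding names; the function names `build_matrix` and `coverage` are illustrative and do not come from the template.

```python
def build_matrix(findings, sources):
    """Return one row per source, with 'X' where the source reports a finding."""
    return {
        source: ["X" if finding in reported else "" for finding in findings]
        for source, reported in sources.items()
    }


def coverage(findings, sources):
    """Count how many sources report each finding."""
    return {f: sum(f in reported for reported in sources.values()) for f in findings}


# Hypothetical findings and sources, used only to show the layout.
findings = ["Finding 1", "Finding 2", "Finding 3"]
sources = {
    "Source A": {"Finding 1", "Finding 2"},
    "Source B": {"Finding 1"},
    "Source C": {"Finding 2"},
}

matrix = build_matrix(findings, sources)
counts = coverage(findings, sources)
gaps = [f for f, n in counts.items() if n == 0]

# Print the matrix: each row is a source, each column is a finding.
print(f"{'Source':<10}" + "".join(f"{f:<11}" for f in findings))
for source, marks in matrix.items():
    print(f"{source:<10}" + "".join(f"{m:<11}" for m in marks))
print("Not reported by any source:", gaps)
```

A finding with a count of zero is a candidate gap in the literature, while a finding marked for most sources points to a trend worth discussing.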


- Last Updated: Sep 26, 2023 12:06 PM

- URL: https://usi.libguides.com/literature-review-basics

- Research Guides

- Vanderbilt University Libraries

- Peabody Library

Literature Reviews

Literature review table.


- Literature Review Table Template: Peabody librarians have created a sample literature review table to use to organize your research. Feel free to download this file and use or adapt as needed.


- Last Updated: Feb 29, 2024 4:20 PM

- URL: https://researchguides.library.vanderbilt.edu/peabody/litreviews

MSU Libraries


Nursing Literature Reviews


About Literature Tables and Writing a Synthesis

A literature table is a way to organize the articles you've selected for inclusion in your publication. There are many different types of literature tables; the main thing is to determine the important pieces that help draw out the comparisons and contrasts between the articles included in your review. The first few columns should include the basic info about the article (title, authors, journal), publication year, and the purpose of the paper.

While the table is a step to help you organize the articles you've selected for your research, the literature synthesis can take many forms and can have multiple parts. This largely depends on what type of review you've undertaken. Look back at the examples under Literature and Other Types of Reviews to see examples of different types of reviews.

- Example of Literature Table

Examples of Literature Tables

Camak, D.J. (2015), Addressing the burden of stroke caregivers: a literature review. J Clin Nurs, 24: 2376-2382. doi: 10.1111/jocn.12884

Balcombe, L., Miller, C., & McGuiness, W. (2017). Approaches to the application and removal of compression therapy: A literature review. British Journal of Community Nursing, 22, S6–S14. https://doi-org.proxy1.cl.msu.edu/10.12968/bjcn.2017.22.Sup10.S6


- Last Updated: May 10, 2024 9:36 AM

- URL: https://libguides.lib.msu.edu/nursinglitreview


What is a Literature Review? | Guide, Template, & Examples

Published on 22 February 2022 by Shona McCombes. Revised on 7 June 2022.

What is a literature review? A literature review is a survey of scholarly sources on a specific topic. It provides an overview of current knowledge, allowing you to identify relevant theories, methods, and gaps in the existing research.

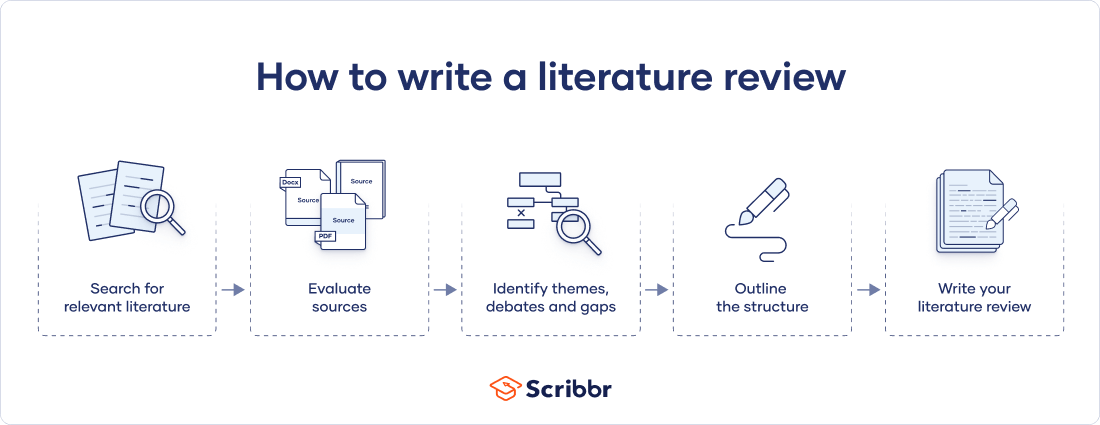

There are five key steps to writing a literature review:

- Search for relevant literature

- Evaluate sources

- Identify themes, debates and gaps

- Outline the structure

- Write your literature review

A good literature review doesn’t just summarise sources – it analyses, synthesises, and critically evaluates to give a clear picture of the state of knowledge on the subject.


Table of contents

- Why write a literature review

- Examples of literature reviews

- Step 1: Search for relevant literature

- Step 2: Evaluate and select sources

- Step 3: Identify themes, debates and gaps

- Step 4: Outline your literature review’s structure

- Step 5: Write your literature review

- Frequently asked questions about literature reviews

Why write a literature review

When you write a dissertation or thesis, you will have to conduct a literature review to situate your research within existing knowledge. The literature review gives you a chance to:

- Demonstrate your familiarity with the topic and scholarly context

- Develop a theoretical framework and methodology for your research

- Position yourself in relation to other researchers and theorists

- Show how your dissertation addresses a gap or contributes to a debate

You might also have to write a literature review as a stand-alone assignment. In this case, the purpose is to evaluate the current state of research and demonstrate your knowledge of scholarly debates around a topic.

The content will look slightly different in each case, but the process of conducting a literature review follows the same steps. We’ve written a step-by-step guide that you can follow below.


Examples of literature reviews

Writing literature reviews can be quite challenging! A good starting point could be to look at some examples, depending on what kind of literature review you’d like to write.

- Example literature review #1: “Why Do People Migrate? A Review of the Theoretical Literature” (Theoretical literature review about the development of economic migration theory from the 1950s to today.)

- Example literature review #2: “Literature review as a research methodology: An overview and guidelines” (Methodological literature review about interdisciplinary knowledge acquisition and production.)

- Example literature review #3: “The Use of Technology in English Language Learning: A Literature Review” (Thematic literature review about the effects of technology on language acquisition.)

- Example literature review #4: “Learners’ Listening Comprehension Difficulties in English Language Learning: A Literature Review” (Chronological literature review about how the concept of listening skills has changed over time.)


Step 1: Search for relevant literature

Before you begin searching for literature, you need a clearly defined topic.

If you are writing the literature review section of a dissertation or research paper, you will search for literature related to your research objectives and questions.

If you are writing a literature review as a stand-alone assignment, you will have to choose a focus and develop a central question to direct your search. Unlike a dissertation research question, this question has to be answerable without collecting original data. You should be able to answer it based only on a review of existing publications.

Make a list of keywords

Start by creating a list of keywords related to your research topic. Include each of the key concepts or variables you’re interested in, and list any synonyms and related terms. You can add to this list if you discover new keywords in the process of your literature search.

- Social media, Facebook, Instagram, Twitter, Snapchat, TikTok

- Body image, self-perception, self-esteem, mental health

- Generation Z, teenagers, adolescents, youth

Search for relevant sources

Use your keywords to begin searching for sources. Some databases to search for journals and articles include:

- Your university’s library catalogue

- Google Scholar

- Project Muse (humanities and social sciences)

- Medline (life sciences and biomedicine)

- EconLit (economics)

- Inspec (physics, engineering and computer science)

You can use Boolean operators (AND, OR, NOT) to help narrow down your search.
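As a rough illustration, the keyword groups listed earlier can be combined into a single search string: OR joins synonyms within a concept, AND joins the concepts, and NOT drops unwanted terms. The helper `build_query` and the excluded term are hypothetical, and the exact syntax (quoting, parentheses, operator spelling) varies between databases, so check the help pages of the one you are using.

```python
def build_query(concept_groups, exclude=()):
    """OR the synonyms within each concept, AND the concepts, NOT the exclusions."""
    def quote(term):
        # Wrap multi-word phrases in quotes so they are searched as a phrase.
        return f'"{term}"' if " " in term else term

    clauses = ["(" + " OR ".join(quote(t) for t in group) + ")" for group in concept_groups]
    query = " AND ".join(clauses)
    for term in exclude:
        query += f" NOT {quote(term)}"
    return query


# Keyword groups taken from the example list above; "adults" is an
# illustrative exclusion, not part of the original example.
concept_groups = [
    ["social media", "Instagram", "Snapchat"],
    ["body image", "self-esteem"],
    ["teenagers", "adolescents"],
]

query = build_query(concept_groups, exclude=["adults"])
print(query)
```

Starting broad with OR inside each group and then narrowing with AND and NOT tends to be easier to adjust than writing one long query by hand.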

Read the abstract to find out whether an article is relevant to your question. When you find a useful book or article, you can check the bibliography to find other relevant sources.

To identify the most important publications on your topic, take note of recurring citations. If the same authors, books or articles keep appearing in your reading, make sure to seek them out.
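If you record the bibliography of each source you read, spotting recurring citations can be automated with a simple count. The sketch below uses hypothetical placeholder names; `recurring_citations` is an illustrative helper, not a feature of any reference manager.

```python
from collections import Counter


def recurring_citations(bibliographies, min_sources=2):
    """Return works cited by at least `min_sources` of the sources you have read."""
    counts = Counter()
    for cited in bibliographies.values():
        counts.update(set(cited))  # count each work at most once per source
    return [(work, n) for work, n in counts.most_common() if n >= min_sources]


# Placeholder data: which works each source cites.
bibliographies = {
    "Source A": ["Work X", "Work Y"],
    "Source B": ["Work X", "Work Z"],
    "Source C": ["Work X", "Work Y", "Work Y"],
}

print(recurring_citations(bibliographies))
```

Works near the top of the list are the ones to seek out first.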

Step 2: Evaluate and select sources

You probably won’t be able to read absolutely everything that has been written on the topic, so you’ll have to evaluate which sources are most relevant to your questions.

For each publication, ask yourself:

- What question or problem is the author addressing?

- What are the key concepts and how are they defined?

- What are the key theories, models and methods? Does the research use established frameworks or take an innovative approach?

- What are the results and conclusions of the study?

- How does the publication relate to other literature in the field? Does it confirm, add to, or challenge established knowledge?

- How does the publication contribute to your understanding of the topic? What are its key insights and arguments?

- What are the strengths and weaknesses of the research?

Make sure the sources you use are credible, and make sure you read any landmark studies and major theories in your field of research.

You can find out how many times an article has been cited on Google Scholar. A high citation count usually indicates that the article has been influential in the field, so it is a strong candidate for inclusion in your literature review.

The scope of your review will depend on your topic and discipline: in the sciences you usually only review recent literature, but in the humanities you might take a long historical perspective (for example, to trace how a concept has changed in meaning over time).

Remember that you can use our template to summarise and evaluate sources you’re thinking about using!

Take notes and cite your sources

As you read, you should also begin the writing process. Take notes that you can later incorporate into the text of your literature review.

It’s important to keep track of your sources with references to avoid plagiarism. It can be helpful to make an annotated bibliography, where you compile full reference information and write a paragraph of summary and analysis for each source. This helps you remember what you read and saves time later in the process.


Step 3: Identify themes, debates and gaps

To begin organising your literature review’s argument and structure, you need to understand the connections and relationships between the sources you’ve read. Based on your reading and notes, you can look for:

- Trends and patterns (in theory, method or results): do certain approaches become more or less popular over time?

- Themes: what questions or concepts recur across the literature?

- Debates, conflicts and contradictions: where do sources disagree?

- Pivotal publications: are there any influential theories or studies that changed the direction of the field?

- Gaps: what is missing from the literature? Are there weaknesses that need to be addressed?

This step will help you work out the structure of your literature review and (if applicable) show how your own research will contribute to existing knowledge.

- Most research has focused on young women.

- There is an increasing interest in the visual aspects of social media.

- But there is still a lack of robust research on highly-visual platforms like Instagram and Snapchat – this is a gap that you could address in your own research.

Step 4: Outline your literature review’s structure

There are various approaches to organising the body of a literature review. You should have a rough idea of your strategy before you start writing.

Depending on the length of your literature review, you can combine several of these strategies (for example, your overall structure might be thematic, but each theme is discussed chronologically).

Chronological

The simplest approach is to trace the development of the topic over time. However, if you choose this strategy, be careful to avoid simply listing and summarising sources in order.

Try to analyse patterns, turning points and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred.

Thematic

If you have found some recurring central themes, you can organise your literature review into subsections that address different aspects of the topic.

For example, if you are reviewing literature about inequalities in migrant health outcomes, key themes might include healthcare policy, language barriers, cultural attitudes, legal status, and economic access.

Methodological

If you draw your sources from different disciplines or fields that use a variety of research methods, you might want to compare the results and conclusions that emerge from different approaches. For example:

- Look at what results have emerged in qualitative versus quantitative research

- Discuss how the topic has been approached by empirical versus theoretical scholarship

- Divide the literature into sociological, historical, and cultural sources

Theoretical

A literature review is often the foundation for a theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts.

You might argue for the relevance of a specific theoretical approach, or combine various theoretical concepts to create a framework for your research.

Step 5: Write your literature review

Like any other academic text, your literature review should have an introduction, a main body, and a conclusion. What you include in each depends on the objective of your literature review.

Introduction

The introduction should clearly establish the focus and purpose of the literature review.

If you are writing the literature review as part of your dissertation or thesis, reiterate your central problem or research question and give a brief summary of the scholarly context. You can emphasise the timeliness of the topic (“many recent studies have focused on the problem of x”) or highlight a gap in the literature (“while there has been much research on x, few researchers have taken y into consideration”).

Body

Depending on the length of your literature review, you might want to divide the body into subsections. You can use a subheading for each theme, time period, or methodological approach.

As you write, make sure to follow these tips:

- Summarise and synthesise: give an overview of the main points of each source and combine them into a coherent whole.

- Analyse and interpret: don’t just paraphrase other researchers – add your own interpretations, discussing the significance of findings in relation to the literature as a whole.

- Critically evaluate: mention the strengths and weaknesses of your sources.

- Write in well-structured paragraphs: use transitions and topic sentences to draw connections, comparisons and contrasts.

Conclusion

In the conclusion, you should summarise the key findings you have taken from the literature and emphasise their significance.

If the literature review is part of your dissertation or thesis, reiterate how your research addresses gaps and contributes new knowledge, or discuss how you have drawn on existing theories and methods to build a framework for your research. This can lead directly into your methodology section.

Frequently asked questions about literature reviews

A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question.

It is often written as part of a dissertation, thesis, research paper, or proposal.

There are several reasons to conduct a literature review at the beginning of a research project:

- To familiarise yourself with the current state of knowledge on your topic

- To ensure that you’re not just repeating what others have already done

- To identify gaps in knowledge and unresolved problems that your research can address

- To develop your theoretical framework and methodology

- To provide an overview of the key findings and debates on the topic

Writing the literature review shows your reader how your work relates to existing research and what new insights it will contribute.

The literature review usually comes near the beginning of your dissertation. After the introduction, it grounds your research in a scholarly field and leads directly to your theoretical framework or methodology.


McCombes, S. (2022, June 07). What is a Literature Review? | Guide, Template, & Examples. Scribbr. Retrieved 6 May 2024, from https://www.scribbr.co.uk/thesis-dissertation/literature-review/



What is a Literature Review? How to Write It (with Examples)

A literature review is a critical analysis and synthesis of existing research on a particular topic. It provides an overview of the current state of knowledge, identifies gaps, and highlights key findings in the literature. [1] The purpose of a literature review is to situate your own research within the context of existing scholarship, demonstrating your understanding of the topic and showing how your work contributes to the ongoing conversation in the field. Learning how to write a literature review is a critical skill for successful research. Your ability to summarize and synthesize prior research on a topic demonstrates your grasp of that topic and assists in the learning process.

Table of Contents

- What is the purpose of literature review?

- a. Habitat Loss and Species Extinction:

- b. Range Shifts and Phenological Changes:

- c. Ocean Acidification and Coral Reefs:

- d. Adaptive Strategies and Conservation Efforts:

- How to write a good literature review

- Choose a Topic and Define the Research Question:

- Decide on the Scope of Your Review:

- Select Databases for Searches:

- Conduct Searches and Keep Track:

- Review the Literature:

- Organize and Write Your Literature Review:

- Frequently asked questions

What is a literature review?

A well-conducted literature review demonstrates the researcher’s familiarity with the existing literature, establishes the context for their own research, and contributes to scholarly conversations on the topic. One of the purposes of a literature review is also to help researchers avoid duplicating previous work and ensure that their research is informed by and builds upon the existing body of knowledge.

What is the purpose of literature review?

A literature review serves several important purposes within academic and research contexts. Here are some key objectives and functions of a literature review [2]:

- Contextualizing the Research Problem: The literature review provides a background and context for the research problem under investigation. It helps to situate the study within the existing body of knowledge.

- Identifying Gaps in Knowledge: By identifying gaps, contradictions, or areas requiring further research, the researcher can shape the research question and justify the significance of the study. This is crucial for ensuring that the new research contributes something novel to the field.

- Understanding Theoretical and Conceptual Frameworks: Literature reviews help researchers gain an understanding of the theoretical and conceptual frameworks used in previous studies. This aids in the development of a theoretical framework for the current research.

- Providing Methodological Insights: Literature reviews also allow researchers to learn about the methodologies employed in previous studies. This can help in choosing appropriate research methods for the current study and avoiding pitfalls that others may have encountered.

- Establishing Credibility: A well-conducted literature review demonstrates the researcher’s familiarity with existing scholarship, establishing their credibility and expertise in the field. It also helps in building a solid foundation for the new research.

- Informing Hypotheses or Research Questions: The literature review guides the formulation of hypotheses or research questions by highlighting relevant findings and areas of uncertainty in existing literature.

Literature review example

Consider a literature review example. Suppose your literature review is about the impact of climate change on biodiversity. You might organize it into sections such as the effects of climate change on habitat loss and species extinction, phenological changes, and marine biodiversity. Each section would then summarize and analyze relevant studies in those areas, highlighting key findings and identifying gaps in the research. The review would conclude by emphasizing the need for further research on specific aspects of the relationship between climate change and biodiversity. The following literature review template provides a glimpse into the recommended literature review structure and content, demonstrating how research findings are organized around specific themes within a broader topic.

Literature Review on Climate Change Impacts on Biodiversity:

Climate change is a global phenomenon with far-reaching consequences, including significant impacts on biodiversity. This literature review synthesizes key findings from various studies:

a. Habitat Loss and Species Extinction:

Climate change-induced alterations in temperature and precipitation patterns contribute to habitat loss, affecting numerous species (Thomas et al., 2004). The review discusses how these changes increase the risk of extinction, particularly for species with specific habitat requirements.

b. Range Shifts and Phenological Changes:

Observations of range shifts and changes in the timing of biological events (phenology) are documented in response to changing climatic conditions (Parmesan & Yohe, 2003). These shifts affect ecosystems and may lead to mismatches between species and their resources.

c. Ocean Acidification and Coral Reefs:

The review explores the impact of climate change on marine biodiversity, emphasizing ocean acidification’s threat to coral reefs (Hoegh-Guldberg et al., 2007). Changes in pH levels negatively affect coral calcification, disrupting the delicate balance of marine ecosystems.

d. Adaptive Strategies and Conservation Efforts:

Recognizing the urgency of the situation, the literature review discusses various adaptive strategies adopted by species and conservation efforts aimed at mitigating the impacts of climate change on biodiversity (Hannah et al., 2007). It emphasizes the importance of interdisciplinary approaches for effective conservation planning.

How to write a good literature review

Writing a literature review involves summarizing and synthesizing existing research on a particular topic. A good literature review format should include the following elements.

Introduction: The introduction sets the stage for your literature review, providing context and introducing the main focus of your review.

- Opening Statement: Begin with a general statement about the broader topic and its significance in the field.

- Scope and Purpose: Clearly define the scope of your literature review. Explain the specific research question or objective you aim to address.

- Organizational Framework: Briefly outline the structure of your literature review, indicating how you will categorize and discuss the existing research.

- Significance of the Study: Highlight why your literature review is important and how it contributes to the understanding of the chosen topic.

- Thesis Statement: Conclude the introduction with a concise thesis statement that outlines the main argument or perspective you will develop in the body of the literature review.

Body: The body of the literature review is where you provide a comprehensive analysis of existing literature, grouping studies based on themes, methodologies, or other relevant criteria.

- Organize by Theme or Concept: Group studies that share common themes, concepts, or methodologies. Discuss each theme or concept in detail, summarizing key findings and identifying gaps or areas of disagreement.

- Critical Analysis: Evaluate the strengths and weaknesses of each study. Discuss the methodologies used, the quality of evidence, and the overall contribution of each work to the understanding of the topic.

- Synthesis of Findings: Synthesize the information from different studies to highlight trends, patterns, or areas of consensus in the literature.

- Identification of Gaps: Discuss any gaps or limitations in the existing research and explain how your review contributes to filling these gaps.

- Transition between Sections: Provide smooth transitions between different themes or concepts to maintain the flow of your literature review.

Conclusion: The conclusion of your literature review should summarize the main findings, highlight the contributions of the review, and suggest avenues for future research.

- Summary of Key Findings: Recap the main findings from the literature and restate how they contribute to your research question or objective.

- Contributions to the Field: Discuss the overall contribution of your literature review to the existing knowledge in the field.

- Implications and Applications: Explore the practical implications of the findings and suggest how they might impact future research or practice.

- Recommendations for Future Research: Identify areas that require further investigation and propose potential directions for future research in the field.

- Final Thoughts: Conclude with a final reflection on the importance of your literature review and its relevance to the broader academic community.

Conducting a literature review

Conducting a literature review is an essential step in research that involves reviewing and analyzing existing literature on a specific topic. It’s important to know how to do a literature review effectively, so here are the steps to follow [1]:

Choose a Topic and Define the Research Question:

- Select a topic that is relevant to your field of study.

- Clearly define your research question or objective. Determine which specific aspect of the topic you want to explore.

Decide on the Scope of Your Review:

- Determine the timeframe for your literature review. Are you focusing on recent developments, or do you want a historical overview?

- Consider the geographical scope. Is your review global, or are you focusing on a specific region?

- Define the inclusion and exclusion criteria. What types of sources will you include? Are there specific types of studies or publications you will exclude?
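Once the scope is decided, the inclusion and exclusion criteria can be applied consistently by writing them down as explicit rules. The following is a minimal sketch under assumed criteria (a minimum publication year, a set of regions, and excluded source types); the `Record` fields, the `screen` function, and the sample entries are hypothetical and should be replaced by whatever your own review protocol specifies.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """A candidate source with the attributes the criteria below depend on."""
    title: str
    year: int
    region: str
    source_type: str


def screen(records, min_year, regions, excluded_types):
    """Split records into included and excluded, noting why each was excluded."""
    included, excluded = [], []
    for record in records:
        reasons = []
        if record.year < min_year:
            reasons.append("outside timeframe")
        if regions and record.region not in regions:
            reasons.append("outside geographical scope")
        if record.source_type in excluded_types:
            reasons.append("excluded source type")
        if reasons:
            excluded.append((record, reasons))
        else:
            included.append(record)
    return included, excluded


# Placeholder records for illustration only.
records = [
    Record("Placeholder study 1", 2021, "Europe", "journal article"),
    Record("Placeholder study 2", 2009, "Europe", "journal article"),
    Record("Placeholder study 3", 2022, "Asia", "blog post"),
]

included, excluded = screen(
    records, min_year=2015, regions={"Europe"}, excluded_types={"blog post"}
)
for record, reasons in excluded:
    print(f"Excluded {record.title}: {', '.join(reasons)}")
```

Recording the reason for each exclusion keeps the screening transparent and makes it easier to report how many sources were dropped at each stage.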

Select Databases for Searches:

- Identify relevant databases for your field. Examples include PubMed, IEEE Xplore, Scopus, Web of Science, and Google Scholar.

- Consider searching in library catalogs, institutional repositories, and specialized databases related to your topic.

Conduct Searches and Keep Track:

- Develop a systematic search strategy using keywords, Boolean operators (AND, OR, NOT), and other search techniques.

- Record and document your search strategy for transparency and replicability.

- Keep track of the articles, including publication details, abstracts, and links. Use citation management tools like EndNote, Zotero, or Mendeley to organize your references.

Review the Literature:

- Evaluate the relevance and quality of each source. Consider the methodology, sample size, and results of studies.

- Organize the literature by themes or key concepts. Identify patterns, trends, and gaps in the existing research.

- Summarize key findings and arguments from each source. Compare and contrast different perspectives.

- Identify areas where there is a consensus in the literature and where there are conflicting opinions.

- Provide critical analysis and synthesis of the literature. What are the strengths and weaknesses of existing research?

Organize and Write Your Literature Review:

- Base your literature review outline on themes, chronological order, or methodological approaches.

- Write a clear and coherent narrative that synthesizes the information gathered.

- Use proper citations for each source and ensure consistency in your citation style (APA, MLA, Chicago, etc.).

- Conclude your literature review by summarizing key findings, identifying gaps, and suggesting areas for future research.

The literature review sample and detailed advice on writing and conducting a review will help you produce a well-structured report. But remember that a literature review is an ongoing process, and it may be necessary to revisit and update it as your research progresses.

Frequently asked questions

A literature review is a critical and comprehensive analysis of existing literature (published and unpublished works) on a specific topic or research question and provides a synthesis of the current state of knowledge in a particular field. A well-conducted literature review is crucial for researchers to build upon existing knowledge, avoid duplication of efforts, and contribute to the advancement of their field. It also helps researchers situate their work within a broader context and facilitates the development of a sound theoretical and conceptual framework for their studies.

A literature review is a crucial component of research writing, providing a solid background for a research paper’s investigation. The aim is to keep professionals up to date by providing an understanding of ongoing developments within a specific field, including the research methods and experimental techniques used in that field, and to present that knowledge in the form of a written report. The depth and breadth of the literature review also underscore the scholar’s credibility in their field.

Before writing a literature review, it’s essential to undertake several preparatory steps to ensure that your review is well-researched, organized, and focused. This includes choosing a topic of general interest to you and doing exploratory research on that topic, writing an annotated bibliography, and noting major points, especially those that relate to the position you have taken on the topic.

Literature reviews and academic research papers are essential components of scholarly work but serve different purposes within the academic realm [3]. A literature review aims to provide a foundation for understanding the current state of research on a particular topic, identify gaps or controversies, and lay the groundwork for future research. Therefore, it draws heavily from existing academic sources, including books, journal articles, and other scholarly publications. In contrast, an academic research paper aims to present new knowledge, contribute to the academic discourse, and advance the understanding of a specific research question. Therefore, it involves a mix of existing literature (in the introduction and literature review sections) and original data or findings obtained through research methods.

Literature reviews are essential components of academic and research papers, and various strategies can be employed to conduct them effectively. If you want to know how to write a literature review for a research paper, here are four common approaches that are often used by researchers.

- Chronological Review: This strategy involves organizing the literature based on the chronological order of publication. It helps to trace the development of a topic over time, showing how ideas, theories, and research have evolved.

- Thematic Review: Thematic reviews focus on identifying and analyzing themes or topics that cut across different studies. Instead of organizing the literature chronologically, it is grouped by key themes or concepts, allowing for a comprehensive exploration of various aspects of the topic.

- Methodological Review: This strategy involves organizing the literature based on the research methods employed in different studies. It helps to highlight the strengths and weaknesses of various methodologies and allows the reader to evaluate the reliability and validity of the research findings.

- Theoretical Review: A theoretical review examines the literature based on the theoretical frameworks used in different studies. This approach helps to identify the key theories that have been applied to the topic and assess their contributions to the understanding of the subject.

It’s important to note that these strategies are not mutually exclusive, and a literature review may combine elements of more than one approach. The choice of strategy depends on the research question, the nature of the literature available, and the goals of the review. Additionally, other strategies, such as integrative reviews or systematic reviews, may be employed depending on the specific requirements of the research.

The literature review format can vary depending on the specific publication guidelines. However, there are some common elements and structures that are often followed. Here is a general guideline for the format of a literature review:

- Introduction: Provide an overview of the topic. Define the scope and purpose of the literature review. State the research question or objective.

- Body: Organize the literature by themes, concepts, or chronology. Critically analyze and evaluate each source. Discuss the strengths and weaknesses of the studies. Highlight any methodological limitations or biases. Identify patterns, connections, or contradictions in the existing research.

- Conclusion: Summarize the key points discussed in the literature review. Highlight the research gap. Address the research question or objective stated in the introduction. Highlight the contributions of the review and suggest directions for future research.

Both annotated bibliographies and literature reviews involve the examination of scholarly sources. While annotated bibliographies focus on individual sources with brief annotations, literature reviews provide a more in-depth, integrated, and comprehensive analysis of existing literature on a specific topic. The key differences are as follows:

References

- Denney, A. S., & Tewksbury, R. (2013). How to write a literature review. Journal of Criminal Justice Education, 24(2), 218-234.

- Pan, M. L. (2016). Preparing literature reviews: Qualitative and quantitative approaches. Taylor & Francis.

- Cantero, C. (2019). How to write a literature review. San José State University Writing Center.

Common Assignments: Literature Review Matrix

As you read and evaluate your literature there are several different ways to organize your research. Courtesy of Dr. Gary Burkholder in the School of Psychology, these sample matrices are one option to help organize your articles. These documents allow you to compile details about your sources, such as the foundational theories, methodologies, and conclusions; begin to note similarities among the authors; and retrieve citation information for easy insertion within a document.

You can review the sample matrixes to see a completed form or download the blank matrix for your own use.

- Literature Review Matrix 1 This PDF file provides a sample literature review matrix.

- Literature Review Matrix 2 This PDF file provides a sample literature review matrix.

- Literature Review Matrix Template (Word)

- Literature Review Matrix Template (Excel)

Walden University is a member of Adtalem Global Education, Inc. www.adtalem.com Walden University is certified to operate by SCHEV © 2024 Walden University LLC. All rights reserved.

The Sheridan Libraries

- Write a Literature Review

Get Organized

- Lit Review Prep Use this template to help you evaluate your sources, create article summaries for an annotated bibliography, and a synthesis matrix for your lit review outline.

Synthesize your Information

Synthesize: combine separate elements to form a whole.

Synthesis Matrix

A synthesis matrix helps you record the main points of each source and document how sources relate to each other.

After summarizing and evaluating your sources, arrange them in a matrix or use a citation manager to help you see how they relate to each other and apply to each of your themes or variables.

By arranging your sources by theme or variable, you can see how your sources relate to each other, and can start thinking about how you weave them together to create a narrative.
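A synthesis matrix can also be kept as a simple data structure while you read. The following is a minimal sketch, assuming your notes are recorded as (source, theme, note) entries; the function names and the sample notes are hypothetical placeholders, not part of any template linked from this guide.

```python
from collections import defaultdict


def build_synthesis_matrix(notes):
    """Group (source, theme, note) records into {theme: {source: [notes]}}."""
    matrix = defaultdict(lambda: defaultdict(list))
    for source, theme, note in notes:
        matrix[theme][source].append(note)
    # Convert to plain dicts so a missing theme raises instead of being created.
    return {theme: dict(by_source) for theme, by_source in matrix.items()}


def thinly_covered_themes(matrix, minimum_sources=2):
    """Return themes discussed by fewer than `minimum_sources` sources."""
    return sorted(
        theme for theme, by_source in matrix.items()
        if len(by_source) < minimum_sources
    )


# Hypothetical reading notes; sources and themes are placeholders.
notes = [
    ("Source A", "methodology", "survey design"),
    ("Source B", "methodology", "interviews"),
    ("Source A", "key findings", "positive effect"),
]
matrix = build_synthesis_matrix(notes)
for theme, by_source in matrix.items():
    print(theme, "->", by_source)
print("Needs more sources:", thinly_covered_themes(matrix))
```

Grouping by theme first, then by source, mirrors the way the matrix is read when drafting: each theme becomes a candidate paragraph, and themes with only one source stand out as possible gaps.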

- Step-by-Step Approach

- Example Matrix from NSCU

- Matrix Template

- Last Updated: Sep 26, 2023 10:25 AM

- URL: https://guides.library.jhu.edu/lit-review

- UWF Libraries

Literature Review: Conducting & Writing

Sample Lit Reviews from Communication Arts

Have an exemplary literature review?

- Literature Review Sample 1

- Literature Review Sample 2

- Literature Review Sample 3

Have you written a stellar literature review you care to share for teaching purposes?

Are you an instructor who has received an exemplary literature review and have permission from the student to post?

Please contact Britt McGowan at [email protected] for inclusion in this guide. All disciplines welcome and encouraged.

- Last Updated: Mar 22, 2024 9:37 AM

- URL: https://libguides.uwf.edu/litreview

Cochrane Training

Chapter 14: Completing ‘Summary of findings’ tables and grading the certainty of the evidence

Holger J Schünemann, Julian PT Higgins, Gunn E Vist, Paul Glasziou, Elie A Akl, Nicole Skoetz, Gordon H Guyatt; on behalf of the Cochrane GRADEing Methods Group (formerly Applicability and Recommendations Methods Group) and the Cochrane Statistical Methods Group

Key Points:

- A ‘Summary of findings’ table for a given comparison of interventions provides key information concerning the magnitudes of relative and absolute effects of the interventions examined, the amount of available evidence and the certainty (or quality) of available evidence.

- ‘Summary of findings’ tables include a row for each important outcome (up to a maximum of seven). Accepted formats of ‘Summary of findings’ tables and interactive ‘Summary of findings’ tables can be produced using GRADE’s software GRADEpro GDT.

- Cochrane has adopted the GRADE approach (Grading of Recommendations Assessment, Development and Evaluation) for assessing certainty (or quality) of a body of evidence.

- The GRADE approach specifies four levels of the certainty for a body of evidence for a given outcome: high, moderate, low and very low.

- GRADE assessments of certainty are determined through consideration of five domains: risk of bias, inconsistency, indirectness, imprecision and publication bias. For evidence from non-randomized studies and rarely randomized studies, assessments can then be upgraded through consideration of three further domains.

Cite this chapter as: Schünemann HJ, Higgins JPT, Vist GE, Glasziou P, Akl EA, Skoetz N, Guyatt GH. Chapter 14: Completing ‘Summary of findings’ tables and grading the certainty of the evidence. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.4 (updated August 2023). Cochrane, 2023. Available from www.training.cochrane.org/handbook .

14.1 ‘Summary of findings’ tables

14.1.1 Introduction to ‘Summary of findings’ tables

‘Summary of findings’ tables present the main findings of a review in a transparent, structured and simple tabular format. In particular, they provide key information concerning the certainty or quality of evidence (i.e. the confidence or certainty in the range of an effect estimate or an association), the magnitude of effect of the interventions examined, and the sum of available data on the main outcomes. Cochrane Reviews should incorporate ‘Summary of findings’ tables during planning and publication, and should have at least one key ‘Summary of findings’ table representing the most important comparisons. Some reviews may include more than one ‘Summary of findings’ table, for example if the review addresses more than one major comparison, or includes substantially different populations that require separate tables (e.g. because the effects differ or it is important to show results separately). In the Cochrane Database of Systematic Reviews (CDSR), all ‘Summary of findings’ tables for a review appear at the beginning, before the Background section.

14.1.2 Selecting outcomes for ‘Summary of findings’ tables

Planning for the ‘Summary of findings’ table starts early in the systematic review, with the selection of the outcomes to be included in: (i) the review; and (ii) the ‘Summary of findings’ table. This is a crucial step, and one that review authors need to address carefully.

To ensure production of optimally useful information, Cochrane Reviews begin by developing a review question and by listing all main outcomes that are important to patients and other decision makers (see Chapter 2 and Chapter 3 ). The GRADE approach to assessing the certainty of the evidence (see Section 14.2 ) defines and operationalizes a rating process that helps separate outcomes into those that are critical, important or not important for decision making. Consultation and feedback on the review protocol, including from consumers and other decision makers, can enhance this process.

Critical outcomes are likely to include clearly important endpoints; typical examples include mortality and major morbidity (such as strokes and myocardial infarction). However, they may also represent frequent minor and rare major side effects, symptoms, quality of life, burdens associated with treatment, and resource issues (costs). Burdens represent the impact of healthcare workload on patient function and well-being, and include the demands of adhering to an intervention that patients or caregivers (e.g. family) may dislike, such as having to undergo more frequent tests, or the restrictions on lifestyle that certain interventions require (Spencer-Bonilla et al 2017).

Frequently, when formulating questions that include all patient-important outcomes for decision making, review authors will confront reports of studies that have not included all these outcomes. This is particularly true for adverse outcomes. For instance, randomized trials might contribute evidence on intended effects, and on frequent, relatively minor side effects, but not report on rare adverse outcomes such as suicide attempts. Chapter 19 discusses strategies for addressing adverse effects. To obtain data for all important outcomes it may be necessary to examine the results of non-randomized studies (see Chapter 24 ). Cochrane, in collaboration with others, has developed guidance for review authors to support their decision about when to look for and include non-randomized studies (Schünemann et al 2013).

If a review includes only randomized trials, these trials may not address all important outcomes and it may therefore not be possible to address these outcomes within the constraints of the review. Review authors should acknowledge these limitations and make them transparent to readers. Review authors are encouraged to include non-randomized studies to examine rare or long-term adverse effects that may not adequately be studied in randomized trials. This raises the possibility that harm outcomes may come from studies in which participants differ from those in studies used in the analysis of benefit. Review authors will then need to consider how much such differences are likely to impact on the findings, and this will influence the certainty of evidence because of concerns about indirectness related to the population (see Section 14.2.2 ).

Non-randomized studies can provide important information not only when randomized trials do not report on an outcome or randomized trials suffer from indirectness, but also when the evidence from randomized trials is rated as very low and non-randomized studies provide evidence of higher certainty. Further discussion of these issues appears also in Chapter 24 .

14.1.3 General template for ‘Summary of findings’ tables

Several alternative standard versions of ‘Summary of findings’ tables have been developed to ensure consistency and ease of use across reviews, inclusion of the most important information needed by decision makers, and optimal presentation (see examples at Figures 14.1.a and 14.1.b ). These formats are supported by research that focused on improved understanding of the information they intend to convey (Carrasco-Labra et al 2016, Langendam et al 2016, Santesso et al 2016). They are available through GRADE’s official software package developed to support the GRADE approach: GRADEpro GDT (www.gradepro.org).

Standard Cochrane ‘Summary of findings’ tables include the following elements using one of the accepted formats. Further guidance on each of these is provided in Section 14.1.6 .

- A brief description of the population and setting addressed by the available evidence (which may be slightly different to or narrower than those defined by the review question).

- A brief description of the comparison addressed in the ‘Summary of findings’ table, including both the experimental and comparison interventions.

- A list of the most critical and/or important health outcomes, both desirable and undesirable, limited to seven or fewer outcomes.

- A measure of the typical burden of each outcome (e.g. illustrative risk, or illustrative mean, on comparator intervention).

- The absolute and relative magnitude of effect measured for each (if both are appropriate).

- The numbers of participants and studies contributing to the analysis of each outcome.

- A GRADE assessment of the overall certainty of the body of evidence for each outcome (which may vary by outcome).

- Space for comments.

- Explanations (formerly known as footnotes).

Ideally, ‘Summary of findings’ tables are supported by more detailed tables (known as ‘evidence profiles’) to which the review may be linked, which provide more detailed explanations. Evidence profiles include the same important health outcomes, and provide greater detail than ‘Summary of findings’ tables on both the individual considerations feeding into the grading of certainty and the results of the studies (Guyatt et al 2011a). They ensure that a structured approach is used to rating the certainty of evidence. Although they are rarely published in Cochrane Reviews, evidence profiles are often used, for example, by guideline developers in considering the certainty of the evidence to support guideline recommendations. Review authors will find it easier to develop the ‘Summary of findings’ table by completing the rating of the certainty of evidence in the evidence profile first in GRADEpro GDT. They can then automatically convert this to one of the ‘Summary of findings’ formats in GRADEpro GDT, including an interactive ‘Summary of findings’ for publication.

As a measure of the magnitude of effect for dichotomous outcomes, the ‘Summary of findings’ table should provide a relative measure of effect (e.g. risk ratio, odds ratio, hazard ratio) and measures of absolute risk. For other types of data, an absolute measure alone (such as a difference in means for continuous data) might be sufficient. It is important that the magnitude of effect is presented in a meaningful way, which may require some transformation of the result of a meta-analysis (see also Chapter 15, Section 15.4 and Section 15.5). Reviews with more than one main comparison should include a separate ‘Summary of findings’ table for each comparison.

Figure 14.1.a provides an example of a ‘Summary of findings’ table. Figure 14.1.b provides an alternative format that may further facilitate users’ understanding and interpretation of the review’s findings. Evidence evaluating different formats suggests that the ‘Summary of findings’ table should include a risk difference as a measure of the absolute effect and authors should preferably use a format that includes a risk difference.

A detailed description of the contents of a ‘Summary of findings’ table appears in Section 14.1.6 .

Figure 14.1.a Example of a ‘Summary of findings’ table

Summary of findings (for interactive version click here )

a All the stockings in the nine studies included in this review were below-knee compression stockings. In four studies the compression strength was 20 mmHg to 30 mmHg at the ankle. It was 10 mmHg to 20 mmHg in the other four studies. Stockings come in different sizes. If a stocking is too tight around the knee it can prevent essential venous return causing the blood to pool around the knee. Compression stockings should be fitted properly. A stocking that is too tight could cut into the skin on a long flight and potentially cause ulceration and increased risk of DVT. Some stockings can be slightly thicker than normal leg covering and can be potentially restrictive with tight foot wear. It is a good idea to wear stockings around the house prior to travel to ensure a good, comfortable fit. Participants put their stockings on two to three hours before the flight in most of the studies. The availability and cost of stockings can vary.

b Two studies recruited high risk participants defined as those with previous episodes of DVT, coagulation disorders, severe obesity, limited mobility due to bone or joint problems, neoplastic disease within the previous two years, large varicose veins or, in one of the studies, participants taller than 190 cm and heavier than 90 kg. The incidence for the seven studies that excluded high risk participants was 1.45% and the incidence for the two studies that recruited high-risk participants (with at least one risk factor) was 2.43%. We have used 10 and 30 per 1000 to express different risk strata, respectively.

c The confidence interval crosses no difference and does not rule out a small increase.

d The measurement of oedema was not validated (indirectness of the outcome) or blinded to the intervention (risk of bias).

e If there are very few or no events and the number of participants is large, judgement about the certainty of evidence (particularly judgements about imprecision) may be based on the absolute effect. Here the certainty rating may be considered ‘high’ if the outcome was appropriately assessed and the event, in fact, did not occur in 2821 studied participants.

f None of the other studies reported adverse effects, apart from four cases of superficial vein thrombosis in varicose veins in the knee region that were compressed by the upper edge of the stocking in one study.

Figure 14.1.b Example of alternative ‘Summary of findings’ table

14.1.4 Producing ‘Summary of findings’ tables

The GRADE Working Group’s software, GRADEpro GDT ( www.gradepro.org ), including GRADE’s interactive handbook, is available to assist review authors in the preparation of ‘Summary of findings’ tables. GRADEpro can use data on the comparator group risk and the effect estimate (entered by the review authors or imported from files generated in RevMan) to produce the relative effects and absolute risks associated with experimental interventions. In addition, it leads the user through the process of a GRADE assessment, and produces a table that can be used as a standalone table in a review (including by direct import into software such as RevMan or integration with RevMan Web), or an interactive ‘Summary of findings’ table (see help resources in GRADEpro).

14.1.5 Statistical considerations in ‘Summary of findings’ tables

14.1.5.1 Dichotomous outcomes

‘Summary of findings’ tables should include both absolute and relative measures of effect for dichotomous outcomes. Risk ratios, odds ratios and risk differences are different ways of comparing two groups with dichotomous outcome data (see Chapter 6, Section 6.4.1 ). Furthermore, there are two distinct risk ratios, depending on which event (e.g. ‘yes’ or ‘no’) is the focus of the analysis (see Chapter 6, Section 6.4.1.5 ). In the presence of a non-zero intervention effect, any variation across studies in the comparator group risks (i.e. variation in the risk of the event occurring without the intervention of interest, for example in different populations) makes it impossible for more than one of these measures to be truly the same in every study.

It has long been assumed in epidemiology that relative measures of effect are more consistent than absolute measures of effect from one scenario to another. There is empirical evidence to support this assumption (Engels et al 2000, Deeks and Altman 2001, Furukawa et al 2002). For this reason, meta-analyses should generally use either a risk ratio or an odds ratio as a measure of effect (see Chapter 10, Section 10.4.3 ). Correspondingly, a single estimate of relative effect is likely to be a more appropriate summary than a single estimate of absolute effect. If a relative effect is indeed consistent across studies, then different comparator group risks will have different implications for absolute benefit. For instance, if the risk ratio is consistently 0.75, then the experimental intervention would reduce a comparator group risk of 80% to 60% in the intervention group (an absolute risk reduction of 20 percentage points), but would also reduce a comparator group risk of 20% to 15% in the intervention group (an absolute risk reduction of 5 percentage points).
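The arithmetic in the example above can be checked with a short calculation. This is a minimal sketch; the function name `apply_risk_ratio` is hypothetical, and risks are handled as proportions between 0 and 1.

```python
def apply_risk_ratio(comparator_risk, risk_ratio):
    """Return (intervention risk, absolute risk reduction) as proportions."""
    intervention_risk = comparator_risk * risk_ratio
    return intervention_risk, comparator_risk - intervention_risk


# Same risk ratio, two different comparator group risks.
for comparator_risk in (0.80, 0.20):
    intervention_risk, reduction = apply_risk_ratio(comparator_risk, 0.75)
    print(
        f"comparator {comparator_risk:.0%} -> intervention {intervention_risk:.0%}, "
        f"reduction {reduction * 100:.0f} percentage points"
    )
```

The same relative effect yields a fourfold difference in absolute benefit, which is why the assumed comparator risk matters when presenting absolute effects.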

‘Summary of findings’ tables are built around the assumption of a consistent relative effect. It is therefore important to consider the implications of this effect for different comparator group risks (these can be derived or estimated from a number of sources, see Section 14.1.6.3 ), which may require an assessment of the certainty of evidence for prognostic evidence (Spencer et al 2012, Iorio et al 2015). For any comparator group risk, it is possible to estimate a corresponding intervention group risk (i.e. the absolute risk with the intervention) from the meta-analytic risk ratio or odds ratio. Note that the numbers provided in the ‘Corresponding risk’ column are specific to the ‘risks’ in the adjacent column.

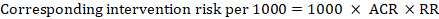

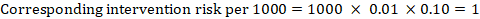

For the meta-analytic risk ratio (RR) and assumed comparator risk (ACR) the corresponding intervention risk is obtained as:

As an example, in Figure 14.1.a , the meta-analytic risk ratio for symptomless deep vein thrombosis (DVT) is RR = 0.10 (95% CI 0.04 to 0.26). Assuming a comparator risk of ACR = 10 per 1000 = 0.01, we obtain:

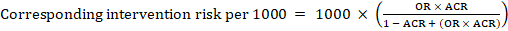

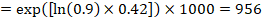

For the meta-analytic odds ratio (OR) and assumed comparator risk, ACR, the corresponding intervention risk is obtained as:

Upper and lower confidence limits for the corresponding intervention risk are obtained by replacing RR or OR by their upper and lower confidence limits, respectively (e.g. replacing 0.10 with 0.04, then with 0.26, in the example). Such confidence intervals do not incorporate uncertainty in the assumed comparator risks.
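The conversion from a relative effect to a corresponding intervention risk, including its confidence limits, can be sketched as follows. The function names are hypothetical; the risk ratio version multiplies the assumed comparator risk by RR, and the odds ratio version converts the comparator risk to odds, applies OR, and converts back to a risk.

```python
def risk_per_1000_from_rr(acr, risk_ratio):
    """Corresponding intervention risk per 1000 from a risk ratio."""
    return 1000 * acr * risk_ratio


def risk_per_1000_from_or(acr, odds_ratio):
    """Corresponding intervention risk per 1000 from an odds ratio."""
    return 1000 * (odds_ratio * acr) / (1 - acr + odds_ratio * acr)


# DVT example from the text: ACR = 10 per 1000, RR = 0.10 (95% CI 0.04 to 0.26).
acr = 0.01
for label, rr in (("estimate", 0.10), ("lower limit", 0.04), ("upper limit", 0.26)):
    print(f"{label}: {risk_per_1000_from_rr(acr, rr):.1f} per 1000")
```

Because the assumed comparator risk is held fixed, the resulting interval reflects only the uncertainty in the relative effect.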

When dealing with risk ratios, it is critical that the same definition of ‘event’ is used as was used for the meta-analysis. For example, if the meta-analysis focused on ‘death’ (as opposed to survival) as the event, then corresponding risks in the ‘Summary of findings’ table must also refer to ‘death’.

In (rare) circumstances in which there is clear rationale to assume a consistent risk difference in the meta-analysis, in principle it is possible to present this for relevant ‘assumed risks’ and their corresponding risks, and to present the corresponding (different) relative effects for each assumed risk.

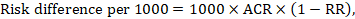

The risk difference expresses the difference between the ACR and the corresponding intervention risk (or the difference between the experimental and the comparator intervention).

For the meta-analytic risk ratio (RR) and assumed comparator risk (ACR) the corresponding risk difference is obtained as (note that risks can also be expressed using percentage or percentage points):

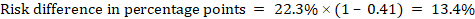

As an example, in Figure 14.1.b the meta-analytic risk ratio is 0.41 (95% CI 0.29 to 0.55) for diarrhoea in children less than 5 years of age. Assuming a comparator group risk of 22.3% we obtain:

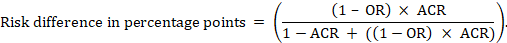

For the meta-analytic odds ratio (OR) and assumed comparator risk (ACR) the absolute risk difference is obtained as (percentage points):

Upper and lower confidence limits for the absolute risk difference are obtained by re-running the calculation above while replacing RR or OR by their upper and lower confidence limits, respectively (e.g. replacing 0.41 with 0.29, then with 0.55, in the example). Such confidence intervals do not incorporate uncertainty in the assumed comparator risks.
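The risk difference calculation can be sketched in the same way. The function names are hypothetical, and the sign convention (comparator risk minus intervention risk, so that a positive value is a reduction) is an assumption made for this illustration. The example uses the interval stated with the diarrhoea example (RR 0.41, 95% CI 0.29 to 0.55).

```python
def risk_difference_from_rr(acr, risk_ratio):
    """Comparator risk minus intervention risk, as a proportion."""
    return acr - acr * risk_ratio


def risk_difference_from_or(acr, odds_ratio):
    """Same difference when the relative effect is an odds ratio."""
    intervention_risk = (odds_ratio * acr) / (1 - acr + odds_ratio * acr)
    return acr - intervention_risk


acr = 0.223  # assumed comparator group risk of 22.3%
for label, rr in (("estimate", 0.41), ("lower limit", 0.29), ("upper limit", 0.55)):
    difference = risk_difference_from_rr(acr, rr)
    print(f"RR {label} {rr}: {difference * 100:.1f} percentage points fewer")
```

Note that the lower confidence limit of the risk ratio gives the larger risk difference, so the limits swap ends when moving from the relative to the absolute scale.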

14.1.5.2 Time-to-event outcomes

Time-to-event outcomes measure whether and when a particular event (e.g. death) occurs (van Dalen et al 2007). The impact of the experimental intervention relative to the comparison group on time-to-event outcomes is usually measured using a hazard ratio (HR) (see Chapter 6, Section 6.8.1 ).

A hazard ratio expresses a relative effect estimate. It may be used in various ways to obtain absolute risks and other interpretable quantities for a specific population. Here we describe how to re-express hazard ratios in terms of: (i) absolute risk of event-free survival within a particular period of time; (ii) absolute risk of an event within a particular period of time; and (iii) median time to the event. All methods are built on an assumption of consistent relative effects (i.e. that the hazard ratio does not vary over time).

(i) Absolute risk of event-free survival within a particular period of time

Event-free survival (e.g. overall survival) is commonly reported by individual studies. To obtain absolute effects for time-to-event outcomes measured as event-free survival, the summary HR can be used in conjunction with an assumed proportion of patients who are event-free in the comparator group (Tierney et al 2007). This proportion of patients will be specific to a period of time of observation. However, it is not strictly necessary to specify this period of time. For instance, a proportion of 50% of event-free patients might apply to patients with a high event rate observed over 1 year, or to patients with a low event rate observed over 2 years.

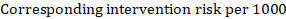

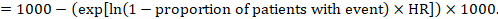

As an example, suppose the meta-analytic hazard ratio is 0.42 (95% CI 0.25 to 0.72). Assuming a comparator group risk of event-free survival (e.g. for overall survival people being alive) at 2 years of ACR = 900 per 1000 = 0.9 we obtain:

so that 956 per 1000 people will be alive with the experimental intervention at 2 years. The derivation of the risk should be explained in a comment or footnote.
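A minimal sketch of this calculation, assuming the usual relation under a constant hazard ratio in which the event-free proportion with the intervention equals the comparator proportion raised to the power HR. The function name is hypothetical. The result is a proportion of roughly 0.957, in line with the per-1000 figure quoted above up to rounding.

```python
import math


def event_free_with_intervention(acr_event_free: float, hr: float) -> float:
    """Event-free proportion with the intervention.

    Assumes exp(ln(ACR) * HR), i.e. ACR ** HR, with ACR the assumed
    event-free proportion in the comparator group.
    """
    return math.exp(math.log(acr_event_free) * hr)


alive = event_free_with_intervention(0.9, 0.42)
print(f"Event-free at 2 years: {alive:.4f}")
```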

(ii) Absolute risk of an event within a particular period of time

To obtain this absolute effect, again the summary HR can be used (Tierney et al 2007):

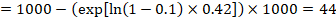

In the example, suppose we assume a comparator group risk of events (e.g. for mortality, people being dead) at 2 years of ACR = 100 per 1000 = 0.1. We obtain:

so that 44 per 1000 people will be dead with the experimental intervention at 2 years.
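The same example can be sketched from the event side. This assumes the complementary form of the relation used for event-free survival, 1 − exp(ln(1 − ACR) × HR), where ACR is now the comparator risk of the event; the function name is hypothetical. The computed proportion is roughly 0.043, which matches the per-1000 figure above up to rounding.

```python
import math


def event_risk_with_intervention(acr_event: float, hr: float) -> float:
    """Risk of the event with the intervention.

    Assumes 1 - exp(ln(1 - ACR) * HR), with ACR the assumed comparator
    risk of the event over the period of interest.
    """
    return 1 - math.exp(math.log(1 - acr_event) * hr)


dead = event_risk_with_intervention(0.1, 0.42)
print(f"Risk of event at 2 years: {dead:.4f}")
```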

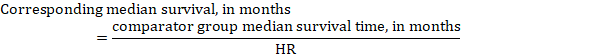

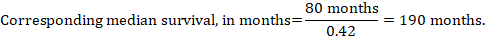

(iii) Median time to the event

Instead of absolute numbers, the time to the event in the intervention and comparison groups can be expressed as median survival time in months or years. To obtain median survival time the pooled HR can be applied to an assumed median survival time in the comparator group (Tierney et al 2007):

In the example, assuming a comparator group median survival time of 80 months, we obtain:
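The formula and result for this step are not reproduced in the text here. A sketch of the calculation, assuming the commonly used relation in which the median survival time with the intervention is the comparator median divided by the hazard ratio, gives about 190 months. The function name is hypothetical.

```python
def median_time_with_intervention(comparator_median: float, hr: float) -> float:
    """Median time to event with the intervention.

    Assumes comparator median divided by HR, which holds when the
    hazard ratio is constant over time and survival is roughly exponential.
    """
    return comparator_median / hr


median_months = median_time_with_intervention(80, 0.42)
print(f"Median survival with intervention: {median_months:.1f} months")
```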

For all three of these options for re-expressing results of time-to-event analyses, upper and lower confidence limits for the corresponding intervention risk are obtained by replacing HR by its upper and lower confidence limits, respectively (e.g. replacing 0.42 with 0.25, then with 0.72, in the example). Again, as for dichotomous outcomes, such confidence intervals do not incorporate uncertainty in the assumed comparator group risks. This is of special concern for long-term survival with a low or moderate mortality rate and a corresponding high number of censored patients (i.e. a low number of patients at risk and a high censoring rate).
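The substitution of HR confidence limits can be sketched for all three quantities at once. The formulas are the assumed relations described above (comparator proportion raised to the power HR, its complement, and comparator median divided by HR), the helper names are hypothetical, and the comparator values are those of the worked example. Note that the lower limit of the HR produces the upper limit for event-free survival and median time, so the sketch sorts the two results.

```python
import math

HR, HR_LOW, HR_HIGH = 0.42, 0.25, 0.72  # pooled HR and 95% CI from the example


def event_free(hr, acr_event_free=0.9):
    return math.exp(math.log(acr_event_free) * hr)


def event_risk(hr, acr_event=0.1):
    return 1 - math.exp(math.log(1 - acr_event) * hr)


def median_time(hr, comparator_median=80):
    return comparator_median / hr


def with_limits(quantity):
    """Point estimate plus lower and upper limits from the HR confidence limits."""
    limits = sorted([quantity(HR_LOW), quantity(HR_HIGH)])
    return quantity(HR), limits[0], limits[1]


for label, quantity in [("event-free", event_free),
                        ("event risk", event_risk),
                        ("median (months)", median_time)]:
    point, low, high = with_limits(quantity)
    print(f"{label}: {point:.3f} ({low:.3f} to {high:.3f})")
```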

14.1.6 Detailed contents of a ‘Summary of findings’ table

14.1.6.1 Table title and header

The title of each ‘Summary of findings’ table should specify the healthcare question, framed in terms of the population and making it clear exactly what comparison of interventions is made. In Figure 14.1.a, the population is people taking long aeroplane flights, the intervention is compression stockings, and the control is no compression stockings.

The first rows of each ‘Summary of findings’ table should provide the following ‘header’ information:

Patients or population: This further clarifies the population (and possibly the subpopulations) of interest and ideally the magnitude of risk of the most crucial adverse outcome at which an intervention is directed. For instance, people on a long-haul flight may be at different risks for DVT; those using selective serotonin reuptake inhibitors (SSRIs) might be at different risk for side effects; while those with atrial fibrillation may be at low (< 1%), moderate (1% to 4%) or high (> 4%) yearly risk of stroke.

Setting: This should state any specific characteristics of the settings of the healthcare question that might limit the applicability of the summary of findings to other settings (e.g. primary care in Europe and North America).

Intervention: The experimental intervention.

Comparison: The comparator intervention (including no specific intervention).

14.1.6.2 Outcomes

The rows of a ‘Summary of findings’ table should include all desirable and undesirable health outcomes (listed in order of importance) that are essential for decision making, up to a maximum of seven outcomes. If there are more outcomes in the review, review authors will need to omit the less important outcomes from the table; the decision about which outcomes are critical or important to the review should be made during protocol development (see Chapter 3). Review authors should provide time frames for the measurement of the outcomes (e.g. 90 days or 12 months) and the type of instrument scores (e.g. ranging from 0 to 100).

Note that review authors should include the pre-specified critical and important outcomes in the table whether data are available or not. However, they should be alert to the possibility that the importance of an outcome (e.g. a serious adverse effect) may only become known after the protocol was written or the analysis was carried out, and should take appropriate actions to include these in the ‘Summary of findings’ table.

The ‘Summary of findings’ table can include effects in subgroups of the population for different comparator risks and effect sizes separately. For instance, in Figure 14.1.b effects are presented for children younger and older than 5 years separately. Review authors may also opt to produce separate ‘Summary of findings’ tables for different populations.

Review authors should include serious adverse events, but it might be possible to combine minor adverse events as a single outcome, and describe this in an explanatory footnote (note that it is not appropriate to add events together unless they are independent, that is, a participant who has experienced one adverse event has an unaffected chance of experiencing the other adverse event).

Outcomes measured at multiple time points represent a particular problem. In general, to keep the table simple, review authors should present multiple time points only for outcomes critical to decision making, where either the result or the decision made is likely to vary over time. The remainder should be presented at a common time point where possible.

Review authors can present continuous outcome measures in the ‘Summary of findings’ table and should endeavour to make these interpretable to the target audience. This requires that the units are clear and readily interpretable, for example, days of pain, or frequency of headache, and the name and scale of any measurement tools used should be stated (e.g. a Visual Analogue Scale, ranging from 0 to 100). However, many measurement instruments are not readily interpretable by non-specialist clinicians or patients, for example, points on a Beck Depression Inventory or quality of life score. For these, a more interpretable presentation might involve converting a continuous to a dichotomous outcome, such as >50% improvement (see Chapter 15, Section 15.5).

14.1.6.3 Best estimate of risk with comparator intervention

Review authors should provide up to three typical risks for participants receiving the comparator intervention. For dichotomous outcomes, we recommend that these be presented in the form of the number of people experiencing the event per 100 or 1000 people (natural frequency) depending on the frequency of the outcome. For continuous outcomes, this would be stated as a mean or median value of the outcome measured.
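As a small illustration of the natural frequency format, the sketch below converts a risk expressed as a proportion into a count per 100 or per 1000 people. The function name is hypothetical, and the choice of denominator is left to the caller because the text does not fix a rule for it.

```python
def natural_frequency(risk: float, denominator: int = 1000) -> str:
    """Express a risk (proportion between 0 and 1) as 'N per 100' or 'N per 1000'."""
    if not 0 <= risk <= 1:
        raise ValueError("risk must be a proportion between 0 and 1")
    if denominator not in (100, 1000):
        raise ValueError("denominator must be 100 or 1000")
    return f"{round(risk * denominator)} per {denominator}"


print(natural_frequency(0.223))       # a rarer outcome, per 1000
print(natural_frequency(0.10, 100))   # a more common outcome, per 100
```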

Estimated or assumed comparator intervention risks could be based on assessments of typical risks in different patient groups derived from the review itself, individual representative studies in the review, or risks derived from a systematic review of prognosis studies or other sources of evidence which may in turn require an assessment of the certainty for the prognostic evidence (Spencer et al 2012, Iorio et al 2015). Ideally, risks would reflect groups that clinicians can easily identify on the basis of their presenting features.

An explanatory footnote should specify the source or rationale for each comparator group risk, including the time period to which it corresponds where appropriate. In Figure 14.1.a, clinicians can easily differentiate individuals with risk factors for deep venous thrombosis from those without. If there is known to be little variation in baseline risk then review authors may use the median comparator group risk across studies. If typical risks are not known, an option is to choose the risk from the included studies, providing the second highest for a high and the second lowest for a low risk population.
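The fallback options described above (median across studies, or second lowest and second highest comparator group risks) can be sketched as follows. The study risks are invented purely for illustration, the function name is hypothetical, and the requirement of at least four studies is an assumption made here so that the low and high values are distinct.

```python
from statistics import median


def low_and_high_comparator_risks(study_risks):
    """Return (second lowest, second highest) comparator group risks."""
    if len(study_risks) < 4:
        raise ValueError("need at least four studies for distinct low and high risks")
    ordered = sorted(study_risks)
    return ordered[1], ordered[-2]


# Hypothetical comparator group risks from five included studies
study_risks = [0.30, 0.05, 0.12, 0.02, 0.08]

low_risk, high_risk = low_and_high_comparator_risks(study_risks)
median_risk = median(study_risks)
print(f"low: {low_risk}, median: {median_risk}, high: {high_risk}")
```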

14.1.6.4 Risk with intervention

For dichotomous outcomes, review authors should provide a corresponding absolute risk for each comparator group risk, along with a confidence interval. This absolute risk with the (experimental) intervention will usually be derived from the meta-analysis result presented in the relative effect column (see Section 14.1.6.6). Formulae are provided in Section 14.1.5. Review authors should present the absolute effect in the same format as the risks with comparator intervention (see Section 14.1.6.3), for example as the number of people experiencing the event per 1000 people.

For continuous outcomes, a difference in means or standardized difference in means should be presented with its confidence interval. These will typically be obtained directly from a meta-analysis. Explanatory text should be used to clarify the meaning, as in Figures 14.1.a and 14.1.b.

14.1.6.5 Risk difference

For dichotomous outcomes, the risk difference can be provided using one of the ‘Summary of findings’ table formats as an additional option (see Figure 14.1.b). This risk difference expresses the difference between the experimental and comparator intervention and will usually be derived from the meta-analysis result presented in the relative effect column (see Section 14.1.6.6). Formulae are provided in Section 14.1.5. Review authors should present the risk difference in the same format as assumed and corresponding risks with comparator intervention (see Section 14.1.6.3); for example, as the number of people experiencing the event per 1000 people or as percentage points if the assumed and corresponding risks are expressed as percentages.

For continuous outcomes, if the ‘Summary of findings’ table includes this option, the mean difference can be presented here and the ‘corresponding risk’ column left blank (see Figure 14.1.b).

14.1.6.6 Relative effect (95% CI)

The relative effect will typically be a risk ratio or odds ratio (or occasionally a hazard ratio) with its accompanying 95% confidence interval, obtained from a meta-analysis performed on the basis of the same effect measure. Risk ratios and odds ratios are similar when the comparator intervention risks are low and effects are small, but may differ considerably when comparator group risks increase. The meta-analysis may involve an assumption of either fixed or random effects, depending on what the review authors consider appropriate, and implying that the relative effect is either an estimate of the effect of the intervention, or an estimate of the average effect of the intervention across studies, respectively.
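The divergence between the two measures can be illustrated numerically. The sketch below holds a risk ratio fixed at an arbitrary illustrative value of 0.8 and computes the odds ratio implied at several comparator risks; the function name and the chosen values are hypothetical.

```python
def odds_ratio_for(acr: float, rr: float) -> float:
    """Odds ratio implied by a risk ratio at a given comparator risk."""
    intervention_risk = acr * rr
    odds_intervention = intervention_risk / (1 - intervention_risk)
    odds_comparator = acr / (1 - acr)
    return odds_intervention / odds_comparator


rr = 0.8  # illustrative risk ratio, not taken from any figure
for acr in (0.01, 0.10, 0.50):
    print(f"comparator risk {acr:.2f}: RR = {rr}, OR = {odds_ratio_for(acr, rr):.3f}")
```

At a comparator risk of 1% the odds ratio is almost identical to the risk ratio, while at 50% the two differ noticeably.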

14.1.6.7 Number of participants (studies)

This column should include the number of participants assessed in the included studies for each outcome and the corresponding number of studies that contributed these participants.

14.1.6.8 Certainty of the evidence (GRADE)

Review authors should comment on the certainty of the evidence (also known as quality of the body of evidence or confidence in the effect estimates). Review authors should use the specific evidence grading system developed by the GRADE Working Group (Atkins et al 2004, Guyatt et al 2008, Guyatt et al 2011a), which is described in detail in Section 14.2. The GRADE approach categorizes the certainty in a body of evidence as ‘high’, ‘moderate’, ‘low’ or ‘very low’ by outcome. This is a result of judgement, but the judgement process operates within a transparent structure. As an example, the certainty would be ‘high’ if the summary were of several randomized trials with low risk of bias, but the rating of certainty becomes lower if there are concerns about risk of bias, inconsistency, indirectness, imprecision or publication bias. Judgements other than of ‘high’ certainty should be made transparent using explanatory footnotes or the ‘Comments’ column in the ‘Summary of findings’ table (see Section 14.1.6.10).

14.1.6.9 Comments

The aim of the ‘Comments’ field is to help interpret the information or data identified in the row. For example, this may be on the validity of the outcome measure or the presence of variables that are associated with the magnitude of effect. Important caveats about the results should be flagged here. Not all rows will need comments, and it is best to leave a blank if there is nothing warranting a comment.

14.1.6.10 Explanations