Reporting Bias: Definition, Types, Examples & Mitigation

Reporting bias is a type of selection bias that occurs when only certain observations or results are reported or published. It can seriously distort the accuracy of research findings, so it is important to consider it whenever you conduct or read research. In this article, we discuss what reporting bias is, its main types, and some examples.

What is Reporting Bias?

Reporting bias is a type of selection bias that occurs when the results of a study are skewed due to the way they are reported. Reporting bias happens when researchers or scientists choose to report only certain data, even though other data may exist that would have influenced their findings.

For example, suppose you studied the effects of eating chocolate on mice but used only mice from a particular region. Your results could be skewed because those mice would not represent all mice; strictly speaking, that is a sampling problem. Reporting bias proper occurs when the data are filtered or manipulated before they are reported, as in cherry-picking or data dredging (sometimes loosely called data mining).

The result can be unreliable or biased findings reported by an organization or individual. Reporting bias is closely linked to selection bias in the narrower sense, where participants are chosen based on their ability to influence the outcome, or for other reasons, in a way that creates an inaccurate picture of reality.


Another form of reporting bias occurs when researchers do not report all the results of their studies. They may leave out information because they don’t think it’s important, or because they want their findings to seem more impressive than they are.

Meta-analysis is one of the main tools for detecting this problem. A meta-analysis statistically combines the results of multiple studies on the same topic, often conducted by different researchers. It gives a more precise overall estimate, helps show whether a particular finding holds up across studies, and can reveal patterns that suggest studies are missing, for example when small studies with unfavorable results are conspicuously absent.
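To make the pooling step concrete, here is a minimal sketch of the common inverse-variance fixed-effect method. The study numbers are hypothetical, and the article does not prescribe a particular pooling method. Each study is weighted by the inverse of its squared standard error, so more precise studies count for more. Pooling cannot repair studies that were never reported: if the missing studies are systematically null, the pooled estimate inherits that bias.

```python
import math

def fixed_effect_pool(effects, std_errors):
    """Inverse-variance fixed-effect pooling of study estimates.

    Each study is weighted by 1 / SE^2. Returns the pooled estimate
    and its standard error.
    """
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need one standard error per effect, and at least one study")
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total
    pooled_se = math.sqrt(1.0 / total)
    return pooled, pooled_se

# Hypothetical mean differences and standard errors from three studies.
effects = [0.30, 0.10, 0.20]
ses = [0.10, 0.20, 0.10]
est, se = fixed_effect_pool(effects, ses)
low, high = est - 1.96 * se, est + 1.96 * se
print(f"pooled = {est:.3f} (95% CI {low:.3f} to {high:.3f})")
```

Here the second study has twice the standard error of the others and therefore receives a quarter of their weight.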


Types of Reporting Bias

1. Outcome reporting bias

Outcome reporting bias occurs when the outcomes reported from a study are selected on the basis of the results. For example, an outcome that was not originally planned (or expected) is reported because it supports the hypothesis being tested, while planned outcomes that did not support it are left out. This can be a conscious or unconscious decision by the researcher.

For example, suppose you set out to test whether eating more vegetables improves one pre-specified health outcome, such as blood pressure. Suppose that outcome shows no difference, but another measure you happened to collect does. If you report only the second measure as evidence that vegetables improve health, you may have fallen victim to outcome reporting bias, because that was not the outcome your study was designed to test.
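A little arithmetic shows why selectively reporting outcomes is misleading. If a study measures several independent outcomes and there is no real effect on any of them, the chance that at least one crosses p < 0.05 by luck grows quickly with the number of outcomes. Reporting only that outcome then looks like a genuine finding.

The sketch below assumes independent outcomes and p-values that are uniformly distributed under the null. It computes the probability exactly and checks it with a simulation.

```python
import random

def familywise_error(n_outcomes, alpha=0.05):
    """Probability that at least one of n independent null outcomes
    reaches p < alpha purely by chance."""
    return 1 - (1 - alpha) ** n_outcomes

def simulate(n_outcomes, alpha=0.05, n_studies=20_000, seed=1):
    """Monte Carlo check: under the null, each p-value is uniform on [0, 1].
    A study 'finds something' if any of its outcomes has p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        if any(rng.random() < alpha for _ in range(n_outcomes)):
            hits += 1
    return hits / n_studies

print(f"{familywise_error(10):.3f}")  # 0.401
print(f"{simulate(10):.3f}")          # close to the exact value
```

With ten outcomes, roughly four studies in ten of a useless intervention would have something "significant" to report.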

2. Publication bias

Publication bias is another form of reporting bias. Journals and other outlets are more likely to publish studies with positive or statistically significant results than studies with negative or null results. As a result, the published literature can overstate how well an intervention works, and readers may wrongly conclude that the question is settled and needs no further research. A related problem is the “file drawer” phenomenon, where researchers never submit negative results because they seem unlikely to help their reputation or career advancement.
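A small simulation can illustrate the distortion. It rests on hypothetical assumptions: many small two-arm studies measure the same modest true effect, and only the positive, statistically significant ones get published. The average of the "published" studies then comes out far larger than the true effect. The effect size, sample size and significance rule are illustrative choices, not figures from the article.

```python
import math
import random
import statistics

def simulate_publication_bias(true_effect=0.1, n_per_arm=20, n_studies=5_000, seed=42):
    """Simulate small two-arm studies of a standardized mean difference.

    Each study's estimate is drawn from Normal(true_effect, se), with
    se = sqrt(2 / n_per_arm) (unit variance in both arms). Only studies
    with a positive, significant result (z > 1.96) are 'published'.
    """
    rng = random.Random(seed)
    se = math.sqrt(2 / n_per_arm)
    all_estimates = [rng.gauss(true_effect, se) for _ in range(n_studies)]
    published = [est for est in all_estimates if est / se > 1.96]
    return statistics.mean(all_estimates), statistics.mean(published), len(published)

mean_all, mean_pub, n_pub = simulate_publication_bias()
print(f"mean of all studies:       {mean_all:.2f}")
print(f"mean of published studies: {mean_pub:.2f} ({n_pub} published)")
```

Nothing is wrong with any individual published study here. The bias comes entirely from which studies reach print.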


3. Knowledge reporting bias

Knowledge reporting bias refers to the fact that researchers may not report all their knowledge about a topic or experiment because they feel it isn’t important enough or doesn’t fit their hypothesis.

Here’s an example. Say two researchers are studying whether people feel healthier if they eat vegetables every day rather than once or twice a week. One researcher finds no difference between eating vegetables daily and once a week, while the other finds a health improvement when people eat vegetables daily. If the first researcher decides not to report the null finding, readers see only the positive result and never learn that the effect may not hold.

4. Multiple (duplicate) publication bias

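Multiple publication bias occurs when the same study, or the same data set, is published more than once, for example as separate articles reporting different analyses, subgroups or follow-up periods. Studies with positive results are more likely to be published repeatedly. Readers, and meta-analysts who treat each article as an independent study, may therefore count the same participants several times. This skews the evidence toward positive outcomes and can lead to false conclusions about the effectiveness of a treatment or program.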
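One safeguard in evidence synthesis is to group reports by the underlying study before pooling, for example by trial registration number. The sketch below uses hypothetical publications and identifiers. It shows how counting each article separately inflates the apparent amount of evidence. The keep-the-largest-report rule is one simple, assumed choice; real reviews decide case by case which report to use.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Publication:
    title: str
    trial_id: str      # hypothetical registry identifier shared by reports of one trial
    participants: int

def count_participants(pubs, deduplicate=True):
    """Total participants across publications; optionally count each trial once."""
    if not deduplicate:
        return sum(p.participants for p in pubs)
    largest = {}
    for p in pubs:
        # Keep only the largest report of each trial (e.g. the main analysis).
        largest[p.trial_id] = max(largest.get(p.trial_id, 0), p.participants)
    return sum(largest.values())

pubs = [
    Publication("Trial A: main results", "TRIAL-A", 400),
    Publication("Trial A: subgroup analysis", "TRIAL-A", 150),
    Publication("Trial A: long-term follow-up", "TRIAL-A", 380),
    Publication("Trial B: main results", "TRIAL-B", 300),
]
print(count_participants(pubs, deduplicate=False))  # 1230
print(count_participants(pubs))                     # 700
```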

5. Time lag bias

Time lag bias occurs when the speed of publication depends on the results. Studies with positive or statistically significant findings tend to be published sooner, while those with negative or null findings are published later, if at all. Anyone reviewing the evidence at a given point in time therefore sees a disproportionate share of positive results.

6. Citation bias

Citation bias occurs when whether a study is cited depends on its results. Studies with positive or supportive findings tend to be cited more often than those with negative or null findings. Readers who follow the citation trail, including reviewers who search reference lists, are then more likely to find the positive studies.


Examples of Reporting Bias

For example, suppose you study how many people with blue eyes wear glasses and find that only 40% of them do. The figure could be low because your study did not include all the relevant people with blue eyes. It could also reflect other factors, such as participants who own glasses but rarely wear them answering that they don’t.

Reporting bias can also arise when someone running a survey or experiment asks leading questions. For example, instead of asking “Do you like eating bananas?” they might say “Bananas are delicious.” Instead of asking “Have you ever eaten bananas?” they might ask “Wouldn’t it be nice if we could eat bananas every day?” The neutral questions do not push respondents toward an answer. If someone answers yes to a leading question, however, it may be only because they feel they are expected to agree with the pollster.


If you ask people to rate their performance as a manager on a scale from 1 to 5, and then you ask them about their coworkers’ performance, they might tell you that all their coworkers are doing great (because they don’t want to look bad). This is an example of reporting bias because it skews the results of your study by making it seem like everyone is performing well when in reality, it may be that some people are doing poorly.

Effects and Implications of Reporting Bias

Reporting bias can lead to false conclusions being drawn from experiments and may even lead to harm for patients or subjects involved in a study. For example, if a researcher does not report all of their data, it could lead them to think that their treatment works better than it does.

They might then prescribe the treatment to patients who would not benefit from it, or lead other researchers to build on findings that do not hold up. Similarly, when a study’s data depend on who chooses to report, the results are likely to be biased toward positive findings. If people with positive experiences are more likely to report them than people with negative experiences, the negative experiences will be underrepresented.

Reporting bias can also have serious implications for your surveys and your business. If you’re trying to find out whether your product or service solves customers’ problems, reporting bias can make it hard to get an accurate picture of how well it works across different demographics and situations.
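The size of this effect follows directly from Bayes’ rule. The sketch below uses hypothetical response rates. It computes the share of positive responses you would observe when satisfied and dissatisfied customers reply at different rates, even though the true split is even.

```python
def observed_share(true_share, p_report_positive, p_report_negative):
    """Share of positive outcomes among those who report, when positive and
    negative outcomes are reported at different rates (Bayes' rule)."""
    reported_pos = true_share * p_report_positive
    reported_neg = (1 - true_share) * p_report_negative
    return reported_pos / (reported_pos + reported_neg)

# Hypothetical: half of customers had a good experience, but satisfied
# customers reply 80% of the time and dissatisfied ones only 40%.
print(round(observed_share(0.5, 0.8, 0.4), 3))  # 0.667
```

A product that pleases half its users would look as if it pleased two thirds of them, and collecting more responses does not fix this on its own.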


How to Prevent or Manage Reporting Bias

- Be honest with yourself about whether your results are statistically significant and practically meaningful. If they are not, don’t present them as if they were.

- Consider replicating your experiment before publishing, so that readers who want more proof have more evidence to judge your findings by.

- Consider using statistics or data to back up your claims whenever possible.

- Provide an independent check on your results. You can do this by collecting data from additional sources, asking colleagues who have not been involved in the project to review the results for plausibility, or running a second analysis that uses more conservative assumptions.
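When a study tests several outcomes, one conservative way to be honest about significance is to adjust the p-values for multiple comparisons. The article does not name a method. The Holm-Bonferroni procedure used in this minimal sketch is one standard choice, and the p-values are hypothetical.

```python
def holm_adjust(p_values):
    """Holm-Bonferroni adjusted p-values, returned in the original order.

    Sort p-values ascending, multiply the i-th smallest (0-based) by (m - i),
    enforce monotonicity with a running maximum, and cap at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted

# Hypothetical p-values for four outcomes from one study.
raw = [0.010, 0.040, 0.030, 0.200]
adj = holm_adjust(raw)
print([round(p, 3) for p in adj])  # [0.04, 0.09, 0.09, 0.2]
```

Three outcomes look significant before adjustment. At the usual 0.05 threshold, only the first remains significant afterwards.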


Reporting bias arises when what gets reported from a study is shaped by its results or by the researcher’s expectations of what they want to find, and it can have many causes. Practicing transparency in your research is the most reliable way to manage it.


Author: busayo.longe


Catalogue of Bias

Reporting biases

A systematic distortion that arises from the selective disclosure or withholding of information by parties involved in the design, conduct, analysis, or dissemination of a study or research findings


“Reporting biases” is an umbrella term that covers a range of different types of biases. It has been described as the most significant form of scientific misconduct (Al-Marzouki et al. 2005). Reporting biases have been recognised for hundreds of years, dating back to the 17th century (Dickersin & Chalmers, 2010). Since then, various definitions of reporting biases have been proposed:

- The Dictionary of Epidemiology defines reporting bias as the “selective revelation or suppression of information (e.g., about past medical history, smoking, sexual experiences) or of study results.”

- The Cochrane Handbook states it arises “when the dissemination of research findings is influenced by the nature and direction of results.”

- The James Lind Library states “biased reporting of research occurs when the direction or statistical significance of results influence whether and how research is reported.”

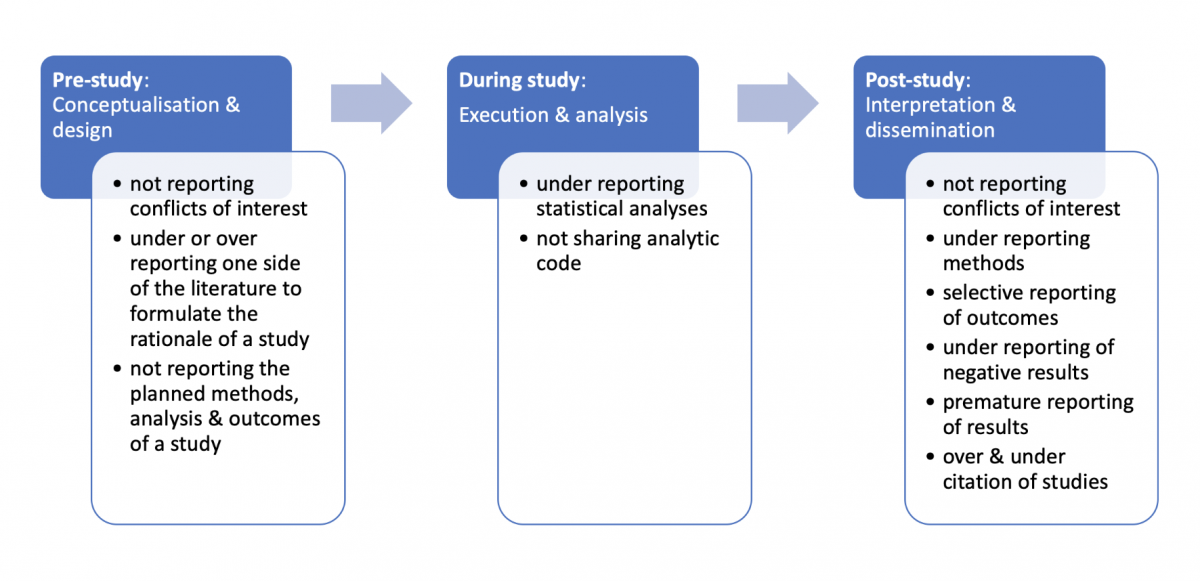

Our definition of reporting biases is a distortion of presented information from research due to the selective disclosure or withholding of information by parties involved with regards to the topic selected for study and the design, conduct, analysis, or dissemination of study methods, findings or both. Researchers have previously described seven types of reporting biases: publication bias, time-lag bias, multiple (duplicate) publication bias, location bias, citation bias, language bias and outcome reporting bias (Higgins & Green, 2011). Figure 1 illustrates where reporting biases can occur in the lifecycle of research and provides several examples of reporting biases.


A narrative review conducted by McGauran and colleagues (2010) found that reporting biases are widespread in the medical literature. They identified reporting biases in 50 types of pharmacological, surgical, diagnostic and preventative interventions. These included the withholding of study data and active attempts by manufacturers to suppress the publication of findings.

A systematic review by Jones and colleagues (2015) compared the outcomes of randomised controlled trials specified in registered protocols with those in subsequent peer-reviewed journal articles. There were discrepancies between prespecified and reported outcomes in a third of the studies. Thirteen per cent of trials introduced a new outcome in the published articles compared with those specified in the registered protocols.
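For a single trial, the kind of comparison Jones and colleagues made can be sketched as a set comparison. Prespecified outcomes are checked against reported ones. This is an illustration with hypothetical outcome names, not the review’s actual method. Real comparisons require judgment about whether differently worded outcomes, time points or measures are the same.

```python
def compare_outcomes(registered, published):
    """Compare outcomes listed in a registered protocol with those in the
    published article. Names are matched exactly here; in practice they
    would first need normalising (synonyms, time points, units)."""
    registered, published = set(registered), set(published)
    return {
        "omitted": sorted(registered - published),     # prespecified but not reported
        "introduced": sorted(published - registered),  # reported but not prespecified
        "consistent": sorted(registered & published),
    }

# Hypothetical trial.
protocol = ["pain score at 12 weeks", "serious adverse events", "quality of life"]
article = ["pain score at 12 weeks", "pain score at 4 weeks", "quality of life"]
print(compare_outcomes(protocol, article))
```

Here the harm outcome silently disappears and a new, earlier time point appears. Both patterns echo the discrepancies described above.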

In a cohort study of Cochrane systematic reviews, Saini and colleagues (2014) found 86% of reviews did not report data on the main harm outcome of interest.

Another cohort study found considerable inconsistency in the reporting of adverse events when comparing sponsors’ databases with published articles (Scharf & Colevas, 2006). In 14 of the 22 included studies, the number of adverse events in the sponsor’s database differed from the published articles by 20% or more.

When the full clinical study reports of oseltamivir trials were analysed instead of the shorter core reports, over half (55%) of the earlier risk of bias assessments were reclassified from ‘low’ risk of bias to ‘high’ (Jefferson et al. 2014).

Trials and systematic reviews are used by clinicians and policymakers to develop evidence-based guidelines and make decisions about treatment or prevention of health problems. When the evidence base available to clinicians, policymakers or patients is incomplete or distorted, healthcare decisions and recommendations are made on biased evidence.

Vioxx (Rofecoxib), a COX-2 inhibitor prescribed for osteoarthritis pain, provides an important example of under-reporting and misreporting of data that led to significant patient harm.

The first safety analysis of the largest study of Rofecoxib found a 79% greater risk of death or serious cardiovascular event in one treatment group compared with the other (Krumholz et al., 2007). This information was not disclosed by the manufacturer (Merck), and the trial continued. The cardiovascular risk associated with Rofecoxib was obscured in several ways.

A number of significant conflicts of interest among board members of Merck were not disclosed, either while the trial was in progress or when it was published (Krumholz et al., 2007). Merck faced legal claims from nearly 30,000 people who experienced adverse cardiovascular events while taking Rofecoxib.

If benefits are over-reported and harms are under-reported, clinicians, patients and the public will have a false sense of security about the safety of treatments. This results in unnecessary suffering and death (Cowley et al. 1993), perpetuates research waste and misguides future research (Glasziou & Chalmers, 2018).

Transparency is the most important action to safeguard health research.

Pre-study: The results of prospectively registered trials are significantly more likely to be published than those of unregistered trials (adjusted OR 4.53, 95% CI 1.12-18.34; Chan et al., 2017). Prospective registration of all clinical trials should be required, and encouraged for other study designs, by journal editors, regulators, research ethics committees, funders, and sponsors.
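The figure from Chan et al. is an adjusted odds ratio from their own model. As a reminder of what an odds ratio and its confidence interval express, the sketch below computes the simpler unadjusted version, with a Wald interval, from a hypothetical 2×2 table. The counts are invented for illustration and are not the study’s data. Wide intervals typically arise when some cells of the table contain few observations.

```python
import math

def odds_ratio_ci(a, b, c, d, z=1.96):
    """Unadjusted odds ratio with a Wald 95% CI from a 2x2 table:

                     published  unpublished
        registered       a           b
        unregistered     c           d
    """
    if min(a, b, c, d) == 0:
        raise ValueError("zero cell: apply a continuity correction first")
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # SE of log(OR)
    low = math.exp(math.log(or_) - z * se_log)
    high = math.exp(math.log(or_) + z * se_log)
    return or_, low, high

# Hypothetical counts, not the data from Chan et al.
or_, low, high = odds_ratio_ci(a=80, b=20, c=30, d=20)
print(f"OR {or_:.2f} (95% CI {low:.2f} to {high:.2f})")
```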

During the study: Open science practices, such as making de-identified data and analytical code publicly available through platforms like GitHub or the Open Science Framework, aid reproducibility, prevent duplication, reduce waste, accelerate innovation, help identify errors and help prevent reporting biases.

Post-study: Reporting guidelines such as CONSORT can help guide researchers to improve their reporting of randomised trials (Moher et al., 2010).

Other checklists and tools have been developed to assess the risk of reporting biases in studies, including the Cochrane Risk of Bias Tool, GRADE and ORBIT-II (Page et al., 2018).

Catalogue of Bias. Richards GC, Onakpoya IJ. Reporting biases. In: Catalog Of Bias 2019: www.catalogueofbiases.org/reportingbiases

Related biases

- Outcome reporting bias

- Publication bias

- Selection bias

Al-Marzouki et al. The effect of scientific misconduct on the results of clinical trials: a Delphi survey. Contemp Clin Trials 2005; 26: 331-337.

Dickersin & Chalmers. Recognising, investigating and dealing with incomplete and biased reporting of clinical research: from Francis Bacon to the World Health Organisation. JLL Bulletin: Commentaries on the history of treatment evaluation 2010.

Higgins & Green. Definitions of some types of reporting biases. Cochrane Handbook for Systematic Reviews of Interventions, v5.1.0. 2011.

McGauran et al. Reporting bias in medical research – a narrative review. Trials 2010; 11: 37.

Jones et al. Comparison of registered and published outcomes in randomized controlled trials: a systematic review. BMC Med 2015; 13: 282.

Saini et al. Selective reporting bias of harm outcomes within studies: findings from a cohort of systematic reviews. BMJ 2014; 349.

Scharf & Colevas. Adverse event reporting in publications compared with sponsor database for cancer clinical trials. J Clin Oncol 2006; 24(24): 3933-8.

Jefferson et al. Risk of bias in industry-funded oseltamivir trials: comparison of core reports versus full clinical study reports. BMJ Open 2014; 4: e005253.

Krumholz et al. What have we learnt from Vioxx? BMJ 2007; 334(7585): 120-123.

Cowley et al. The effect of lorcainide on arrhythmias and survival in patients with acute myocardial infarction: an example of publication bias. Int J Cardiol 1993; 40(2): 161-6.

Glasziou & Chalmers. Research waste is still a scandal. BMJ 2018; 363: k4645.

Chan et al. Association of trial registration with reporting of primary outcomes in protocols and publications. JAMA 2017; 318(17): 1709-1711.

Moher et al. CONSORT 2010 Explanation and Elaboration: updated guidelines for reporting parallel group randomised trials. 2010; 340: c869.


Research Bias 101: What You Need To Know

By: Derek Jansen (MBA) | Expert Reviewed By: Dr Eunice Rautenbach | September 2022

If you’re new to academic research, research bias (also sometimes called researcher bias) is one of the many things you need to understand to avoid compromising your study. If you’re not careful, research bias can ruin the credibility of your study.

In this post, we’ll unpack the thorny topic of research bias. We’ll explain what it is, look at some common types of research bias and share some tips to help you minimise the potential sources of bias in your research.

Overview: Research Bias 101

- What is research bias (or researcher bias)?

- Bias #1 – Selection bias

- Bias #2 – Analysis bias

- Bias #3 – Procedural (admin) bias

So, what is research bias?

Well, simply put, research bias is when the researcher – that’s you – intentionally or unintentionally skews the process of a systematic inquiry, which then of course skews the outcomes of the study. In other words, research bias is what happens when you affect the results of your research by influencing how you arrive at them.

For example, if you planned to research the effects of remote working arrangements across all levels of an organisation, but your sample consisted mostly of management-level respondents, you’d run into a form of research bias. In this case, excluding input from lower-level staff (in other words, not getting input from all levels of staff) means that the results of the study would be ‘biased’ in favour of a certain perspective – that of management.

Of course, if your research aims and research questions were only interested in the perspectives of managers, this sampling approach wouldn’t be a problem – but that’s not the case here, as there’s a misalignment between the research aims and the sample.

Now, it’s important to remember that research bias isn’t always deliberate or intended. Quite often, it’s just the result of a poorly designed study, or practical challenges in terms of getting a well-rounded, suitable sample. While perfect objectivity is the ideal, some level of bias is generally unavoidable when you’re undertaking a study. That said, as a savvy researcher, it’s your job to reduce potential sources of research bias as much as possible.

To minimize potential bias, you first need to know what to look for. So, next up, we’ll unpack three common types of research bias we see at Grad Coach when reviewing students’ projects. These include selection bias, analysis bias, and procedural bias. Keep in mind that there are many different forms of bias that can creep into your research, so don’t take this as a comprehensive list – it’s just a useful starting point.

Bias #1 – Selection Bias

First up, we have selection bias. The example we looked at earlier (about only surveying management as opposed to all levels of employees) is a prime example of this type of research bias. In other words, selection bias occurs when your study’s design automatically excludes a relevant group from the research process and, therefore, negatively impacts the quality of the results.

With selection bias, the results of your study will be biased towards the group that it includes or favours, meaning that you’re likely to arrive at prejudiced results. For example, research into government policies that only includes participants who voted for a specific party is going to produce skewed results, as the views of those who voted for other parties will be excluded.

Selection bias commonly occurs in quantitative research, as the sampling strategy adopted can have a major impact on the statistical results. That said, selection bias does of course also come up in qualitative research, as there’s still plenty of room for skewed samples. So, it’s important to pay close attention to the makeup of your sample and make sure that you adopt a sampling strategy that aligns with your research aims. Of course, you’ll seldom achieve a perfect sample, and that’s okay. But you need to be aware of how your sample may be skewed and factor this into your thinking when you analyse the resultant data.
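One common way to factor a known skew into your analysis, in quantitative work at least, is post-stratification. You reweight each group’s results by that group’s real share of the population. This sketch uses hypothetical scores for the remote-working example, and this specific technique is our illustration rather than something the post prescribes. Reweighting only helps for groups that are actually in your sample. It assumes the people you did reach are representative of their group, and it cannot recover a group you excluded entirely.

```python
def poststratified_mean(group_means, population_shares):
    """Reweight group means by each group's share of the target population.

    group_means: mean response observed in each group in the sample
    population_shares: each group's share of the population (must sum to 1)
    """
    if abs(sum(population_shares.values()) - 1.0) > 1e-9:
        raise ValueError("population shares must sum to 1")
    return sum(group_means[g] * share for g, share in population_shares.items())

def sample_mean(group_means, sample_counts):
    """Unweighted mean, i.e. what the skewed sample reports as-is."""
    n = sum(sample_counts.values())
    return sum(group_means[g] * k for g, k in sample_counts.items()) / n

# Hypothetical remote-work satisfaction scores (1 to 5).
means = {"management": 4.2, "staff": 3.0}
counts = {"management": 80, "staff": 20}    # sample skewed toward managers
shares = {"management": 0.2, "staff": 0.8}  # actual make-up of the organisation
print(round(sample_mean(means, counts), 2))          # 3.96
print(round(poststratified_mean(means, shares), 2))  # 3.24
```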


Bias #2 – Analysis Bias

Next up, we have analysis bias. Analysis bias occurs when the analysis itself emphasises or discounts certain data points, so as to favour a particular result (often the researcher’s own expected result or hypothesis). In other words, analysis bias happens when you prioritise the presentation of data that supports a certain idea or hypothesis, rather than presenting all the data indiscriminately.

For example, if your study was looking into consumer perceptions of a specific product, you might present more analysis of data that reflects positive sentiment toward the product, and give less real estate to the analysis that reflects negative sentiment. In other words, you’d cherry-pick the data that suits your desired outcomes and as a result, you’d create a bias in terms of the information conveyed by the study.

Although this kind of bias is common in quantitative research, it can just as easily occur in qualitative studies, given the amount of interpretive power the researcher has. This may not be intentional or even noticed by the researcher, given the inherent subjectivity in qualitative research. As humans, we naturally search for and interpret information in a way that confirms or supports our prior beliefs or values (in psychology, this is called “confirmation bias”). So, don’t make the mistake of thinking that analysis bias is always intentional and you don’t need to worry about it because you’re an honest researcher – it can creep up on anyone.

To reduce the risk of analysis bias, a good starting point is to determine your data analysis strategy in as much detail as possible, before you collect your data. In other words, decide, in advance, how you’ll prepare the data, which analysis method you’ll use, and be aware of how different analysis methods can favour different types of data. Also, take the time to reflect on your own pre-conceived notions and expectations regarding the analysis outcomes (in other words, what do you expect to find in the data), so that you’re fully aware of the potential influence you may have on the analysis – and therefore, hopefully, can minimize it.
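One lightweight way to make a pre-specified plan verifiable is to write it down in a structured form and record a cryptographic fingerprint of it before you collect any data. This is a sketch with hypothetical plan fields, not a Grad Coach procedure. A hash only proves the plan hasn’t changed since you recorded the fingerprint. You’d still need to store that fingerprint somewhere with a trustworthy timestamp, such as a study registry or a public repository.

```python
import hashlib
import json

def plan_fingerprint(plan):
    """SHA-256 digest of an analysis plan. Keys are sorted so the same
    plan always serialises, and therefore hashes, identically."""
    canonical = json.dumps(plan, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

# Hypothetical plan, written before any data are collected.
plan = {
    "primary_outcome": "satisfaction score (1-5)",
    "exclusions": "responses with more than 50% of items missing",
    "method": "two-sample t-test, alpha = 0.05",
    "subgroups": ["management", "staff"],
}
registered = plan_fingerprint(plan)

# Later: any change to the plan produces a different fingerprint.
revised = dict(plan, method="Mann-Whitney U test")
print(plan_fingerprint(plan) == registered)     # True
print(plan_fingerprint(revised) == registered)  # False
```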

Bias #3 – Procedural Bias

Last but definitely not least, we have procedural bias, which is also sometimes referred to as administration bias. Procedural bias is easy to overlook, so it’s important to understand what it is and how to avoid it. This type of bias occurs when the administration of the study, especially the data collection aspect, has an impact on either who responds or how they respond.

A practical example of procedural bias would be when participants in a study are required to provide information under some form of constraint. For example, participants might be given insufficient time to complete a survey, resulting in incomplete or hastily-filled out forms that don’t necessarily reflect how they really feel. This can happen really easily, if, for example, you innocently ask your participants to fill out a survey during their lunch break.

Another form of procedural bias can happen when you improperly incentivise participation in a study. For example, offering a reward for completing a survey or interview might incline participants to provide false or inaccurate information just to get through the process as fast as possible and collect their reward. It could also potentially attract a particular type of respondent (a freebie seeker), resulting in a skewed sample that doesn’t really reflect your demographic of interest.

The format of your data collection method can also potentially contribute to procedural bias. If, for example, you decide to host your survey or interviews online, this could unintentionally exclude people who are not particularly tech-savvy, don’t have a suitable device or just don’t have a reliable internet connection. On the flip side, some people might find in-person interviews a bit intimidating (compared to online ones, at least), or they might find the physical environment in which they’re interviewed to be uncomfortable or awkward (maybe the boss is peering into the meeting room, for example). Either way, these factors all result in less useful data.

Although procedural bias is more common in qualitative research, it can come up in any form of fieldwork where you’re actively collecting data from study participants. So, it’s important to consider how your data is being collected and how this might impact respondents. Simply put, you need to take the respondent’s viewpoint and think about the challenges they might face, no matter how small or trivial these might seem. So, it’s always a good idea to have an informal discussion with a handful of potential respondents before you start collecting data and ask for their input regarding your proposed plan upfront.

Let’s Recap

Ok, so let’s do a quick recap. Research bias refers to any instance where the researcher, or the research design, negatively influences the quality of a study’s results, whether intentionally or not.

The three common types of research bias we looked at are:

- Selection bias – where a skewed sample leads to skewed results

- Analysis bias – where the analysis method and/or approach leads to biased results – and,

- Procedural bias – where the administration of the study, especially the data collection aspect, has an impact on who responds and how they respond.

As I mentioned, there are many other forms of research bias, but we can only cover a handful here. So, be sure to familiarise yourself with as many potential sources of bias as possible to minimise the risk of research bias in your study.



Reporting Biases

- Reference work entry

- First Online: 20 July 2022

- pp 2045–2071

- S. Swaroop Vedula, Asbjørn Hróbjartsson & Matthew J. Page

Clinical trials are experiments in human beings. Findings from these experiments, either by themselves or within research syntheses, are often meant to inform evidence-based clinical decision-making. These decisions can be misled when clinical trials are reported in a biased manner. For clinical trials to inform healthcare decisions without bias, their reporting should be complete, timely, transparent, and accessible. Reporting of clinical trials is biased when it is influenced by the nature and direction of its results. Reporting biases in clinical trials may manifest in different ways: results may not be reported at all, or they may be reported only in part, with delay, or in sources of scientific literature that are harder to access. Biased reporting of clinical trials can in turn introduce bias into research syntheses, with the eventual consequence being misinformed healthcare decisions. Clinical trial registration, access to protocols and statistical analysis plans, and guidelines for transparent and complete reporting are critical to prevent reporting biases.


Abraha I, Cherubini A, Cozzolino F, De Florio R, Luchetta ML, Rimland JM, Folletti I, Marchesi M, Germani A, Orso M, Eusebi P, Montedori A (2015) Deviation from intention to treat analysis in randomised trials and treatment effect estimates: meta-epidemiological study. BMJ 350:h2445

Article Google Scholar

Barbour V, Burch D, Godlee F, Heneghan C, Lehman R, Perera R et al (2016) Characterisation of trials where marketing purposes have been influential in study design: a descriptive study. Trials 17:31

Begg CB (1985) A measure to aid in the interpretation of published clinical trials. Stat Med 4(1):1–9

Article MathSciNet Google Scholar

Bekelman JE, Li Y, Gross CP (2003) Scope and impact of financial conflicts of interest in biomedical research: a systematic review. JAMA 289(4):454–465

Bero L (2017) Addressing bias and conflict of interest among biomedical researchers. JAMA 317(17):1723–1724

Bombardier C, Laine L, Reicin A, Shapiro D, Burgos-Vargas R, Davis B et al (2000) Comparison of upper gastrointestinal toxicity of Rofecoxib and Naproxen in patients with rheumatoid arthritis. N Engl J Med 343(21):1520–1528

Boutron I, Ravaud P (2018) Misrepresentation and distortion of research in biomedical literature. Proc Natl Acad Sci 115(11):2613–2619

Boutron I, Dutton S, Ravaud P, Altman DG (2010) Reporting and interpretation of randomized controlled trials with statistically nonsignificant results for primary outcomes. JAMA 303(20):2058–2064

Boutron I, Altman DG, Hopewell S, Vera-Badillo F, Tannock I, Ravaud P (2014) Impact of spin in the abstracts of articles reporting results of randomized controlled trials in the field of cancer: the SPIIN randomized controlled trial. J Clin Oncol 32(36):4120–4126

Boutron I, Page MJ, Higgins JPT, Altman DG, Lundh A, Hróbjartsson A (2019) Chapter 7: considering bias and conflicts of interest among the included studies. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (eds) Cochrane handbook for systematic reviews of interventions. Version 6.0 (updated July 2019). Available from www.training.cochrane.org/handbook. Cochrane

Brassington I (2017) The ethics of reporting all the results of clinical trials. Br Med Bull 121(1):19–29

Cardiac Arrhythmia Suppression Trial (CAST) Investigators (1989) Preliminary report: effect of encainide and flecainide on mortality in a randomized trial of arrhythmia suppression after myocardial infarction. N Engl J Med 321(6):406–412

Chan AW, Hróbjartsson A (2018) Promoting public access to clinical trial protocols: challenges and recommendations. Trials 19(1):116

Chan AW, Hróbjartsson A, Haahr MT, Gøtzsche PC, Altman DG (2004a) Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA 291(20):2457–2465

Chan AW, Krleza-Jerić K, Schmid I, Altman DG (2004b) Outcome reporting bias in randomized trials funded by the Canadian Institutes of Health Research. CMAJ 171(7):735–740

Chan AW, Hróbjartsson A, Jørgensen KJ, Gøtzsche PC, Altman DG (2008) Discrepancies in sample size calculations and data analyses reported in randomised trials: comparison of publications with protocols. BMJ 337:a2299

Chan A-W, Tetzlaff JM, Altman DG, Laupacis A, Gøtzsche PC, Krleža-Jerić K et al (2013) SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann Intern Med 158(3):200–207

Chan A-W, Song F, Vickers A, Jefferson T, Dickersin K, Gøtzsche PC et al (2014) Increasing value and reducing waste: addressing inaccessible research. Lancet 383(9913):257–266

Chiu K, Grundy Q, Bero L (2017) ‘Spin’ in published biomedical literature: a methodological systematic review. PLoS Biol 15(9):e2002173

Cowley AJ, Skene A, Stainer K, Hampton JR (1993) The effect of lorcainide on arrhythmias and survival in patients with acute myocardial infarction: an example of publication bias. Int J Cardiol 40(2):161–166

Cronin E, Sheldon T (2004) Factors influencing the publication of health research. Int J Technol Assess Health Care 20(3):351–355

de Vries YA, Roest AM, Turner EH, de Jonge P (2019) Hiding negative trials by pooling them: a secondary analysis of pooled-trials publication bias in FDA-registered antidepressant trials. Psychol Med 49(12):2020–2026

Dechartres A, Atal I, Riveros C, Meerpohl J, Ravaud P (2018) Association between publication characteristics and treatment effect estimates: a meta-epidemiologic study. Ann Intern Med 169:385–393

Dickersin K (1990) The existence of publication bias and risk factors for its occurrence. JAMA 263(10):1385–1389

Dickersin K, Chalmers I (2011) Recognizing, investigating and dealing with incomplete and biased reporting of clinical research: from Francis Bacon to the WHO. J R Soc Med 104(12):532–538

Dickersin K, Min YI (1993) NIH clinical trials and publication bias. Online J Curr Clin Trials Doc No 50

Google Scholar

Dickersin K, Chan S, Chalmers TC, Sacks HS, Smith H (1987) Publication bias and clinical trials. Control Clin Trials 8(4):343–353

Dickersin K, Min Y-I, Meinert CL (1992) Factors influencing publication of research results: follow-up of applications submitted to two Institutional Review Boards. JAMA 267(3):374–378

Duyx B, Urlings MJE, Swaen GMH, Bouter LM, Zeegers MP (2017) Scientific citations favor positive results: a systematic review and meta-analysis. J Clin Epidemiol 88:92–101

Dwan K, Gamble C, Williamson PR, Kirkham JJ (2013) Systematic review of the empirical evidence of study publication bias and outcome reporting bias – an updated review. PLoS One 8(7):1–37

Easterbrook PJ, Gopalan R, Berlin JA, Matthews DR (1991) Publication bias in clinical research. Lancet 337(8746):867–872

Egger M, Smith GD, Schneider M, Minder C (1997) Bias in meta-analysis detected by a simple, graphical test. BMJ 315(7109):629–634

Eyding D, Lelgemann M, Grouven U, Härter M, Kromp M, Kaiser T et al (2010) Reboxetine for acute treatment of major depression: systematic review and meta-analysis of published and unpublished placebo and selective serotonin reuptake inhibitor controlled trials. BMJ 341:c4737

Ferguson JM, Mendels J, Schwartz GE (2002) Effects of reboxetine on Hamilton depression rating scale factors from randomized, placebo-controlled trials in major depression. Int Clin Psychopharmacol 17(2):45–51

Frank RA, Sharifabadi AD, Salameh J-P, McGrath TA, Kraaijpoel N, Dang W et al (2019) Citation bias in imaging research: are studies with higher diagnostic accuracy estimates cited more often? Eur Radiol 29(4):1657–1664

Gehr BT, Weiss C, Porzsolt F (2006) The fading of reported effectiveness. A meta-analysis of randomised controlled trials. BMC Med Res Methodol 6(1):25

Glasziou P, Altman DG, Bossuyt P, Boutron I, Clarke M, Julious S et al (2014) Reducing waste from incomplete or unusable reports of biomedical research. Lancet 383(9913):267–276

Gøtzsche PC (1987) Reference bias in reports of drug trials. Br Med J Clin Res Ed 295(6599):654–656

Hahn S, Williamson PR, Hutton JL (2002) Investigation of within-study selective reporting in clinical research: follow-up of applications submitted to a local research ethics committee. J Eval Clin Pract 8(3):353–359

Hall R, de Antueno C, Webber A (2007) Publication bias in the medical literature: a review by a Canadian research ethics board. Can J Anesth 54(5):380–388

Hart B, Lundh A, Bero L (2012) Effect of reporting bias on meta-analyses of drug trials: reanalysis of meta-analyses. BMJ 344:d7202

Hartling L, Craig WR, Russell K, Stevens K, Klassen TP (2004) Factors influencing the publication of randomized controlled trials in child health research. Arch Pediatr Adolesc Med 158(10):983–987

Hartling L, Featherstone R, Nuspl M, Shave K, Dryden D, Vandermeer B (2017) Grey literature in systematic reviews: a cross-sectional study of the contribution of non-English reports, unpublished studies and dissertations to the results of meta-analyses in child-relevant reviews. Syst Rev 17:64

Heres S, Wagenpfeil S, Hamann J, Kissling W, Leucht S (2004) Language bias in neuroscience – is the Tower of Babel located in Germany? Eur Psychiatry 19(4):230–232

Hetherington J, Dickersin K, Chalmers I, Meinert CL (1989) Retrospective and prospective identification of unpublished controlled trials: lessons from a survey of obstetricians and pediatricians. Pediatrics 84(2):374–380

Hill KP, Ross JS, Egilman DS, Krumholz HM (2008) The ADVANTAGE seeding trial: a review of internal documents. Ann Intern Med 149(4):251

Hopewell S, Clarke M, Stewart L, Tierney J (2007) Time to publication for results of clinical trials. Cochrane Database Syst Rev 2007(2):MR000011

Hopewell S, Loudon K, Clarke MJ, Oxman AD, Dickersin K (2009) Publication bias in clinical trials due to statistical significance or direction of trial results. Cochrane Database Syst Rev 1:MR000006

Jefferson T, Doshi P, Boutron I, Golder S, Heneghan C, Hodkinson A et al (2018) When to include clinical study reports and regulatory documents in systematic reviews. BMJ Evid-Based Med 23(6):210–217

Jennions MD, Møller AP (2002) Relationships fade with time: a meta-analysis of temporal trends in publication in ecology and evolution. Proc R Soc Lond B Biol Sci 269(1486):43–48

Jones CW, Keil LG, Holland WC, Caughey MC, Platts-Mills TF (2015) Comparison of registered and published outcomes in randomized controlled trials: a systematic review. BMC Med 13:282

Jüni P, Holenstein F, Sterne J, Bartlett C, Egger M (2002) Direction and impact of language bias in meta-analyses of controlled trials: empirical study. Int J Epidemiol 31(1):115–123

Kicinski M (2014) How does under-reporting of negative and inconclusive results affect the false-positive rate in meta-analysis? A simulation study. BMJ Open 4(8):e004831

Kirkham JJ, Dwan KM, Altman DG, Gamble C, Dodd S, Smyth R, Williamson PR (2010) The impact of outcome reporting bias in randomised controlled trials on a cohort of systematic reviews. BMJ 340:c365

Kirsch I, Deacon BJ, Huedo-Medina TB, Scoboria A, Moore TJ, Johnson BT (2008) Initial severity and antidepressant benefits: a meta-analysis of data submitted to the Food and Drug Administration. PLoS Med 5(2):e45

Kjaergard LL, Gluud C (2002) Citation bias of hepato-biliary randomized clinical trials. J Clin Epidemiol 55(4):407–410

Lee KP, Boyd EA, Holroyd-Leduc JM, Bacchetti P, Bero LA (2006) Predictors of publication: characteristics of submitted manuscripts associated with acceptance at major biomedical journals. Med J Aust 184(12):621–626

Lexchin J, Bero LA, Djulbegovic B, Clark O (2003) Pharmaceutical industry sponsorship and research outcome and quality: systematic review. BMJ 326(7400):1167–1170

Li T, Mayo-Wilson E, Fusco N, Hong H, Dickersin K (2018) Caveat emptor: the combined effects of multiplicity and selective reporting. Trials 19(1):497

Liebeskind DS, Kidwell CS, Sayre JW, Saver JL (2006) Evidence of publication bias in reporting acute stroke clinical trials. Neurology 67(6):973–979

Lundh A, Lexchin J, Mintzes B, Schroll JB, Bero L (2017) Industry sponsorship and research outcome. Cochrane Database Syst Rev 2(2):MR000033

Mahoney MJ (1977) Publication prejudices: an experimental study of confirmatory bias in the peer review system. Cogn Ther Res 1(2):161–175

Malberg JE, Eisch AJ, Nestler EJ, Duman RS (2000) Chronic antidepressant treatment increases neurogenesis in adult rat hippocampus. J Neurosci 20(24):9104–9110

Marks-Anglin A, Chen Y (2020) A historical review of publication bias. Res Synth Methods 11(6):725–742

Matheson A (2017) Marketing trials, marketing tricks – how to spot them and how to stop them. Trials 18:105

Mayo-Wilson E, Li T, Fusco N, Bertizzolo L, Canner JK, Cowley T et al (2017) Cherry-picking by trialists and meta-analysts can drive conclusions about intervention efficacy. J Clin Epidemiol 91(Suppl C):95–110

Mayo-Wilson E, Fusco N, Li T, Hong H, Canner JK, Dickersin K et al (2019) Harms are assessed inconsistently and reported inadequately Part 2: nonsystematic adverse events. J Clin Epidemiol 113:11–19

McCormack JP, Rangno R (2002) Digging for data from the COX-2 trials. CMAJ 166(13):1649–1650

McGauran N, Wieseler B, Kreis J, Schüler Y-B, Kölsch H, Kaiser T (2010) Reporting bias in medical research – a narrative review. Trials 11:37

Melander H, Ahlqvist-Rastad J, Meijer G, Beermann B (2003) Evidence b(i)ased medicine – selective reporting from studies sponsored by pharmaceutical industry: review of studies in new drug applications. BMJ 326(7400):1171–1173

Morrison A, Polisena J, Husereau D, Moulton K, Clark M, Fiander M, Mierzwinski-Urban M, Clifford T, Hutton B, Rabb D (2012) The effect of English-language restriction on systematic review-based meta-analyses: a systematic review of empirical studies. Int J Technol Assess Health Care 28:138–144

Mukherjee D, Nissen SE, Topol EJ (2001) Risk of cardiovascular events associated with selective COX-2 inhibitors. JAMA 286(8):954–959

Olson CM, Rennie D, Cook D, Dickersin K, Flanagin A, Hogan JW et al (2002) Publication bias in editorial decision making. JAMA 287(21):2825–2828

Østengaard L, Lundh A, Tjørnhøj-Thomsen T, Abdi S, Gelle MHA, Stewart LA, Boutron I, Hróbjartsson A (2020) Influence and management of conflicts of interest in randomised clinical trials: qualitative interview study. BMJ 371:m3764

Ottenbacher K, DiFabio RP (1985) Efficacy of spinal manipulation/mobilization therapy. A meta-analysis. Spine 10(9):833–837

Page MJ, McKenzie JE, Higgins JPT (2018) Tools for assessing risk of reporting biases in studies and syntheses of studies: a systematic review. BMJ Open 8(3):e019703

Page MJ, Higgins JPT, Sterne JAC (2019) Chapter 13: assessing risk of bias due to missing results in a synthesis. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (eds) Cochrane handbook for systematic reviews of interventions. Version 6.0 (updated July 2019). Available from www.training.cochrane.org/handbook . Cochrane

Psaty BM, Kronmal RA (2008) Reporting mortality findings in trials of Rofecoxib for Alzheimer disease or cognitive impairment: a case study based on documents from Rofecoxib litigation. JAMA 299(15):1813–1817

Scherer RW, Ugarte-Gil C, Schmucker C, Meerpohl JJ (2015) Authors report lack of time as main reason for unpublished research presented at biomedical conferences: a systematic review. J Clin Epidemiol 68(7):803–810

Scherer RW, Meerpohl JJ, Pfeifer N, Schmucker C, Schwarzer G, von Elm E (2018) Full publication of results initially presented in abstracts. Cochrane Database Syst Rev 11(11):MR000005

Schmucker C, Schell LK, Portalupi S, Oeller P, Cabrera L, Bassler D, Schwarzer G, Scherer RW, Antes G, von Elm E, Meerpohl JJ (2014) Extent of non-publication in cohorts of studies approved by research ethics committees or included in trial registries. PLoS One 9:e114023

Schmucker CM, Blumle A, Schell LK, Schwarzer G, Oeller P, Cabrera L, von Elm E, Briel M, Meerpohl JJ (2017) Systematic review finds that study data not published in full text articles have unclear impact on meta-analyses results in medical research. PLoS One 12:e0176210

Simes RJ (1986) Publication bias: the case for an international registry of clinical trials. J Clin Oncol 4(10):1529–1541

Sismondo S (2007) Ghost management: how much of the medical literature is shaped behind the scenes by the pharmaceutical industry? PLoS Med 4(9):e286

Sismondo S (2008a) Pharmaceutical company funding and its consequences: a qualitative systematic review. Contemp Clin Trials 29(2):109–113

Sismondo S (2008b) How pharmaceutical industry funding affects trial outcomes: causal structures and responses. Soc Sci Med 66(9):1909–1914

Smith ML (1980) Publication bias and meta-analysis. Eval Educ 4:22–24

Smyth RM, Kirkham JJ, Jacoby A, Altman DG, Gamble C, Williamson PR (2011) Frequency and reasons for outcome reporting bias in clinical trials: interviews with trialists. BMJ 342:c7153

Song F, Parekh S, Hooper L, Loke YK, Ryder J, Sutton AJ, Hing C, Kwok CS, Pang C, Harvey I (2010) Dissemination and publication of research findings: an updated review of related biases. Health Technol Assess 14(8):iii, ix–xi, 1–193

Song F, Loke Y, Hooper L (2014) Why are medical and health-related studies not being published? A systematic review of reasons given by investigators. PLoS One 9(10):e110418

Sterling TD (1959) Publication decisions and their possible effects on inferences drawn from tests of significance – or vice versa. J Am Stat Assoc 54(285):30–34

Stern JM, Simes RJ (1997) Publication bias: evidence of delayed publication in a cohort study of clinical research projects. BMJ 315(7109):640–645

Tendal B, Nüesch E, Higgins JPT, Jüni P, Gøtzsche PC (2011) Multiplicity of data in trial reports and the reliability of meta-analyses: empirical study. BMJ 343:d4829

Turner EH, Matthews AM, Linardatos E, Tell RA, Rosenthal R (2008) Selective publication of antidepressant trials and its influence on apparent efficacy. N Engl J Med 358(3):252–260

van Lent M, Overbeke J, Out HJ (2014) Role of editorial and peer review processes in publication bias: analysis of drug trials submitted to eight medical journals. PLoS One 9(8):e104846

Vandekerckhove P, O’Donovan PA, Lilford RJ, Harada TW (1993) Infertility treatment: from cookery to science. The epidemiology of randomised controlled trials. BJOG Int J Obstet Gynaecol 100(11):1005–1036

Vedula SS, Bero L, Scherer RW, Dickersin K (2009) Outcome reporting in industry-sponsored trials of gabapentin for off-label use. N Engl J Med 361(20):1963–1971

Vedula SS, Goldman PS, Rona IJ, Greene TM, Dickersin K (2012) Implementation of a publication strategy in the context of reporting biases. A case study based on new documents from Neurontin litigation. Trials 13:136

Vedula SS, Li T, Dickersin K (2013) Differences in reporting of analyses in internal company documents versus published trial reports: comparisons in industry-sponsored trials in off-label uses of gabapentin. PLoS Med 10(1):e1001378

Vera Badillo FE, Shapiro R, Ocana A, Amir E, Tannock I (2012) Bias in reporting of endpoints of efficacy and toxicity in randomized clinical trials (RCTs) for women with breast cancer (BC). J Clin Oncol 30(Suppl 15):6043–6043

Vevea JL, Coburn K, Sutton A (2019) Publication bias. In: Cooper H, Hedges LV, Valentine JC (eds) The handbook of research synthesis and meta-analysis. Russell Sage Foundation, pp 383–430, New York, USA

von Elm E, Poglia G, Walder B, Tramèr MR (2004) Different patterns of duplicate publication: an analysis of articles used in systematic reviews. JAMA 291(8):974–980

Wallach JD, Krumholz HM (2019) Not reporting results of a clinical trial is academic misconduct. Ann Intern Med 171(4):293

Wang AT, McCoy CP, Murad MH, Montori VM (2010) Association between industry affiliation and position on cardiovascular risk with rosiglitazone: cross sectional systematic review. BMJ 340:c1344

Download references

Author information

Authors and affiliations

Malone Center for Engineering in Healthcare, Whiting School of Engineering, The Johns Hopkins University, Baltimore, MD, USA

S. Swaroop Vedula

Cochrane Denmark and Centre for Evidence-Based Medicine Odense, University of Southern Denmark, Odense, Denmark

Asbjørn Hróbjartsson

School of Public Health and Preventive Medicine, Monash University, Melbourne, VIC, Australia

Matthew J. Page


Corresponding author

Correspondence to S. Swaroop Vedula.

Editor information

Editors and affiliations

Department of Surgery, Division of Surgical Oncology, Brigham and Women’s Hospital, Harvard Medical School, Boston, MA, USA

Steven Piantadosi

Department of Epidemiology, School of Public Health, Johns Hopkins University, Baltimore, MD, USA

Curtis L. Meinert

Section Editor information

Department of Ophthalmology, University of Colorado Anschutz Medical Campus, Aurora, CO, USA

Tianjing Li

The Johns Hopkins Center for Clinical Trials and Evidence Synthesis, Johns Hopkins School of Public Health, Baltimore, MD, USA

Brigham and Women’s Hospital, Harvard Medical School, Boston, MA, USA


Copyright information

© 2022 Springer Nature Switzerland AG

About this entry

Cite this entry

Vedula, S.S., Hróbjartsson, A., Page, M.J. (2022). Reporting Biases. In: Piantadosi, S., Meinert, C.L. (eds) Principles and Practice of Clinical Trials. Springer, Cham. https://doi.org/10.1007/978-3-319-52636-2_183


DOI: https://doi.org/10.1007/978-3-319-52636-2_183

Published: 20 July 2022

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-52635-5

Online ISBN: 978-3-319-52636-2

eBook Packages: Mathematics and Statistics Reference Module Computer Science and Engineering


Health Research Reporting Guidelines, Study Execution Manuals, Critical Appraisal, Risk of Bias, & Non-reporting Biases


What are reporting biases?

List of reporting biases, tools to assess non-reporting biases.


For additional information, contact:

Helena VonVille, MLS, MPH

- HSLS Pitt Public Health Liaison

- HSLS Research & Instruction Librarian


Cochrane Handbook for Systematic Reviews of Interventions

Chapter 13, "Assessing risk of bias due to missing results in a synthesis", focuses on the causes of missing evidence, such as studies that are not published or non-significant outcomes that are not reported. It then describes a framework for assessing the risk of bias due to missing results.

The language on this topic has changed over the years.

- PRISMA (2009) referred to this as "risk of bias across studies"

- PRISMA-P (2015; Item 16) refers to this as "meta-biases"

- PRISMA 2020 (Item 14) refers to this as "reporting bias assessment"

- The Cochrane Handbook also refers to these as "non-reporting biases"

While the terminology has changed, the intent is the same:

- Determine what evidence is missing in the final analysis; and

- Determine how that impacts the summary of evidence.

Where are these reported in an SR?

Any systematic deviation that could skew the results away from the truth should be evaluated and reported. The PRISMA reporting guidelines for protocols (PRISMA-P) and for completed reviews (PRISMA 2020) both include items for assessing these types of bias:

- PRISMA-P Item 16: Specify any planned assessment of meta-bias(es) (sometimes called non-reporting biases across studies)

- PRISMA 2020 Item 14: Describe any methods used to assess risk of bias due to missing results in a synthesis (arising from reporting biases) (see the grey box at the bottom of page 18 of the PRISMA E&E)

- PRISMA 2020 Item 21: Present assessments of risk of bias due to missing results (arising from reporting biases) for each synthesis assessed.
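As one concrete example of a method a review might describe under PRISMA 2020 Item 14, the sketch below implements Egger's regression test for funnel-plot asymmetry in plain Python. It is a minimal sketch, assuming each study contributes an effect estimate and its standard error on the same scale; the function name `egger_test` and the example data are hypothetical. The test regresses the standardized effect (effect / SE) on precision (1 / SE) by ordinary least squares; an intercept far from zero suggests small-study effects. Such asymmetry may arise from reporting biases but also from other causes (for example, genuine heterogeneity), so it is a signal to investigate rather than proof of missing results.

```python
import math


def egger_test(effects, std_errors):
    """Egger's regression test for funnel-plot asymmetry (illustrative sketch).

    Regresses the standardized effect (effect / SE) on precision (1 / SE)
    by ordinary least squares. Returns (intercept, t_statistic); compare the
    t-statistic with a t distribution on n - 2 degrees of freedom.
    """
    if len(effects) != len(std_errors) or len(effects) < 3:
        raise ValueError("need >= 3 studies with matching effects and SEs")
    y = [e / s for e, s in zip(effects, std_errors)]  # standardized effects
    x = [1.0 / s for s in std_errors]                 # precisions
    n = len(y)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sxx
    intercept = mean_y - slope * mean_x
    # Residual variance and the standard error of the OLS intercept
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sigma2 = sum(r * r for r in residuals) / (n - 2)
    se_intercept = math.sqrt(sigma2 * (1.0 / n + mean_x ** 2 / sxx))
    return intercept, intercept / se_intercept


# Hypothetical data: smaller studies (larger SE) report larger effects.
precisions = [1, 2, 3, 4, 5]
standardized = [2.6, 2.8, 3.5, 4.2, 4.4]  # constructed with intercept 2, slope 0.5
ses = [1 / p for p in precisions]
effs = [z / p for z, p in zip(standardized, precisions)]
intercept, t_stat = egger_test(effs, ses)
print(f"intercept={intercept:.2f}, t={t_stat:.1f}")  # prints: intercept=2.00, t=10.4
```

With few studies the test has low power, which is one reason review guidance generally discourages relying on it when only a handful of studies are available.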

What are non-reporting biases?

Non-reporting biases encompass a variety of categories. The Catalogue of Bias (Richards GC, Onakpoya IJ. Reporting biases. In: Catalogue of Bias, 2019) defines reporting bias as "A systematic distortion that arises from the selective disclosure or withholding of information by parties involved in the design, conduct, analysis, or dissemination of a study or research findings". It lists the following types of reporting biases:

- Publication bias

- Time-lag bias

- Multiple (duplicate) publication bias

- Location bias

- Citation bias

- Language bias

- Outcome reporting bias

This page will be updated as new tools and resources become available.

Risk of Bias Tools (Cochrane)

- A cribsheet summarizing the tool.

- A template for completing the assessment.

- Full guidance for the updated version is not available yet.

Chiocchia V, Nikolakopoulou A, Higgins JPT, Page MJ, Papakonstantinou T, Cipriani A, Furukawa TA, Siontis GCM, Egger M, Salanti G. ROB-MEN: a tool to assess risk of bias due to missing evidence in network meta-analysis. BMC Med. 2021 Nov 23;19(1):304. doi: 10.1186/s12916-021-02166-3. PMID: 34809639; PMCID: PMC8609747.


- Last Updated: May 23, 2024 2:33 PM

- URL: https://hsls.libguides.com/reporting-study-tools

- Open access

- Published: 13 April 2010

Reporting bias in medical research - a narrative review

Natalie McGauran, Beate Wieseler, Julia Kreis, Yvonne-Beatrice Schüler, Heike Kölsch & Thomas Kaiser

Trials volume 11, Article number: 37 (2010)


Reporting bias represents a major problem in the assessment of health care interventions. Several prominent cases have been described in the literature, for example, in the reporting of trials of antidepressants, Class I anti-arrhythmic drugs, and selective COX-2 inhibitors. The aim of this narrative review is to gain an overview of reporting bias in the medical literature, focussing on publication bias and selective outcome reporting. We explore whether these types of bias have been shown in areas beyond the well-known cases noted above, in order to gain an impression of how widespread the problem is. For this purpose, we screened relevant articles on reporting bias that had previously been obtained by the German Institute for Quality and Efficiency in Health Care in the context of its health technology assessment reports and other research work, together with the reference lists of these articles.

We identified reporting bias in 40 indications comprising around 50 different pharmacological, surgical (e.g. vacuum-assisted closure therapy), diagnostic (e.g. ultrasound), and preventive (e.g. cancer vaccines) interventions. Regarding pharmacological interventions, cases of reporting bias were, for example, identified in the treatment of the following conditions: depression, bipolar disorder, schizophrenia, anxiety disorder, attention-deficit hyperactivity disorder, Alzheimer's disease, pain, migraine, cardiovascular disease, gastric ulcers, irritable bowel syndrome, urinary incontinence, atopic dermatitis, diabetes mellitus type 2, hypercholesterolaemia, thyroid disorders, menopausal symptoms, various types of cancer (e.g. ovarian cancer and melanoma), various types of infections (e.g. HIV, influenza and hepatitis B), and acute trauma. Many cases involved the withholding of study data by manufacturers and regulatory agencies or the active attempt by manufacturers to suppress publication. The ascertained effects of reporting bias included the overestimation of efficacy and the underestimation of safety risks of interventions.

In conclusion, reporting bias is a widespread phenomenon in the medical literature. Mandatory prospective registration of trials and public access to study data via results databases need to be introduced on a worldwide scale. This will allow for an independent review of research data, help fulfil ethical obligations towards patients, and ensure a basis for fully-informed decision making in the health care system.

Peer Review reports

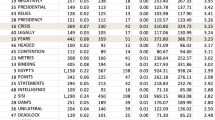

The reporting of research findings may depend on the nature and direction of results, which is referred to as "reporting bias" [ 1 , 2 ]. For example, studies in which interventions are shown to be ineffective are sometimes not published, meaning that only a subset of the relevant evidence on a topic may be available [ 1 , 2 ]. Various types of reporting bias exist (Table 1 ), including publication bias and outcome reporting bias, which concern bias from missing outcome data on two levels: the study level, i.e. "non-publication due to lack of submission or rejection of study reports", and the outcome level, i.e. "the selective non-reporting of outcomes within published studies" [ 3 ].
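The study-level mechanism described above, in which trials showing an intervention to be ineffective go unpublished, can be illustrated with a small simulation. This is a minimal sketch under simplifying assumptions that do not come from the review: every trial estimates the same true standardized effect with normally distributed error and a known standard error, and a trial is "published" only if its result is positive and statistically significant (z > 1.96). The function name and all parameter values are hypothetical.

```python
import random
import statistics


def simulate_publication_bias(true_effect=0.2, n_per_arm=50, n_trials=2000, seed=1):
    """Compare the mean effect across all simulated trials with the mean across
    only the trials that would be 'published' (positive and p < 0.05).

    Assumes a standardized mean difference with unit SD in each arm, so the
    standard error of the difference is sqrt(2 / n_per_arm).
    """
    rng = random.Random(seed)
    se = (2 / n_per_arm) ** 0.5
    all_estimates, published = [], []
    for _ in range(n_trials):
        estimate = rng.gauss(true_effect, se)  # observed effect in one trial
        all_estimates.append(estimate)
        if estimate / se > 1.96:               # positive, two-sided p < 0.05
            published.append(estimate)
    if not published:
        raise RuntimeError("no trial reached significance; increase n_trials")
    return (statistics.mean(all_estimates),
            statistics.mean(published),
            len(published) / n_trials)


all_mean, published_mean, share = simulate_publication_bias()
print(f"all trials: {all_mean:.2f}  published only: {published_mean:.2f}  "
      f"share published: {share:.0%}")
```

Under these hypothetical settings, only about 17% of trials are expected to reach significance, and their average effect is expected to be roughly 0.5 against a true effect of 0.2: the surviving trials are those whose random error happened to push them over the threshold, so a synthesis restricted to them inherits the inflation.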

Reporting bias on a study level

Results of clinical research are largely underreported or reported with delay. Various analyses of research protocols submitted to institutional review boards and research ethics committees in Europe, the United States, and Australia found that on average, only about half of the protocols had been published, with higher publication rates in Anglo-Saxon countries [ 4 – 10 ].

Similar analyses have been performed of trials submitted to regulatory authorities: a cohort study of trials supporting new drugs approved by the Food and Drug Administration (FDA) identified over 900 trials of 90 new drugs in FDA reviews; only 43% of the trials were published [ 11 ]. Wide variations in publication rates have been shown for specific indications [ 12 – 16 ]. The selective submission of clinical trials with positive outcomes to regulatory authorities has also been described [ 17 ]. Even if trials are published, the time lapse until publication may be substantial [ 8 , 18 , 19 ].

There is no simple classification of a clinical trial into "published" or "unpublished", as varying degrees of publication exist. These range from full-text publications in peer-reviewed journals that are easily identifiable through a search in bibliographic databases, to study information entered in trial registries, so-called grey literature (e.g. abstracts and working papers), and data on file in drug companies and regulatory agencies, which may or may not be provided to health technology assessment (HTA) agencies or other researchers after being requested. If such data are transmitted, they may then be fully published or not (e.g. the German Institute for Quality and Efficiency in Health Care [Institut für Qualität und Wirtschaftlichkeit im Gesundheitswesen, IQWiG] publishes all data used in its assessment reports [ 20 ], whereas the UK National Institute for Clinical Excellence [NICE] may accept "commercial in confidence" data [ 21 ]).

Even if studies are presented at meetings, this does not necessarily mean subsequent full publication: an analysis of nearly 30,000 meeting abstracts from various disciplines found a publication rate of 63% for randomized or controlled clinical trials [ 22 ].

Reporting bias on an outcome level

Selective reporting within a study may involve (a) selective reporting of analyses or (b) selective reporting of outcomes. This may include, for example, the reporting of (a) per-protocol (PP) versus intention-to-treat (ITT) analyses or adjusted versus unadjusted analyses, and (b) outcomes from different time points or statistically significant versus non-significant outcomes [ 3 , 23 ].
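The effect of choosing among outcomes, as in (b) above, can be quantified. Assuming, for illustration only, that a trial measures k independent outcomes, none of which is truly affected by the intervention, and that only the outcome with the smallest p-value is reported, the chance of having at least one "significant" result to report is 1 - (1 - α)^k. The sketch below checks that formula against a simulation; the function names and the independence assumption are hypothetical, and correlated outcomes would inflate the rate less.

```python
import random


def prob_any_significant(k, alpha=0.05):
    """Chance that at least one of k independent null outcomes has p < alpha."""
    return 1 - (1 - alpha) ** k


def simulate_min_p_reporting(k, alpha=0.05, n_trials=20000, seed=7):
    """Fraction of null trials that could report a 'significant' outcome
    if only the outcome with the smallest p-value were published."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        # Under the null hypothesis each p-value is uniform on [0, 1).
        p_values = [rng.random() for _ in range(k)]
        if min(p_values) < alpha:
            hits += 1
    return hits / n_trials


for k in (1, 5, 10):
    print(k, round(prob_any_significant(k), 3), round(simulate_min_p_reporting(k), 3))
```

With one outcome the false-positive rate stays at the nominal 5%; with five independent outcomes it rises to about 23% (1 - 0.95^5 ≈ 0.226), and with ten to about 40%.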

Various reviews have found extensive selective reporting in study publications [ 3 , 14 , 24 – 28 ]. For example, comparisons of publications with study protocols have shown that primary outcomes had been newly introduced, omitted, or changed in about 40% to 60% of cases [ 3 , 24 ]. Selective reporting particularly concerns the underreporting of adverse events [ 12 , 29 – 32 ]. For example, an analysis of 192 randomized drug trials in various indications showed that only 46% of publications stated the frequency of specific reasons for treatment discontinuation due to toxicity [ 29 ]. Outcomes are not only selectively reported, but negative results are reported in a positive manner and conclusions are often not supported by results data [ 16 , 26 , 33 – 35 ]. For instance, a comparison of study characteristics reported in FDA reviews of New Drug Applications (NDAs) with those reported in publications found that 9 of 99 conclusions had been changed in the publications, all in favour of the new drug [ 26 ].

Factors associated with reporting bias

Characteristics of published studies

The fact that studies with positive or favourable results are more likely to be published than those with negative or unfavourable results was already addressed in the 1950s [ 36 ], and has since been widely confirmed [ 3 , 6 – 8 , 14 , 37 – 40 ]. Studies with positive or favourable results have been associated with various other factors such as faster publication [ 8 , 18 , 19 , 37 ], publication in higher impact factor journals [ 7 , 41 ], a greater number of publications [ 7 ] (including covert duplicate publications [ 42 ]), more frequent citation [ 43 – 45 ], and more likely publication in English [ 46 ].

Several other factors have been linked to successful publication, for example, methodological quality [ 47 ], study type [ 47 ], sample size [ 5 , 7 , 48 ], multicentre status [ 5 , 6 , 41 ], and non-commercial funding [ 5 , 6 , 49 , 50 ]. However, for some factors, these associations are inconsistent [ 6 , 37 ].

Submission and rejection of studies

One of the main reasons for the non-publication of negative studies seems to be the non-submission of manuscripts by investigators, not the rejection of manuscripts by medical journals. A follow-up of studies approved by US institutional review boards showed that only 6 of 124 unpublished studies had actually been rejected for publication [ 6 ]. A prospective cohort study of 745 manuscripts submitted to JAMA showed no statistically significant difference in publication rates between studies with positive and those with negative results [ 51 ], which has been confirmed by further analyses of other journals [ 47 , 52 ]. Author surveys have shown that the most common reasons for not submitting papers were negative results and a lack of interest, time, or other resources [ 39 – 41 , 53 ].

The role of the pharmaceutical industry

An association has been shown between industry sponsorship or industry affiliation of authors and positive research outcomes and conclusions, both in publications of primary studies and in systematic reviews [ 49 , 54 – 63 ]. For example, in a systematic review of the scope and impact of financial conflicts of interest in biomedical research, an aggregation of the results of 8 analyses of the relation between industry sponsorship and outcomes showed a statistically significant association between industry sponsorship and pro-industry conclusions [ 55 ]. A comparison of the methodological quality and conclusions in Cochrane reviews with those in industry-supported meta-analyses found that the latter were less transparent, less critical of methodological limitations of the included trials, and drew more favourable conclusions [ 57 ]. In addition, publication constraints and active attempts to prevent publication have been identified in industry-sponsored research [ 55 , 64 – 68 ]. Other aspects of industry involvement, such as design bias, are beyond the scope of this paper.

Rationale, aim and procedure

IQWiG produces HTA reports of drug and non-drug interventions for the decision-making body of the statutory health care funds, the Federal Joint Committee. The process of report production includes requesting information on published and unpublished studies from manufacturers; unfortunately, compliance by manufacturers is inconsistent, as recently shown in the attempted concealment of studies on antidepressants [ 69 ]. Reporting bias in antidepressant research has been shown before [ 16 , 70 ]; other well-known cases include Class I anti-arrhythmic drugs [ 71 , 72 ] and selective COX-2 inhibitors [ 73 , 74 ].

The aim of this narrative review was to gain an overview of reporting bias in the medical literature, focussing on publication bias and selective outcome reporting. We wished to explore whether this type of bias has been shown in areas beyond the well-known cases noted above, in order to obtain an impression of how widespread this problem is. The review was based on the screening of full-text publications on reporting bias that had either been obtained by the Institute in the context of its HTA reports and other research work or were identified by screening the reference lists of the publications already available at the Institute. The retrieved examples were organized according to indications and interventions. We also discuss the effects of reporting bias, as well as the measures that have been implemented to solve this problem.

The term "reporting bias" traditionally refers to the reporting of clinical trials and other types of studies. If the term is extended beyond experimental settings, for example, to the withholding of information on any beneficial medical innovation, then an early example of reporting bias was described by Rosenberg in his article "Secrecy in medical research", which recounts the invention of the obstetrical forceps. The device was developed by the Chamberlen brothers in Europe in the 17th century; however, it was kept secret for commercial reasons for 3 generations, and as a result, many women and neonates died during childbirth [ 75 ]. In the context of our paper, we also considered this extended definition of reporting bias.

We identified reporting bias in 40 indications comprising around 50 different interventions. Examples were found in various sources, e.g. journal articles comparing published versus unpublished data, reviews of reporting bias, editorials, letters to the editor, newspaper reports, expert and government reports, books, and online sources. The following text summarizes the information presented in these examples. More details and references to the background literature are included in Additional file 1 : Table S2.

Mental and behavioural disorders

Reporting bias is common in psychiatric research (see below), and this includes industry-sponsorship bias [ 76 – 82 ].

Turner et al compared FDA reviews of antidepressant trials including over 12,000 patients with the matching publications and found that 37 out of 38 trials viewed as positive by the FDA were published [ 16 ]. Of the 36 trials having negative or questionable results according to the FDA, 22 were unpublished and 11 of the 14 published studies conveyed a positive outcome. According to the publications, 94% of the trials had positive results, which was in contrast to the proportion reported by the FDA (51%). The overall increase in effect size in the published trials was 32%. In a meta-analysis of data from antidepressant trials submitted to the FDA, Kirsch et al requested data on 6 antidepressants from the FDA under the Freedom of Information Act. However, the FDA did not disclose relevant data from 9 of 47 trials, all of which failed to show a statistically significant benefit over placebo. Data from 4 of these trials were available on the GlaxoSmithKline (GSK) website. In total, the missing data represented 38% of patients in sertraline trials and 23% of patients in citalopram trials. The analysis of trials investigating the 4 remaining antidepressants showed that drug-placebo differences in antidepressant efficacy were relatively small, even for severely depressed patients [ 83 ].
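The two proportions in Turner et al's comparison (94% positive according to the publications versus 51% according to the FDA) can be reconstructed from the trial counts given above. The following is a minimal arithmetic sketch, assuming that the publications conveying a positive result are the 37 published FDA-positive trials plus the 11 published negative or questionable trials that conveyed a positive outcome; the variable names are illustrative only.

```python
# Trial counts from Turner et al's comparison of FDA reviews with publications
fda_positive = 38         # trials judged positive by the FDA
fda_negative = 36         # trials judged negative or questionable by the FDA
published_positive = 37   # FDA-positive trials that were published
unpublished_negative = 22 # FDA-negative/questionable trials left unpublished
spun_positive = 11        # published negative/questionable trials conveying a positive outcome

# Published negative/questionable trials: 36 - 22 = 14
published_negative = fda_negative - unpublished_negative

total_trials = fda_positive + fda_negative                 # 74 trials in total
total_published = published_positive + published_negative  # 51 published trials

# Share of all trials that the FDA judged positive
fda_share = fda_positive / total_trials
# Share of published trials that appeared positive (assumption stated above)
published_share = (published_positive + spun_positive) / total_published

print(f"FDA: {fda_share:.0%}, publications: {published_share:.0%}")
# prints: FDA: 51%, publications: 94%
```

Under this assumption, 48 of 51 publications appear positive, while the FDA judged only 38 of 74 trials positive, which matches the percentages reported.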

Selective serotonin reuptake inhibitors (SSRIs)

One of the biggest controversies surrounding unpublished data was the withholding of efficacy and safety data from SSRI trials. In a lawsuit launched by the Attorney General of the State of New York it was alleged that GSK had published positive information about the paediatric use of paroxetine in major depressive disorder (MDD), but had concealed negative safety and efficacy data [ 84 ]. The company had conducted at least 5 trials on the off-label use of paroxetine in children and adolescents but published only one, which showed mixed results for efficacy. The results of the other trials, which did not demonstrate efficacy and suggested a possible increased risk of suicidality, were suppressed [ 84 ]. As part of a legal settlement, GSK agreed to establish an online clinical trials registry containing results summaries for all GSK-sponsored studies conducted after a set date [ 85 , 86 ].

Whittington et al performed a systematic review of published versus unpublished data on SSRIs in childhood depression. While published data indicated a favourable risk-benefit profile for some SSRIs, the inclusion of unpublished data indicated a potentially unfavourable risk-benefit profile for all SSRIs investigated except fluoxetine [ 70 ].

Newer antidepressants

IQWiG published the preliminary results of an HTA report on reboxetine, a selective norepinephrine reuptake inhibitor, and other antidepressants. At least 4600 patients had participated in 16 reboxetine trials, but the majority of data were unpublished. Despite a request for information, the manufacturer Pfizer refused to provide these data. Only data on about 1600 patients were analysable, and IQWiG concluded that due to the risk of publication bias, no statement on the benefit or harm of reboxetine could be made [ 69 , 87 ]. The preliminary HTA report mentioned above also included an assessment of mirtazapine, a noradrenergic and specific serotonergic antidepressant. Four potentially relevant trials were identified in addition to 27 trials included in the assessment, but the manufacturer Essex Pharma did not provide the study reports. Regarding the other trials, the manufacturer did not send the complete study reports, so the full analyses were not available. IQWiG concluded that the results of the assessment of mirtazapine may have been biased by unpublished data [ 69 , 87 ]. After the behaviour of Pfizer and Essex Pharma had been widely publicized, the companies provided the majority of study reports for the final HTA report. The preliminary report's conclusion on the effects of mirtazapine was not affected by the additional data. For reboxetine, the analysis of the published and unpublished data changed the conclusion from "no statement possible" to "no benefit proven" [ 88 ].

Bipolar disorder

Lamotrigine.

A review by Nassir Ghaemi et al of the data on lamotrigine in bipolar disorder provided on the GSK website showed that, although data from negative trials were available on the website, the studies had either not been published in detail or the publications emphasized positive secondary outcomes instead of negative primary ones. Outside of the primary area of efficacy (prophylaxis of mood episodes), the drug showed very limited efficacy in indications such as acute bipolar depression, for which clinicians were supporting its use [ 35 ].

Gabapentin, a GABA analogue, was approved by the FDA in 1993 for a certain type of epilepsy, and in 2002 for postherpetic neuralgia. As of February 1996, 83% of gabapentin use was for epilepsy, and 17% for off-label indications (see the expert report by Abramson [ 89 ]). As the result of a comprehensive marketing campaign by Pfizer, the number of patients in the US taking gabapentin rose from about 430,000 to nearly 6 million between 1996 and 2001; this increase was solely due to off-label use for indications, including bipolar disorder. As of September 2001, 93.5% of gabapentin use was for off-label indications [ 89 ]. In a further expert report, Dickersin noted "extensive evidence of reporting bias" [ 34 ], which she further analysed in a recent publication with Vedula et al [ 90 ]. Concerning the trials of gabapentin for bipolar disorders, 2 of the 3 trials (all published) were negative for the primary outcome. However, these publications showed "extensive spin and misrepresentation of data" [ 34 ].

Schizophrenia

The Washington Post reported that a trial on quetiapine, an atypical antipsychotic, was "silenced" in 1997, the same year it was approved by the FDA to treat schizophrenia. The study ("Study 15") was not published. Patients taking quetiapine had shown high rates of treatment discontinuations and had experienced significant weight increases. However, data presented by the manufacturer AstraZeneca in 1999 at European and US meetings actually indicated that the drug helped psychotic patients lose weight [ 91 ].

Panic disorder

Turner described an example of reporting bias in the treatment of panic disorder: according to a review article, 3 "well designed studies" had apparently shown that the controlled-release formulation of paroxetine was effective in patients with this condition. However, according to the corresponding FDA statistical review, only one study was strongly positive, the second study was non-significant regarding the primary outcome (and marginally significant for a secondary outcome), and the third study was clearly negative [ 92 ].

Further examples of reporting bias in research on mental and behavioural disorders are included in Additional file 1 : Table S2.

Disorders of the nervous system

Alzheimer's disease.

Internal company analyses and information provided by the manufacturer Merck & Co to the FDA on rofecoxib, a selective COX-2 inhibitor, were released during litigation procedures. The documents referred to trials investigating the effects of rofecoxib on the occurrence or progression of Alzheimer's disease. Psaty and Kronmal performed a review of these documents and 2 trial publications and showed that, although presenting mortality data, the publications had not included analyses or statistical tests of these data and both had concluded that regarding safety, rofecoxib was "well tolerated". In contrast, in April 2001, Merck's internal ITT analyses of pooled data from these 2 trials showed a significant increase in total mortality. However, this information was neither disclosed to the FDA nor published in a timely fashion [ 74 ]. Rofecoxib was taken off the market by Merck in 2004 [ 93 ], amid allegations that the company had been aware of the safety risks since 2000 [ 73 ].

In their article "An untold story?", Lenzer and Brownlee reported the case of valdecoxib, another selective COX-2 inhibitor withdrawn from the market due to cardiovascular concerns [ 94 , 95 ]. In 2001, the manufacturer Pfizer had applied for approval in 4 indications, including acute pain. The application for acute pain was rejected, and some of the information about the corresponding trials was removed from the FDA website for confidentiality reasons. Further examples of reporting bias in research on pain are presented in Additional file 1 : Table S2.