- Agile project management

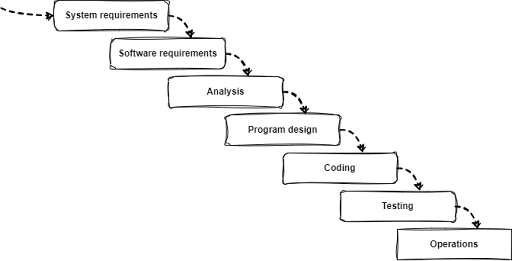

- Waterfall Methodology

Waterfall Methodology: A Comprehensive Guide

If you've been in project management for a while, you must’ve encountered the Waterfall methodology. It's an old-school software development method from the 1970s.

In a Waterfall process, you must complete each project phase before moving to the next. It's pretty rigid and linear. The method relies heavily on all the requirements and thinking done before you begin.

Don't worry if you haven't heard of it. Let’s break the Waterfall method down and see how it works.

What is the Waterfall methodology?

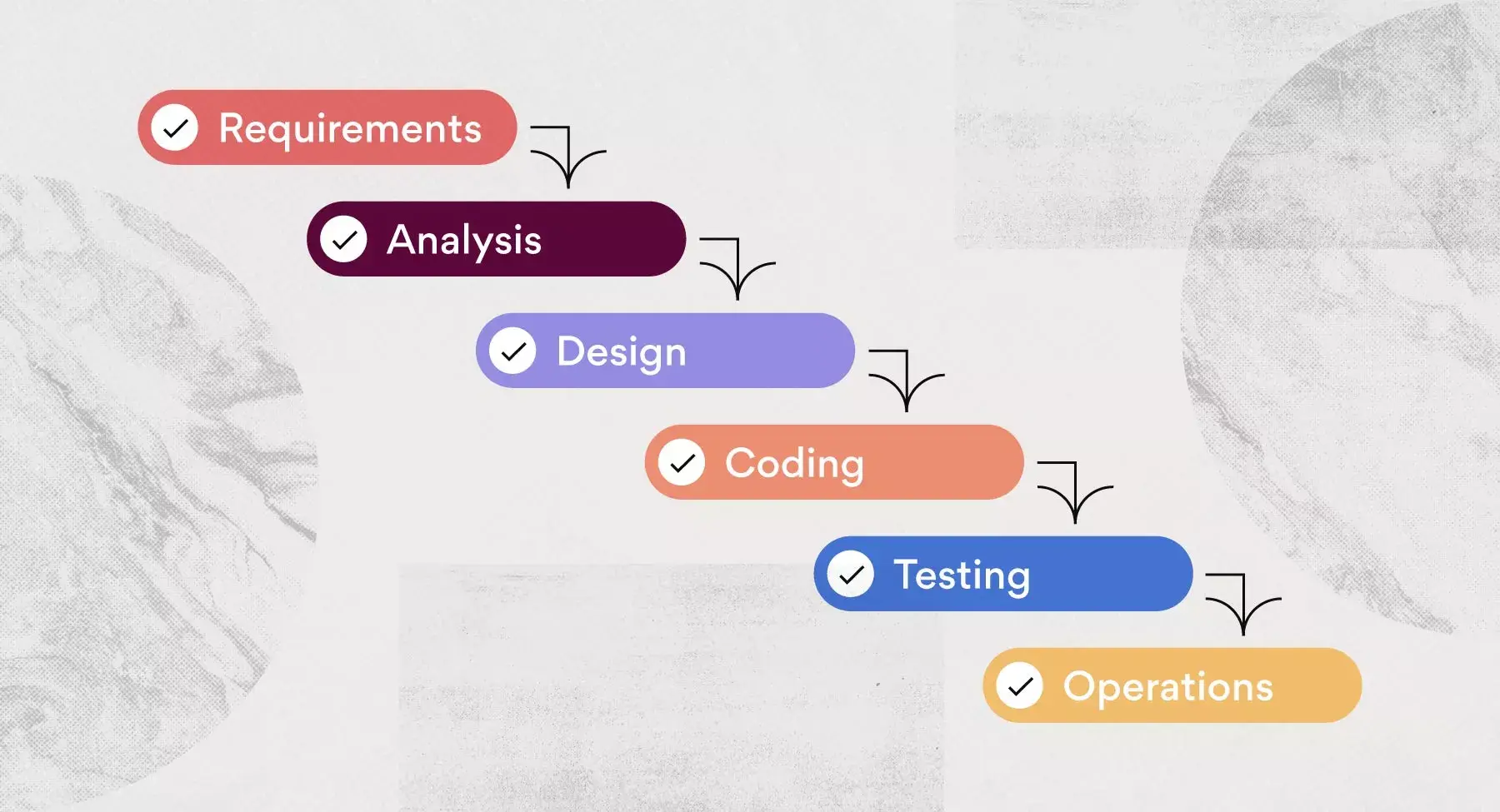

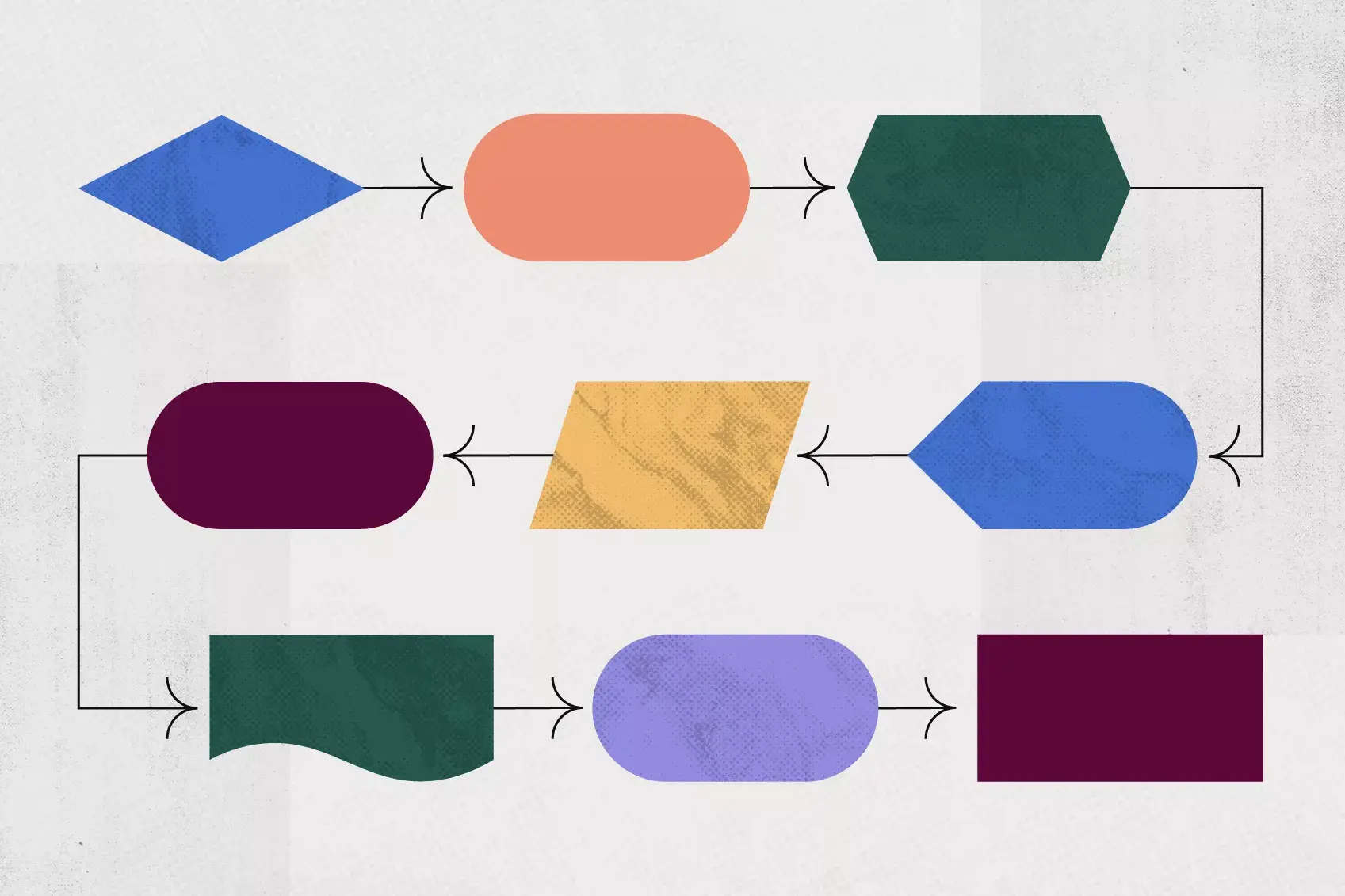

Waterfall methodology is a well-established project management workflow. Like a waterfall, each process phase cascades downward sequentially through five stages (requirements, design, implementation, verification, and maintenance).

The methodology comes from computer scientist Winston Royce’s 1970 research paper on software development. Although Royce never named this model “waterfall”, he gets credit for creating a linear, rigorous project management system.

Unlike other methods, such as the Agile methodology, Waterfall doesn't allow flexibility. You must finish one phase before beginning the next. Your team can’t move forward until they resolve any problems. Moreover, as our introduction to project management guide outlines, your team can’t address bugs or technical debt if it’s already moved on to the next project phase.

What are the stages of the Waterfall methodology?

Five phases comprise the Waterfall methodology: requirements, design, implementation, verification, and maintenance. Let's break down the five specific phases of Waterfall development and understand why it’s critical to complete each phase before progressing to the next.

Requirements

The requirements phase states what the system should do. At this stage, you determine the project's scope, from business obligations to user needs. This gives you a 30,000-foot overview of the entire project. The requirements should specify:

- resources required for the project.

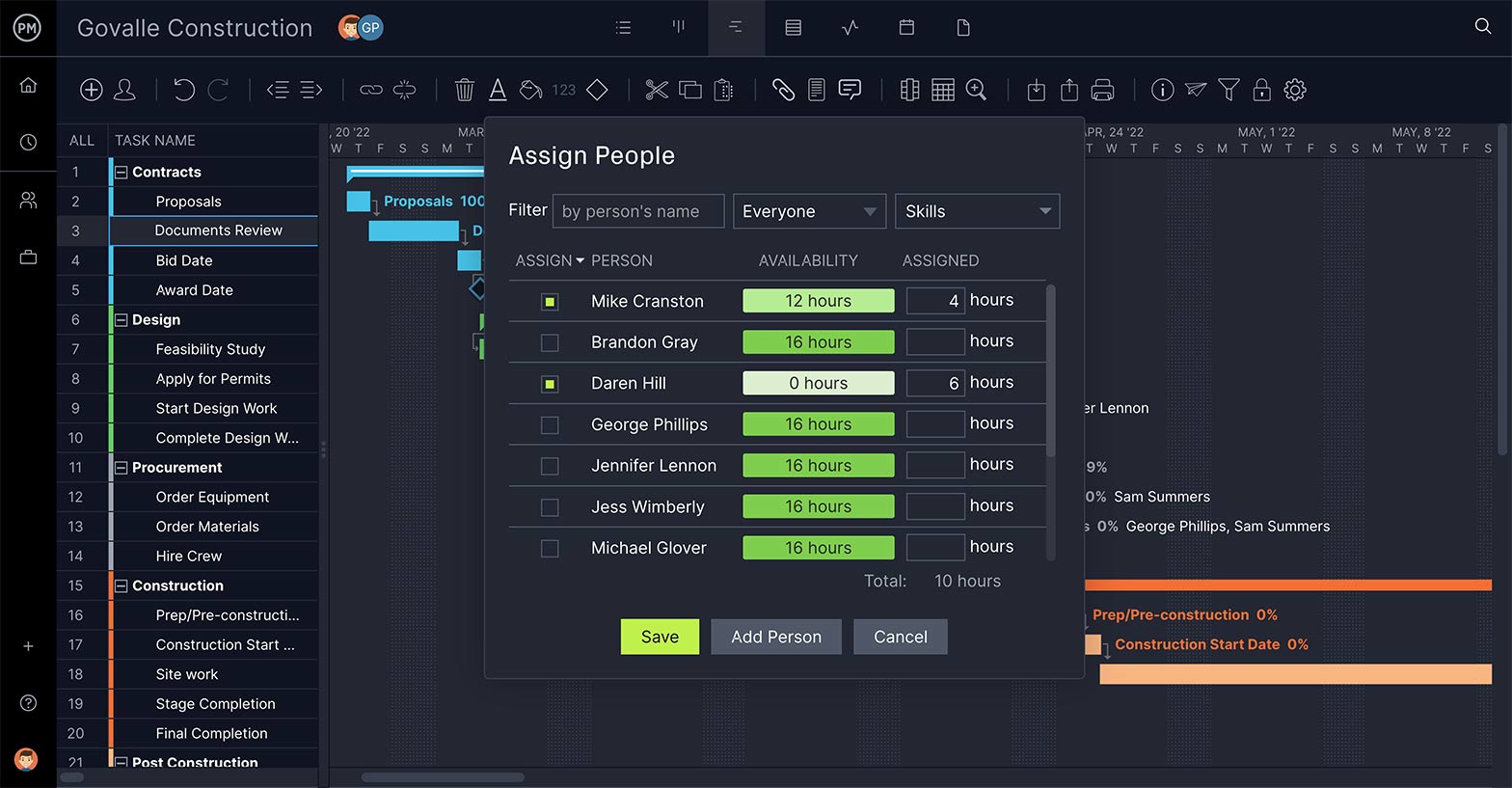

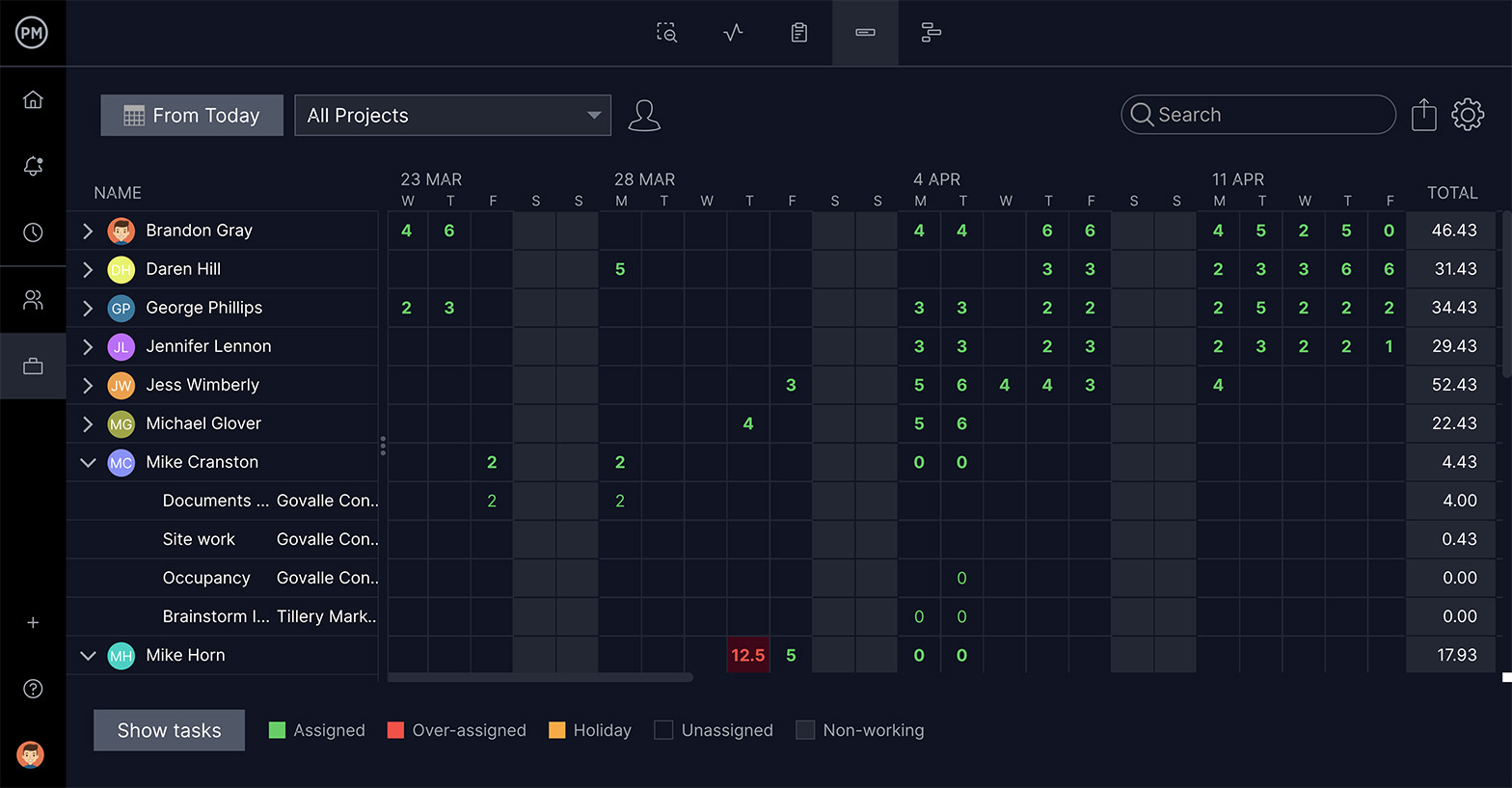

- what each team member will work on and at what stage.

- a timeline for the entire project, outlining how long each stage will take.

- details on each stage of the process.

But these requirements “may range from very abstract to a detailed mathematical specification,” writes Steven Zeil, professor of computer science at Old Dominion University. That’s because requirements might not outline an exact implementation, which development addresses in later stages.

Design

After gathering all the requirements, it's time to move on to the design stage. Here, designers develop solutions that meet the requirements. In this stage, designers:

- create schedules and project milestones.

- determine the exact deliverables.

- create designs and/or blueprints for deliverables.

Deliverables could include software or they could consist of a physical product. For instance, designers determine the system architecture and use cases for software. For a physical product, they figure out its exact specifications for production.

Implementation

Once the design is finalized and approved, it's time to implement it. Design hands off their specifications to developers to build.

To accomplish this, developers:

- create an implementation plan.

- collect any data or research needed for the build.

- assign specific tasks and allocate resources among the team.

Here is where you might find out that parts of the design can't be implemented. If it's a huge issue, you must step back and re-enter the design phase.

Verification

After the developers code the design, it’s time for quality assurance. It’s important to test for all use cases to ensure a good user experience, because you don't want to release a buggy product to customers. In this stage, the testing team:

- writes test cases.

- documents any bugs and errors to be fixed.

- tests one aspect at a time.

- determines which QA metrics to track.

- covers a variety of use case scenarios and environments.

Maintenance

After the product release, devs might have to squash bugs. Customers let your support staff know of any issues that come up. Then, it's up to the team to address those requests and release newer versions of your product.

As you can see, each stage depends on the one that comes before it. It doesn't allow for much error between or within phases.

For example, if a stakeholder wants to add a requirement when you're in the verification phase, you'll have to re-examine the entirety of your project. That could mean tossing the whole thing out and starting over.
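The gating rule described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the `Phase` enum, the `WaterfallProject` class, and its `sign_off`, `advance`, and `reopen` methods are hypothetical names invented for this example.

```python
from enum import IntEnum


class Phase(IntEnum):
    REQUIREMENTS = 1
    DESIGN = 2
    IMPLEMENTATION = 3
    VERIFICATION = 4
    MAINTENANCE = 5


class WaterfallProject:
    """Hypothetical tracker that refuses to skip ahead."""

    def __init__(self):
        self.current = Phase.REQUIREMENTS
        self.signed_off = set()

    def sign_off(self, phase):
        # Only the phase currently in progress can be signed off.
        if phase != self.current:
            raise ValueError(
                f"cannot sign off {phase.name} while in {self.current.name}"
            )
        self.signed_off.add(phase)

    def advance(self):
        # The gate: the current phase must be signed off first.
        if self.current not in self.signed_off:
            raise RuntimeError(f"{self.current.name} is not complete")
        if self.current is Phase.MAINTENANCE:
            raise RuntimeError("MAINTENANCE is the final phase")
        self.current = Phase(self.current + 1)
        return self.current

    def reopen(self, phase):
        # Stepping back invalidates that phase and every later one.
        if phase > self.current:
            raise ValueError("can only reopen the current or an earlier phase")
        self.signed_off = {p for p in self.signed_off if p < phase}
        self.current = phase
```

Calling `advance()` before `sign_off()` raises an error, which mirrors the rule that a phase must finish before the next begins. Calling `reopen(Phase.DESIGN)` from a later phase discards the sign-offs for design and everything after it, which is why stepping back is so costly in this model.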

Benefits of Waterfall methodology

The benefits of Waterfall methodology have made it a lasting workflow for projects that rely on a fixed outcome. A 2020 survey found that 56% of project professionals had used traditional, or Waterfall, models in the previous year.

A few benefits of Waterfall planning include:

- Clear project structure: Waterfall leaves little room for confusion because of rigorous planning. There is a clear end goal in sight that you're working toward.

- Set costs: The rigorous planning ensures that the time and cost of the project are known upfront.

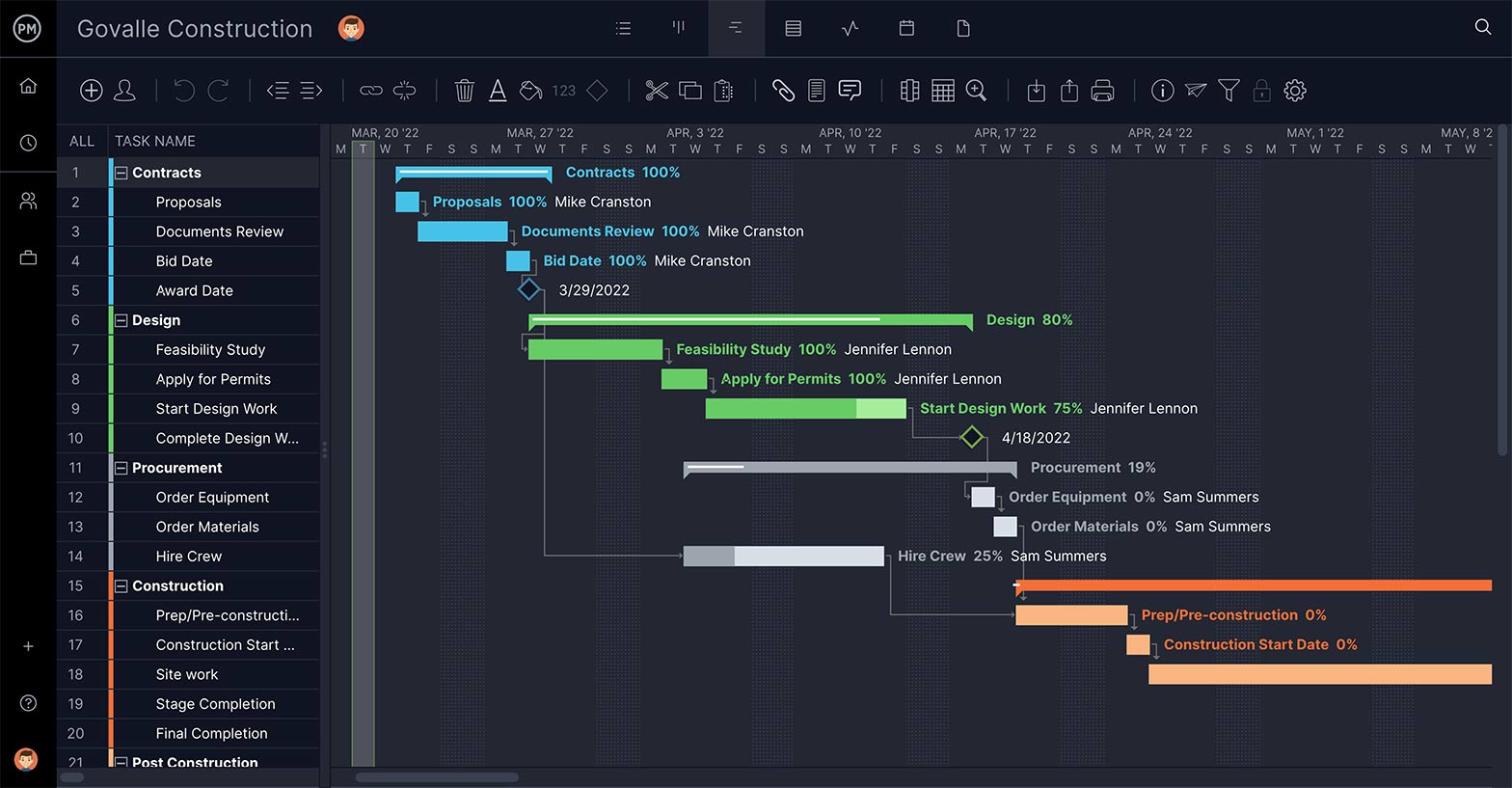

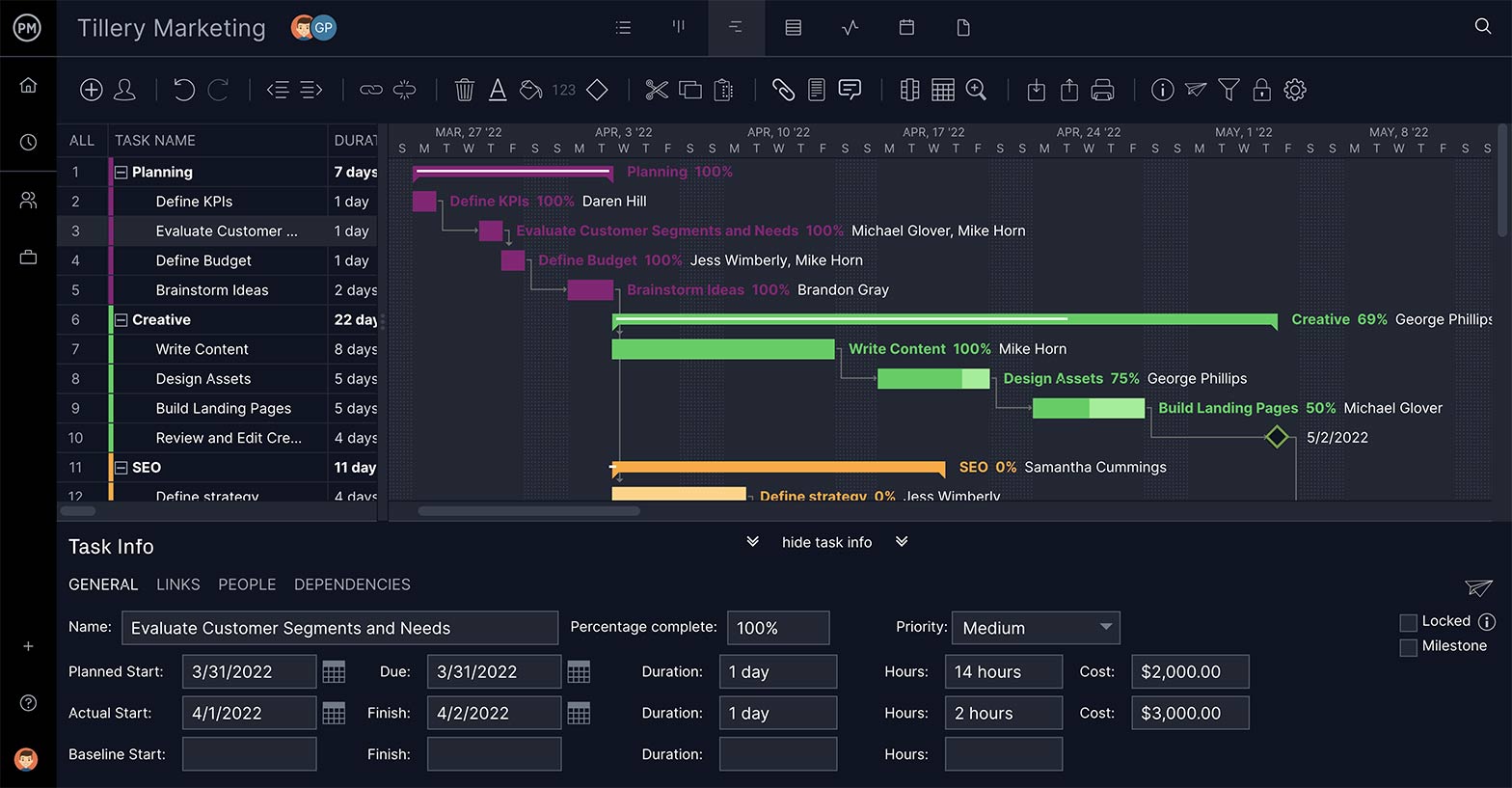

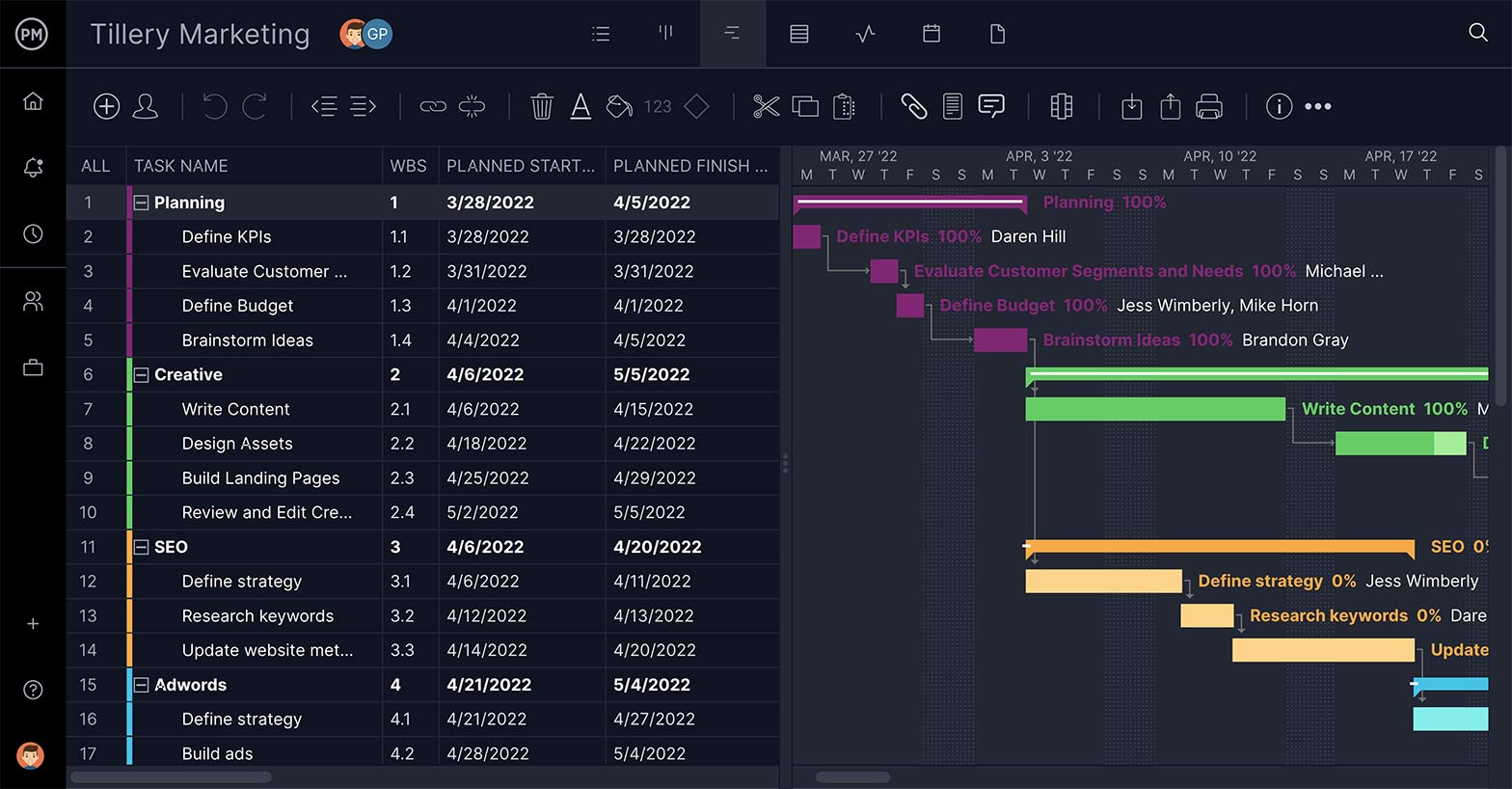

- Easier tracking: Assessing progress is faster because there is less cross-functional work. You can even manage the entirety of the project in a Gantt chart, which you can find in Jira.

- A replicable process: If a project succeeds, you can use the process again for another project with similar requirements.

- Comprehensive project documentation: The Waterfall methodology provides you with a blueprint and a historical project record so you can have a comprehensive overview of a project.

- Improved risk management: The abundance of upfront planning reduces risk. It allows developers to catch design problems before writing any code.

- Enhanced responsibility and accountability: Teams take responsibility within each process phase. Each phase has a clear set of goals, milestones, and timelines.

- More precise execution for a non-expert workforce: Waterfall allows less-experienced team members to plug into the process.

- Fewer delays because of additional requirements: Since your team knows the needs upfront, there is little room for additional asks from stakeholders or customers.

Limitations of Waterfall methodology

Waterfall isn't without its limitations, which is why many product teams opt for an Agile methodology.

The Waterfall method works wonders for predictable projects but falls apart on a project with many variables and unknowns. Let's look at some other limitations of Waterfall planning:

- Longer delivery times: The delivery of the final product could take longer than usual because of the inflexible step-by-step process, unlike in an iterative process like Agile or Lean.

- Limited flexibility for innovation: Any unexpected occurrence can spell doom for a project with this model. One issue could move the project two steps back.

- Limited opportunities for client feedback: Once the requirements phase is complete, the project is out of the hands of the client.

- Tons of feature requests: Because clients have little say during the project's execution, there can be a lot of change requests after launch, such as adding new features to the existing code. This can create further maintenance issues and prolong the launch.

- Deadline creep: If there's a significant issue in one phase, everything grinds to a halt. Nothing can move forward until the team addresses the problem. It may even require you to go back to a previous phase to address the issue.

Below is an illustration of a project using the waterfall approach. As you can see, the project is segmented into rigid blocks of time. This rigidity fosters an environment that encourages developers, product managers, and stakeholders to request the maximum amount of time allotted in each time block, since there may be no opportunity to iterate in the future.
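To see why a single slip pushes out every later deadline, here is a small sketch of a strictly sequential schedule. The function name `finish_days`, the phase names, and all durations are made up for illustration and do not come from any real project.

```python
def finish_days(durations, delays=None):
    """Cumulative finish day of each phase when phases run strictly in sequence.

    durations: dict of phase name -> planned length in days (in execution order).
    delays: optional dict of phase name -> extra days that phase overruns.
    """
    delays = delays or {}
    finish = {}
    day = 0
    for name, planned in durations.items():
        day += planned + delays.get(name, 0)
        finish[name] = day
    return finish


# Illustrative plan only: the numbers are assumptions.
plan = {"requirements": 10, "design": 15, "implementation": 30, "verification": 10}

baseline = finish_days(plan)
slipped = finish_days(plan, delays={"design": 5})

# Every phase from design onward finishes 5 days late.
slip = {name: slipped[name] - baseline[name] for name in plan}
```

Because no phase can start early or overlap with another, the five-day overrun in design is inherited in full by implementation and verification. That is one reason teams are tempted to pad each block.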

How is the Waterfall method different from Agile project management?

Agile project management and the Waterfall methodology have the same end goal: crystal clear project execution. While Waterfall planning isolates teams into phases, Agile allows for cross-functional work across multiple phases of a project. Instead of rigid steps, teams work in a cycle of planning, executing, and evaluating, iterating as they go.

The "Agile Manifesto" states four values that set Agile apart from the Waterfall model:

- Individuals and interactions over processes and tools

- Working software over comprehensive documentation

- Customer collaboration over contract negotiation

- Responding to change over following a plan

If you're looking for tools that support Agile project management and serve the same end goal as Waterfall, consider Jira. It’s best suited for Agile projects, and helps you:

- Track work: With Gantt charts, advanced roadmaps, timelines, and various other tools, you can easily track your progress throughout the project.

- Align your team: Tracking allows you to seamlessly plan across business teams, keeping everyone aligned on the same goals.

- Manage projects and workflows: With Jira, you can access project management templates that you can use for your Agile workflows.

- Plan at every stage: Jira Product Discovery, another product by Atlassian, offers product roadmaps for planning and prioritizing product features at every stage, from discovery to delivery.

Atlassian's Agile tools support the product development lifecycle. There are even Agile metrics for tracking purposes. Jira lets you drive forward the Agile process. It uses intake forms to track work being done by internal teams and offers a repeatable process for requests.

These Jira products integrate natively within the app, unifying teams so they can work faster.

Use Agile methodology for project management

Waterfall methodology has a long history in project management, but it's often not the right choice for modern software developers. Agile methodology offers greater flexibility.

Here’s why most teams prefer an Agile process:

- Adaptability to changes: If something arises, your team will be better able to adjust on the fly. Waterfall’s rigidity makes it difficult to deal with any roadblocks.

- Continuous feedback loop: Continuous improvement requires a feedback loop. With Agile, you can gather feedback from stakeholders during the process and iterate accordingly.

- Stronger communication: Teams work collaboratively in an Agile process. Waterfall is a series of handoffs between different teams, which hinders effective communication.

Here is where a project management tool such as Jira comes in handy for an Agile methodology. You can also use a project management template for your Agile projects. Your team can plan, collaborate, deliver, and report on projects in one tool. That keeps everyone aligned throughout any project and streamlines project management.

Waterfall methodology: Frequently asked questions

Who is best suited for the Waterfall methodology?

The Waterfall methodology works best for project managers working on projects that include:

- Less complex objectives: Projects that don't have complicated requirements are best suited for Waterfall.

- Predictable outcomes: Waterfall works best for those projects that are replicable and proven.

- Reduced likelihood of project scope creep: A project where clients aren't likely to come up with last-minute requirements is suitable for Waterfall.

Agile methodology is perfect for nimble teams with an iterative mindset, such as:

- Cross-functional teams: A team of people with different skill sets that allow them to work on various aspects of a project. These are collaborative types who are flexible.

- Self-organizing teams: Autonomous teams that don't need a lot of handholding. They embrace ambiguity in a project and are great problem solvers. This mindset also gives them more ownership over outcomes.

- Startups and small businesses: These benefit from the mindset of "move fast and break things," so they can fail fast, learn, and improve.

Finally, Agile works well for customer-centric projects where their input allows you to iterate.

What factors should I consider before implementing a project management approach?

When deciding on the proper methodology to implement in project management, there are four main factors to consider: project complexity, organizational goals, team expertise, and stakeholder involvement.

Let’s break each one down:

- Project complexity: Waterfall can help break down larger, more complex projects into smaller sets of expectations and goals. But its rigidity doesn’t deal well with unknowns or changes. Agile is better for complex projects that have a lot of variables.

- Organizational goals: What does your organization want to achieve? Is it looking to innovate or keep the status quo? An Agile approach is best if your organization wants to break down silos. Teams will work more collaboratively with more autonomy.

- Team expertise: Agile is an excellent way to go if your team is cross-functional and can work across skill sets. If your team members rely heavily on a singular skill set, Waterfall may be better.

- Stakeholder involvement: If your stakeholders are going to be more hands-on, Agile will help you best because it allows for continuous feedback and iteration.
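The four factors above can be turned into a rough checklist. The sketch below is a toy heuristic under stated assumptions: the function name `suggest_approach`, the yes/no framing of each factor, and the vote thresholds are all invented for illustration and are no substitute for judgment.

```python
def suggest_approach(
    *,
    many_unknowns,
    wants_to_break_silos,
    cross_functional_team,
    hands_on_stakeholders,
):
    """Count how many of the four factors point toward Agile.

    Each argument is a bool. The thresholds below are assumptions,
    not an established rule.
    """
    agile_votes = sum(
        [
            many_unknowns,
            wants_to_break_silos,
            cross_functional_team,
            hands_on_stakeholders,
        ]
    )
    if agile_votes >= 3:
        return "Agile"
    if agile_votes <= 1:
        return "Waterfall"
    return "either (weigh the factors case by case)"
```

For example, a project with fixed requirements, specialized team members, and stakeholders who only review the final deliverable would score zero or one vote, and the sketch would suggest Waterfall.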

Examples Of The Waterfall Model

Anjali works at a technology firm where she’s been assigned to lead a team to deliver an elaborate software program within a very tight schedule. At first, Anjali tries to coordinate with her associates and create her own model. But as the pressure mounts, her model crumbles and the entire team is rattled.

Anjali spends a couple of days researching solutions and discovers the waterfall model. She goes through the waterfall model in detail and distributes the responsibilities for the project among several departments, based on the different phases of the model.

As the project requires utmost stability, Anjali creates a blueprint and a timeline that aren’t subject to change and feeds them into the waterfall model. Thereafter, the model takes care of everything. With a strict schedule for delivery in place and all departmental roles neatly assigned, the waterfall model brings the project to a close one week ahead of time and in the smoothest manner possible.

Anjali’s success becomes another excellent example of the waterfall model doing what it does best.

What Is The Waterfall Model?

Before proceeding to explain the waterfall model with examples, let’s go over the basics of the waterfall model and what exactly it’s supposed to achieve.

The waterfall model was one of the first models to be introduced in project management. As a linear or sequential model, the waterfall model has a number of phases, each of which must be completed before moving on to the next one. The model gets its name because work moves from one phase to the next in a downward manner, similar to a waterfall.

For smooth functioning, the waterfall model uses the output from one phase as input for the next phase. At the end of each phase, you’re supposed to carry out a review to find out if the project is on the right path or whether it needs to be discarded and restarted.
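The handoff described above, where each phase consumes the previous phase's output and a review follows every phase, can be sketched as a simple pipeline. The names `run_phases`, `gather_requirements`, `design_system`, and `always_approve` are hypothetical, and the dictionaries stand in for real deliverables.

```python
def run_phases(phases, initial_input, review):
    """Run phases in order, feeding each output to the next phase.

    phases: list of (name, function) pairs.
    review: callable(name, output) -> bool; a False result stops the run.
    """
    artifact = initial_input
    for name, work in phases:
        artifact = work(artifact)
        if not review(name, artifact):
            raise RuntimeError(f"review failed after {name}")
    return artifact


def gather_requirements(idea):
    # Placeholder output: a real phase would produce a requirements document.
    return {"idea": idea, "requirements": ["user login"]}


def design_system(doc):
    # The design phase builds on the requirements it receives.
    return {**doc, "design": "two screens"}


def always_approve(name, output):
    return True


result = run_phases(
    [("requirements", gather_requirements), ("design", design_system)],
    "course finder app",
    always_approve,
)
```

A review function that returns `False` halts the run at that phase, which corresponds to the decision point where a project is either corrected or discarded and restarted.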

The term “waterfall” was used for the first time in a 1976 paper co-authored by Thomas Bell and Thomas Thayer to describe their model. However, the first formal and detailed diagram of the model had been published before, in an article in 1970 written by Winston Royce. Royce’s article was largely critical of the waterfall model, particularly of the fact that testing could only be performed at the end of the process.

The waterfall model that you’re likely to come across today includes six phases, which are listed as follows:

- Requirement Gathering

- System Design

- Implementation

- Integration And Testing

- Deployment Of System

- Maintenance Or Fixing Issues

Nowadays, the waterfall model is one of several models that are frequently used for project management. Other models include iterative and agile models, which are much more flexible as compared to the waterfall approach.

When Is The Waterfall Model Used?

In order to understand a real-life example of the waterfall model, let’s familiarize ourselves with situations when the waterfall model is usually used:

- When the project requirements are laid down at the outset and remain more or less fixed throughout the entire process

- When the product definition is stable and a lot of information is required before completing each phase

- In cases where a strict timeline needs to be prepared and followed, without alterations

- In sectors involving engineering design and software development that generally demand project management on a large scale

- In manufacturing and construction industries, where design changes are usually very costly

In the closing decades of the 20th century, the waterfall model was used primarily to develop enterprise applications like Human Resource Management Systems (HRMS), Supply Chain Management Systems, Customer Relationship Management (CRM) systems, Inventory Management Systems, Point of Sales (POS) systems for retail chains, etc. The model was also extremely popular in software development.

With the evolution of technology, there were cases where large-scale enterprise systems, with the waterfall model as the default choice, were developed over a period of two to three years but became redundant by the time they were completed. Slowly, these enterprise systems switched over to more flexible and less expensive models, but the waterfall model continued to be preferred in systems where:

- A human life is at stake and a system failure could result in fatalities

- Money and time are secondary factors and what matters more is the safety and stability of a project

- Requirements are declared early on and remain constant, as in military and aircraft programs

- An extremely high degree of oversight and/or accountability is required, as in banking, healthcare and control systems for nuclear facilities

How To Explain The Waterfall Model With Examples

Now that you’ve grasped the several sectors in which the waterfall model used to be and is still deployed, here is a real-life example of the waterfall model at work.

Here, the waterfall model is used to manufacture a tractor, with each of its phases outlining the work that needs to be done. Before moving to the phases, however, the organization manufacturing the tractor would need to carry out a feasibility study, including planning the budget and adding new features to the tractor that’ll put it ahead of other tractors in the market.

Thereafter, the following phases (only including the most important ones) take over:

Requirement gathering and analysis:

This phase of the waterfall model is used to determine the speed, mileage, engine specifications, color and seat requirements of the tractor to be manufactured.

Design:

This phase is concerned with developing and designing the frame material, the exterior and interior body quality and material as well as the tyre quality for the tractor.

Implementation:

This phase brings together the two previous phases by combining all the pre-decided features and actually manufacturing the tractor.

Testing:

This phase is all about trying out the tractor under various circumstances and conditions, from evaluating its performance on different types of roads and weather conditions to checking its durability, fuel consumption and the amount of heat it produces.

Maintenance:

The final phase is about offering regular services to preserve the quality of the tractor and make whatever repairs or adjustments are necessary.

Let’s look at another real-life example of the waterfall model, where the different phases have been used to build and deliver a software program that relies on university rankings and student scores to determine which universities and courses are best suited for students opting for an undergraduate degree.

As with the previous example of the waterfall model, the organization designing the software program needs to perform a feasibility study to find out what kind of programs are already present in the market that can achieve similar tasks in academia. Following this, the most important phases of the waterfall model can start functioning as follows:

Requirement gathering and analysis:

This phase will be tasked with gathering all the information available on student scores and university rankings and devising the different parameters that’ll be used for determining a university’s suitability for a student.

Design:

In this example of the waterfall model, the design phase is all about fine-tuning the parameters established in the analysis phase and making sure that the structure of the software program is precise enough to avoid any manipulation of or confusion over large volumes of data.

Implementation:

This all-important phase involves doing dummy runs of the software program with a provisional set of data to see the accuracy with which the program can suggest appropriate universities for students. These suggestions should then be matched with results obtained from academic counselors who have arrived at the suggestions through their years of professional expertise.

Testing:

As with any example of the waterfall model, the testing phase is about ensuring that all features of the software program function smoothly and that there are no glitches that can derail the utility of the overall program.

Maintenance:

In the final phase, the software program should be checked for any necessary updates or alterations that may be required, besides the expected inclusion of new data, including a greater volume of student scores and a fresh set of university rankings.

The waterfall model is just one example of the many approaches adopted in project management. At Harappa, the Executing Solutions course is tailor-made for you to master several approaches, such as the Branding, Leadership And Selling Techniques (BLAST) approach (on how to develop a mindset for devising responsible solutions) and the Bifocal Approach (a strategy that balances short-term and long-term views).

Guide to waterfall methodology: Free template and examples

Waterfall project management is a sequential project management methodology that's divided into distinct phases. Each phase begins only after the previous phase is completed. This article explains the stages of the waterfall methodology and how it can help your team achieve their goals.

What if your project calls for a linear approach? Waterfall methodology is a linear project management methodology that can help you and your team achieve your shared goals, one task or milestone at a time. By prioritizing tasks and dependencies, the waterfall method helps keep your project on track.

What is waterfall methodology?

Waterfall methodology, first formally described by Dr. Winston W. Royce in 1970 (although Royce did not use the term “waterfall” himself), is a sequential design process used in software development and product development where project progress flows steadily downward through several phases, much like a waterfall. The waterfall model is structured around a rigid sequence of steps that moves from conception and initiation through analysis, design, construction, testing, and implementation to maintenance.

Unlike more flexible models, such as Agile, the waterfall methodology requires each project phase to be completed fully before the next phase begins, making it easier to align with fixed budgets, timelines, and requirements.

By integrating comprehensive documentation and extensive upfront planning, waterfall methodology minimizes risk and tends to align well with traditional project management approaches that depend on detailed records and a clear, predetermined path to follow.

For example, here’s what a waterfall project might look like:

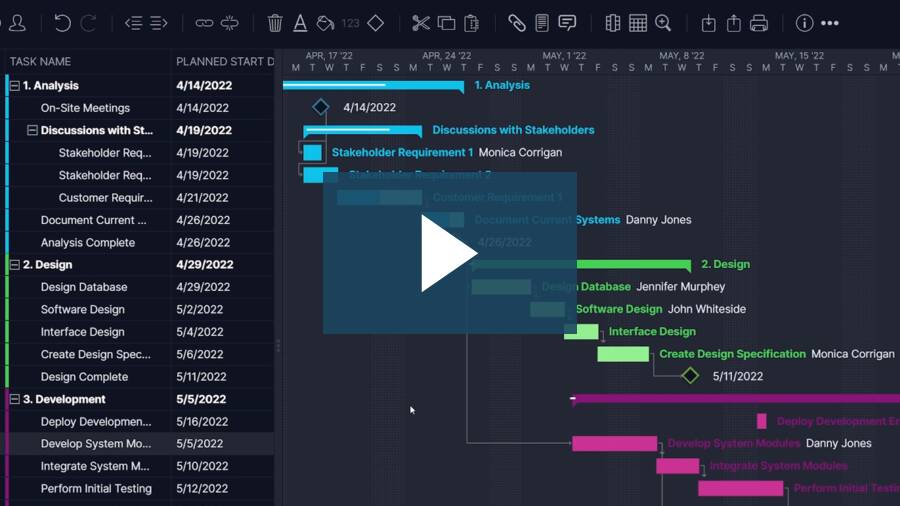

The waterfall methodology is often visualized in the form of a flow chart or a Gantt chart. This methodology is called waterfall because each task cascades into the next step. In a Gantt chart, you can see the previous phase "fall" into the next phase.
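As a rough illustration of how each phase "falls" into the next, the sketch below prints a text-only Gantt chart for phases that run back to back. The function name `render_gantt`, the phase names, and the durations in weeks are assumptions made for this example.

```python
def render_gantt(phases):
    """Render sequential phases as a text Gantt chart.

    phases: list of (name, duration_in_weeks) pairs, in execution order.
    Each bar starts in the column where the previous bar ended.
    """
    width = max(len(name) for name, _ in phases)
    lines = []
    offset = 0
    for name, weeks in phases:
        lines.append(f"{name:<{width}} |{' ' * offset}{'#' * weeks}")
        offset += weeks
    return "\n".join(lines)


# Illustrative durations only.
chart = render_gantt([("Requirements", 2), ("Design", 3), ("Build", 4)])
print(chart)
```

Because the offset only ever grows by the length of the previous bar, no two bars overlap. That staircase shape is what distinguishes a waterfall plan from one with concurrent work.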

6 phases of the waterfall project management methodology

Any team can implement waterfall project management, but this methodology is most useful for processes that need to happen sequentially. If the project you’re working on has tasks that can be completed concurrently, try another framework, like the Agile methodology.

If you’re ready to get started with the waterfall methodology, follow these six steps:

1. Requirements phase

This is the initial planning process in which the team gathers as much information as possible to ensure a successful project. Because tasks in the waterfall method are dependent on previous steps, it requires a lot of forethought. This planning process is a crucial part of the waterfall model, and because of that, most of the project timeline is often spent planning.

To make this method work for you, compile a detailed project plan that explains each phase of the project scope. This includes everything from what resources are needed to what specific team members are working on the project. This document is commonly referred to as a project requirements document.

By the end of the requirements phase, you should have a very clear outline of the project from start to finish, including:

- Each stage of the process

- Who’s working on each stage

- Key dependencies

- Required resources

- A timeline of how long each stage will take

A well-crafted requirements document serves as a roadmap for the entire project, ensuring that all stakeholders are on the same page.
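One way to picture the outline listed above is as structured data from which the timeline can be derived. The sketch below is a minimal example: the `Stage` dataclass, the `build_timeline` function, the owners, and the dates are all hypothetical, and it assumes calendar days with no weekends or holidays.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Stage:
    name: str
    owner: str           # who is working on the stage
    duration_days: int   # planned length in calendar days


def build_timeline(stages, kickoff):
    """Return (name, start, end) per stage.

    Each stage depends on the previous one, so it starts the day
    after the previous stage ends.
    """
    timeline = []
    start = kickoff
    for stage in stages:
        end = start + timedelta(days=stage.duration_days - 1)
        timeline.append((stage.name, start, end))
        start = end + timedelta(days=1)
    return timeline


# Illustrative plan only.
plan = [
    Stage("Requirements", "Project manager", 10),
    Stage("System design", "Design team", 5),
]
timeline = build_timeline(plan, date(2024, 1, 1))
```

With these assumed inputs, the requirements stage runs from January 1 to January 10 and system design from January 11 to January 15. Changing one duration shifts every later stage, which is why the estimates in the requirements document matter so much.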

2. System design phase

In a software development process, the design phase is when the project team specifies what hardware the team will be using, and other detailed information such as programming languages, unit testing, and user interfaces. This phase of the waterfall methodology is key to ensuring that the software will meet the required functionality and performance metrics.

There are two steps in the system design phase: the high-level design phase and the low-level design phase. In the high-level design phase, the team builds out the skeleton of how the software will work and how information will be accessed. During the low-level design phase, the team builds the more specific parts of the software. If the high-level design phase is the skeleton, the low-level design phase is the organs of the project.

Those team members developing using the waterfall method should document each step so the team can refer back to what was done as the project progresses.

3. Implementation phase

This is the stage where everything is put into action. The team starts the full development process to build the software in accordance with both the requirements phase and the system design phase, using the requirements document from step one and the system design process from step two as guides.

During the implementation phase, developers work on coding and unit testing to ensure that the software meets the specified requirements.

4. Testing phase

This is the stage in which the development team hands the project over to the quality assurance testing team. QA testers search for any bugs or errors that need to be fixed before the project is deployed.

Testers should clearly document all of the issues they find when QAing. In the event that another developer comes across a similar bug, they can reference previous documentation to help fix the issue.

5. Deployment phase

For development projects, this is the stage at which the software is deployed to the end user. For other industries, this is when the final deliverable is launched and delivered to end customers. A successful deployment phase requires careful planning and coordination to ensure a smooth rollout.

6. Maintenance phase

Once a project is deployed, there may be instances where a new bug is discovered or a software update is required. This is known as the maintenance phase, and it's common in the software development life cycle to be continuously working on this phase.

Regular maintenance and updates are essential for keeping the software running smoothly and addressing any issues that arise post-deployment.

When to use waterfall methodology

The waterfall methodology is a common form of project management because it allows for thorough planning and detailed documentation. However, this framework isn’t right for every project. Here are a few examples of when to use this type of project management.

Project has a well-defined end goal

One of the strengths of the waterfall approach is that it allows for a clear path from point A to point B. If you're unsure of what point B is, your project is probably better off using an iterative form of project management like the Agile approach.

Projects with an easily defined end goal are well-suited for waterfall methodology because project managers can work backwards from the goal to create a clear and detailed path with all of the requirements necessary.

No constraints on budget or time

If your project has no constraints on budget or time, team members can spend as much time as they need in the requirements and system design phases. They can tweak and tailor the needs of the project until they land on a well-thought-out and defined project plan.

Creating repeatable processes

The waterfall model requires documentation at almost every step of the process. This makes it easy to repeat your project for a new team member; each step is clearly detailed so you can recreate the process.

Creating repeatable processes also makes it easy to train new team members on what exactly needs to be done in similar projects. This makes the waterfall process an effective approach to project management for standardizing processes.

Waterfall vs. Agile methodologies

While the waterfall methodology follows a linear, sequential approach, Agile is an iterative and incremental methodology. In Agile, the project is divided into smaller, manageable chunks known as sprints. Each sprint includes planning, design, development, testing, and review phases.

The Agile method emphasizes flexibility, collaboration, and rapid iteration based on continuous feedback. It allows for changes and adaptations throughout the project's lifecycle. In contrast, the waterfall model has a more rigid structure with distinct phases and limited room for changes once a phase is complete.

The choice between waterfall and Agile depends on factors such as project complexity, clarity of requirements, team size, and client involvement. The waterfall model is suitable for projects with well-defined requirements and minimal changes expected, while the Agile method is favored for projects with evolving requirements and a need for frequent client feedback and course corrections.

Benefits of waterfall methodology

Consistent documentation makes it easy to backtrack

When you implement the waterfall project management process, you create documentation every step of the way. This is beneficial: if your team needs to retrace its steps, you can easily find where a mistake was made. It's also great for creating repeatable processes for new team members, as mentioned earlier.

Tracking progress is easy

By laying out a waterfall project in a Gantt chart, you can easily track project progress. The timeline itself serves as a progress bar, so it’s always clear what stage a project is in.

![waterfall approach case study [Old Product UI] Mobile app launch project in Asana (Timeline)](https://assets.asana.biz/transform/a5f7977a-e36d-43fb-89e9-ca12b741ca11/inline-visual-project-management-kanban-timeline-calendar-2-2x?io=transform:fill,width:2560&format=webp)

Team members can manage time effectively

Because the waterfall methodology requires so much upfront planning during the requirements and design phases, it is easy for stakeholders to estimate how much time their specific part of the waterfall process will take.

Downsides of waterfall project management

Roadblocks can drastically affect the timeline

The waterfall methodology is linear by nature, so if there's a bump in the road or a task gets delayed, the entire timeline is shifted. For example, if a third-party vendor is late on sending a specific part to a manufacturing team, the entire process has to be put on hold until that specific piece is received.
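The knock-on effect of a delay can be shown with a small calculation. The sketch below is illustrative only: the phase names, durations, and start date are made-up values, phases are assumed to run strictly back to back, and durations are counted in calendar days rather than working days.

```python
from datetime import date, timedelta


def schedule_phases(start, phases):
    """Lay phases out back to back: each starts the day after the previous one ends."""
    plan = []
    cursor = start
    for name, duration_days in phases:
        end = cursor + timedelta(days=duration_days - 1)
        plan.append((name, cursor, end))
        cursor = end + timedelta(days=1)
    return plan


def delay_phase(phases, phase_name, extra_days):
    """Return a copy of the phase list with extra days added to one phase."""
    return [
        (name, days + extra_days if name == phase_name else days)
        for name, days in phases
    ]


# Hypothetical project: (phase name, duration in calendar days)
phases = [
    ("Requirements", 10),
    ("Design", 15),
    ("Implementation", 30),
    ("Testing", 10),
    ("Deployment", 5),
]

baseline = schedule_phases(date(2025, 1, 6), phases)
delayed = schedule_phases(date(2025, 1, 6), delay_phase(phases, "Design", 7))

for (name, _, planned_end), (_, _, new_end) in zip(baseline, delayed):
    slip = (new_end - planned_end).days
    print(f"{name:<15} planned end {planned_end}  new end {new_end}  slip {slip} days")
```

A seven-day delay in Design leaves Requirements untouched but pushes the end date of Design and every later phase back by the same seven days, because no phase can start until its predecessor is finished.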

Linear progress can make backtracking challenging

One of the major challenges of the waterfall methodology is that it's hard to go back to a phase once it's already been completed. For example, if someone is painting the walls of a house, they wouldn’t be able to go back and increase the size of one of the rooms.

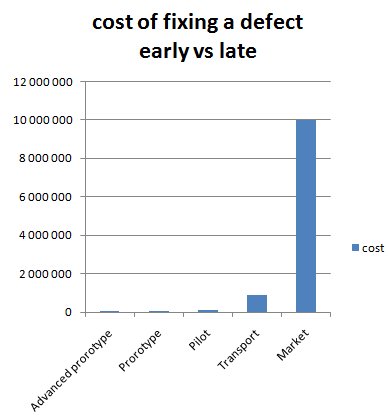

QA is late in the process

Compared with more iterative project management methodologies like Kanban and Agile, the review stage in a waterfall approach happens late in the process. If a mistake is made early on, it can be challenging to go back and fix it. The waterfall process leaves little room for iteration or for searching for the best solution.

Waterfall methodology examples

To better understand how the waterfall methodology is applied in practice, let's look at a couple of real-world use cases:

1. Construction Project: Building a new office complex requires careful planning and sequential execution. The project manager first gathers all the requirements, such as building specifications, timelines, and budgets. Then, architects and engineers create detailed designs. After approval, construction starts and strict quality controls follow. Finally, the building is handed over to the client for use and maintenance.

2. Software Engineering Project: A company wants to develop a new mobile application using the software development life cycle (SDLC). The project manager defines the product requirements, including features, performance metrics, and integrations. Software architects create the high-level design and technical specifications. Developers then follow the SDLC phases of coding, unit testing, and deployment. The team follows the waterfall methodology throughout the product development process, making sure that each step is finished before going on to the next. After the successful launch, the mobile app enters the maintenance phase, where the team addresses user feedback and provides updates.

Managing your waterfall project

With waterfall projects, there are many moving pieces and different team members to keep track of. One of the best ways to stay on the same page is to use project management software to keep workflows, timelines, and deliverables all in one place.

If you're ready to try waterfall project management with your team, try a template in Asana. You can view Asana projects in several ways, including Timeline view, which visualizes your project as a linear timeline.

FAQ: Waterfall methodology

How do you handle changes in requirements during a waterfall project?

Handling changes in requirements during a waterfall project can be challenging, but it's essential to assess the impact of the change, communicate with stakeholders, update project documentation, adjust the project plan, and ensure all team members are informed of the changes. Implementing a change control process can help formally manage and track changes throughout the project.

Can you combine waterfall and agile methodologies in a single project?

Yes, it is possible to combine waterfall and agile methodologies in a single project using a hybrid approach. This involves using waterfall methodology for the upfront planning and requirements gathering phases and adopting agile practices during the implementation and testing phases. The balance between the waterfall model and Agile method can be adjusted based on the project scope.

How do you ensure successful team collaboration on a waterfall project?

Ensuring successful team collaboration in a waterfall project involves establishing clear communication, defining roles and responsibilities, scheduling regular meetings, using collaborative tools, fostering a positive team culture, and providing necessary support and resources. By focusing on these key aspects, teams can work together effectively and efficiently to achieve project goals.

What are the best project management tools for waterfall methodology?

For teams following a waterfall methodology, Asana is the best project management tool available. Its comprehensive set of features, such as Timeline view for visualizing project plans, task dependencies for ensuring proper sequencing, and seamless integrations, make it the ideal choice for managing linear projects. While other tools like Microsoft Project offer waterfall-specific features, Asana's ease of use, collaboration capabilities, and flexibility make it the top choice for teams looking to streamline their waterfall project management process.



It’s Time to End the Battle Between Waterfall and Agile

- Antonio Nieto-Rodriguez

A hybrid approach can get the best out of both project management methodologies.

Too many project leaders think rigidly about Waterfall and Agile project management methodologies and believe that they need to choose between the two. But many projects — especially those with diverse stakeholder needs and complex structures — benefit from a hybrid approach that combines aspects of Waterfall and Agile. The rise of hybrid methods isn’t tied to a particular time or event; instead, they have evolved organically as a response to the needs of modern, complex projects. A review of the key components of Waterfall and Agile allows project leaders to select among them to build a hybrid approach based on the unique demands of each project.

When you are leading a high-stakes project, choosing between the rigor of Waterfall and the flexibility of Agile can make or break your initiative. For the last two decades, too many academics, leaders, project managers, and organizations have thought they have to choose one or the other. Worse, the emergence of Agile methods led to tribalism in the project community, stifling innovation and limiting the potential for truly effective solutions.

- Antonio Nieto-Rodriguez is the author of the Harvard Business Review Project Management Handbook, five other books, and the HBR article “The Project Economy Has Arrived.” His research and global impact on modern management have been recognized by Thinkers50. A pioneer and leading authority in teaching and advising executives on the art and science of strategy implementation and modern project management, Antonio is a visiting professor in seven leading business schools, founder of Projects & Company, and co-founder of the Strategy Implementation Institute and PMOtto. You can follow Antonio through his website, his LinkedIn newsletter Lead Projects Successfully, and his online course Project Management Reinvented for Non–Project Managers.


The Ultimate Guide…

Waterfall Model

Brought to you by ProjectManager, the online project planning tool used by over 35,000 users worldwide.

What Is the Waterfall Methodology in Project Management?


The waterfall methodology is a linear project management approach, where stakeholder and customer requirements are gathered at the beginning of the project, and then a sequential project plan is created to accommodate those requirements. The waterfall model is so named because each phase of the project cascades into the next, following steadily down like a waterfall.

It’s a thorough, structured methodology and one that’s been around for a long time, because it works. Some of the industries that regularly use the waterfall model include construction, IT and software development. As an example, the waterfall software development life cycle, or waterfall SDLC, is widely used to manage software engineering projects.


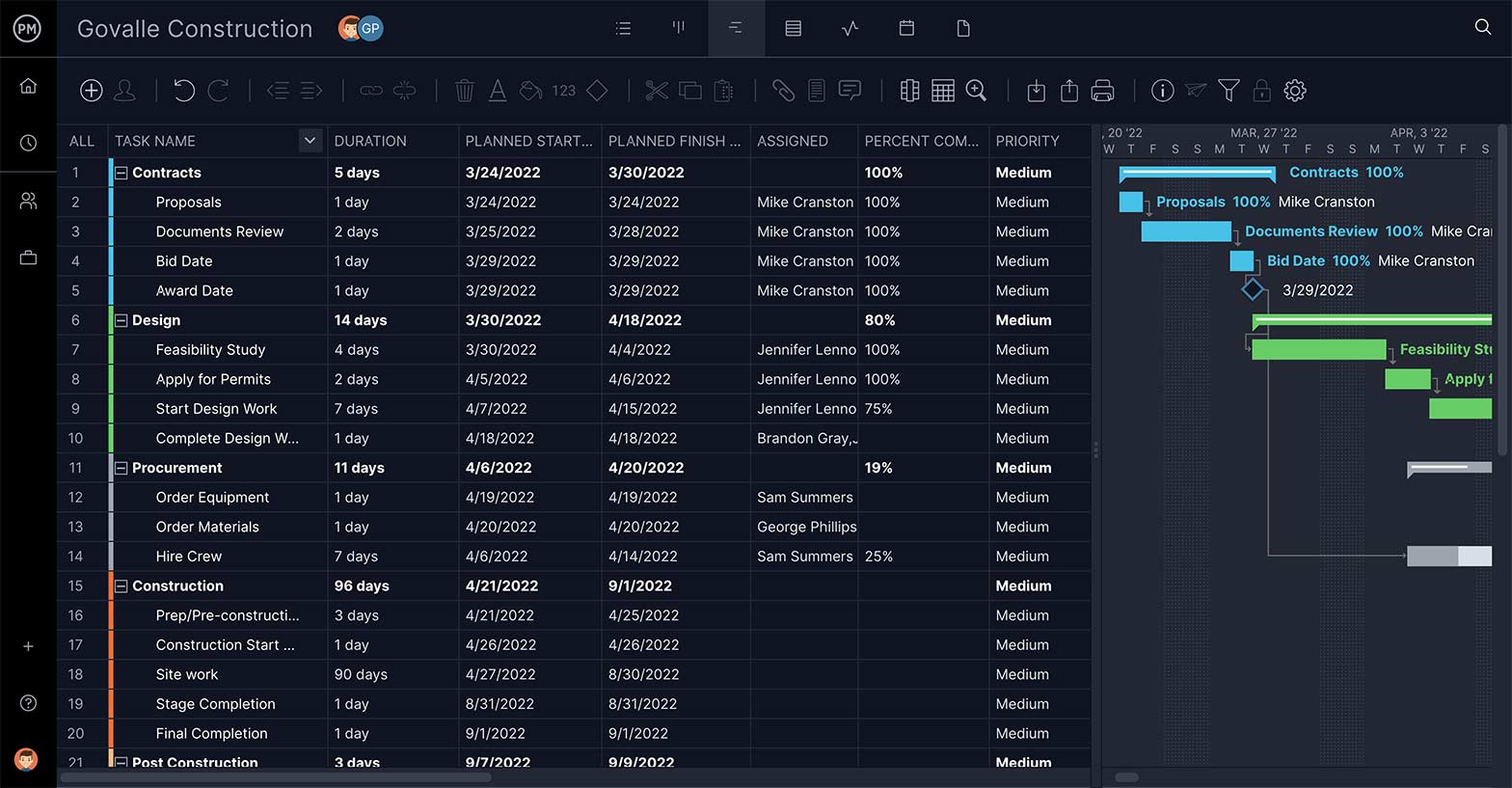

Gantt charts are the preferred tool for project managers working with the waterfall method. Using a Gantt chart allows you to map subtasks, dependencies and each phase of the project as it moves through the waterfall lifecycle. ProjectManager’s waterfall software offers these features and more.


The Phases of the Waterfall Model

The waterfall approach typically has five to seven phases that follow in strict linear order, where a phase can’t begin until the previous phase has been completed. The specific names of the waterfall steps vary, but they were originally defined by its inventor, Winston W. Royce, in the following way:

Requirements: The key aspect of the waterfall methodology is that all customer requirements are gathered at the beginning of the project, allowing every other phase to be planned without further customer correspondence until the product is complete. It is assumed that all requirements can be gathered at this waterfall management phase.

Design: The design phase of the waterfall process is best broken up into two subphases: logical design and physical design. The logical design subphase is when possible solutions are brainstormed and theorized. The physical design subphase is when those theoretical ideas and schemas are made into concrete specifications.

Implementation: The implementation phase is when programmers assimilate the requirements and specifications from the previous phases and produce actual code.

Verification: This phase is when the customer reviews the product to make sure that it meets the requirements laid out at the beginning of the waterfall project. This is done by releasing the completed product to the customer.

Maintenance: The customer is regularly using the product during the maintenance phase, discovering bugs, inadequate features and other errors that occurred during production. The production team applies these fixes as necessary until the customer is satisfied.


Waterfall Software Development Life Cycle

Let’s hypothesize a simple project, then plan and execute it with the waterfall approach phases that you just learned. For our waterfall software development life cycle example, we’ll say that you’re building an app for a client. The following are the steps you’d take to reach the final deliverable.

Requirements & Documents

First, you must gather all the requirements and documentation you need to get started on the app.

- Project Scope: This is one of the most important documents in your project, where you determine what the goals associated with building your app are: functional requirements, deliverables, features, deadlines, costs, and so on.

- Stakeholder Expectations: In order to align the project scope with the expectations of your stakeholders—the people who have a vested interest in the development of the app—you want to conduct interviews and get a clear idea of exactly what they want.

- Research: To better serve your plan, do some market research about competing apps, the current market, customer needs and anything else that will help you find the unserved niche your app can serve.

- Assemble Team: Now, you need to get the people and resources together who will create the app, from programmers to designers.

- Kickoff: The kickoff meeting is the first meeting with your team and stakeholders where you cover the information you’ve gathered and set expectations.

System Design

Next, you can begin planning the project proper. You’ve done the research, and you know what’s expected from your stakeholders. Now, you have to figure out how you’re going to get to the final deliverable by creating a system design. Based on the information you gathered during the first phase, you’ll determine hardware and software requirements and the system architecture needed for the project.

- Collect Tasks: Use a work breakdown structure to list all of the tasks that are necessary to get to the final deliverable.

- Create Schedule: With your tasks in place, you now need to estimate the time each task will take. Once you’ve figured that out, map them onto a Gantt chart, and diligently link dependencies. You can also add costs to the Gantt, and start building a budget.
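As a rough illustration of the "Collect Tasks" step, a work breakdown structure can be modelled as a nested outline and flattened into a numbered task list ready for scheduling. The structure and task names below are invented for the app example, and the `flatten_wbs` helper is hypothetical rather than part of any particular tool.

```python
def flatten_wbs(node, prefix=""):
    """Return (outline_number, name) rows for every element of a nested WBS."""
    rows = []
    for index, (name, children) in enumerate(node.items(), start=1):
        number = f"{prefix}{index}"
        rows.append((number, name))
        # Recurse into child elements, extending the outline number (1 -> 1.1 -> 1.1.1).
        rows.extend(flatten_wbs(children, prefix=f"{number}."))
    return rows


# Hypothetical WBS: each key is a deliverable or task, each value holds its children.
wbs = {
    "Mobile app": {
        "Requirements": {"Stakeholder interviews": {}, "Scope document": {}},
        "Design": {"System architecture": {}, "UI mockups": {}},
        "Implementation": {"Build login screen": {}, "Build backend API": {}},
    }
}

for number, name in flatten_wbs(wbs):
    print(f"{number:<6} {name}")
```

The numbered rows make it easy to see which tasks belong to which deliverable before estimating durations and linking dependencies.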

Implementation

Now you’re ready to get started in earnest. This is the phase in which the app will be built and tested. The system from the previous phase is first developed in smaller programs known as units. Then each goes through a unit testing process before being integrated.

- Assign Team Tasks: Team members will own their tasks and be responsible for completing them, and for collaborating with the rest of the team. You can make these tasks from a Gantt chart and add descriptions, priority, etc.

- Monitor & Track: While the team is executing the tasks, you need to monitor and track their progress in order to make sure that the project is moving forward per your schedule.

- Manage Resources & Workload: As you monitor, you’ll discover issues and will need to reallocate resources and balance workload to avoid bottlenecks.

- Report to Stakeholders: Throughout the project, stakeholders need updates to show them progress. Meet with them and discuss a regular schedule for presentations.

- Test: Once the team has delivered the working app, it must go through extensive testing to make sure everything is working as designed.

- Deliver App: After all the bugs have been worked out, you’re ready to give the finished app to the stakeholders.

System Testing and Deployment

During this phase you’ll integrate all the units of your system and conduct an integration testing process to verify that the components of your app work properly together.

Once you verify that your app is working, you’re ready to deploy it.

Verification

Though the app has been delivered, the software development life cycle is not quite over until you’ve done some administrative tasks to tie everything up. This is technically the final step.

- Pay Contracts: Fulfil your contractual obligations to your team and any freelance contractors. This releases them from the project.

- Create Template: In software like ProjectManager, you can create a template from your project, so you have a head start when beginning another, similar one.

- Close Out Paperwork: Make sure all paperwork has been rubber stamped and archived.

- Celebrate: Get everyone together, and enjoy the conclusion of a successful project!

Maintenance

Of course, the nature of any software development project is that, through use by customers, new bugs will arise and must be squashed. So, past the verification stage, it’s typically expected that you will provide maintenance beyond launch. This is an ongoing, post-launch phase that extends for as long as your contract dictates.

What Is Waterfall Project Management Software?

Waterfall project management software helps you structure your project processes from start to finish. It allows managers to organize their tasks, set up clear schedules in Gantt charts, and monitor and control the project as it moves through its phases.

A waterfall project is broken up into phases, which can be achieved on a Gantt chart in the waterfall project management software. Managers can set the duration for each task on the Gantt and link tasks that are dependent on one another to start or finish.

While waterfall software can be less flexible and iterative than more agile frameworks, projects do change frequently, so the software needs features that capture these changes in real time with dashboards and reports. That way, the manager can clear up bottlenecks or reallocate resources to keep teams from having their work blocked. Microsoft Project is one of the most commonly used project management tools, but it has major drawbacks that make ProjectManager a great alternative.

Desktop vs Online Project Management Waterfall Software

When it comes to waterfall software, you can choose either a desktop application or online, cloud-based project management software. This might not seem to be a big issue, but there are important distinctions between the two, and knowing them will help you make an informed decision.

Desktop waterfall software tends to have a more expensive up-front cost, and that cost can rise quickly if you are required to pay per-user licensing fees for every member of your team.

Online waterfall software, on the other hand, is typically paid for on a subscription basis, and that subscription is usually a tiered payment plan depending on the number of users.

Connectivity

Online software, naturally, must be connected to the internet. This means your speed and reliability can vary depending on your internet service provider. It also means that if you lose connectivity, you can’t work.

Although the difference is minor, desktop waterfall software never has to worry about connection outages.

If security is a concern, rest assured that both options are highly secure. Desktop software that operates on a company intranet is nigh impenetrable, which can provide your company with a greater sense of security.

Strides in web security, like two-factor authentication and single sign-on, have made online, cloud-based waterfall software far more secure. Online tools also save their data to the cloud, whereas a crash on a desktop installation might mean the end of your work.

Accessibility

Desktop applications are tied to the computers they are installed on or, at best, your office’s infrastructure. That doesn’t help much if you have distributed teams or work off site, in the field, at home and so on.

Online software is accessible anywhere, any time, so long as you have an internet connection. Even more importantly, it delivers real-time data, so you’re always working on the current state of the project.

Must-Have Features of Waterfall Software

Waterfall software helps to organize your projects and make them run smoothly. When you’re looking for the right software to match your needs, make sure it has the following features.

Keep Your Project Structured

Managing a project with the waterfall method is all about structure. One phase follows another. To break your project into these stages, you need an online Gantt chart that has a milestone feature. This indicates the date where one phase of the waterfall process stops and another begins.

Control Your Task and Schedule

The Gantt chart is a waterfall’s best friend. It organizes your tasks, sets the duration and links tasks that are dependent to keep work flowing later on. When scheduling, you want a Gantt that can automatically calculate your critical path to help you know how much float you have.
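To make "critical path" and "float" concrete, here is a minimal sketch of the standard forward-pass and backward-pass calculation. It assumes finish-to-start links only, durations in days, and no circular dependencies; the task names and durations are invented, and this is not how any specific Gantt tool is implemented.

```python
def critical_path(tasks):
    """tasks maps name -> (duration, [predecessor names]); finish-to-start links only.

    Returns (project_duration, total_float_by_task, critical_task_names).
    """
    # Put tasks in an order where every predecessor comes before its successors.
    order, visited = [], set()

    def visit(name):
        if name in visited:
            return
        for pred in tasks[name][1]:
            visit(pred)
        visited.add(name)
        order.append(name)

    for name in tasks:
        visit(name)

    # Forward pass: earliest start and earliest finish.
    es, ef = {}, {}
    for name in order:
        duration, preds = tasks[name]
        es[name] = max((ef[p] for p in preds), default=0)
        ef[name] = es[name] + duration
    project_duration = max(ef.values())

    # Backward pass: latest start and latest finish.
    successors = {name: [] for name in tasks}
    for name, (_, preds) in tasks.items():
        for pred in preds:
            successors[pred].append(name)
    ls, lf = {}, {}
    for name in reversed(order):
        lf[name] = min((ls[s] for s in successors[name]), default=project_duration)
        ls[name] = lf[name] - tasks[name][0]

    # Float is how long a task can slip without delaying the project.
    total_float = {name: ls[name] - es[name] for name in tasks}
    critical = [name for name in order if total_float[name] == 0]
    return project_duration, total_float, critical


# Hypothetical tasks: name -> (duration in days, predecessors)
tasks = {
    "Requirements": (5, []),
    "Design": (8, ["Requirements"]),
    "Write docs": (3, ["Requirements"]),
    "Implementation": (15, ["Design"]),
    "Testing": (6, ["Implementation", "Write docs"]),
}

duration, total_float, critical = critical_path(tasks)
print("Project duration:", duration, "days")
print("Critical path:", " -> ".join(critical))
print("Float:", total_float)
```

In this example the documentation task has 20 days of float, while every task on the critical path has none, so any slip there delays the whole project.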

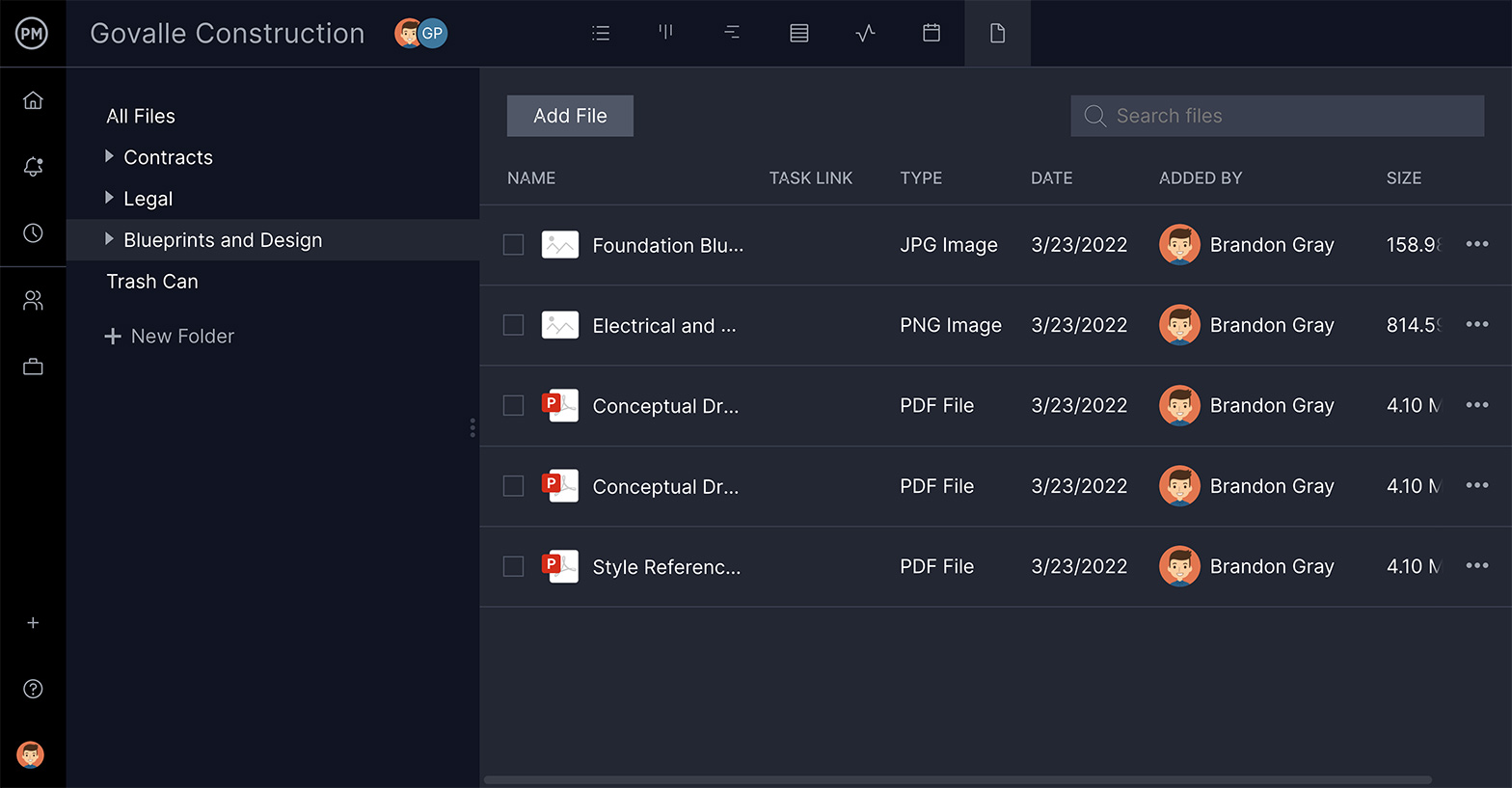

Have Your Files Organized

Waterfall projects, like all projects, collect a lot of paperwork. You want a tool with the storage capacity to hold all your documents and make them easy to find when you need them. Also, attaching files to tasks gives teams direction and helps them collaborate.

Know If You’re on Schedule

Keeping on track means having accurate information. Real-time data makes it timely, but you also need to set your baseline and have dashboard metrics and reporting to compare your actual progress to your planned progress. This makes sure you stay on schedule.
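Comparing actual progress to a baseline comes down to simple date arithmetic. The sketch below assumes each task records a baseline finish date and a current forecast finish date; the `TaskStatus` class, the task names, and the dates are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class TaskStatus:
    name: str
    baseline_finish: date   # finish date saved when the plan was approved
    forecast_finish: date   # current actual or expected finish date

    @property
    def variance_days(self) -> int:
        """Positive means late against the baseline, negative means early."""
        return (self.forecast_finish - self.baseline_finish).days


def late_tasks(statuses):
    """Return only the tasks that are running behind their baseline."""
    return [s for s in statuses if s.variance_days > 0]


statuses = [
    TaskStatus("Wireframes", date(2025, 1, 17), date(2025, 1, 17)),
    TaskStatus("API spec", date(2025, 2, 7), date(2025, 2, 12)),
    TaskStatus("Test plan", date(2025, 3, 21), date(2025, 3, 19)),
]

for status in statuses:
    print(f"{status.name:<12} variance {status.variance_days:+d} days")

print("Behind schedule:", [s.name for s in late_tasks(statuses)])
```

A dashboard metric such as schedule health is typically a roll-up of per-task differences like these.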

Get an Overview of Performance

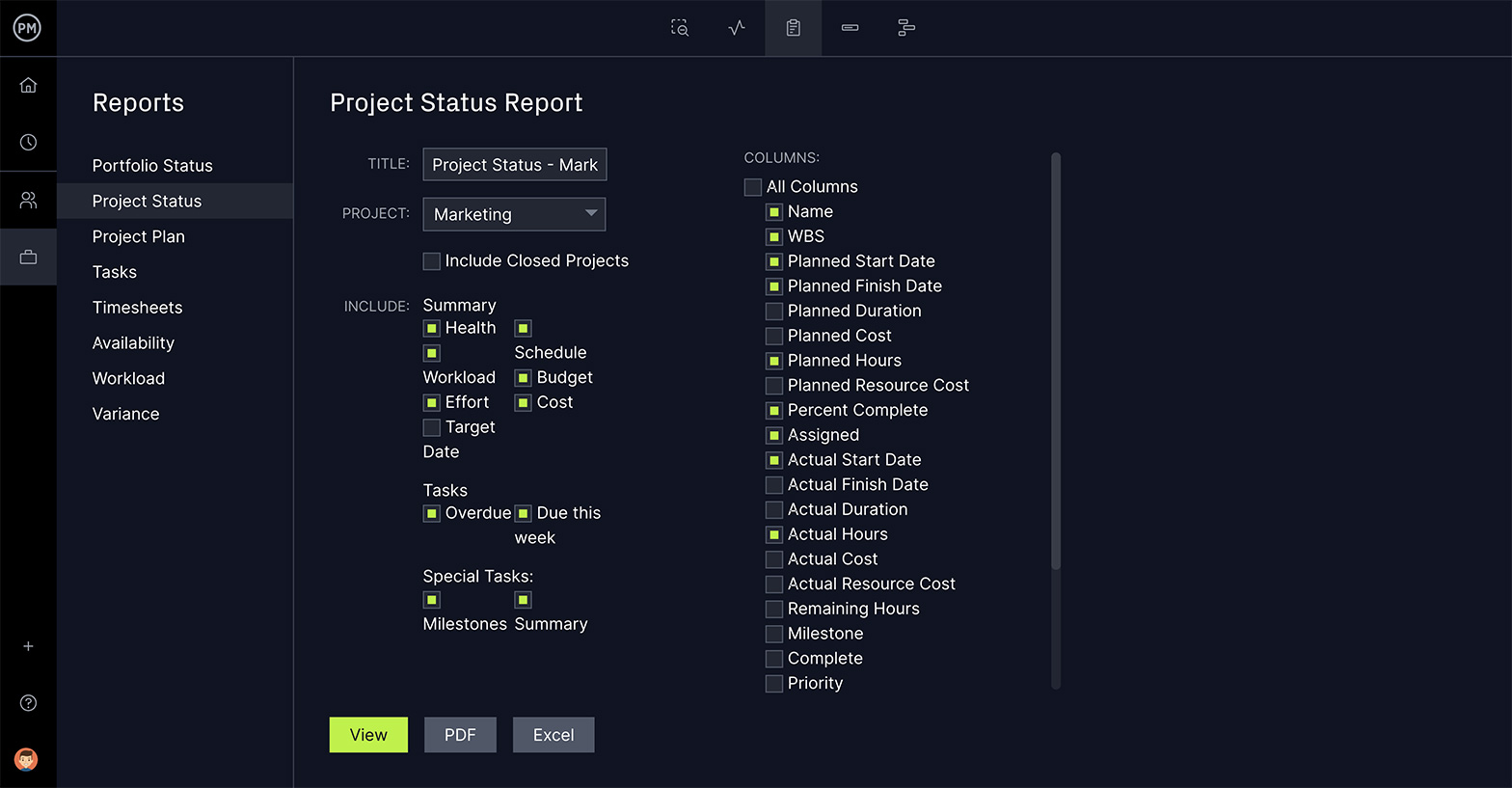

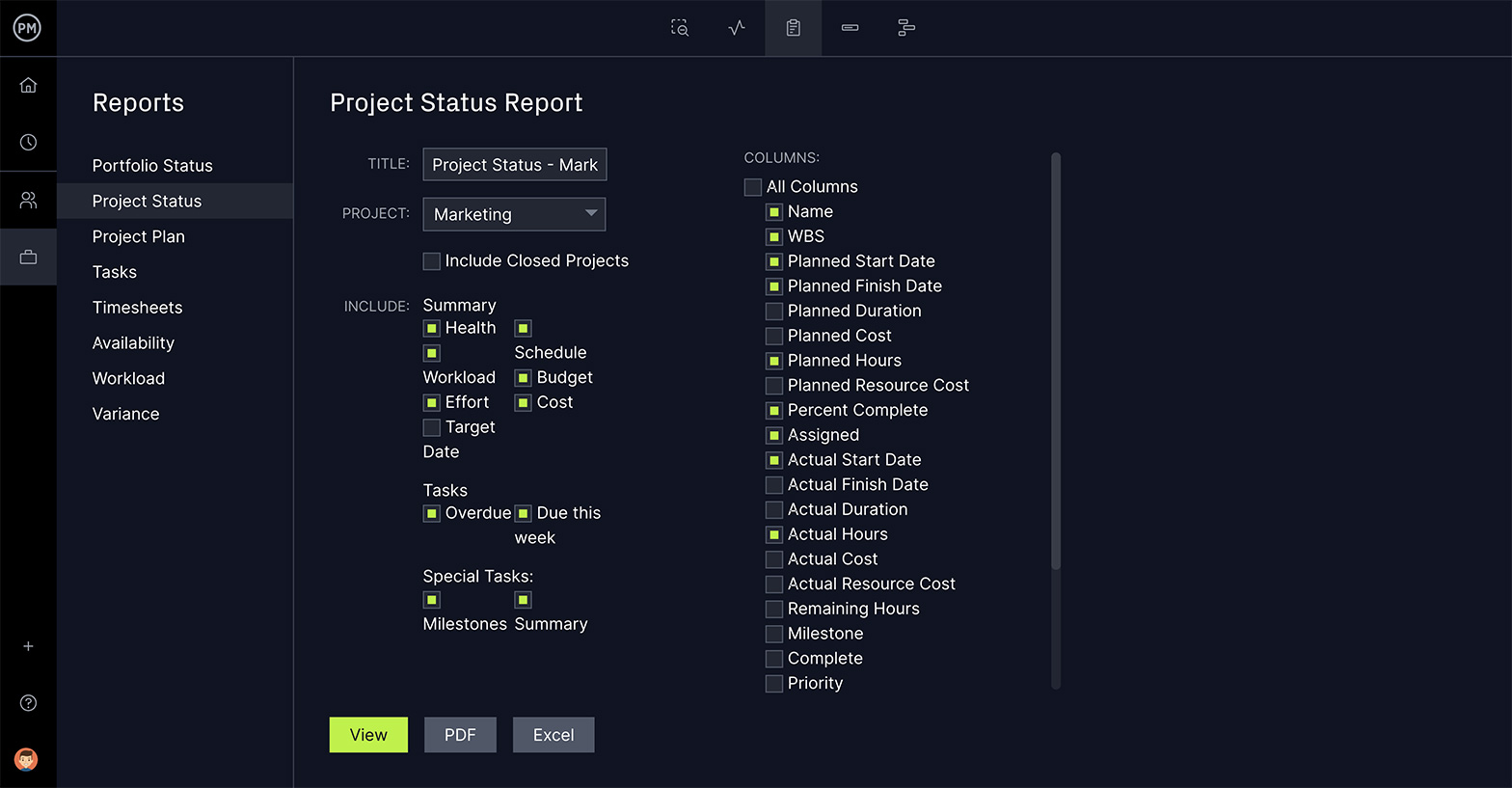

Dashboards are designed to collect data and display it over several metrics, such as overall health, workload and more. This high-level view is important, so you want to have a feature that automatically calculates this data and doesn’t require you to manually input it.

Make Data-Based Decisions

Reports dive deeper into data and get more details on a project’s progress and performance. Real-time data makes them accurate. Look for ease of use—it should only take a single click to generate and share. You’ll also want to filter the results to see only what you’re interested in.

The Waterfall Model & ProjectManager

ProjectManager is an award-winning project management software that organizes teams and projects. With features such as online Gantt charts, task lists, reporting tools and more, it’s an ideal tool to control your waterfall project management.

Sign up for a free 30-day trial and follow along to make a waterfall project in just a few easy steps. You’ll have that Gantt chart built in no time!

1. Upload Requirements & Documents

Waterfall project management guarantees one thing: a lot of paperwork. All the documentation and requirements you need to address for the project can quickly become overwhelming.

You can attach all documentation and relevant files to our software, or directly on a task. Now, all of your files are collected in one place and are easy to find. Don’t worry about running out of space—we have unlimited file storage.

2. Use a Work Breakdown Structure to Collect Tasks

Getting to your final deliverable will require many tasks. Planning the waterfall project means knowing every one of those tasks, no matter how small, and how they lead to your final deliverable. A work breakdown structure is a tool to help you figure out all those steps.

To start, use a work breakdown structure (WBS) to collect every task that is necessary to create your final deliverable. You can download a free WBS template here. Then, upload the task list to our software.

3. Open in Gantt Project View

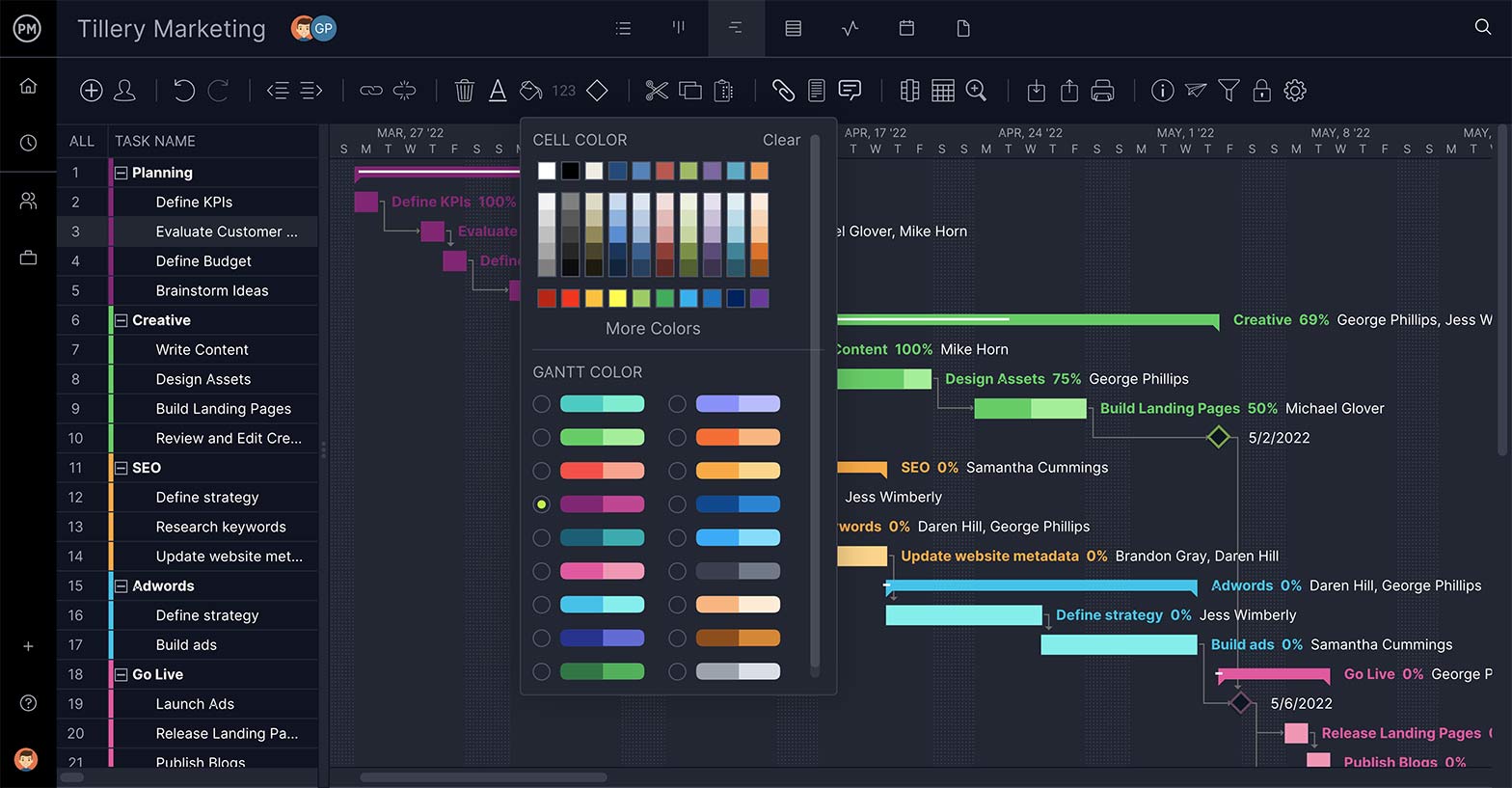

Gantt charts are essential project management tools used for planning and scheduling. They collect your tasks in one place on a timeline. From there, you can link dependencies, set milestones, manage resources and more.

In the software, open the Gantt chart view and add deadlines, descriptions, priorities and tags to each task.

4. Create Phases & Milestones

Milestones separate the major phases in a waterfall project. Waterfall methodology is all about structure and moving from one phase to the next, so breaking your project into milestones is key.

In the Gantt view, create phases and milestones to break up the project. Using the milestone feature, determine when one task ends and a new one begins. Milestones are symbolized by a diamond on the Gantt.

5. Set Dependencies in a Gantt Chart

Dependent tasks are those that cannot start or finish until another starts or finishes. They create complexities in managing any waterfall project.

Link dependent tasks in the Gantt chart. Our software allows you to link all four types of dependencies: start-to-start, start-to-finish, finish-to-finish and finish-to-start. This keeps your waterfall project plan moving forward in a sequential order and prevents bottlenecks.
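The four dependency types differ only in which end of the predecessor constrains which end of the successor. The sketch below expresses each as a rule for the successor's earliest start, using day numbers counted from the project start. The `earliest_start` function and its `lag` parameter are illustrative assumptions and do not describe how ProjectManager calculates schedules.

```python
def earliest_start(pred_start, pred_duration, succ_duration, link_type, lag=0):
    """Earliest start day for a successor task, given one dependency link.

    FS: successor starts after the predecessor finishes.
    SS: successor starts after the predecessor starts.
    FF: successor finishes after the predecessor finishes.
    SF: successor finishes after the predecessor starts.
    """
    pred_finish = pred_start + pred_duration
    if link_type == "FS":
        start = pred_finish + lag
    elif link_type == "SS":
        start = pred_start + lag
    elif link_type == "FF":
        start = pred_finish + lag - succ_duration
    elif link_type == "SF":
        start = pred_start + lag - succ_duration
    else:
        raise ValueError(f"Unknown link type: {link_type!r}")
    # A task cannot start before the project does (day 0).
    return max(0, start)


# Predecessor starts on day 10 and lasts 5 days; successor lasts 3 days.
for link in ("FS", "SS", "FF", "SF"):
    print(link, earliest_start(10, 5, 3, link))
```

Finish-to-start is the link that gives a waterfall plan its sequential shape; the other three allow controlled overlap between tasks.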

6. Assign From Gantt Charts

Although you’ve planned and scheduled a project, it’s still just an abstraction until you get your team assigned to execute those tasks. Assigning is a major step in managing your waterfall project and needs to happen efficiently.

Assign team members to tasks right from the Gantt chart. You can also attach any related images or files directly to the task. Collaboration is supported by comments at the task level. Anyone assigned or tagged will get an email alert to notify them of a comment or update.

7. Manage Resources & Workload

Resources are anything you need to complete the project. This means not only your team, but also the materials and tools that they need. The workload represents how many tasks your team is assigned, and balancing that work keeps them productive.

Keep track of project resources on the Workload view. See actual costs, and reallocate as needed to stay on budget. Know how many tasks your team is working on with easy-to-read color-coded charts, and balance their workload right on the page.

8. Track Progress in Dashboard & Gantt

Progress must be monitored to know if you’re meeting the targets you set in your waterfall method plan. The Gantt shows percentage complete, but a dashboard calculates several metrics and shows them in graphs and charts.

Monitor your project in real time and track progress across several metrics with our project dashboard. We automatically calculate project health, costs, tasks and more and then display them in a high-level view of your project. Progress is also tracked by shading on the Gantt’s duration bar.

9. Create Reports

Reporting serves two purposes: it gives project managers greater detail into the inner workings of their waterfall project to help them make better decisions, and it acts as a communication tool to keep stakeholders informed.

Easily generate data-rich reports that show project variance, timesheets, status and more. Get reports on your planned vs. actual progress. Filter to show just the information you want. Then, share with stakeholders during presentations and keep everyone in the loop.

10. Duplicate Plan for New Projects

Having a means to quickly copy projects is helpful in waterfall methodology, as it jumpstarts the next project by recreating the major steps and allowing you to make tweaks as needed.

Create templates to quickly plan any recurring waterfall projects. If you know exactly what it takes to get the project done, then you can make it into a template. Plus, you can import proven project plans from Microsoft Project (MSP), and task lists from Excel and Word.

Waterfall vs. Agile

The waterfall methodology is one of two popular methods to tackle software engineering projects; the other method is known as Agile.

It can be easier to understand waterfall when you compare it to Agile. Waterfall and Agile are two very different project management methodologies, but both are equally valid, and can be more or less useful depending on the project.

Waterfall Project Management

If the waterfall model is to be executed properly, each of the phases we outlined earlier must be executed in a linear fashion. That is, each phase has to be completed before the next phase can begin, and phases are never repeated unless a massive failure comes to light in the verification or maintenance phase.
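The strict ordering rule can be sketched as a small phase gate. The example uses the five phase names listed earlier; the `WaterfallProject` class is a hypothetical illustration and deliberately leaves out the exception for reopening a phase after a major failure.

```python
PHASES = ["Requirements", "Design", "Implementation", "Verification", "Maintenance"]


class WaterfallProject:
    """Tracks completed phases and refuses to complete them out of order."""

    def __init__(self):
        self.completed = []

    @property
    def current_phase(self):
        """The next phase to complete, or None once every phase is done."""
        if len(self.completed) == len(PHASES):
            return None
        return PHASES[len(self.completed)]

    def complete(self, phase):
        if phase != self.current_phase:
            raise ValueError(
                f"Cannot complete {phase!r}: current phase is {self.current_phase!r}"
            )
        self.completed.append(phase)


project = WaterfallProject()
project.complete("Requirements")

try:
    project.complete("Implementation")  # skips Design, so it is rejected
except ValueError as error:
    print(error)

print("Current phase:", project.current_phase)
```

Because a phase can only be completed when it is the current one, skipping ahead or repeating an earlier phase is rejected by construction.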

Furthermore, each phase is discrete, and pretty much exists in isolation from stakeholders outside of your team. This is especially true in the requirements phase. Once the customer’s requirements are collected, the customers cease to play any role in the actual waterfall software development life cycle.

Agile Project Management

The agile methodology differs greatly from the waterfall approach in two major ways: linear action and customer involvement. Agile is a nimble and iterative process, where the product is delivered in stages to the customer for them to review and provide feedback.

Instead of having everything planned out by milestones, like in waterfall, the Agile software development method operates in “sprints” where prioritized tasks are completed within a short window, typically around two weeks.

These prioritized tasks are fluid, and appear based on the success of previous sprints and customer feedback, rather than having all tasks prioritized at the onset in the requirements phase.

Understanding the Difference Between Waterfall & Agile

The important difference to remember is that a waterfall project is a fixed, linear plan. Everything is mapped out ahead of time, and customers interact only at the beginning and end of the project. The Agile method, on the other hand, is an iterative process, where new priorities and requirements are injected into the project after sprints and customer feedback sessions.

Pros & Cons of Waterfall Project Management

There are several reasons why project managers choose to use the waterfall project management methodology. Here are some benefits:

- Project requirements are agreed upon in the first phase, so planning and scheduling are simple and clear.

- With a fully laid out project schedule, you can give accurate estimates for your project cost, resources and deadlines.

- It’s easy to measure progress as you move through the waterfall model phases and hit milestones.

- Customers aren’t perpetually adding new requirements to the project, which can delay production.

Of course, there are drawbacks to using the waterfall method as well. Here are some disadvantages to this approach:

- It can be difficult for customers to articulate all of their needs at the beginning of the project.

- If the customer is dissatisfied with the product in the verification phase, it can be very costly to go back and design the code again.

- A linear project plan is rigid, and lacks flexibility for adapting to unexpected events.

Although it has its drawbacks, a waterfall project management plan is very effective when you face a familiar scenario with several knowns, or in software engineering projects where your customer knows exactly what they want at the outset.

Using project management software is a great way to get the most out of your waterfall project. You can map out the steps and link dependencies to see exactly what needs to go where.
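Linking dependencies amounts to ordering tasks so that each one starts only after everything it depends on has finished. The sketch below shows that calculation using `graphlib.TopologicalSorter` from Python's standard library (Python 3.9 or later). The `earliest_schedule` function, the task names, and the durations in days are assumptions made for the example. It does not describe how any particular Gantt chart product works.

```python
from graphlib import TopologicalSorter


def earliest_schedule(durations, depends_on):
    """Return {task: (start_day, end_day)} for a set of dependent tasks.

    durations:  {task: length in days}
    depends_on: {task: [tasks that must finish first]}
    """
    # Give every task an entry so independent tasks are scheduled too.
    graph = {task: depends_on.get(task, ()) for task in durations}
    schedule = {}
    # static_order() yields each task after all of its prerequisites.
    for task in TopologicalSorter(graph).static_order():
        start = max((schedule[dep][1] for dep in graph[task]), default=0)
        schedule[task] = (start, start + durations[task])
    return schedule


durations = {
    "requirements": 10,
    "design": 15,
    "implementation": 30,
    "verification": 10,
}
depends_on = {
    "design": ["requirements"],
    "implementation": ["design"],
    "verification": ["implementation"],
}

plan = earliest_schedule(durations, depends_on)
# plan["verification"] == (55, 65)
```

Because every phase here depends on the one before it, the schedule is a single chain ending on day 65. With a circular dependency, `TopologicalSorter` raises `graphlib.CycleError` rather than producing a schedule.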

ProjectManager is built with the waterfall methodology in mind, with a Gantt chart that can structure the project step by step. However, we have a full suite of features, including kanban boards that are great for Agile teams that need to manage their sprints.

With multiple project views, agile teams and waterfall teams can work from the same data, delivered in real time and filtered through the project view best suited to their work style. We take the waterfall methodology and bring it into the modern world.

Now that you know how to plan a waterfall project, give yourself the best tools for the job. Take a free 30-day trial and see how ProjectManager can help you plan with precision, track with accuracy and deliver your projects on time and under budget.


What Is the Waterfall Methodology?

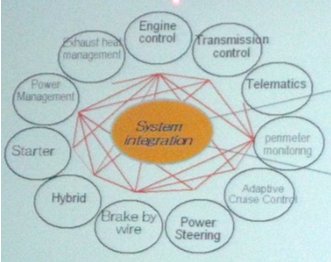

The waterfall methodology is an approach used by software and product development teams to manage projects. The methodology separates the different parts of the project into phases specifying the necessary activities and steps. For example, at the beginning of the project, the waterfall methodology focuses on gathering all requirements from stakeholders that project team members will later use to design and implement the product.

However, waterfall has its, well…downfalls, which I’ll discuss in more detail below. In short, waterfall may not be suitable for every development process and you can find modified or extended versions of the waterfall methodology that try to solve some of these issues.

One example of an extended version of the waterfall methodology is the V-model. A key distinction of the V-model from the original Waterfall methodology is its emphasis on validation and testing during the entire project duration, as opposed to only testing after an implementation phase.


What Is the Waterfall Methodology in Software Engineering?

The waterfall methodology is a software development life cycle (SDLC) model used to build software projects.

One thing that distinguishes waterfall from other SDLC models (like Agile) is that phases are performed sequentially. In other words, the project team must complete each phase in a specific order. If you look at the diagram below, you can see the flow is similar to a waterfall.

Teams working with SDLC models often use additional software to keep track of planning, tasks and more, and some tools are designed specifically to support the waterfall methodology’s workflow.

What Are the Different Phases of the Waterfall Methodology?

The waterfall methodology was one of the first established SDLC models. In fact, waterfall dates back to 1970 when Dr. Winston W. Royce described it in “Managing the Development of Large Software Systems.” However, we should note that Royce didn’t refer to the methodology as “waterfall” in the paper. The waterfall nomenclature came later. In his original paper, Royce specified the following phases.

7 Stages of the Waterfall Model

- System requirements

- Software requirements

- Analysis

- Program design

- Coding

- Testing

- Operations

The system and software requirements phases involve gathering and documenting the requirements that define the product. This process typically involves stakeholders such as the customer and project managers. The analysis phase involves analyzing the requirements to identify risks and documenting strategies.

The design phase focuses on designing architecture, business logic and concepts for the software. The design phase is followed by the coding phase which involves writing the source code for the software based on the planned design.

The testing phase concerns testing the software to ensure it meets expectations. The last phase, operations, involves deploying the application as well as planning support and maintenance.

Advantages of the Waterfall Methodology

Waterfall provides a systematic and predictable framework that helps reconcile expectations, improve planning, increase efficiency and ensure quality control. What’s more, waterfall documentation provides an entry for people outside the project to build on the software without having to rely on its creators, which is helpful if you need to bring in external assistance or implement changes to the project team.

Disadvantages of the Waterfall Methodology

The structural limitations of the waterfall methodology may introduce some problems for projects with many uncertainties. For instance, the methodology’s linear flow requires that each phase be completed before moving on to the next, which means the methodology doesn’t support revisiting and refining data based on new information that may come later in the project life cycle. A specific example of this limitation is the methodology’s focus on defining all requirements at the beginning of the project. After all, stakeholders may not know everything about the project at the very start or they may change their opinion later about what the product should actually do or what customer segment they’re trying to serve.

On the other hand, a project with well-defined and stable requirements may benefit from waterfall because it ensures the establishment and documentation of the requirements as soon as possible.

Another disadvantage of the waterfall methodology can be the late implementation of the actual software, which may result in a product not correlating with stakeholders’ expectations. For example, if the developers have misunderstood the customer’s idea about a specific feature due to poorly defined requirements, the final product will not behave as expected. Late testing can also lead to finding systemic problems too late in the project’s development when it’s more difficult to correct the design.


Waterfall Methodology vs. Agile

Another approach to software development is the Agile methodology. Agile is more flexible and open to changes than waterfall, which makes Agile more suitable for projects affected by rapid changes.

A key difference between the two methodologies is the project’s flow. While waterfall is a linear and sequential approach, Agile is an iterative and incremental approach. In practice this means that software created using Agile has development phases we perform several times with smaller chunks of implemented functionality.

The two methodologies also have different approaches to testing. The waterfall methodology tests implementation very late in the process while Agile integrates tests for each iteration.

Another key difference is the two methodologies’ approach to stakeholders. When we use waterfall, the customer doesn’t see the implemented software until quite late in the project. When we use Agile, customers have the opportunity to follow the progress along the way.

Which methodology you choose will come down to the project’s context. Stable and well-defined projects may benefit more from the waterfall methodology and other projects affected by rapid changes may benefit more from Agile.


Case Study: Mayden's Transformation from Waterfall to Scrum

Mayden is a small and successful U.K. company that develops managed Web applications for the health care sector. They specialize in flexible, cloud-based software, delivered by a team of 44 from two locations in England. Celebrating their tenth anniversary in 2014, Mayden has built a track record of delivering value to its customers with applications that have the power to change the way that services are delivered by health care staff and experienced by patients.

Given a relatively young company that focuses on innovation and flexibility, you might think that Mayden grew up embracing business agility, but that wasn't the case. The company did have a reputation for being responsive to customer needs, but it tried to execute within a traditional project management environment. CEO Chris May explains the problems that surfaced as a result of trying to be flexible in a Waterfall environment: "Our best-laid plans were continually being hijacked for short-priority developments. The end result was that we reached a point where we had started lots of things but were finishing very little." This created what Operations Director Chris Eldridge refers to as an "illusion of progress": projects were frequently assigned to only one person, so the work "often took months to complete."

From a development team standpoint, this approach created individual expertise and worked against a team environment. People were seen as specialists, and some developers had a large backlog of work while others had insufficient work, but they were unable to assist their colleagues because they didn't have that specialist knowledge. This created individual silos and led to lack of variety as well as boredom and low morale. From a company standpoint, it also led to poor skills coverage, with multiple "single points of failure" in the development team.

Ready to Change

Fortunately, Mayden recognized that the situation wasn't ideal. When an opportunity to develop a brand-new product with brand-new technology presented itself, the staff was enthusiastic about trying a new approach. While there was some discussion of hybrid project execution approaches, the decision quickly came down to using Scrum or continuing with the traditional Waterfall-based method that the organization had in place.

A number of people on the development team were interested in agile. One of them, Rob Cullingford, decided to do something about it. Without really knowing what to expect, he booked himself on a Certified ScrumMaster® (CSM) course with Paul Goddard of Agilify. At the end of the course, Cullingford was not only a CSM but a "complete convert." He presented his experience to the rest of the development team and convinced Mayden to bring Agilify and Goddard in to provide them with CSM training, with similarly positive results.

Cullingford points out that Mayden management had a vital role to play in the decision to use Scrum. "The company's management team really grasped the concepts of Scrum and had the foresight to see how it could transform the way we delivered our projects, and moved decisively," he explains. Eldridge had a background in Lean manufacturing, and he saw a number of significant similarities that helped support his quick acceptance of Scrum. He freely admits, however, that the decision was driven by the enthusiasm within the development team. "The ultimate decision to take Scrum training forward was a no-brainer," he says. "Paul [Goddard] came in to talk to us one week, and we had 20 people on the ScrumMaster training the following week." Eldridge adds that Scrum was "enthusiastically embraced by all: the managers, support team, and developers. Everyone was really keen to give it a go."

Clearly the environment at Mayden was ripe for change. There was a general recognition that the current method of executing projects wasn't working, combined with a potential solution in Scrum that all levels of the organization felt would offer tremendous benefits. However, enthusiasm for a new approach is not enough in itself; success has to come from the results, and it was here that Mayden shone.


The Benefits