Z-Test Calculator

This Z-test calculator computes results for both one-sample and two-sample Z-tests. It also provides a diagram showing the position of the Z-score and the acceptance/rejection regions. For a two-sample Z-test, the population mean difference, d, is the difference between the means of the populations from which sample one and sample two are drawn, that is, μ₁ - μ₂. To use this calculator, select the type of calculation from the tab, enter the values, and click the 'Calculate' button.

When analyzing data to make informed decisions, statistical hypothesis tests are indispensable tools for determining whether there is enough evidence to reject a prevailing assumption or theory, known as the null hypothesis. The Z-test is one of these tests. It determines whether there is a significant difference between means, either between a sample mean and a known population mean (one-sample Z-test) or between the means of two independent samples (two-sample Z-test). It assumes that the data are normally distributed (or that the sample sizes are large enough, typically >30, for the sample means to be approximately normal) and that the population standard deviations are known.

One-Sample Z-Test

The one-sample Z-test is used when you want to compare the mean of a single sample to a known population mean to see if there is a significant difference. This is particularly common in quality control and other scenarios where the standard deviation of the population is known.

- Null Hypothesis (H₀): The mean of the population from which the sample is drawn equals the known population mean μ; any difference between x̅ and μ is due to chance.

- Alternative Hypothesis (H₁): The mean of the sampled population is not equal to μ. This can also be one-tailed (greater than μ or less than μ) depending on the direction of interest.

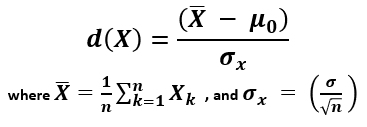

The formula for the Z-statistic in a one-sample Z-test is:

- x̅ is the sample mean

- μ is the population mean

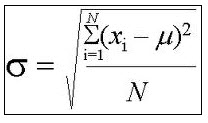

- σ is the population standard deviation

- n is the sample size

Example: Suppose a school administrator knows that the national average score on a standardized test is 500, with a standard deviation of 50. A sample of 100 students from a new teaching program scores an average of 520. To determine whether this program differs significantly from the national average:

\[ Z = \frac{520 - 500}{50 / \sqrt{100}} = \frac{20}{5} = 4 \]

This Z-value is then compared against a critical value from the Z-distribution table, typically at a 0.05 significance level. For a two-tailed test at the 0.05 significance level, the critical values are approximately ±1.96. Because the Z-value of 4 is greater than 1.96, the null hypothesis is rejected: the program's mean score is significantly different from the national average at the 0.05 significance level.
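The calculation above can be reproduced in a few lines. The sketch below is a minimal Python illustration, assuming σ is known and using the ±1.96 two-tailed critical value from the text; the function name `one_sample_z` is hypothetical, not part of this calculator.

```python
from math import sqrt


def one_sample_z(sample_mean: float, pop_mean: float, pop_sd: float, n: int) -> float:
    """Z = (x̅ - μ) / (σ / √n); σ is the known population standard deviation."""
    standard_error = pop_sd / sqrt(n)
    return (sample_mean - pop_mean) / standard_error


# School example from the text: μ = 500, σ = 50, n = 100, x̅ = 520
z = one_sample_z(sample_mean=520, pop_mean=500, pop_sd=50, n=100)
critical = 1.96  # two-tailed critical value at α = 0.05
reject = abs(z) > critical
print(f"Z = {z:.2f}; reject H0: {reject}")
```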

Two-Sample Z-Test

The two-sample Z-test (or independent samples Z-test) compares the means from two independent groups to determine if there is a statistically significant difference between them.

- Null Hypothesis (H₀): The two population means differ by d (μ₁ - μ₂ = d). If d is 0, the null hypothesis states that the two population means are equal (μ₁ = μ₂).

- Alternative Hypothesis (H₁): The difference between the two population means is not d (μ₁ - μ₂ ≠ d); it can also be directional (μ₁ - μ₂ > d or μ₁ - μ₂ < d). If d is 0, the alternative hypothesis becomes μ₁ ≠ μ₂, or μ₁ > μ₂ or μ₁ < μ₂ if it is directional.

The formula for calculating the Z-statistic in a two-sample Z-test is:

\[ Z = \frac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{\sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}}} \]

- x̅₁ and x̅₂ are the sample means of groups 1 and 2, respectively

- μ₁ and μ₂ are the population means, with μ₁ - μ₂ = d; d is often hypothesized to be zero under the null hypothesis

- σ₁ and σ₂ are the population standard deviations

- n₁ and n₂ are the sample sizes of the two groups

Example: Consider two groups of employees from different branches of a company undergoing training. Group A has 50 employees with an average score of 80 and a standard deviation of 10, and Group B has 50 employees with an average score of 75 and a standard deviation of 12. To test whether there is a significant difference (d = 0):

\[ Z = \frac{(80 - 75) - 0}{\sqrt{\frac{10^2}{50} + \frac{12^2}{50}}} = \frac{5}{\sqrt{4.88}} \approx 2.26 \]

This Z-value is then compared to the critical Z-values to assess significance. The critical values for a two-tailed test at the 0.05 significance level are approximately ±1.96. Because the Z-value of 2.26 is greater than 1.96, the difference between the two groups is significant at the 0.05 significance level.
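A similar minimal sketch for the two-sample case, assuming (as the example does) that the reported standard deviations can be treated as known population values; `two_sample_z` is a hypothetical helper name.

```python
from math import sqrt


def two_sample_z(mean1, mean2, sd1, sd2, n1, n2, d=0.0):
    """Z = ((x̅1 - x̅2) - d) / sqrt(σ1²/n1 + σ2²/n2), with σ1 and σ2 treated as known."""
    standard_error = sqrt(sd1**2 / n1 + sd2**2 / n2)
    return ((mean1 - mean2) - d) / standard_error


# Training example: group A (n=50, mean 80, sd 10) vs group B (n=50, mean 75, sd 12)
z = two_sample_z(80, 75, 10, 12, 50, 50)
print(f"Z = {z:.2f}")
print("Significant at α = 0.05" if abs(z) > 1.96 else "Not significant at α = 0.05")
```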

Significance Level

The significance level (α) is a critical concept in hypothesis testing. It is the probability threshold for the p-value: if the p-value is below α, the null hypothesis is rejected. Equivalently, α is the probability of rejecting the null hypothesis when it is actually true. Common levels are 0.05 (5%) and 0.01 (1%). The choice of α determines the Z-critical value, against which the computed Z-score is compared to decide whether to reject the null hypothesis.

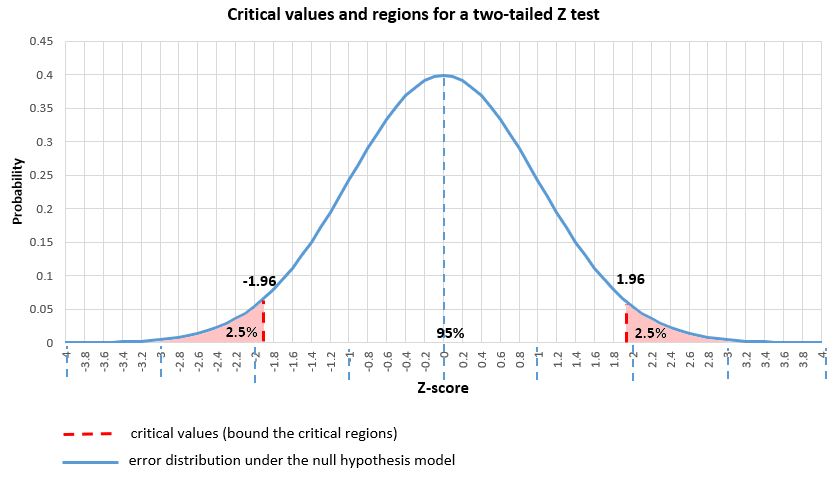

- Critical Value: This is the point on the Z-distribution beyond which the test statistic must fall to reject the null hypothesis. For instance, at a 5% significance level in a two-tailed test, the critical values are approximately ±1.96. A significance level (probability) can be converted to a critical value (Z-score), and vice versa, using a Z-distribution table or our Z/P converter.
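Converting between a significance level and a critical Z value is an inverse-CDF lookup on the standard normal distribution. A minimal sketch using Python's standard-library `statistics.NormalDist` (an assumption for illustration; the Z/P converter itself may be implemented differently):

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def critical_z(alpha: float, two_tailed: bool = True) -> float:
    """Positive critical value c such that the rejection region has total probability alpha."""
    tail_area = alpha / 2 if two_tailed else alpha
    return STANDARD_NORMAL.inv_cdf(1 - tail_area)


def p_from_z(z: float, two_tailed: bool = True) -> float:
    """Convert an observed Z-score back to a p-value (upper tail for the one-tailed case)."""
    upper = 1 - STANDARD_NORMAL.cdf(abs(z) if two_tailed else z)
    return 2 * upper if two_tailed else upper


for alpha in (0.10, 0.05, 0.01):
    print(alpha, round(critical_z(alpha), 3), round(critical_z(alpha, two_tailed=False), 3))
```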

Using the above examples, if the absolute value of a computed Z-score exceeds the respective critical value, the null hypothesis in that case is rejected, indicating a statistically significant difference as stated by the alternative hypothesis. These examples demonstrate how the Z-test is applied in different scenarios to test hypotheses about population means.

Critical Value Calculator

Use this critical value calculator to convert a significance level to its corresponding Z value, T score, F-score, or Chi-square value. It also outputs the critical region. The tool supports both one-tailed and two-tailed significance tests / probability values.


Using the critical value calculator

If you want to perform a statistical test of significance (a.k.a. significance test, statistical significance test), you need to determine the value of the test statistic that corresponds to the desired significance level. For that, you need the desired error probability (the p-value threshold; common values are 0.05, 0.01 and 0.001) that defines the significance level of the test. If you know the confidence level as a percentage, simply subtract it from 100%. For example, a 95% confidence level corresponds to a probability of 100% - 95% = 5% = 0.05.

Then you need to know the shape of the error distribution of the statistic of interest (not to be confused with the distribution of the underlying data!). Our critical value calculator supports statistics which are either:

- Z-distributed (normally distributed, e.g. absolute difference of means)

- T-distributed (Student's T distribution, usually appropriate for small sample sizes; approximately equivalent to the normal for sample sizes over 30)

- Χ²-distributed (Chi-square distribution, often used in goodness-of-fit tests, but also for tests of homogeneity or independence)

- F-distributed (Fisher-Snedecor distribution), usually used in analysis of variance (ANOVA)

Then, for distributions other than the normal one (Z), you need to know the degrees of freedom. For the F statistic there are two separate degrees of freedom: one for the numerator and one for the denominator.

Finally, to determine a critical region, you need to know whether you are testing a point null against a composite alternative covering both sides of the distribution, or a composite null covering one side of the distribution against a composite alternative covering the other side. Basically, it comes down to whether the inference will contain claims about the direction of the effect. If you want to claim anything about the direction of the effect, the corresponding null hypothesis is directional as well (a one-sided hypothesis).

Depending on the type of test, one-tailed or two-tailed, the calculator will output the critical value or values and the corresponding critical region. For one-sided tests it will output both possible regions, whereas for a two-sided test it will output the union of the two critical regions on opposite sides of the distribution.

What is a critical value?

A critical value (or values) is a point on the support of an error distribution that bounds a critical region from above or below. If the statistic falls below or above a critical value (depending on the type of hypothesis, but always inside the critical region), the test is declared statistically significant at the corresponding significance level. For example, in a two-tailed Z test with critical values -1.96 and 1.96 (corresponding to the 0.05 significance level), the critical regions are from -∞ to -1.96 and from 1.96 to +∞. Therefore, if the statistic falls below -1.96 or above 1.96, the test is statistically significant and the null hypothesis is rejected.
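To make the definition concrete, the sketch below builds the two-tailed critical regions for α = 0.05 and checks that, when H₀ is true, a standard normal statistic lands in them with probability α. It uses Python's `statistics.NormalDist`; the helper names are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # error distribution of a Z statistic under H0


def two_tailed_regions(alpha: float):
    """Critical regions (-inf, -c) and (c, +inf) for a two-tailed Z test at level alpha."""
    c = STD_NORMAL.inv_cdf(1 - alpha / 2)
    return [(float("-inf"), -c), (c, float("inf"))]


def is_significant(stat: float, regions) -> bool:
    """True if the statistic falls strictly inside any critical region."""
    return any(low < stat < high for low, high in regions)


regions = two_tailed_regions(0.05)
# Probability that the statistic lands in the critical regions when H0 is true
prob_under_h0 = STD_NORMAL.cdf(regions[0][1]) + (1 - STD_NORMAL.cdf(regions[1][0]))
print(regions, round(prob_under_h0, 4))
print(is_significant(2.5, regions), is_significant(-1.5, regions))
```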

You can think of the critical value as a cutoff point beyond which events are considered rare enough to count as evidence against the specified null hypothesis. It is the value that a distance function exceeds with probability equal to or less than the significance level under the specified null hypothesis. In an error-probabilistic framework, a proper distance function based on a test statistic takes the generic form [1]:

\[ d(X) = \frac{\bar{X} - \mu_0}{\sigma_{\bar{x}}} \]

where \(\bar{X}\) (read "X bar") is the observed mean (e.g. the treatment group mean), \(\mu_0\) is the mean of the population baseline or the control, and \(\sigma_{\bar{x}}\) is the standard error of the mean (SEM, the standard deviation of the sampling distribution of the mean).

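In practice, when the error is normally distributed (Z distribution), with one-tailed null and alternative hypotheses and a significance level α set to 0.05, the critical region is Z > 1.645 (or Z < -1.645 when the alternative points to the lower tail).

When the same significance level is applied to a point null and a two-tailed alternative hypothesis, the 0.05 is split between the two tails, and the critical regions are Z < -1.96 and Z > 1.96.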

The distance function varies depending on the distribution of the error: Z, T, F, or Chi-square (Χ²). Calculating a particular critical value from a supplied probability and error distribution is a matter of evaluating the inverse cumulative distribution function (inverse CDF, also called the quantile function) of the respective distribution. This can be a difficult task, most notably for the T distribution [2].
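When no closed-form inverse is available, one generic (if slow) approach is to invert the CDF numerically. The sketch below uses bisection, assuming a continuous, increasing CDF on the search interval; it is applied to the standard normal only so that the result can be checked against `NormalDist.inv_cdf`. It is an illustration, not necessarily how this calculator computes its values.

```python
from statistics import NormalDist


def inverse_cdf(cdf, p: float, lo: float = -50.0, hi: float = 50.0, tol: float = 1e-10) -> float:
    """Find x with cdf(x) = p by bisection; assumes cdf is continuous and increasing on [lo, hi]."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if cdf(mid) < p:
            lo = mid  # root lies to the right of mid
        else:
            hi = mid  # root lies at or to the left of mid
    return (lo + hi) / 2


# One-tailed critical value at α = 0.05: solve cdf(x) = 0.95 for the standard normal
x = inverse_cdf(NormalDist().cdf, 0.95)
print(round(x, 4), round(NormalDist().inv_cdf(0.95), 4))
```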

T critical value calculation

The T-distribution is often preferred in the social sciences, psychiatry, economics, and other sciences where small sample sizes are common. Certain clinical studies also fall under this umbrella. With small samples, the T-distribution accounts for the extra uncertainty that comes from estimating the population standard deviation from the sample; for sample sizes over 30 it is practically equivalent to the normal distribution, which is easier to work with. It was proposed by William Gosset, a.k.a. Student, in 1908 [3], which is why it is also referred to as "Student's T distribution".

To find the critical t value, one needs to compute the inverse cumulative distribution function (inverse CDF) of the T distribution. To do that, the significance level and the degrees of freedom need to be known. The degrees of freedom represent the number of values in the final calculation of a statistic that are free to vary while the statistic remains fixed at a certain value.

It should be noted that there is not, in fact, a single T-distribution: there are infinitely many T-distributions, one for each number of degrees of freedom. Key values of the T-distribution with 1 degree of freedom, for a one-tailed T test, are often used as critical values to define rejection regions in hypothesis testing.
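For 1 degree of freedom, the T distribution coincides with the standard Cauchy distribution, whose inverse CDF has the closed form tan(π(p - 0.5)). That makes the one-tailed critical values easy to sketch without a statistics library; the α values below are chosen for illustration.

```python
from math import pi, tan


def t_critical_df1(alpha: float) -> float:
    """One-tailed (upper) critical t value for 1 degree of freedom.

    With 1 degree of freedom the T distribution is the standard Cauchy
    distribution, whose inverse CDF at p is tan(pi * (p - 0.5)); here p = 1 - alpha.
    """
    return tan(pi * (0.5 - alpha))


for alpha in (0.10, 0.05, 0.025, 0.01, 0.005):
    print(f"alpha = {alpha:<6} t = {t_critical_df1(alpha):.3f}")
```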

Z critical value calculation

The Z-score is a statistic showing how many standard deviations a given observation is away from a reference value, usually the mean. It is also called a standard score, z-value, normal score, or standardized variable. A Z critical value is a particular cutoff in the error distribution of a normally distributed statistic.

Z critical values are computed using the inverse cumulative distribution function of the standard normal distribution, which has a mean (μ) of zero and a standard deviation (σ) of one. Commonly encountered significance levels and their one-tailed Z critical values include 0.05 (1.645), 0.025 (1.960), 0.01 (2.326) and 0.005 (2.576).

The critical region defined by each of these would span from the Z value to plus infinity for the right-tailed case, and from minus infinity to minus the Z critical value in the left-tailed case. Our calculator for critical value will both find the critical z value(s) and output the corresponding critical regions for you.

Chi Square (Χ²) critical value calculation

Chi-square distributed errors are commonly encountered in goodness-of-fit tests and homogeneity tests, but also in tests for independence in contingency tables. Since the distribution is based on sums of squared scores, it takes only non-negative values. Converting a desired probability (significance) to a chi-square critical value requires calculating the inverse cumulative distribution function of the distribution.

Just like the T and F distributions, there is a different chi-square distribution for each number of degrees of freedom. Hence, to calculate a Χ² critical value, one needs to supply the degrees of freedom for the statistic of interest.
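Two chi-square special cases have closed forms that make a useful sanity check: with 1 degree of freedom a Χ² variable is the square of a standard normal variable, and with 2 degrees of freedom its upper-tail probability is exp(-x/2). The sketch below covers only these cases; for general degrees of freedom a library routine such as SciPy's `scipy.stats.chi2.ppf(1 - alpha, df)` would be needed.

```python
from math import log
from statistics import NormalDist


def chi2_critical_df1(alpha: float) -> float:
    """Upper-tail critical value for 1 df: a chi-square(1) variable is Z², so P(Z² > c) = alpha."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return z * z


def chi2_critical_df2(alpha: float) -> float:
    """Upper-tail critical value for 2 df: the survival function is exp(-x/2), so c = -2 ln(alpha)."""
    return -2 * log(alpha)


print(round(chi2_critical_df1(0.05), 3), round(chi2_critical_df2(0.05), 3))
```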

F critical value calculation

F distributed errors are commonly encountered in analysis of variance (ANOVA), which is very common in the social sciences. The distribution, also referred to as the Fisher-Snedecor distribution, only takes positive values, similar to the Χ² distribution. As with the T distribution, there is no single F distribution to speak of: a different F distribution is defined for each pair of degrees of freedom, one for the numerator and one for the denominator.

Converting a desired probability (significance) to a critical value requires calculating the inverse cumulative distribution function of the F distribution specified by the two degrees of freedom. There is no simple closed-form solution for an F critical value, and while tables exist, using a calculator is the preferred approach nowadays.

References

[1] Mayo D.G., Spanos A. (2010). "Error Statistics", in P. S. Bandyopadhyay & M. R. Forster (Eds.), Philosophy of Statistics (7, 152–198). Handbook of the Philosophy of Science. The Netherlands: Elsevier.

[2] Shaw T.W. (2006). "Sampling Student's T distribution – use of the inverse cumulative distribution function", Journal of Computational Finance 9(4):37-73. DOI:10.21314/JCF.2006.150

[3] "Student" [William Sealy Gosset] (1908). "The probable error of a mean", Biometrika 6(1):1–25. DOI:10.1093/biomet/6.1.1

Cite this calculator & page

If you'd like to cite this online calculator resource and information as provided on the page, you can use the following citation: Georgiev G.Z., "Critical Value Calculator" , [online] Available at: https://www.gigacalculator.com/calculators/critical-value-calculator.php URL [Accessed Date: 19 May, 2024].


Hypothesis Testing for Means & Proportions


Hypothesis Testing: Upper-, Lower- and Two-Tailed Tests


The procedure for hypothesis testing is based on the ideas described above. Specifically, we set up competing hypotheses, select a random sample from the population of interest and compute summary statistics. We then determine whether the sample data supports the null or alternative hypotheses. The procedure can be broken down into the following five steps.

- Step 1. Set up hypotheses and select the level of significance α.

H₀: Null hypothesis (no change, no difference);

H₁: Research hypothesis (investigator's belief); α = 0.05

- Step 2. Select the appropriate test statistic.

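The test statistic is a single number that summarizes the sample information. An example of a test statistic is the Z statistic, computed as follows:

\[ Z = \frac{\bar{x} - \mu_0}{s / \sqrt{n}} \]

where x̅ is the sample mean, μ₀ is the mean under the null hypothesis, s is the sample standard deviation and n is the sample size.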

When the sample size is small, we will use t statistics (just as we did when constructing confidence intervals for small samples). As we present each scenario, alternative test statistics are provided along with conditions for their appropriate use.

- Step 3. Set up decision rule.

The decision rule is a statement that tells under what circumstances to reject the null hypothesis. The decision rule is based on specific values of the test statistic (e.g., reject H₀ if Z > 1.645). The decision rule for a specific test depends on 3 factors: the research or alternative hypothesis, the test statistic and the level of significance. Each is discussed below.

- The decision rule depends on whether an upper-tailed, lower-tailed, or two-tailed test is proposed. In an upper-tailed test the decision rule has investigators reject H₀ if the test statistic is larger than the critical value. In a lower-tailed test the decision rule has investigators reject H₀ if the test statistic is smaller than the critical value. In a two-tailed test the decision rule has investigators reject H₀ if the test statistic is extreme, either larger than an upper critical value or smaller than a lower critical value.

- The exact form of the test statistic is also important in determining the decision rule. If the test statistic follows the standard normal distribution (Z), then the decision rule will be based on the standard normal distribution. If the test statistic follows the t distribution, then the decision rule will be based on the t distribution. The appropriate critical value will be selected from the t distribution again depending on the specific alternative hypothesis and the level of significance.

- The third factor is the level of significance. The level of significance which is selected in Step 1 (e.g., α =0.05) dictates the critical value. For example, in an upper tailed Z test, if α =0.05 then the critical value is Z=1.645.

The rejection regions defined by the decision rule for upper-, lower- and two-tailed Z tests with α = 0.05 lie in the upper tail, the lower tail, and both tails of the standard normal curve, respectively. The decision rules are:

Rejection Region for Upper-Tailed Z Test (H₁: μ > μ₀) with α = 0.05

The decision rule is: Reject H₀ if Z > 1.645.

Rejection Region for Lower-Tailed Z Test (H₁: μ < μ₀) with α = 0.05

The decision rule is: Reject H₀ if Z < -1.645.

Rejection Region for Two-Tailed Z Test (H₁: μ ≠ μ₀) with α = 0.05

The decision rule is: Reject H₀ if Z < -1.960 or if Z > 1.960.
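The three decision rules can be sketched as a single function. This is a minimal Python illustration using `statistics.NormalDist` to look up the critical values; the function name and the `tail` labels are hypothetical.

```python
from statistics import NormalDist


def z_test_decision(z: float, alpha: float = 0.05, tail: str = "two") -> bool:
    """Return True if H0 is rejected. tail is 'upper', 'lower' or 'two'."""
    std_normal = NormalDist()
    if tail == "upper":
        return z > std_normal.inv_cdf(1 - alpha)  # Z > 1.645 at α = 0.05
    if tail == "lower":
        return z < std_normal.inv_cdf(alpha)  # Z < -1.645 at α = 0.05
    if tail == "two":
        return abs(z) > std_normal.inv_cdf(1 - alpha / 2)  # |Z| > 1.960 at α = 0.05
    raise ValueError("tail must be 'upper', 'lower' or 'two'")


print(z_test_decision(2.38, tail="upper"))
print(z_test_decision(-1.70, tail="lower"), z_test_decision(-1.70, tail="two"))
```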

Complete tables of critical values of Z and of t for upper, lower and two-tailed tests are available in standard Z and t tables.

- Step 4. Compute the test statistic.

Here we compute the test statistic by substituting the observed sample data into the test statistic identified in Step 2.

- Step 5. Conclusion.

The final conclusion is made by comparing the test statistic (which is a summary of the information observed in the sample) to the decision rule. The final conclusion will be either to reject the null hypothesis (because the sample data are very unlikely if the null hypothesis is true) or not to reject the null hypothesis (because the sample data are not very unlikely).

If the null hypothesis is rejected, an exact significance level is then computed: the probability of observing sample data at least as extreme as those observed, assuming that the null hypothesis is true. This exact level of significance is called the p-value, and it is less than the chosen level of significance whenever we reject H₀.

Statistical computing packages provide exact p-values as part of their standard output for hypothesis tests. In fact, when using a statistical computing package, the steps outlined above can be abbreviated. The hypotheses (Step 1) should always be set up in advance of any analysis, and the significance criterion should also be determined in advance (e.g., α = 0.05). Statistical computing packages will produce the test statistic (usually reporting it as t) and a p-value. The investigator can then determine statistical significance using the rule: if p < α, reject H₀.

- Step 1. Set up hypotheses and determine level of significance

H₀: μ = 191; H₁: μ > 191; α = 0.05

The research hypothesis is that weights have increased, and therefore an upper tailed test is used.

- Step 2. Select the appropriate test statistic.

Because the sample size is large (n > 30), the appropriate test statistic is

\[ Z = \frac{\bar{x} - \mu_0}{s / \sqrt{n}} \]

- Step 3. Set up decision rule.

In this example, we are performing an upper-tailed test (H₁: μ > 191) with a Z test statistic and selected α = 0.05. Reject H₀ if Z > 1.645.

- Step 4. Compute the test statistic.

Substituting the sample data into the formula for the test statistic identified in Step 2 gives Z = 2.38.

- Step 5. Conclusion.

We reject H₀ because 2.38 > 1.645. We have statistically significant evidence at α = 0.05 to show that the mean weight of men in 2006 is more than 191 pounds. Because we rejected the null hypothesis, we now approximate the p-value, which is the probability of observing sample data at least as extreme as ours if the null hypothesis is true. An alternative definition of the p-value is the smallest level of significance at which we can still reject H₀.

In this example, we observed Z = 2.38, and for α = 0.05 the critical value was 1.645. Because 2.38 exceeded 1.645, we rejected H₀, and in our conclusion we reported a statistically significant increase in mean weight at a 5% level of significance. Using the table of critical values for upper-tailed tests, we can approximate the p-value. If we select α = 0.025, the critical value is 1.960, and we still reject H₀ because 2.38 > 1.960. If we select α = 0.010, the critical value is 2.326, and we still reject H₀ because 2.38 > 2.326. However, if we select α = 0.005, the critical value is 2.576, and we cannot reject H₀ because 2.38 < 2.576. Therefore, the smallest tabulated α at which we still reject H₀ is 0.010, so the p-value lies between 0.005 and 0.010. A statistical computing package would produce a more precise p-value; here we are approximating it and would report p < 0.010.
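A statistical computing package would compute the exact p-value instead of bracketing it with a table. A minimal sketch with Python's `statistics.NormalDist`, using only the observed Z = 2.38 from the weight example:

```python
from statistics import NormalDist

z_observed = 2.38  # test statistic from the weight example
p_value = 1 - NormalDist().cdf(z_observed)  # upper-tailed: P(Z >= 2.38 | H0 true)
print(f"p = {p_value:.4f}")
print("Reject H0" if p_value < 0.05 else "Do not reject H0")
```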

In all tests of hypothesis, there are two types of errors that can be committed. The first is called a Type I error and refers to the situation where we incorrectly reject H₀ when in fact it is true. This is also called a false positive result (as we incorrectly conclude that the research hypothesis is true when in fact it is not). When we run a test of hypothesis and decide to reject H₀ (e.g., because the test statistic exceeds the critical value in an upper-tailed test), then either we make a correct decision because the research hypothesis is true or we commit a Type I error. The different conclusions are summarized in the table below. Note that we will never know whether the null hypothesis is really true or false (i.e., we will never know which row of the following table reflects reality).

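Table - Conclusions in Test of Hypothesis

| | Do Not Reject H₀ | Reject H₀ |
|---|---|---|
| H₀ is True | Correct Decision | Type I Error |
| H₀ is False | Type II Error | Correct Decision |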

In the first step of the hypothesis test, we select a level of significance, α, and α = P(Type I error). Because we purposely select a small value for α, we control the probability of committing a Type I error. For example, if we select α = 0.05, then whenever H₀ is actually true there is only a 5% probability that the test will (incorrectly) reject it. Because this risk is kept small, investigators generally treat a rejection of H₀ as evidence in favor of the research hypothesis.

When we run a test of hypothesis and decide not to reject H₀ (e.g., because the test statistic is below the critical value in an upper-tailed test), then either we make a correct decision because the null hypothesis is true or we commit a Type II error. Beta (β) represents the probability of a Type II error and is defined as follows: β = P(Type II error) = P(Do not reject H₀ | H₀ is false). Unfortunately, we cannot choose β to be small (e.g., 0.05) to control the probability of committing a Type II error, because β depends on several factors including the sample size, α, and the research hypothesis. When we do not reject H₀, it may be very likely that we are committing a Type II error (i.e., failing to reject H₀ when in fact it is false). Therefore, when tests are run and the null hypothesis is not rejected, we often make a weak concluding statement allowing for the possibility that we might be committing a Type II error. If we do not reject H₀, we conclude that we do not have significant evidence to show that H₁ is true. We do not conclude that H₀ is true.

The most common reason for a Type II error is a small sample size.
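The link between sample size and Type II errors can be illustrated for an upper-tailed one-sample Z test: if the true mean is μ₁ rather than μ₀, the Z statistic is shifted by (μ₁ - μ₀)√n/σ, and β is the probability that it still falls below the critical value. The numbers below (H₀ mean 500, true mean 510, σ = 50) are hypothetical, chosen only to show β shrinking as n grows.

```python
from math import sqrt
from statistics import NormalDist

N01 = NormalDist()


def type_ii_error(mu0, mu1, sigma, n, alpha=0.05):
    """β for an upper-tailed one-sample Z test of H0: μ = mu0 when the true mean is mu1 > mu0."""
    z_crit = N01.inv_cdf(1 - alpha)
    shift = (mu1 - mu0) * sqrt(n) / sigma  # mean of the Z statistic under the true mean
    return N01.cdf(z_crit - shift)  # P(Z stays below the critical value | true mean mu1)


for n in (25, 100, 400):
    print(n, round(type_ii_error(500, 510, 50, n), 3))
```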


Content ©2017. All Rights Reserved. Date last modified: November 6, 2017. Wayne W. LaMorte, MD, PhD, MPH


Single Sample Z Score Calculator

This tool calculates the z-score of the mean of a single sample. It can be used to judge whether the sample differs significantly, on the variable being measured, from the population from which it was originally drawn.

By default, this tool works on the assumption that you already know the mean value of your sample scores and the number of individuals in your sample. If you want the tool to calculate these for you from raw values, please select the checkbox below.

To use this calculator, just input your population mean, population variance, sample mean and the number of individuals in the sample into the text boxes below. You also need to select a significance level and whether your hypothesis is one or two-tailed. Hit the calculate button (below) when you're ready.
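When raw values are supplied instead of a summary mean, the calculation amounts to averaging the values first. A minimal sketch under stated assumptions: the population variance is known, the one-tailed option tests the upper tail only, and the data and the `single_sample_z` name are hypothetical, not this tool's actual implementation.

```python
from math import sqrt
from statistics import NormalDist, fmean


def single_sample_z(values, pop_mean, pop_variance, alpha=0.05, two_tailed=True):
    """Z-score of the mean of raw sample values, given the population mean and variance.

    Returns (z, critical value, significant?). The one-tailed case here
    assumes an upper-tailed hypothesis (sample mean above the population mean).
    """
    n = len(values)
    sample_mean = fmean(values)
    z = (sample_mean - pop_mean) / sqrt(pop_variance / n)
    tail_area = alpha / 2 if two_tailed else alpha
    critical = NormalDist().inv_cdf(1 - tail_area)
    significant = abs(z) > critical if two_tailed else z > critical
    return z, critical, significant


# Hypothetical raw scores, population mean 12 and population variance 4
z, critical, significant = single_sample_z([12, 14, 11, 15, 13], pop_mean=12, pop_variance=4)
print(f"z = {z:.3f}, critical = ±{critical:.3f}, significant: {significant}")
```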


Z-test for Two Proportions

Instructions: This calculator conducts a Z-test for two population proportions (\(p_1\) and \(p_2\)). Please select the null and alternative hypotheses, type the significance level, the sample sizes, and the number of favorable cases (or the sample proportions), and the results of the z-test will be displayed for you.

When Do You Use a Z-test for Two Proportions?

More about the z-test for two proportions, so you can better understand the results yielded by this solver: A z-test for two proportions is a hypothesis test that attempts to make a claim about the population proportions \(p_1\) and \(p_2\). Specifically, we are interested in assessing whether or not it is reasonable to claim that \(p_1 = p_2\), using sample information. The z-test for two proportions has two non-overlapping hypotheses, the null and the alternative hypothesis.

What are the null and alternative hypotheses for the z-test for two proportions?

The null hypothesis is a statement about the population parameters that indicates no effect, and the alternative hypothesis is the complementary hypothesis to the null hypothesis. The main properties of a two-sample z-test for two population proportions are:

- Depending on the direction of the alternative hypothesis, the z-test can be two-tailed, left-tailed or right-tailed

- The main principle of hypothesis testing is that the null hypothesis is rejected if the test statistic obtained is sufficiently unlikely under the assumption that the null hypothesis is true

- The p-value is the probability of obtaining sample results as extreme or more extreme than the sample results obtained, under the assumption that the null hypothesis is true

- In a hypothesis test there are two types of errors. A Type I error occurs when we reject a true null hypothesis, and a Type II error occurs when we fail to reject a false null hypothesis

What is the z-test formula in this case?

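The formula for a z-statistic for two population proportions is

\[ z = \frac{\hat p_1 - \hat p_2}{\sqrt{\bar p (1 - \bar p) \left( \frac{1}{n_1} + \frac{1}{n_2} \right)}} \]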

where \(\hat p_1 = X_1/n_1\) and \(\hat p_2 = X_2/n_2\) are the sample proportions, and \(\bar p = \frac{X_1+X_2}{n_1+n_2}\) is the pooled proportion. Notice that the denominator of the z test for proportions uses something like our "best guess" of the common population proportion, based on the information from both samples and assuming that the null hypothesis of equal proportions is true. The null hypothesis is rejected when the z-statistic lies in the rejection region, which is determined by the significance level (\(\alpha\)) and the type of tail (two-tailed, left-tailed or right-tailed).
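The pooled two-proportion z-test described above can be sketched as follows. The counts are hypothetical and the p-value shown is two-tailed; this is an illustration rather than the calculator's own code.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z(x1: int, n1: int, x2: int, n2: int):
    """Pooled two-proportion z-test of H0: p1 = p2; returns (z, two-tailed p-value)."""
    p1_hat, p2_hat = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # best guess of the common proportion under H0
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1_hat - p2_hat) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 45 of 100 favorable in sample 1, 30 of 100 in sample 2
z, p = two_proportion_z(45, 100, 30, 100)
print(f"z = {z:.3f}, two-tailed p = {p:.4f}")
```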

The Case for one population proportion

In case you only have one sample proportion (so you are testing a single population proportion), you should use our z-test for one proportion calculator, which specifically addresses that case.


Two-Tailed Hypothesis Tests: 3 Example Problems

In statistics, we use hypothesis tests to assess whether sample data provide enough evidence against a claim about a population parameter.

Whenever we perform a hypothesis test, we always write a null hypothesis and an alternative hypothesis, which take the following forms:

H₀ (Null Hypothesis): Population parameter =, ≤, or ≥ some value

HA (Alternative Hypothesis): Population parameter <, >, or ≠ some value

There are two types of hypothesis tests:

- One-tailed test: Alternative hypothesis contains either the < or > sign

- Two-tailed test: Alternative hypothesis contains the ≠ sign

In a two-tailed test, the alternative hypothesis always contains the not equal (≠) sign.

This indicates that we’re testing whether or not some effect exists, regardless of whether it’s a positive or negative effect.

Check out the following example problems to gain a better understanding of two-tailed tests.

Example 1: Factory Widgets

Suppose it’s assumed that the average weight of a certain widget produced at a factory is 20 grams. However, one engineer believes that a new method produces widgets with an average weight different from 20 grams.

To test this, he can perform a two-tailed hypothesis test with the following null and alternative hypotheses:

- H₀ (Null Hypothesis): μ = 20 grams

- HA (Alternative Hypothesis): μ ≠ 20 grams

This is an example of a two-tailed hypothesis test because the alternative hypothesis contains the not equal “≠” sign. The engineer believes that the new method will influence widget weight, but doesn’t specify whether it will cause average weight to increase or decrease.

To test this, he uses the new method to produce 20 widgets and obtains the following information:

- n = 20 widgets

- x̄ = 19.8 grams

- s = 3.1 grams

Plugging these values into the One Sample t-test Calculator, we obtain the following results:

- t-test statistic: -0.288525

- two-tailed p-value: 0.776

Since the p-value is not less than .05, the engineer fails to reject the null hypothesis.

He does not have sufficient evidence to say that the true mean weight of widgets produced by the new method is different than 20 grams.

Example 2: Plant Growth

Suppose a standard fertilizer has been shown to cause a species of plants to grow by an average of 10 inches. However, one botanist believes a new fertilizer causes this species of plants to grow by an average amount different than 10 inches.

To test this, she can perform a two-tailed hypothesis test with the following null and alternative hypotheses:

- H₀ (Null Hypothesis): μ = 10 inches

- HA (Alternative Hypothesis): μ ≠ 10 inches

This is an example of a two-tailed hypothesis test because the alternative hypothesis contains the not equal “≠” sign. The botanist believes that the new fertilizer will influence plant growth, but doesn’t specify whether it will cause average growth to increase or decrease.

To test this claim, she applies the new fertilizer to a simple random sample of 15 plants and obtains the following information:

- n = 15 plants

- x̄ = 11.4 inches

- s = 2.5 inches

Plugging these values into the One Sample t-test Calculator, we obtain the following results:

- t-test statistic: 2.1689

- two-tailed p-value: 0.0478

Since the p-value is less than .05, the botanist rejects the null hypothesis.

She has sufficient evidence to conclude that the new fertilizer causes an average growth that is different than 10 inches.
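The two-tailed p-values in these examples come from Student's t distribution with n − 1 degrees of freedom. Statistical libraries compute this directly; as a dependency-free sketch, the hypothetical helper `t_two_tailed_p` below uses the classical finite trigonometric series for integer degrees of freedom (as tabulated in Abramowitz and Stegun's Handbook of Mathematical Functions) for P(|T| < |t|).

```python
import math

def t_two_tailed_p(t: float, df: int) -> float:
    """Two-tailed p-value P(|T| >= |t|) for Student's t with integer df >= 1."""
    theta = math.atan(abs(t) / math.sqrt(df))
    sin_t, cos2 = math.sin(theta), math.cos(theta) ** 2
    series = 0.0
    if df % 2 == 1:
        # Odd df: A = (2/pi) * (theta + sin * [cos + (2/3)cos^3 + (2*4)/(3*5)cos^5 + ...])
        term = math.cos(theta)
        for j in range((df - 1) // 2):
            if j > 0:
                term *= (2 * j) / (2 * j + 1) * cos2
            series += term
        a = (2 / math.pi) * (theta + sin_t * series)
    else:
        # Even df: A = sin * [1 + (1/2)cos^2 + (1*3)/(2*4)cos^4 + ...]
        term = 1.0
        for j in range(df // 2):
            if j > 0:
                term *= (2 * j - 1) / (2 * j) * cos2
            series += term
        a = sin_t * series
    # A is the probability of landing inside (-|t|, |t|); the p-value is the rest
    return 1.0 - a

# Example 2: t = 2.1689 with n - 1 = 14 degrees of freedom
p = t_two_tailed_p(2.1689, 14)
print(f"p = {p:.4f}, reject H0 at 0.05: {p < 0.05}")
```

The series is exact for integer degrees of freedom, which is all a one-sample t-test needs; for non-integer degrees of freedom (e.g. Welch's test) a library routine is the better choice.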

Example 3: Studying Method

A professor believes that a certain studying technique will influence the mean score that her students receive on a certain exam, but she’s unsure if it will increase or decrease the mean score, which is currently 82.

To test this, she lets each student use the studying technique for one month leading up to the exam and then administers the same exam to each of the students.

She then performs a hypothesis test using the following hypotheses:

- H 0 : μ = 82

- H A : μ ≠ 82

This is an example of a two-tailed hypothesis test because the alternative hypothesis contains the not equal “≠” sign. The professor believes that the studying technique will influence the mean exam score, but doesn’t specify whether it will cause the mean score to increase or decrease.

To test this claim, the professor has 25 students use the new studying method and then take the exam. She records the exam scores for this sample of students and, running a one-sample t-test, obtains the following results:

- t-test statistic: 3.6586

- two-tailed p-value: 0.0012

Since the p-value is less than .05, the professor rejects the null hypothesis.

She has sufficient evidence to conclude that the new studying method produces exam scores with an average score that is different than 82.

Additional Resources

The following tutorials provide additional information about hypothesis testing:

- Introduction to Hypothesis Testing

- What is a Directional Hypothesis?

- When Do You Reject the Null Hypothesis?


Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.


COMMENTS

Choose the alternative hypothesis: two-tailed or left/right-tailed. In our Z-test calculator, you can decide whether to use the p-value or critical regions approach. In the latter case, set the significance level, α. Enter the value of the test statistic, z.

The two-sample Z-test (or independent samples Z-test) compares the means from two independent groups to determine if there is a statistically significant difference between them. Hypotheses. Null Hypothesis (H₀): The two population means have a difference of d (μ₁ − μ₂ = d). If d is 0, the null hypothesis states that the two population means ...
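With known population standard deviations, the two-sample statistic is z = (x̄₁ − x̄₂ − d) / √(σ₁²/n₁ + σ₂²/n₂). The sketch below assumes that formula; the function name and all input numbers are hypothetical, chosen only so the arithmetic is easy to follow (a hypothesized difference of d = 10, in the spirit of the height example elsewhere on this page).

```python
import math
from statistics import NormalDist

def two_sample_z(xbar1: float, xbar2: float, sigma1: float, sigma2: float,
                 n1: int, n2: int, d: float = 0.0) -> float:
    """Z statistic for H0: mu1 - mu2 = d with known population standard deviations."""
    standard_error = math.sqrt(sigma1**2 / n1 + sigma2**2 / n2)
    return (xbar1 - xbar2 - d) / standard_error

# Hypothetical data: sample means 175 and 163, sigmas 7 and 6, n = 49 and 36, d = 10
z = two_sample_z(175.0, 163.0, 7.0, 6.0, 49, 36, d=10.0)
p_two_tailed = 2 * NormalDist().cdf(-abs(z))  # area beyond -|z|, doubled
print(f"z = {z:.4f}, two-tailed p = {p_two_tailed:.4f}")
```

Setting `d=0.0` gives the usual test of equal means.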

A two sample z-test is used to test whether or not the means of two populations are equal when the population standard deviations are known. ... (one-tailed) = 0.060963. p-value (two-tailed) = 0.121926.

Next, the test statistic is used to conduct the test using either the p-value approach or critical value approach. The particular steps taken in each approach largely depend on the form of the hypothesis test: lower tail, upper tail or two-tailed. The form can easily be identified by looking at the alternative hypothesis (H a). If there is a ...
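Because the form of the test (lower tail, upper tail or two-tailed) decides which area of the standard normal curve counts as the p-value, a small helper can make that choice explicit. This is a minimal sketch using Python's standard-library `statistics.NormalDist`; the function name `z_p_value` and the tail labels are our own.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1

def z_p_value(z: float, tail: str) -> float:
    """p-value of a Z statistic for a 'lower', 'upper', or 'two' tailed test."""
    if tail == "lower":   # Ha: mu < mu0
        return STD_NORMAL.cdf(z)
    if tail == "upper":   # Ha: mu > mu0
        return 1.0 - STD_NORMAL.cdf(z)
    if tail == "two":     # Ha: mu != mu0, area in both tails
        return 2.0 * STD_NORMAL.cdf(-abs(z))
    raise ValueError("tail must be 'lower', 'upper', or 'two'")

for form in ("lower", "upper", "two"):
    print(form, round(z_p_value(1.5, form), 4))
```

For a positive z, the two-tailed p-value is exactly twice the upper-tail value, which is why a reported one-tailed p-value of 0.060963 pairs with a two-tailed p-value of 0.121926.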

Z-test calculator. Target: To check if the difference between the average (mean) of two groups is significant. Example1: A man of average height is expected to be 10 cm taller than a woman of average height (d=10) Example2: The average weight of an apple grown in field 1 is expected to be equal in weight to the average apple grown in field 2 (d ...

This corresponds to a two-tailed test, and a z-test for two means, with known population standard deviations will be used. (2) Rejection Region. Based on the information provided, the significance level is \(\alpha = 0.05\), and the critical value for a two-tailed test is \(z_c = 1.96\).

Two-Sample Z Test Hypotheses. Null hypothesis ... To find the p-value that corresponds to a Z-score in a two-tailed analysis, we find the area to the left of the negative absolute value of our Z-score (even when the Z-score is positive) and double it. ... Using an online calculator, the p-value for our Z test is a more precise 0.0196. This p-value is less than our ...

For example, in a two-tailed Z test with critical values −1.96 and 1.96 (corresponding to a 0.05 significance level), the critical regions are from −∞ to −1.96 and from 1.96 to +∞. Therefore, if the statistic falls below −1.96 or above 1.96, the result is statistically significant and the null hypothesis is rejected.

Step 4: Calculate the p-value of the z test statistic. According to the Z Score to P Value Calculator, the two-tailed p-value associated with z = -1.718 is 0.0858. Step 5: Draw a conclusion. Since the p-value (0.0858) is not less than the significance level (.05), the scientist will fail to reject the null hypothesis.
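The p-value in Step 4 can be checked with the standard library instead of a calculator: take the lower-tail area beyond −|z| and double it. A minimal sketch, assuming Python 3.8+ for `statistics.NormalDist`:

```python
from statistics import NormalDist

z = -1.718
alpha = 0.05

# Two-tailed p-value: area to the left of -|z|, doubled to cover both tails
p_value = 2 * NormalDist().cdf(-abs(z))

print(f"p = {p_value:.4f}")
print("reject H0" if p_value < alpha else "fail to reject H0")
```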

In this example you are given the standard deviation of each sample, so you need to square the standard deviations to find the variances:

- Variance of sample 1 = 0.26² = 0.0676

- Variance of sample 2 = 0.22² = 0.0484

Now that you have the variances, you can use the formula for the Z-test for two independent samples, or you can use the calculator ...

The decision rule of the hypothesis test is: if \(Z \le -z_{0.025}\) or \(Z \ge z_{0.025}\), reject H₀; if \(-z_{0.025} < Z < z_{0.025}\), fail to reject H₀. The decision rule based on the p-value approach is: if p-value ≤ α, reject H₀; if p-value > α, fail to reject H₀. The critical value for a left-tailed test is \(-z_{0.05} = -1.645\).
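The critical-value decision rules for two-, upper- and lower-tailed tests can be written as one small function that derives the critical values (about ±1.96 and ±1.645 at α = 0.05) from the inverse normal CDF instead of a z-table. This is a sketch under our own naming (`rejects_h0`), using the standard-library `statistics.NormalDist.inv_cdf`.

```python
from statistics import NormalDist

def rejects_h0(z: float, alpha: float, tail: str) -> bool:
    """Critical-value approach: True if z falls in the rejection region."""
    inv_cdf = NormalDist().inv_cdf
    if tail == "two":
        z_crit = inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
        return z <= -z_crit or z >= z_crit
    if tail == "upper":
        return z >= inv_cdf(1 - alpha)   # about 1.645 when alpha = 0.05
    if tail == "lower":
        return z <= -inv_cdf(1 - alpha)  # about -1.645 when alpha = 0.05
    raise ValueError("tail must be 'two', 'upper', or 'lower'")

print(rejects_h0(1.825, 0.05, "two"))    # False: 1.825 lies inside (-1.96, 1.96)
print(rejects_h0(5.502, 0.05, "upper"))  # True: 5.502 exceeds 1.645
```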

Calculates the test power for a specific sample size and draws a power analysis chart. For a two-tailed test, it uses the strict interpretation, which includes the probability of rejecting the null hypothesis in the tail opposite the true effect. Use it for any of the following tests: One Sample Z-Test, One Sample T-Test, Two Sample Z-Test.
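The "strict interpretation" of two-tailed power adds the rejection probability in both tails. A minimal sketch for a one-sample Z-test, assuming a known σ and a hypothetical true effect `effect` = μ − μ₀ (the function and parameter names are our own):

```python
import math
from statistics import NormalDist

def two_tailed_z_power(effect: float, sigma: float, n: int, alpha: float = 0.05) -> float:
    """Power of a two-tailed one-sample Z test, counting rejections in both tails."""
    std = NormalDist()
    z_crit = std.inv_cdf(1 - alpha / 2)
    shift = effect / (sigma / math.sqrt(n))  # true effect in standard-error units
    same_tail = 1 - std.cdf(z_crit - shift)    # reject in the tail of the true effect
    opposite_tail = std.cdf(-z_crit - shift)   # reject in the opposite tail
    return same_tail + opposite_tail

# Hypothetical inputs: true effect 5, sigma 50, n = 100
print(round(two_tailed_z_power(effect=5, sigma=50, n=100), 3))
```

With no true effect the function returns α itself, since each tail then contributes α/2; for realistic effects the opposite-tail term is usually tiny but not zero.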

NOTE: From the z-table, the critical values for a two-tailed z-test at alpha = .05 are ±1.96. Step 5: Create a conclusion. Our z-test result is 1.825. Because −1.96 < 1.825 < 1.96, it is NOT inside the rejection region. Recall that the rejection regions for a two-tailed test with alpha set to .05 are any value above 1.96 OR any value below −1.96.

P Value from Z Score Calculator. This is very easy: just stick your Z score in the box marked Z score, select your significance level and whether you're testing a one- or two-tailed hypothesis (if you're not sure, go with the defaults), then press the button! If you need to derive a Z score from raw data ...

Two Proportion Z-Test. This tests for a difference in proportions. A two proportion z-test allows you to compare two proportions to see if they are the same. The null hypothesis (H₀) for the test is that the proportions are the same.
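Under the null hypothesis that the proportions are the same, the usual two-proportion Z-test pools both samples to estimate the common proportion before computing the standard error. The sketch below assumes that pooled form; the function name and the counts are hypothetical.

```python
import math
from statistics import NormalDist

def two_proportion_z(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion Z test of H0: p1 = p2; returns (z, two-tailed p)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # common proportion assumed under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    return z, 2 * NormalDist().cdf(-abs(z))

# Hypothetical counts: 60 successes out of 100 versus 40 out of 100
z, p = two_proportion_z(60, 100, 40, 100)
print(f"z = {z:.4f}, two-tailed p = {p:.4f}")
```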

Since the alternative hypothesis is that boys are more likely to be born than girls, it's a one-tailed test, and we're interested in the right tail. For \( \alpha = 0.05 \), the critical z-value is approximately 1.645. Since the calculated z-statistic (5.502) is greater than the critical z-value (1.645), we reject the null hypothesis.

The level of significance which is selected in Step 1 (e.g., α = 0.05) dictates the critical value. For example, in an upper-tailed Z test, if α = 0.05, then the critical value is Z = 1.645. The following figures illustrate the rejection regions defined by the decision rule for upper-, lower- and two-tailed Z tests with α = 0.05.

To use this calculator, just input your population mean, population variance, sample mean and the number of individuals in the sample into the text boxes below. You also need to select a significance level and whether your hypothesis is one or two-tailed. Hit the calculate button (below) when you're ready.
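The inputs listed above are exactly what a one-sample Z statistic needs: z = (x̄ − μ) / √(σ²/n). A minimal sketch, with a hypothetical function name and illustrative values (population mean 500, variance 2500, sample mean 520, n = 100):

```python
import math
from statistics import NormalDist

def one_sample_z(mu: float, variance: float, xbar: float, n: int) -> float:
    """Z statistic: (sample mean - population mean) / sqrt(population variance / n)."""
    return (xbar - mu) / math.sqrt(variance / n)

z = one_sample_z(mu=500, variance=2500, xbar=520, n=100)
p_two_tailed = 2 * NormalDist().cdf(-abs(z))      # two-tailed test
p_upper = 1 - NormalDist().cdf(z)                 # one-tailed, Ha: mu > 500
print(f"z = {z:.2f}, two-tailed p = {p_two_tailed:.6f}, one-tailed p = {p_upper:.6f}")
```

Note that the calculator asks for the variance, so the square root is taken inside the standard error rather than dividing the standard deviation by √n.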

Instructions: This calculator conducts a Z-test for two population proportions (p₁ and p₂). Please select the null and alternative hypotheses, type the significance level, the sample sizes, and the number of favorable cases (or the sample proportions), and the results of the z-test will be displayed for you.
