A Deep Dissertion of Data Science: Related Issues and its Applications




Publications

IEEE Talks Big Data - Check out our new Q&A article series with big data experts!

Call for Papers - Check out the many opportunities to submit your own paper. This is a great way to get published, and to share your research in a leading IEEE magazine!

Publications - See the list of various IEEE publications related to big data and analytics here.

Call for Blog Writers!

The IEEE Cloud Computing Community is a key platform for researchers, academics, and industry practitioners to share and exchange ideas about cloud computing technologies and services, and to identify the emerging trends and research topics that are shaping the future direction of cloud computing. Come be part of this revolution: we invite blog posts on topics including, but not limited to, those listed below:

- Cloud Deployment Frameworks

- Cloud Architecture

- Cloud Native Design Patterns

- Testing Services and Frameworks

- Storage Architectures

- Big Data and Analytics

- Internet of Things

- Virtualization Techniques

- Legacy Modernization

- Security and Compliance

- Pricing Methodologies

- Service Oriented Architecture

- Microservices

- Container Technology

- Cloud Computing Impact and Trends Shaping Today’s Business

- High Availability and Reliability

Call for Papers

No call for papers at this time.

IEEE Publications on Big Data

Read more at IEEE Computer Society.

IEEE Computer Magazine Special Issue on Big Data Management

- Big Data: Promises and Problems

Connecting the Dots With Big Data

- Better Health Care Through Data

- The Future of Crime Prevention

- Census and Sensibility

- Landing a Job in Big Data

Read more at The Institute.


IEEE Internet Computing July/August 2014

Web-Scale Datacenters

This issue of Internet Computing surveys issues surrounding Web-scale datacenters, particularly in the areas of cloud provisioning and of network optimization and configuration. Topics include workload isolation, recovery from transient server unavailability, network configuration, virtual networking, and content distribution.

Read more at IEEE Computer Society.

Networking for Big Data

The most current information for communications professionals involved with the interconnection of computing systems, this bimonthly magazine covers all aspects of data and computer communications.

Read more at IEEE Communications Society.

Special Issue on Big Data

Big data is transforming our lives, but it is also placing an unprecedented burden on our compute infrastructure. As data expansion rates outpace Moore's law and supply voltage scaling grinds to a halt, the IT industry is being challenged in its ability to effectively store, process, and serve the growing volumes of data. Delivering on the promise of big data in the post-Dennard era calls for specialization and tight integration across the system stack, with the aim of maximizing energy efficiency, performance scalability, resilience, and security.

The Trusted Solution for Open Access Publishing

Fully Open Access Topical Journals

IEEE offers over 30 technically focused gold fully open access journals spanning a wide range of fields.

Hybrid Open Access Journals

IEEE offers 180+ hybrid journals that support open access, including many of the top-cited titles in the field. These titles have Transformative Status under Plan S.

IEEE Access

The multidisciplinary, gold fully open access journal of the IEEE, publishing high quality research across all of IEEE’s fields of interest.

About IEEE Open

Many authors in today’s publishing environment want to make their research freely available to all reader communities. To help authors gain maximum exposure for their groundbreaking research, IEEE provides a variety of open access options to meet the needs of authors and institutions.

Call for Papers

Browse our fully open access topical journals and submit a paper.

News & Events

IEEE Announces 6 New Fully Open Access Journals and 3 Hybrid Journals Coming in 2024

IEEE Commits its Entire Hybrid Journal Portfolio to Transformative Journal Status Aligned with Plan S

IEEE and CRUI Sign Three-Year Transformative Agreement to Accelerate Open Access Publishing in Italy

New IEEE Open Access Journals Receive First Impact Factors

IEEE Access, a Multidisciplinary, Open Access Journal

IEEE Access is a multidisciplinary, online-only, gold fully open access journal, continuously presenting the results of original research or development across all IEEE fields of interest. Supported by article processing charges (APCs), its hallmarks are rapid peer review, a submission-to-publication time of 4 to 6 weeks, and articles that are freely available to all readers.

Now On-Demand

How to Publish Open Access with IEEE

This newly published on-demand webinar will provide authors with best practices in preparing a manuscript, navigating the journal submission process, and important tips to help an author get published. It will also review the opportunities authors and academic institutions have to enhance the visibility and impact of their research by publishing in the many open access options available from IEEE.


IEEE Publications Dominate Latest Citation Rankings

Each year, the Journal Citation Reports® (JCR) from Web of Science Group examines the influence and impact of scholarly research journals. JCR reveals the relationship between citing and cited journals, offering a systematic, objective means to evaluate the world’s leading journals. The 2022 JCR study, released in June 2023, reveals that IEEE journals continue to maintain rankings at the top of their fields.

Data Science: Recently Published Documents


Assessing the effects of fuel energy consumption, foreign direct investment and GDP on CO2 emission: New data science evidence from Europe & Central Asia

Documentation Matters: Human-Centered AI System to Assist Data Science Code Documentation in Computational Notebooks

Computational notebooks allow data scientists to express their ideas through a combination of code and documentation. However, data scientists often pay attention only to the code, and neglect creating or updating their documentation during quick iterations. Inspired by human documentation practices learned from 80 highly-voted Kaggle notebooks, we design and implement Themisto, an automated documentation generation system to explore how human-centered AI systems can support human data scientists in the machine learning code documentation scenario. Themisto facilitates the creation of documentation via three approaches: a deep-learning-based approach to generate documentation for source code, a query-based approach to retrieve online API documentation for source code, and a user prompt approach to nudge users to write documentation. We evaluated Themisto in a within-subjects experiment with 24 data science practitioners, and found that automated documentation generation techniques reduced the time for writing documentation, reminded participants to document code they would have ignored, and improved participants’ satisfaction with their computational notebook.
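The query-based approach described above (retrieving existing API documentation for the code in a cell) can be illustrated with a small sketch. This is not Themisto's implementation: as a stand-in for online API documentation, it assumes local docstrings are good enough, finds the functions a cell calls with Python's `ast` module, and reads their docstrings with `inspect.getdoc`. The helper names are hypothetical, and aliases such as `np.mean` are not resolved.

```python
from __future__ import annotations

import ast
import importlib
import inspect


def called_names(source: str) -> list[str]:
    """Collect dotted names of the functions called in a code cell, e.g. 'math.sqrt'."""
    names = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Unwind nested attribute access: math.sqrt -> ['sqrt', 'math'].
        parts = []
        target = node.func
        while isinstance(target, ast.Attribute):
            parts.append(target.attr)
            target = target.value
        if isinstance(target, ast.Name):
            parts.append(target.id)
            names.append(".".join(reversed(parts)))
    return names


def lookup_doc(dotted: str) -> str | None:
    """Import the module part of 'module.attr' and return the docstring's first line."""
    module_name, _, attr = dotted.rpartition(".")
    if not module_name:
        return None  # bare built-in calls such as print() are skipped in this sketch
    try:
        obj = getattr(importlib.import_module(module_name), attr)
    except (ImportError, AttributeError):
        return None  # aliases (np.mean) and variables (df.head) are not resolved
    doc = inspect.getdoc(obj)
    return doc.splitlines()[0] if doc else None


def suggest_docs(cell: str) -> dict[str, str]:
    """Map each resolvable call in a notebook cell to a one-line documentation summary."""
    suggestions = {}
    for name in called_names(cell):
        summary = lookup_doc(name)
        if summary:
            suggestions[name] = summary
    return suggestions


if __name__ == "__main__":
    cell = "import math\nroot = math.sqrt(16)\nprint(root)"
    for name, summary in suggest_docs(cell).items():
        print(f"{name}: {summary}")
```

Note that importing modules named in arbitrary notebook code can have side effects, so a real tool would need a safer resolution strategy.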

Data science in the business environment: Insight management for an Executive MBA

Adventures in Financial Data Science

GeCoAgent: A Conversational Agent for Empowering Genomic Data Extraction and Analysis

With the availability of reliable and low-cost DNA sequencing, human genomics is relevant to a growing number of end-users, including biologists and clinicians. Typical interactions require applying comparative data analysis to huge repositories of genomic information for building new knowledge, taking advantage of the latest findings in applied genomics for healthcare. Powerful technology for data extraction and analysis is available, but broad use of the technology is hampered by the complexity of accessing such methods and tools. This work presents GeCoAgent, a big-data service for clinicians and biologists. GeCoAgent uses a dialogic interface, animated by a chatbot, for supporting the end-users’ interaction with computational tools accompanied by multi-modal support. While the dialogue progresses, the user is accompanied in extracting the relevant data from repositories and then performing data analysis, which often requires the use of statistical methods or machine learning. Results are returned using simple representations (spreadsheets and graphics), while at the end of a session the dialogue is summarized in textual format. The innovation presented in this article is concerned with not only the delivery of a new tool but also our novel approach to conversational technologies, potentially extensible to other healthcare domains or to general data science.

Differentially Private Medical Texts Generation Using Generative Neural Networks

Technological advancements in data science have offered us affordable storage and efficient algorithms to query a large volume of data. Our health records are a significant part of this data, which is pivotal for healthcare providers and can be utilized in our well-being. The clinical note in electronic health records is one such category that collects a patient’s complete medical information during different timesteps of patient care available in the form of free-texts. Thus, these unstructured textual notes contain events from a patient’s admission to discharge, which can prove to be significant for future medical decisions. However, since these texts also contain sensitive information about the patient and the attending medical professionals, such notes cannot be shared publicly. This privacy issue has thwarted timely discoveries on this plethora of untapped information. Therefore, in this work, we intend to generate synthetic medical texts from a private or sanitized (de-identified) clinical text corpus and analyze their utility rigorously in different metrics and levels. Experimental results promote the applicability of our generated data as it achieves more than 80% accuracy in different pragmatic classification problems and matches (or outperforms) the original text data.

Impact on Stock Market across Covid-19 Outbreak

Abstract: This paper analyzes the impact of the pandemic on global stock exchanges. Stock listing values are determined by a variety of factors, including seasonal changes, catastrophic calamities, pandemics, fiscal year changes, and many more. This paper provides an analysis of the variation in listing prices during the worldwide outbreak of the novel coronavirus. The key motivation for focusing on this outbreak was to shed light on the underlying regulation of stock exchanges. Daily closing prices of the stock indices from January 2017 to January 2022 have been used for the analysis. The main aim of the research is to analyze whether a downturn in the global economy affects financial stock exchanges. Keywords: Stock Exchange, Matplotlib, Streamlit, Data Science, Web Scraping.
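A before/after comparison of daily closing prices, of the kind the abstract describes, can be sketched with the standard library alone. This is an illustrative assumption, not the paper's method: it computes simple daily returns and compares their mean and volatility on either side of a chosen cutoff date. The function names, cutoff, and prices are hypothetical; the numbers are invented, not data from the study.

```python
from __future__ import annotations

from datetime import date
from statistics import mean, pstdev


def daily_returns(closes: list[float]) -> list[float]:
    """Simple daily returns r_t = (P_t - P_{t-1}) / P_{t-1} from consecutive closing prices."""
    return [(curr - prev) / prev for prev, curr in zip(closes, closes[1:])]


def compare_periods(series: list[tuple[date, float]], cutoff: date) -> dict[str, tuple[float, float]]:
    """Mean return and volatility (population std. dev.) before `cutoff` and from `cutoff` on.

    The return spanning the cutoff itself is excluded, since each period is handled separately.
    """
    ordered = sorted(series)
    periods = {
        "before": [p for d, p in ordered if d < cutoff],
        "after": [p for d, p in ordered if d >= cutoff],
    }
    summary = {}
    for label, closes in periods.items():
        r = daily_returns(closes)
        summary[label] = (mean(r), pstdev(r))
    return summary


if __name__ == "__main__":
    # Hypothetical closing prices for illustration only (not data from the paper).
    prices = [100, 101, 102, 103, 100, 90, 95, 85]
    days = [date(2020, 2, d) for d in range(24, 28)] + [date(2020, 3, d) for d in range(9, 13)]
    print(compare_periods(list(zip(days, prices)), cutoff=date(2020, 3, 9)))
```

Each period needs at least two closing prices so that at least one return exists.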

Information Resilience: the nexus of responsible and agile approaches to information use

Abstract: The appetite for effective use of information assets has been steadily rising in both public and private sector organisations. However, whether the information is used for social good or commercial gain, there is a growing recognition of the complex socio-technical challenges associated with balancing the diverse demands of regulatory compliance and data privacy, social expectations and ethical use, business process agility and value creation, and scarcity of data science talent. In this vision paper, we present a series of case studies that highlight these interconnected challenges, across a range of application areas. We use the insights from the case studies to introduce Information Resilience, as a scaffold within which the competing requirements of responsible and agile approaches to information use can be positioned. The aim of this paper is to develop and present a manifesto for Information Resilience that can serve as a reference for future research and development in relevant areas of responsible data management.

qEEG Analysis in the Diagnosis of Alzheimer's Disease: A Comparison of Functional Connectivity and Spectral Analysis

Alzheimer's disease (AD) is a brain disorder mainly characterized by a progressive degeneration of neurons in the brain, causing a decline in cognitive abilities and difficulties in carrying out day-to-day activities. This study compares an FFT-based spectral analysis against a functional connectivity analysis based on phase synchronization for finding known differences between AD patients and Healthy Control (HC) subjects. Both quantitative analysis methods were applied to a dataset comprising bipolar EEG montage values from 20 diagnosed AD patients and 20 age-matched HC subjects. Additionally, an attempt was made to localize the identified AD-induced brain activity effects in AD patients. The results showed the advantage of the functional connectivity analysis method over a simple spectral analysis. Specifically, while spectral analysis could not find any significant differences between the AD and HC groups, the functional connectivity analysis showed statistically higher synchronization levels in the AD group in the lower frequency bands (delta and theta), suggesting that the AD patients' brains are in a phase-locked state. Further comparison of functional connectivity between homotopic regions confirmed that the traits of AD were localized in the centro-parietal and centro-temporal areas in the theta frequency band (4-8 Hz). The contribution of this study is that it applies a neural metric for Alzheimer's detection from a data science perspective rather than a neuroscience one. The study shows that combining bipolar derivations with phase synchronization yields results similar to those of comparable studies employing alternative analysis methods.
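The two measures being compared can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the study's pipeline: band power is read off the FFT periodogram, and phase synchronization is measured with the phase locking value (PLV), one common choice that may differ from the metric the authors used. Real EEG would first be band-pass filtered into delta or theta; the signals, sampling rate, and function names here are hypothetical.

```python
import numpy as np


def band_power(x: np.ndarray, fs: float, lo: float, hi: float) -> float:
    """Sum of FFT periodogram power over the frequency band [lo, hi) Hz."""
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(x)) ** 2 / x.size
    in_band = (freqs >= lo) & (freqs < hi)
    return float(power[in_band].sum())


def instantaneous_phase(x: np.ndarray) -> np.ndarray:
    """Phase of the analytic signal, built with the FFT-based Hilbert transform."""
    n = x.size
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1 : n // 2] = 2.0
    else:
        weights[1 : (n + 1) // 2] = 2.0
    analytic = np.fft.ifft(np.fft.fft(x) * weights)
    return np.angle(analytic)


def phase_locking_value(x: np.ndarray, y: np.ndarray) -> float:
    """PLV = |mean(exp(i(phi_x - phi_y)))|: 1 for a constant phase lag, near 0 for none."""
    dphi = instantaneous_phase(x) - instantaneous_phase(y)
    return float(np.abs(np.mean(np.exp(1j * dphi))))


if __name__ == "__main__":
    fs = 256.0                            # assumed sampling rate in Hz
    t = np.arange(0, 4, 1 / fs)
    a = np.sin(2 * np.pi * 6 * t)         # synthetic 6 Hz (theta) "channel"
    b = np.sin(2 * np.pi * 6 * t + 0.8)   # same rhythm with a fixed phase lag
    print("theta power:", band_power(a, fs, 4, 8))
    print("PLV:", phase_locking_value(a, b))
```

The example hints at why the two analyses can disagree: two channels can have identical band power yet differ sharply in how tightly their phases are locked.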

Big Data Analytics for Long-Term Meteorological Observations at Hanford Site

The growing number of physical objects with embedded sensors, which typically produce high-volume and frequently updated data sets, has accentuated the need to develop methodologies that extract useful information from big data to support decision making. This study applies a suite of data analytics and core principles of data science to characterize near real-time meteorological data, with a focus on extreme weather events. To highlight the applicability of this work and make it more accessible from a risk management perspective, a foundation for a software platform with an intuitive Graphical User Interface (GUI) was developed to access and analyze data from a decommissioned nuclear production complex operated by the U.S. Department of Energy (DOE, Richland, USA). Exploratory data analysis (EDA), involving classical non-parametric statistics, and machine learning (ML) techniques were used to develop statistical summaries and learn characteristic features of key weather patterns and signatures. The new approach and GUI provide key insights into using big data and ML to assist site operations related to safety management strategies for extreme weather events. Specifically, this work offers a practical guide to analyzing long-term meteorological data and highlights the integration of ML and classical statistics into applied risk and decision science.
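The non-parametric EDA step can be illustrated with a short standard-library sketch. This is an assumption for illustration, not the study's code. It summarizes a series by median and interquartile range, which make no distributional assumptions, and flags observations above an empirical percentile as candidate extreme-weather events. The threshold rule, function names, and wind speeds are hypothetical; the values are not Hanford data.

```python
from __future__ import annotations

from statistics import median, quantiles


def robust_summary(values: list[float]) -> dict[str, float]:
    """Median and interquartile range: distribution-free summaries that resist outliers."""
    q1, _, q3 = quantiles(values, n=4)
    return {"median": median(values), "iqr": q3 - q1}


def flag_extremes(values: list[float], pct: int = 99) -> list[int]:
    """Indices of observations strictly above the empirical pct-th percentile."""
    threshold = quantiles(values, n=100)[pct - 1]  # cut points for the 1st..99th percentiles
    return [i for i, v in enumerate(values) if v > threshold]


if __name__ == "__main__":
    # Hypothetical hourly wind speeds (m/s) with one storm spike; not Hanford data.
    wind = [3.1, 2.8, 4.0, 3.5, 2.9, 3.3, 27.5, 3.0, 3.6, 2.7]
    print(robust_summary(wind))
    print(flag_extremes(wind, pct=90))
```

`statistics.quantiles` uses its default "exclusive" interpolation here. With long multi-year records, a high percentile such as the 99th is more meaningful than in this ten-point toy.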


DATA SCIENCE IEEE PAPERS AND PROJECTS-2020

Data science is an inter-disciplinary field that uses scientific methods, processes, algorithms and systems to extract knowledge and insights from structured and unstructured data. Data science is related to data mining and big data.



KAN: Kolmogorov-Arnold Networks

Abstract: Inspired by the Kolmogorov-Arnold representation theorem, we propose Kolmogorov-Arnold Networks (KANs) as promising alternatives to Multi-Layer Perceptrons (MLPs). While MLPs have fixed activation functions on nodes ("neurons"), KANs have learnable activation functions on edges ("weights"). KANs have no linear weights at all -- every weight parameter is replaced by a univariate function parametrized as a spline. We show that this seemingly simple change makes KANs outperform MLPs in terms of accuracy and interpretability. For accuracy, much smaller KANs can achieve comparable or better accuracy than much larger MLPs in data fitting and PDE solving. Theoretically and empirically, KANs possess faster neural scaling laws than MLPs. For interpretability, KANs can be intuitively visualized and can easily interact with human users. Through two examples in mathematics and physics, KANs are shown to be useful collaborators helping scientists (re)discover mathematical and physical laws. In summary, KANs are promising alternatives to MLPs, opening opportunities for further improving today's deep learning models which rely heavily on MLPs.
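The core structural idea, a learnable univariate function on every edge and plain summation at every node with no linear weight matrix, can be sketched as a toy forward pass. This is a simplified assumption, not the authors' implementation. Each edge function here is a degree-1 spline, meaning trainable values on a fixed grid joined by straight lines and evaluated with `np.interp`, whereas the paper uses B-splines. Training is omitted, and the class name is hypothetical.

```python
import numpy as np


class PiecewiseLinearKANLayer:
    """Toy KAN layer: every edge i -> j has its own learnable univariate function phi_{j,i}.

    Simplification (assumption): each phi is a degree-1 spline, i.e. trainable values on
    a fixed uniform grid joined by straight lines, instead of the paper's B-splines.
    """

    def __init__(self, n_in: int, n_out: int, grid: np.ndarray, rng: np.random.Generator):
        self.grid = grid  # knot positions shared by all edges
        # coef[j, i, k] is phi_{j,i}(grid[k]); these values are the trainable parameters.
        self.coef = rng.normal(scale=0.1, size=(n_out, n_in, grid.size))

    def __call__(self, x: np.ndarray) -> np.ndarray:
        """Map x of shape (batch, n_in) to (batch, n_out); node j sums phi_{j,i}(x_i) over i."""
        n_out, n_in, _ = self.coef.shape
        out = np.zeros((x.shape[0], n_out))
        for j in range(n_out):
            for i in range(n_in):
                # np.interp evaluates the piecewise-linear function; inputs outside the
                # grid are clamped to the end values.
                out[:, j] += np.interp(x[:, i], self.grid, self.coef[j, i])
        return out


if __name__ == "__main__":
    grid = np.linspace(-1.0, 1.0, 11)
    layer = PiecewiseLinearKANLayer(2, 1, grid, np.random.default_rng(0))
    layer.coef[0, 0] = grid        # edge 0 set to phi(x) = x
    layer.coef[0, 1] = grid ** 2   # edge 1 set to (a piecewise-linear) phi(x) = x^2
    print(layer(np.array([[0.25, 0.5]])))
```

Setting the grid values by hand, as in the usage example, shows how each edge can represent an arbitrary one-dimensional function. This is the property the abstract credits for KANs' interpretability.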


IEEE 2023-2024 : Data Science Projects

For outstation students, we offer online project classes, covering both technical concepts and coding, through online meeting software.

For details, call: 9886692401/9845166723.

DHS Informatics provides the latest 2021-2022 IEEE projects on data science for final-year engineering students. DHS Informatics trains all students to develop their projects with a clear idea of what they need to submit to their college to get good marks. DHS Informatics also offers placement training in Bangalore through a program called OJT (On Job Training); job seekers as well as final-year college students can join this placement training program and pursue job opportunities in their dream IT companies. We have been providing IEEE projects for B.E / B.TECH, M.TECH, MCA, BCA, and DIPLOMA students for more than two decades.

Python Final year CSE projects in Bangalore

- Python 2021 – 2022 IEEE PYTHON PROJECTS CSE | ECE | ISE

- Python 2021 – 2022 IEEE PYTHON MACHINE LEARNING PROJECTS

- Python 2021 – 2022 IEEE PYTHON IMAGE PROCESSING PROJECTS

- Python 2021 – 2022 IEEE IOT PYTHON RASPBERRY PI PROJECTS

DATA SCIENCE PROJECTS

A Data Mining Based Model for Detection of Fraudulent Behaviour in Water Consumption

Abstract: Fraudulent behavior in drinking water consumption is a significant problem facing water supply companies and agencies. This behavior results in a massive loss of income and forms the highest percentage of non-technical loss. Finding efficient measures for detecting fraudulent activities has been an active research area in recent years. Intelligent data mining techniques can help water supply companies detect these fraudulent activities and reduce such losses. This research explores the use of two classification techniques (SVM and KNN) to detect customers suspected of water fraud. The main motivation of this research is to help Yarmouk Water Company (YWC) in Irbid, Jordan, overcome its profit loss. The SVM-based approach uses customer load profile attributes to expose abnormal behavior that is known to be correlated with non-technical loss activities. The data were collected from the historical records of the company's billing system. The generated model achieved an accuracy of over 74%, which is better than the manual prediction procedures currently used by YWC. To deploy the model, a decision tool has been built on top of it. The system will help the company identify suspicious water customers to be inspected on site.
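Of the two classifiers named, KNN is the easier one to show in a few lines. Below is a minimal standard-library sketch, assuming each customer is represented by a short vector of monthly consumption readings, a hypothetical stand-in for the paper's load profile attributes. It labels a new profile by majority vote among the k nearest training profiles. The profiles and labels are invented for illustration.

```python
from __future__ import annotations

import math
from collections import Counter


def knn_predict(train: list[tuple[list[float], str]], query: list[float], k: int = 3) -> str:
    """Majority label among the k training profiles closest to `query` (Euclidean distance)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical four-month consumption profiles (m^3), invented for illustration.
    history = [
        ([30, 31, 29, 30], "normal"),
        ([28, 27, 29, 28], "normal"),
        ([33, 32, 34, 33], "normal"),
        ([30, 6, 5, 4], "suspicious"),
        ([29, 5, 6, 5], "suspicious"),
        ([31, 7, 4, 6], "suspicious"),
    ]
    print(knn_predict(history, [30, 6, 6, 5]))
```

With real billing data, features on different scales should be standardized first, because Euclidean distance is otherwise dominated by the largest-valued attribute.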

Correlated Matrix Factorization for Recommendation with Implicit Feedback

Abstract: As a typical latent factor model, Matrix Factorization (MF) has demonstrated great effectiveness in recommender systems. Users and items are represented in a shared low-dimensional space so that user preference can be modeled by linearly combining the item factor vector V using the user-specific coefficients U. From a generative model perspective, U and V are drawn from two independent Gaussian distributions, which does not faithfully reflect reality: items are produced to maximally meet users’ requirements, which makes U and V strongly correlated. Meanwhile, the linear combination between U and V forces a bijection (one-to-one mapping), which neglects the mutual correlation between the latent factors. In this paper, we address the above drawbacks and propose a new model, named Correlated Matrix Factorization (CMF). Technically, we apply Canonical Correlation Analysis (CCA) to map U and V into a new semantic space. Besides achieving the optimal fit on the rating matrix, this mapping tightly correlates each component in one vector (U or V) with every single component in the other. We derive efficient inference and learning algorithms based on variational EM methods. The effectiveness of the proposed model is comprehensively verified on four public data sets. Experimental results show that our approach achieves competitive performance in both prediction accuracy and efficiency compared with the current state of the art.
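For reference, the plain MF baseline the abstract starts from, where a rating is predicted as the dot product of user factors U and item factors V, can be sketched with stochastic gradient descent. This sketch does not include CMF's CCA mapping or its variational EM inference. The hyperparameters, function names, and toy ratings are hypothetical.

```python
from __future__ import annotations

import random


def predict(U, V, u, i):
    """Predicted rating: dot product of user factors U[u] and item factors V[i]."""
    return sum(a * b for a, b in zip(U[u], V[i]))


def train_mf(ratings, n_users, n_items, k=2, lr=0.05, reg=0.01, epochs=500, seed=0):
    """Fit U (n_users x k) and V (n_items x k) by SGD on observed (user, item, rating) triples."""
    rng = random.Random(seed)
    U = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_users)]
    V = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(U, V, u, i)
            for f in range(k):
                uf, vf = U[u][f], V[i][f]
                # Gradient step on squared error plus an L2 penalty (reg) on both factors.
                U[u][f] += lr * (err * vf - reg * uf)
                V[i][f] += lr * (err * uf - reg * vf)
    return U, V


def rmse(ratings, U, V):
    """Root-mean-square error over the observed ratings."""
    sq = [(r - predict(U, V, u, i)) ** 2 for u, i, r in ratings]
    return (sum(sq) / len(sq)) ** 0.5


if __name__ == "__main__":
    # Toy (user, item, rating) triples, invented for illustration.
    ratings = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0), (1, 2, 1.0), (2, 1, 2.0), (2, 2, 5.0)]
    U, V = train_mf(ratings, n_users=3, n_items=3)
    print("training RMSE:", rmse(ratings, U, V))
    print("predicted rating, user 1 / item 1:", predict(U, V, 1, 1))
```

Here U and V are initialized independently, which is exactly the independence assumption the CMF authors argue against.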

Heterogeneous Information Network Embedding for Recommendation

Abstract: Due to its flexibility in modelling data heterogeneity, the heterogeneous information network (HIN) has been adopted to characterize complex and heterogeneous auxiliary data in recommender systems, an approach called HIN-based recommendation. Developing effective methods for HIN-based recommendation is challenging in terms of both extracting and exploiting information from HINs. Most HIN-based recommendation methods rely on path-based similarity, which cannot fully mine the latent structural features of users and items. In this paper, we propose a novel heterogeneous network embedding based approach for HIN-based recommendation, called HERec. To embed HINs, we design a meta-path based random walk strategy to generate meaningful node sequences for network embedding. The learned node embeddings are first transformed by a set of fusion functions and subsequently integrated into an extended matrix factorization (MF) model. The extended MF model and the fusion functions are jointly optimized for the rating prediction task. Extensive experiments on three real-world datasets demonstrate the effectiveness of the HERec model. Moreover, we show the capability of the HERec model for the cold-start problem and reveal that the transformed embedding information from HINs can improve recommendation performance.
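A meta-path based random walk can be illustrated on a tiny graph. In this sketch, each step may only move to a neighbour whose node type matches the next type in a symmetric meta-path such as "UMU" (user-movie-user). A helper then keeps only nodes of the starting type, an assumed follow-up step that produces homogeneous sequences for an embedding model. The embedding, fusion functions, and extended MF from the abstract are omitted, and the graph and function names are hypothetical.

```python
from __future__ import annotations

import random


def metapath_walk(adj: dict[str, list[str]], node_type: dict[str, str], start: str,
                  metapath: str, walk_len: int, rng: random.Random) -> list[str]:
    """Random walk whose successive node types follow a symmetric meta-path such as "UMU".

    After reaching the end of the meta-path the pattern repeats from its second type,
    so "UMU" yields the type sequence U, M, U, M, U, ...
    """
    if node_type[start] != metapath[0] or metapath[0] != metapath[-1]:
        raise ValueError("start type must match a symmetric meta-path")
    walk, pos = [start], 0
    while len(walk) < walk_len:
        pos = pos + 1 if pos + 1 < len(metapath) else 1
        candidates = [n for n in adj[walk[-1]] if node_type[n] == metapath[pos]]
        if not candidates:
            break  # dead end: no neighbour of the required type
        walk.append(rng.choice(candidates))
    return walk


def keep_start_type(walk: list[str], node_type: dict[str, str]) -> list[str]:
    """Keep only nodes of the starting type, giving a homogeneous sequence for embedding."""
    return [n for n in walk if node_type[n] == node_type[walk[0]]]


if __name__ == "__main__":
    # Hypothetical HIN: users (U), movies (M) and one genre node (G).
    adj = {"u1": ["m1"], "u2": ["m1", "m2"], "u3": ["m2"],
           "m1": ["u1", "u2", "g1"], "m2": ["u2", "u3", "g1"], "g1": ["m1", "m2"]}
    types = {"u1": "U", "u2": "U", "u3": "U", "m1": "M", "m2": "M", "g1": "G"}
    walk = metapath_walk(adj, types, "u1", "UMU", 7, random.Random(0))
    print(walk, keep_start_type(walk, types))
```

Because the walk is type-constrained, the genre node is never visited under "UMU"; a different meta-path, such as "MGM", would surface a different kind of similarity.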

NetSpam: A Network-Based Spam Detection Framework for Reviews in Online Social Media

Abstract: Nowadays, many people rely on content available in social media (e.g., reviews and feedback on a topic or product) when making decisions. The possibility that anybody can leave a review provides a golden opportunity for spammers to write spam reviews about products and services for different interests. Identifying these spammers and their spam content is a hot topic of research; although a considerable number of studies have been done recently toward this end, the methodologies put forth so far still barely detect spam reviews, and none of them show the importance of each extracted feature type. In this paper, we propose a novel framework, named NetSpam, which uses spam features to model review data sets as heterogeneous information networks, mapping the spam detection procedure into a classification problem in such networks. Using the importance of spam features helps us obtain better results in terms of different metrics on real-world review data sets from the Yelp and Amazon websites. The results show that NetSpam outperforms the existing methods and that, among the four categories of features (review-behavioral, user-behavioral, review-linguistic, and user-linguistic), the first type, review-behavioral features, performs better than the other categories.

Comparative Study to Identify the Heart Disease Using Machine Learning Algorithms

Abstract: Nowadays, heart disease is a common and frequently occurring disease, and it has claimed many human lives. Every year a large number of people are affected by this disease, especially in the USA and also in India. Doctors and clinical research indicate that heart disease does not happen suddenly: it results from a long-continued irregular lifestyle and bodily activity, and only later appears suddenly with symptoms. Once symptoms appear, people seek treatment in hospital and undergo various tests and therapies, which are somewhat expensive. This research aims to raise awareness beforehand, so that people can get an idea of a patient's condition from its results before the disease appears. This research collected data from different sources and split it into two parts: 80% for the training dataset and the remaining 20% for the test dataset. Different classifier algorithms were applied to obtain better accuracy, and their accuracies were then summarized. The algorithms are Random Forest Classifier, Decision Tree Classifier, Support Vector Machine, k-nearest neighbor, Logistic Regression, and Naive Bayes. SVM, Logistic Regression, and KNN gave the same accuracy, which was better than that of the other algorithms. This paper identifies which factors indicate vulnerability to heart disease, given basic attributes such as sex, glucose, blood pressure, and heart rate. Future work will use different devices and clinical trials for real-life experiments.
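The 80/20 evaluation protocol described above can be sketched independently of any particular classifier. This is a generic illustration, not the paper's code. It shuffles the records with a fixed seed so the split is reproducible, holds out 20% for testing, and scores predictions by accuracy. The function names and seed are hypothetical. Libraries such as scikit-learn offer equivalent tested utilities, including stratified splits that keep class proportions equal in both parts.

```python
from __future__ import annotations

import random


def holdout_split(rows: list, test_fraction: float = 0.2, seed: int = 42) -> tuple[list, list]:
    """Shuffle a copy of `rows` and return (train, test) with about test_fraction held out."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed so the split is reproducible
    n_test = round(len(shuffled) * test_fraction)
    cut = len(shuffled) - n_test
    return shuffled[:cut], shuffled[cut:]


def accuracy(y_true: list, y_pred: list) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    records = list(range(100))  # stand-ins for 100 patient records
    train, test = holdout_split(records)
    print(len(train), len(test))
    print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))
```

Comparing several classifiers on the same fixed split, as the study does, keeps the comparison fair. A single split can still be noisy, so cross-validation is a common complement.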

A machine learning approach for opinion mining online customer reviews

Abstract: This study was conducted to apply supervised machine learning methods to opinion mining of online customer reviews. First, the study automatically collected 39,976 traveler reviews of hotels in Vietnam from the Agoda.com website, then trained machine learning models to find out which model best fits the training dataset, and applied this model to forecast opinions for the collected dataset. The results showed that Logistic Regression (LR), Support Vector Machines (SVM), and Neural Network (NN) methods have the best performance in opinion mining in the Vietnamese language. This study is valuable as a reference for applications of opinion mining in the field of business.
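Logistic regression, one of the best-performing methods reported, can be sketched for review polarity in plain Python. This is a toy under stated assumptions: bag-of-words counts with whitespace tokenization (a simplification, especially for Vietnamese), batch gradient descent on the log loss, and invented English example reviews. It is not the study's model or data, and the function names are hypothetical.

```python
from __future__ import annotations

import math
from collections import Counter


def featurize(text: str) -> Counter:
    """Bag-of-words counts; whitespace tokenisation is a simplifying assumption."""
    return Counter(text.lower().split())


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logreg(docs: list[str], labels: list[int], epochs: int = 200, lr: float = 0.5):
    """Binary logistic regression fit by batch gradient descent on the mean log loss."""
    feats = [featurize(d) for d in docs]
    weights: dict[str, float] = {}
    bias = 0.0
    for _ in range(epochs):
        grad_w: dict[str, float] = {}
        grad_b = 0.0
        for x, y in zip(feats, labels):
            z = bias + sum(weights.get(w, 0.0) * c for w, c in x.items())
            err = sigmoid(z) - y  # derivative of the log loss with respect to z
            grad_b += err
            for w, c in x.items():
                grad_w[w] = grad_w.get(w, 0.0) + err * c
        bias -= lr * grad_b / len(feats)
        for w, g in grad_w.items():
            weights[w] = weights.get(w, 0.0) - lr * g / len(feats)
    return weights, bias


def predict_proba(weights: dict[str, float], bias: float, text: str) -> float:
    """Probability that `text` is a positive review under the fitted model."""
    z = bias + sum(weights.get(w, 0.0) * c for w, c in featurize(text).items())
    return sigmoid(z)


if __name__ == "__main__":
    # Invented example reviews (1 = positive, 0 = negative).
    docs = ["great clean room", "friendly staff great view", "dirty room rude staff", "noisy dirty bathroom"]
    w, b = train_logreg(docs, [1, 1, 0, 0])
    print(predict_proba(w, b, "great view"), predict_proba(w, b, "rude dirty"))
```

Words seen in both classes, such as "room", end up with small weights, while class-specific words drive the prediction.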

Hybrid Machine Learning Classification Technique for Improve Accuracy of Heart Disease

Abstract: The area of medical science has attracted great attention from researchers. A good number of investigators have identified several causes of early human mortality. The related literature has confirmed that diseases arise from different causes, one of which is heart-related illness. Many researchers have proposed distinctive methods to preserve human life and help health care experts recognize, prevent, and manage heart disease. Some convenient methodologies facilitate experts' decisions, but every successful scheme has its own restrictions. The proposed approach analyzes the performance of the Hidden Markov Model (HMM), Artificial Neural Network (ANN), Support Vector Machine (SVM), and Decision Tree J48, along with two different feature selection methods: Correlation-Based Feature Selection (CFS) and Gain Ratio. The Gain Ratio is combined with the Ranker method over different groups of data. After this analysis, the proposed method builds a Naive Bayes classifier that combines the two most appropriate processes in a suitable layered design. The initial aim is to select the most appropriate method by analyzing the performance of the available schemes executed with different features.
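The Gain Ratio criterion with a Ranker-style ordering can be sketched for categorical features. Gain ratio is the information gain of a feature divided by its split information, the entropy of the feature's own value distribution, which penalizes features with many distinct values. This is a generic sketch of the standard formula, not the authors' configuration. The feature names and values are hypothetical.

```python
from __future__ import annotations

import math
from collections import Counter


def entropy(values: list) -> float:
    """Shannon entropy (bits) of the empirical distribution of `values`."""
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())


def gain_ratio(feature: list, labels: list) -> float:
    """Information gain of a categorical feature divided by its split information."""
    n = len(labels)
    groups: dict = {}
    for v, y in zip(feature, labels):
        groups.setdefault(v, []).append(y)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    info_gain = entropy(labels) - conditional
    split_info = entropy(feature)
    return info_gain / split_info if split_info > 0 else 0.0


def rank_features(columns: dict[str, list], labels: list) -> list[tuple[str, float]]:
    """Ranker-style ordering: features sorted by gain ratio, highest first."""
    scores = [(name, gain_ratio(values, labels)) for name, values in columns.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical categorical features for four patients.
    labels = ["yes", "yes", "no", "no"]
    columns = {"chest_pain": ["a", "a", "b", "b"], "noise": ["a", "b", "a", "b"]}
    print(rank_features(columns, labels))
```

A feature that splits the classes perfectly scores 1.0, while one that carries no information about the label scores 0.0.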

Novel Supervised Machine Learning Classification Technique for Improve Accuracy of Multi-Valued Datasets in Agriculture

Abstract: In the modern era, agricultural plant disease has many causes, including unfavorable weather conditions. Factors that influence disease in agricultural plants include variety/hybrid genetics, the age of plants at the time of infection, the environment (soil, climate), weather (temperature, wind, rain, hail, etc.), single versus mixed infections, and the genetics of pathogen populations. Because of these factors, diagnosing plant diseases at an early stage can be difficult. Machine Learning (ML) classification techniques such as Naïve Bayes (NB) and Neural Network (NN) were compared to develop a novel technique that improves the level of accuracy.
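Naive Bayes, one of the two techniques compared, can be sketched for multi-valued categorical attributes such as weather conditions. Under the naive independence assumption, the predicted class maximizes log P(c) plus the sum of log P(x_j | c). Add-one (Laplace) smoothing keeps unseen attribute values from producing zero probabilities. This is a generic textbook sketch, not the paper's novel technique, and the attributes and labels are hypothetical.

```python
from __future__ import annotations

import math
from collections import Counter, defaultdict


def fit_nb(rows: list[tuple], labels: list):
    """Count class frequencies and, per class, how often each attribute value occurs."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (attribute index, class) -> Counter of values
    values_seen = defaultdict(set)       # attribute index -> set of observed values
    for row, y in zip(rows, labels):
        for j, v in enumerate(row):
            value_counts[(j, y)][v] += 1
            values_seen[j].add(v)
    return class_counts, value_counts, values_seen


def predict_nb(model, row: tuple, alpha: float = 1.0):
    """argmax_c log P(c) + sum_j log P(x_j | c), with add-alpha (Laplace) smoothing."""
    class_counts, value_counts, values_seen = model
    total = sum(class_counts.values())
    best, best_score = None, -math.inf
    for c, n_c in class_counts.items():
        score = math.log(n_c / total)
        for j, v in enumerate(row):
            k = len(values_seen[j])  # number of distinct values of attribute j
            score += math.log((value_counts[(j, c)][v] + alpha) / (n_c + alpha * k))
        if score > best_score:
            best, best_score = c, score
    return best


if __name__ == "__main__":
    # Hypothetical (humidity, temperature) observations and plant status.
    rows = [("high", "warm"), ("high", "warm"), ("high", "mild"),
            ("low", "cool"), ("low", "mild"), ("low", "cool")]
    labels = ["diseased"] * 3 + ["healthy"] * 3
    model = fit_nb(rows, labels)
    print(predict_nb(model, ("high", "warm")), predict_nb(model, ("low", "cool")))
```

Working in log space avoids numerical underflow when many attributes are multiplied together.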

Machine Learning and Deep Learning Approaches for Brain Disease Diagnosis: Principles and Recent Advances

Abstract: The brain is the control center of our body. Over time, more and more brain diseases are being discovered. Because of the variability of brain diseases, diagnosing or detecting them remains challenging and is still an open research problem. Detecting brain diseases at an early stage can make a huge difference in attempts to cure them. In recent years, the use of artificial intelligence (AI) has been surging through all spheres of science, and it is undoubtedly revolutionizing the field of neurology. The application of AI in medical science has made brain disease prediction and detection more accurate and precise. In this study, we present a review of recent machine learning and deep learning approaches for detecting four brain diseases: Alzheimer’s disease (AD), brain tumor, epilepsy, and Parkinson’s disease. A total of 147 recent articles on these four diseases are reviewed, considering diverse machine learning and deep learning approaches, modalities, datasets, etc. Twenty-two datasets that are used most frequently in the reviewed articles as primary sources of brain disease data are discussed. Moreover, a brief overview of the feature extraction techniques used in diagnosing brain diseases is provided. Finally, key findings from the reviewed articles are summarized, and a number of major issues related to machine learning/deep learning-based brain disease diagnostic approaches are discussed. Through this study, we aim to find the most accurate technique for detecting different brain diseases, which can be employed for future improvements.

Prediction of Chronic Kidney Disease - A Machine Learning Perspective

Abstract: Chronic Kidney Disease (CKD) is one of the most critical illnesses today, and it needs to be diagnosed as early as possible. Machine learning techniques have become reliable tools in medical treatment, and machine learning classifiers can help doctors detect the disease in time. From this perspective, this article discusses CKD prediction. The CKD dataset was taken from the UCI repository. Seven classifier algorithms were applied: artificial neural network, C5.0, Chi-square Automatic Interaction Detector, logistic regression, linear support vector machine (LSVM) with L1 penalty, LSVM with L2 penalty, and random tree. Feature selection techniques were also applied to the dataset. For each classifier, results were computed using (i) full features, (ii) correlation-based feature selection, (iii) wrapper-method feature selection, (iv) features selected by least absolute shrinkage and selection operator (LASSO) regression, (v) the synthetic minority over-sampling technique (SMOTE) with LASSO-selected features, and (vi) SMOTE with full features. The results show that LSVM with L2 penalty gives the highest accuracy, 98.86%, with SMOTE and full features. Besides accuracy, precision, recall, F-measure, area under the curve and the GINI coefficient were computed, and the results of the various algorithms are compared in graphs. SMOTE with LASSO-selected features gave the best results after SMOTE with full features, and in this setting LSVM again gave the highest accuracy, 98.46%.
In addition to the machine learning models, a deep neural network was applied to the same dataset, and it achieved the highest accuracy overall, 99.6%.
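
The synthetic minority over-sampling technique (SMOTE), used in settings (v) and (vi), balances the classes by creating new minority-class samples. Each new sample lies on the line segment between a minority sample and one of its nearest minority neighbours. The sketch below is a minimal from-scratch illustration, not the study's implementation. The Euclidean distance, the function name `smote` and the made-up data are assumptions. In practice a library implementation would normally be used.

```python
import math
import random


def smote(minority, n_synthetic, k=5, seed=0):
    """Create n_synthetic minority-class samples by interpolating towards near neighbours."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        # The k nearest other minority samples (Euclidean distance).
        neighbours = sorted(
            (j for j in range(len(minority)) if j != i),
            key=lambda j: math.dist(base, minority[j]),
        )[:k]
        other = minority[rng.choice(neighbours)]
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(base, other)])
    return synthetic


# Toy minority-class feature vectors (made up), oversampled with k=2.
minority = [[1.0, 2.0], [1.5, 1.8], [2.0, 2.2], [1.2, 2.5]]
new_samples = smote(minority, n_synthetic=6, k=2)
print(len(new_samples))
```

SMOTE is normally applied only to the training split. Otherwise, synthetic points derived from test records leak into the evaluation. The abstract does not say how the split was handled.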

Potato Disease Detection Using Machine Learning

Abstract: Potato is one of the major crops in Bangladesh, and its cultivation has been very popular there for the last few decades. However, potato production is being hampered by diseases that increase farmers' production costs and disrupt their livelihoods. An automated and rapid disease detection process is therefore needed to increase potato production and digitize the system. Our main goal is to diagnose potato diseases from leaf images using advanced machine learning technology. This paper presents an automated system, based on image processing and machine learning, that identifies and classifies potato leaf diseases. Image processing is the best solution for detecting and analyzing these diseases. In this analysis, image segmentation is performed on more than 2,034 potato leaf images taken from the publicly available PlantVillage database, and several pre-trained models are used to recognize and classify diseased and healthy leaves. The best of these models predicts with an accuracy of 99.23%, using 25% of the data for testing and 75% for training. Our results show that machine learning outperforms existing approaches to potato disease detection.
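
The abstract reports a 75%/25% train/test split. The sketch below shows one way to make such a split. It is stratified by class, so each class keeps its proportion in the test set. Stratification, the file names and the class labels are assumptions for illustration. They are not details given in the abstract.

```python
import random
from collections import Counter, defaultdict


def stratified_split(items, labels, test_fraction=0.25, seed=42):
    """Hold out roughly test_fraction of each class, so class proportions are preserved."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for item, label in zip(items, labels):
        by_class[label].append(item)
    train, test = [], []
    for label, members in by_class.items():
        members = list(members)  # copy before shuffling
        rng.shuffle(members)
        n_test = round(len(members) * test_fraction)
        test.extend((m, label) for m in members[:n_test])
        train.extend((m, label) for m in members[n_test:])
    return train, test


# Hypothetical file names and class labels, for illustration only.
files = [f"leaf_{i:04d}.jpg" for i in range(100)]
classes = ["early_blight"] * 40 + ["late_blight"] * 40 + ["healthy"] * 20
train, test = stratified_split(files, classes)
print(len(train), len(test))
print(Counter(label for _, label in test))
```

Fixing the seed makes the split reproducible. This matters when several pre-trained models are compared on the same held-out images.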

A Comparative Evaluation of Traditional Machine Learning and Deep Learning Classification Techniques for Sentiment Analysis

Abstract: With technological advancement in digital transformation, the use of the internet and social media has increased immensely. Many people use these platforms to share their views, opinions and experiences. Analyzing such information is significant for any organization, as it helps the organization understand the needs of its customers. Sentiment analysis is an intelligible way to interpret emotions in textual information, and it helps determine whether an emotion is positive or negative. This paper outlines the data cleaning and data preparation process for sentiment analysis and presents experimental findings that demonstrate the comparative performance of various classification algorithms. In this context, we analyze machine learning techniques (Support Vector Machine and Multinomial Naive Bayes) and deep learning techniques (Bidirectional Encoder Representations from Transformers and Long Short-Term Memory) for sentiment analysis.
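
Multinomial Naive Bayes, one of the two traditional classifiers compared, models a document as a bag of word counts. A minimal from-scratch sketch with add-one (Laplace) smoothing follows. The tokenizer, function names and training sentences are illustrative assumptions. The abstract does not describe the paper's own pipeline.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and keep runs of letters/apostrophes: a deliberately simple cleaning step."""
    return re.findall(r"[a-z']+", text.lower())


def train_mnb(docs, labels, alpha=1.0):
    """Count documents per class and word occurrences per class."""
    doc_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for doc, label in zip(docs, labels):
        tokens = tokenize(doc)
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return doc_counts, word_counts, vocab, alpha


def predict_mnb(model, doc):
    """Pick the class maximising log P(class) + sum of log P(word | class)."""
    doc_counts, word_counts, vocab, alpha = model
    n_docs = sum(doc_counts.values())
    scores = {}
    for label in doc_counts:
        total = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / n_docs)
        for token in tokenize(doc):
            if token in vocab:  # words never seen in training are skipped
                # Laplace (add-alpha) smoothing keeps unseen class/word pairs non-zero.
                score += math.log((word_counts[label][token] + alpha) / (total + alpha * len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


# Made-up training sentences, for illustration only.
docs = [
    "great product, loved it",
    "really good and fast delivery",
    "terrible, a waste of money",
    "bad quality, very disappointed",
]
labels = ["positive", "positive", "negative", "negative"]
model = train_mnb(docs, labels)
print(predict_mnb(model, "good product"))
print(predict_mnb(model, "what a waste, bad"))
```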

A Comprehensive Review on Email Spam Classification using Machine Learning Algorithms

Abstract: Email is the most widely used method of official communication for business purposes. Its usage continues to increase despite the availability of other communication methods. Automated email management is important today, as the volume of email grows day by day. Of all emails, more than 55 percent are identified as spam. Spam consumes users' time and resources while producing no useful output. Spammers use sophisticated and creative methods to carry out criminal activities through spam emails. It is therefore vital to understand the different spam email classification techniques and their mechanisms. This paper mainly focuses on spam classification approaches that use machine learning algorithms. Furthermore, this study provides a comprehensive analysis and review of research on different machine learning techniques and on the email features used in these approaches. It also outlines future research directions and challenges in the spam classification field that can be useful for future researchers.
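
Many of the surveyed approaches start by turning each email into a feature vector. The sketch below extracts a few hand-crafted features. The specific features and the keyword list are hypothetical examples chosen for illustration. They are not taken from the review.

```python
import re

# Hypothetical keyword list; real systems learn such signals from data.
MONEY_WORDS = re.compile(r"\b(free|winner|prize|cash)\b", re.IGNORECASE)


def spam_features(subject, body):
    """Turn one email into a small dictionary of hand-crafted, illustrative features."""
    text = f"{subject} {body}"
    letters = [c for c in text if c.isalpha()]
    upper_ratio = sum(c.isupper() for c in letters) / len(letters) if letters else 0.0
    return {
        "num_links": len(re.findall(r"https?://", text)),
        "num_exclamations": text.count("!"),
        "upper_ratio": round(upper_ratio, 3),
        "has_money_word": int(bool(MONEY_WORDS.search(text))),
        "num_words": len(text.split()),
    }


print(spam_features("WIN a FREE prize!!!", "Click http://example.com now"))
print(spam_features("Minutes of Monday's meeting", "Please find the minutes attached."))
```

A downstream classifier would then be trained on these dictionaries, after converting them to fixed-order vectors.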

Heart Disease Prediction using Hybrid Machine Learning Model

Abstract: Heart disease causes a significant number of deaths around the world and has become a health threat for many people. Early prediction of heart disease may save many lives. Detecting cardiovascular diseases such as heart attacks and coronary artery disease through regular clinical data analysis is a critical challenge. Machine learning (ML) can provide an effective solution for decision making and accurate prediction, and the medical industry is rapidly increasing its use of machine learning techniques. This work proposes a novel machine learning approach to predict heart disease. The study uses the Cleveland heart disease dataset and data mining techniques such as regression and classification. The machine learning techniques Random Forest and Decision Tree are applied, and a novel machine learning model is designed from them. The implementation uses three machine learning algorithms: (1) Random Forest, (2) Decision Tree and (3) a hybrid model of Random Forest and Decision Tree. Experimental results show that the hybrid model reaches an accuracy of 88.7% in heart disease prediction. An interface is designed to take the user’s input parameters and predict heart disease using the hybrid model of Decision Tree and Random Forest.
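
The abstract does not say how the random forest and decision tree are combined into the hybrid model. One common way to combine classifiers is soft voting, which averages their predicted probabilities. The sketch below assumes that approach and uses made-up probability outputs. It should not be read as the authors' method.

```python
def soft_vote(prob_lists, weights=None, threshold=0.5):
    """Average each patient's P(disease) across models, then apply a decision threshold."""
    if weights is None:
        weights = [1.0] * len(prob_lists)
    total = sum(weights)
    combined = [
        sum(w * p for w, p in zip(weights, per_patient)) / total
        for per_patient in zip(*prob_lists)
    ]
    return combined, [int(p >= threshold) for p in combined]


def accuracy(predicted, actual):
    """Fraction of predictions that match the true labels."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


# Made-up P(disease) outputs for four patients from two already-trained models.
random_forest = [0.9, 0.4, 0.6, 0.7]
decision_tree = [1.0, 0.0, 0.0, 1.0]
scores, predicted = soft_vote([random_forest, decision_tree])
print(predicted)
print(accuracy(predicted, [1, 0, 1, 1]))
```

The optional `weights` argument lets the stronger model dominate when validation results justify it.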

Heart Failure Prediction by Feature Ranking Analysis in Machine Learning

Abstract: Heart disease is one of the major causes of mortality in the world today. Predicting cardiovascular disease is a critical challenge in clinical data analysis. With advances in machine learning (ML), artificial intelligence (AI) and data science, these fields have been shown to help with decision making and prediction from the large quantity of data produced by the healthcare industry. ML approaches have brought many improvements and broadened research in the medical field, recognizing patterns in the human body through various algorithms and correlation techniques. Coronary heart disease is one such case, and various studies offer insight into predicting heart disease with ML techniques. ML was initially used to determine the degree of heart failure, but it has also been used, together with correlation techniques, to identify the significant features that affect heart disease. Many features or factors lead to heart disease, such as age, blood pressure, sodium, creatinine and ejection fraction. In this paper, we propose a method for finding important features by applying machine learning techniques. The aim of the work is to design and develop a heart disease prediction approach based on feature-ranking machine learning. ML therefore has a huge impact in saving lives and helping doctors. It also widens the scope of research into actionable insights, drives complex decisions and helps businesses create innovative products that achieve key goals.
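
One simple way to rank features such as age or ejection fraction is by the strength of their correlation with the outcome. The sketch below uses the absolute Pearson correlation coefficient. That choice, the function names and the six toy patient records are assumptions for illustration. The abstract does not specify the correlation technique or the data.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no linear association
    return cov / (sx * sy)


def rank_features(columns, target):
    """Sort features by |r| with the outcome, strongest association first."""
    scores = {name: pearson(values, target) for name, values in columns.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Made-up records for six patients; target 1 = heart failure event, 0 = none.
columns = {
    "age": [45, 60, 75, 50, 80, 65],
    "ejection_fraction": [60, 45, 25, 55, 20, 30],
    "smoking": [0, 1, 0, 1, 1, 0],
}
target = [0, 0, 1, 0, 1, 1]
for name, r in rank_features(columns, target):
    print(f"{name}: r = {r:+.3f}")
```

Ranking by absolute value treats strong negative associations (here, lower ejection fraction with more events) as just as informative as positive ones.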

Design of face detection and recognition system to monitor students during online examinations using Machine Learning algorithms

Abstract: The current pandemic has transformed the way students are educated. Education is now undertaken remotely through online platforms. Besides changing how course content is delivered and taught online, the shift has also changed how students are assessed. In online education, monitoring student attendance is very important, because student presence is part of a good assessment of teaching and learning. Educational institutions have been adopting online examination portals to assess students. These portals use face recognition techniques to monitor students’ activities and identify malpractice. They capture students’ activities through a web camera and analyze their gestures and postures. Image processing algorithms are widely used in the literature to perform face recognition. Despite progress in improving face detection systems, issues such as variations in facial appearance, varying lighting conditions, noise in face images, scale and pose still prevent these systems from reaching human-level accuracy. The aim of this study is to increase the accuracy of existing face recognition systems by using the SVM and Eigenface algorithms. In this project, an approach similar to Eigenface is used to extract facial features as facial vectors, and a Support Vector Machine (SVM) is trained on the datasets to perform face classification and detection. This makes face recognition faster and suitable for online exam monitoring.

IEEE DATA SCIENCE PROJECTS (2020-2021)

DHS Informatics believes in student stratification: we first brief students about the technologies and types of Data Science projects and projects in other domains. After a complete explanation of the concepts behind the IEEE Data Science projects, students may choose more than one IEEE Data Science project to explore its functionality in detail. Students can even pick one project topic from Data Science and two more from other domains, such as data mining, image processing, information forensics, big data and blockchain. DHS Informatics is a pioneering institute in Bangalore / Bengaluru, and we support project work for other institutes all over India. We are the leading final year project centre in Bangalore / Bengaluru, with offices in five main locations: Jayanagar, Yelahanka, Vijayanagar, RT Nagar and Indiranagar.

We allow ECE, CSE and ISE final year students to use the lab and assist them in project development work. We also encourage students to bring their own ideas for the final year projects they submit to their colleges.

DHS Informatics first trains students on project-related topics, after which students move on to practical sessions. We have a well-equipped lab, experienced faculty members who also work on our client projects, and friendly student coordinators who assist students with their college project work.

Students appreciate our latest IEEE projects and concepts for final year Data Mining projects in the ECE, CSE and ISE departments.

We offer the latest IEEE 2021-2022 Data Mining projects with real-time concepts and innovative ideas, implemented using Java, MATLAB and NS2. Final year students of data mining, computer science, information science, and electronics and communication can contact our corporate office in Jayanagar, Bangalore, for Data Science project details.

DATA SCIENCE

Data Science is the mining of knowledge from data, using methods at the intersection of machine learning, statistics and database systems. It is a powerful new technology with great potential to help companies focus on the most important information in their data warehouses. We have best-in-class infrastructure, lab set-up and training facilities, and an experienced research and development team serving both the educational and corporate sectors.

Data Science is the process of searching huge amounts of data from different perspectives and summarizing it into useful information. Data Science is a logical rather than a physical subset. Our Data Science projects for students usually involve mining and text-based classification.

Data Science is the process of using a variety of data analysis tools to identify relationships in data. We support data mining projects for IT and CSE students carrying out their academic research projects.

Relation to Statistics

The popularity of the term “data science” has exploded in business environments and academia, as indicated by a jump in job openings. However, many critical academics and journalists see no distinction between data science and statistics. Writing in Forbes, Gil Press argues that data science is a buzzword without a clear definition and has simply replaced “business analytics” in contexts such as graduate degree programs. In the question-and-answer section of his keynote address at the Joint Statistical Meetings of the American Statistical Association, noted applied statistician Nate Silver said, “I think data-scientist is a sexed up term for a statistician….Statistics is a branch of science. Data scientist is slightly redundant in some way and people shouldn’t berate the term statistician.” Similarly, in the business sector, multiple researchers and analysts state that data scientists alone are far from sufficient to give companies a real competitive advantage. They consider data scientists only one of four larger job families that companies require to leverage big data effectively, namely data analysts, data scientists, big data developers and big data engineers.

On the other hand, responses to this criticism are just as numerous. In a 2014 Wall Street Journal article, Irving Wladawsky-Berger compares the enthusiasm for data science with the dawn of computer science. He argues that data science, like any other interdisciplinary field, employs methodologies and practices from across academia and industry, but then morphs them into a new discipline. He draws attention to the sharp criticisms that computer science, now a well-respected academic discipline, once had to face. Likewise, NYU Stern’s Vasant Dhar, like many other academic proponents of data science, argued more specifically in December 2013 that data science differs from the existing practice of data analysis across all disciplines, which focuses only on explaining data sets. Data science seeks actionable and consistent patterns for predictive use. This practical engineering goal takes data science beyond traditional analytics. Data from disciplines and applied fields that lacked solid theories, such as health science and social science, can now be sought and used to generate powerful predictive models.

Data Science Journal

- Collection: Data Management Planning across Disciplines and Infrastructures

Practice Papers

The Research Data Management Organiser (RDMO) – A Strong Community Behind an Established Software for DMPs and Much More

- Ivonne Anders

- Daniela Adele Hausen

- Christin Henzen

- Gerald Jagusch

- Giacomo Lanza

- Olaf Michaelis

- Karsten Peters-von Gehlen

- Torsten Rathmann

- Jürgen Rohrwild

- Sabine Schönau

- Kerstin Vanessa Wedlich-Zachodin

- Jürgen Windeck

This practice paper provides an overview of the Research Data Management Organiser (RDMO) software for data management planning and the RDMO community. It covers the background and history of RDMO as a funded project, its current status as a consortium and an open source software and provides insights into the organisation of the vibrant RDMO community. Furthermore, we introduce RDMO from a software developer’s perspective and outline, in detail, the current work in the different sub-working groups committed to developing DMP templates and related guidance materials.

- Data management planning

- DMP templates

- Open source software

- Community building

The Background and History of RDMO

The Research Data Management Organiser (RDMO) is web-based software that enables research-performing institutions, as well as researchers themselves, to plan and carry out the management of their research data. RDMO can assemble all relevant planning information and data management tasks across the whole life cycle of the research data. It is suitable for both smaller and larger projects.

One of the results of ‘WissGrid’, a collaborative project in the German D-Grid context, was a small collection of guidelines on how to deal with data, including a set of questions to help organise data publication and data management (Fiedler et al. 2013). The publication collected and reflected the discussion driven by the California Digital Library (CDL) and Digital Curation Centre (DCC) on data management and data management plans in the context of Germany and its landscape of research institutions and organisations.

One takeaway from this work was that writing DMPs solely to meet a funding agency's requirements would not suffice to guide research projects through their subsequent processes of producing and analysing research data. DMPs and associated information should preferably remain within the workgroup, project or institution rather than on a central website.

With this motivation, the DFG-funded RDMO project was set up in 2015 (DFG 2021). It aimed to develop a modern, easy-to-install and easy-to-use web application with a questionnaire based on the aforementioned WissGrid guidelines (Fiedler et al. 2013), a storage engine and configurable output options. Early versions of the web app were successively made available to interested adopters. By giving them extensive support, the RDMO project not only improved its web application but also attracted the formation of new local groups that organise their data management work across institutional borders and local and community barriers.

In 2018, the RDMO project continued to interact intensively with the growing group of institutions and groups that used the RDMO web app in many different ways. Beyond producing DMPs, they used it as a tool to organise consulting and coaching in data management, to enforce standardisation of data management within an institution, to feed available information (e.g., from lab instruments) into a project’s RDMO instance, and to adapt the collected information into the various formats required by funding agencies or research institutions. A DMP with these additional functionalities can also be used to initiate processes and tasks across the whole data lifecycle and is called a ‘machine-actionable DMP’ (maDMP). In RDMO, we implemented the recommendations of the RDA WG DMP Common Standards (Miksa et al. 2020).
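
To make ‘machine-actionable’ concrete: the RDA DMP Common Standard describes a DMP as structured data, commonly serialised as JSON, rather than as free text. The sketch below builds a minimal JSON record whose field names are modelled on that standard. The identifiers are placeholders and the function is hypothetical. This is not RDMO's own export code, and the specification should be consulted for the full set of required and optional fields.

```python
import json
from datetime import datetime, timezone


def build_madmp(title, contact_name, contact_email, dataset_titles):
    """Assemble a minimal maDMP-style record; all identifiers are placeholders."""
    now = datetime.now(timezone.utc).replace(microsecond=0).isoformat()
    return {
        "dmp": {
            "title": title,
            "created": now,
            "modified": now,
            "language": "eng",
            "ethical_issues_exist": "unknown",
            "dmp_id": {"identifier": "https://example.org/dmp/1", "type": "url"},
            "contact": {
                "name": contact_name,
                "mbox": contact_email,
                "contact_id": {"identifier": contact_email, "type": "other"},
            },
            # One entry per dataset; a real DMP would also describe distribution, licences, etc.
            "dataset": [
                {
                    "title": ds_title,
                    "dataset_id": {"identifier": f"https://example.org/dataset/{i}", "type": "url"},
                    "personal_data": "unknown",
                    "sensitive_data": "unknown",
                }
                for i, ds_title in enumerate(dataset_titles, start=1)
            ],
        }
    }


record = build_madmp("Soil moisture study", "Jane Doe", "jane.doe@example.org",
                     ["Raw sensor readings", "Cleaned time series"])
print(json.dumps(record, indent=2))
```

Because the record is plain JSON, other systems (repositories, funder portals) can read individual fields instead of parsing prose.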

RDMO from a Software Developer’s Perspective

RDMO is an open source tool whose code can be freely extended and modified. It is implemented as a web application so that it can serve as a collaborative platform. It consists of a backend that runs on a server and is mainly written in Python using the Django framework (https://djangoproject.com/), and a frontend, based on common web technologies, that provides the user interface in the browser. Python and the Django framework were chosen because Python is a high-level programming language that is relatively easy to learn. Its emphasis on code readability and usability has made it a well-established programming language in the science community. As a result, some relevant expertise is likely to be available wherever RDMO is installed, maintained, used and further developed. From the start, RDMO’s code has been freely available under an Apache 2.0 licence on GitHub, which also serves as a focal point for community feedback (bug reports, feature requests) and for defining and tracking RDMO’s future development (https://github.com/rdmorganiser/rdmo).

The software’s first release dates back to 2016. Since then, the RDMO community has seen over 60 new versions. Regular releases provide continuity and have helped RDMO mature over time. The exact number of software downloads is unknown, but the number of production and test instances has increased steadily over the last few years and has now reached 56 (source: https://rdmorganiser.github.io/Community/, status: 11/09/2023).

RDMO was designed to keep technical hurdles for administrators as low as possible. It can be installed fairly quickly and does not need much storage space or processing power, because it primarily deals with textual data saved in rather small databases. RDMO only requires Python to run, a web server such as Apache or Nginx to serve static files, and a database such as PostgreSQL or MySQL. Docker images are also provided to simplify running RDMO for those who are familiar with this technology.
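
Because RDMO is a Django application, the database and static-file locations of an instance are set through ordinary Django settings. The fragment below is a sketch of such settings for a PostgreSQL-backed instance. The setting names are standard Django. The environment-variable names, host name and paths are hypothetical placeholders. The exact file in which an RDMO installation keeps its local settings should be checked in the RDMO documentation.

```python
import os

# Hypothetical local settings for an RDMO instance; values are placeholders.
DEBUG = False
SECRET_KEY = os.environ.get("RDMO_SECRET_KEY", "change-me")
ALLOWED_HOSTS = ["rdmo.example.org"]

# PostgreSQL database; a MySQL setup would use "django.db.backends.mysql" instead.
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": os.environ.get("RDMO_DB_NAME", "rdmo"),
        "USER": os.environ.get("RDMO_DB_USER", "rdmo"),
        "PASSWORD": os.environ.get("RDMO_DB_PASSWORD", ""),
        "HOST": os.environ.get("RDMO_DB_HOST", "localhost"),
        "PORT": os.environ.get("RDMO_DB_PORT", "5432"),
    }
}

# Collected static files land here, so Apache or Nginx can serve them directly.
STATIC_ROOT = "/srv/rdmo/static_root"
```

Reading secrets from environment variables keeps them out of version control. This is convenient when the same settings file is reused in a Docker deployment.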

Information is stored locally within an RDMO instance and is structured according to RDMO’s data model, presented in Figure 1. A person compiling a DMP for a project is asked to answer a series of questions. The answers are stored as values of internal variables called attributes and can then be used to generate documents (views) or to trigger actions (tasks).

The RDMO data model. RDMO employs a complex data model organised along different Django apps and modules (representing database tables), which is well documented (https://rdmo.readthedocs.io/en/latest/management/data-model.html).
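
The flow described above can be sketched in a few lines: questions whose answers are stored as values of attributes, which then feed views. This is a deliberately simplified model. The class names, the attribute paths and the use of `string.Template` for views are hypothetical, and tasks are omitted. RDMO's actual Django models are considerably richer.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass(frozen=True)
class Question:
    text: str
    attribute: str  # hypothetical attribute path that stores the answer


@dataclass
class Project:
    title: str
    values: dict = field(default_factory=dict)  # attribute path -> stored value

    def answer(self, question, text):
        # The value is keyed by the question's attribute, not by the question itself,
        # so any question (or catalog) pointing at the same attribute sees it.
        self.values[question.attribute] = text


def render_view(project, template):
    """Fill a view template; placeholders are attribute paths with '/' replaced by '_'."""
    mapping = {path.replace("/", "_"): value for path, value in project.values.items()}
    return Template(template).safe_substitute(mapping, title=project.title)


q_format = Question("Which file formats will you use?", "project/dataset/format")
q_archive = Question("Where will the data be archived?", "project/preservation/repository")

project = Project("Soil moisture study")
project.answer(q_format, "CSV and NetCDF")
project.answer(q_archive, "Zenodo")

view = "DMP for $title\nFormats: $project_dataset_format\nArchive: $project_preservation_repository"
print(render_view(project, view))
```

`safe_substitute` leaves placeholders for unanswered attributes in place instead of raising an error. A partially completed DMP can therefore still be previewed.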

The exchange of information among instances is made possible by a common attribute list (the RDMO domain). This list ensures compatibility between question catalogs while still allowing question catalogs, option sets and views tailored to specific use cases. All this content can be exchanged via the GitHub repository for content.

The RDMO domain currently includes 291 hierarchically ordered attributes, which cover all RDM aspects identified so far and thus play the role of a ‘controlled vocabulary’ for DMPs. The fundamental RDMO catalog contains 125 questions covering all aspects of research data management. Besides that, several other catalogs (https://www.forschungsdaten.org/index.php/RDMO) have been tailored to specific disciplines (engineering, chemistry, etc.), institutions (UARuhr, HeFDI) or funding programmes (SNF, Volkswagen Foundation). These catalogs reuse as many questions and attributes from the main catalog and domain as possible to ensure interoperability between existing projects. Questions in different catalogs refer to the very same attributes, which allows users to switch catalogs when necessary. For example, attribute supplements were successfully developed with this support for the DFG questionnaires from FoDaKo, a cooperation of the Universities of Wuppertal, Düsseldorf and Siegen concerning research data management (https://fodako.nrw/datenmanagementplan, see Figure 2). The same applies to the questionnaire of the University of Erlangen-Nuremberg for the Volkswagen Foundation, Germany’s largest private research sponsor. An implementation of the Horizon Europe Data Management Plan Template (for the European funding framework programme of the same name) has also been added recently, comprising a questionnaire, new attributes and options, and a view (see Figure 3). Soon, the sub-working group will deal with other funding programmes from Germany and abroad, such as the Austrian funding organisation Fonds zur Förderung der wissenschaftlichen Forschung (FWF) (https://www.fwf.ac.at/).

Overview of the FoDaKo questionnaires for projects funded by the DFG. All questionnaires fulfil the DFG checklist and differ in subject-specific coverage, from the ‘minimum’/‘intersection’ catalog with 85 questions to the ‘maximum’/‘union’ catalog (an extension of the core RDMO catalog) with 139 questions. The subject-specific questionnaires include further recommendations from the DFG Review Board for that subject. ‘All questions’ is an extension of the RDMO catalog. The number of questions is given below each title.

Preview of the completed Horizon Europe Data Management Plan in the RDMO interface. Compared to the funders’ DMP templates, the questions in the RDMO catalogs are more precise and ‘fine-grained’. Filling out a DMP is further eased by help texts and controlled answer choices (options). Finally, export templates, i.e., views, convert the data management plan into the deliverable form required by the funder, inserting references to thematically overlapping questions.
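
Catalogs refer to shared attributes rather than owning their answers, so switching catalogs amounts to comparing attribute sets. The sketch below uses hypothetical attribute paths and question texts. It shows which stored values a new catalog can reuse, which ones it does not refer to, and which of its questions remain unanswered. It illustrates the idea only and is not RDMO code.

```python
def carry_over(values, new_catalog):
    """Compare stored values with the attributes a new catalog asks about.

    values: dict mapping attribute path -> stored answer
    new_catalog: dict mapping question text -> attribute path
    """
    asked = set(new_catalog.values())
    reused = {attr: v for attr, v in values.items() if attr in asked}
    not_referenced = {attr: v for attr, v in values.items() if attr not in asked}
    unanswered = sorted(q for q, attr in new_catalog.items() if attr not in values)
    return reused, not_referenced, unanswered


values = {
    "project/dataset/format": "CSV and NetCDF",
    "project/funding/programme": "DFG",
}
horizon_like = {
    "Which data formats will be produced?": "project/dataset/format",
    "In which repository will the data be deposited?": "project/preservation/repository",
}
reused, not_referenced, unanswered = carry_over(values, horizon_like)
print(reused)
print(unanswered)
```

The more attributes two catalogs share, the fewer questions a user has to answer again after switching. This is the motivation for reusing the core domain.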

The RDMO Consortium

The RDMO consortium was founded in 2020 through the signing of a Memorandum of Understanding (MoU) (https://rdmorganiser.github.io/docs/Memorandum-of-Understanding-RDMO.pdf) between several supporting German institutions and individuals. The organisational structure, with its various groups, has been approved by an RDMO user meeting. This structure supports future development and is detailed in the MoU. Besides the general meeting of all members of the consortium (i.e., the signatories of the MoU), there are three permanent groups. Members and other interested parties can participate in the general meeting, which convenes at least once a year and more often as required. All institutions that are interested in the preservation and further development of RDMO are invited to sign the MoU.

Some of the members are active in various RDM working groups, such as RDA and DINI/nestor (DINI/nestor-AG Forschungsdaten 2022), and thus ensure a user-oriented focus on the RDMO content through their external cooperation.

The RDMO Steering Group (StG)

The RDMO consortium is led by a steering group (StG). The representatives of the StG are elected by the members at the general meeting every three years or as needed. The StG guides the direction of further development and coordinates the processes for developing the software and its content. It is composed of at least five persons.

The RDMO Development Group (DG)

The technical coordination and further development of RDMO are organised by a development group. In addition to a core of long-term committed developers who continuously drive development forward, low-threshold participation by a larger number of developers is required and already in place. These developers can, for example, contribute on a project-specific basis.

The RDMO Content Group (CG)

The work of the CG members focuses on maintaining existing and newly generated content, such as attributes or questions for catalog templates. They moderate and support individual processes and domain adjustments. The CG collects feedback from RDM coordinators and researchers at research institutions in Germany and checks the general usability of RDMO in light of this feedback.

The work of the CG is currently organised into four sub-working groups and can spawn ad-hoc sub-working groups for special purposes.

Sub-Working Group Guidance Concepts and Texts

The ‘Practical Guide to the International Alignment of Data Management’ published by Science Europe (2021) provides specific guidance for different stakeholders, such as researchers and reviewers of DMPs, on how to manage research data, describe data management and review a DMP. The guide therefore gives an overview of the core aspects a DMP should include. However, such guidance documents often lack discipline-specific recommendations. The sub-working group first collected discipline-specific best practices in data management. Based on this collection and its findings, the DMP sections most in need of recommendations were identified. To structure the corresponding DMP guidance, the group adopted the design pattern concept, which software engineering uses to describe problem-solution pairs systematically (Gamma et al. 2014). The pattern concept provides a template for storing information such as problems, solutions, concrete examples and related patterns. A specific DMP guidance template was developed by extending the initial pattern template. Using such a pattern structure for DMP guidance ensures that recommendations can be easily compared and linked. Moreover, by offering concrete solutions, the pattern structure can help raise awareness of the potential consequences of not implementing proper data management. As a proof of concept and a first collection of guidance patterns, the group selected examples from its own RDM support experience with research projects of different disciplinary foci and iteratively improved the template (Henzen et al. 2022). The DMP guidance pattern structure can be applied to other DMP guidance texts and extended accordingly.

In the future, the sub-working group will further explore how to align its DMP pattern concept with RDM community activities, such as the Stamp project (Standardised data management plan for education sciences; Netscher et al. 2022) or the activities of the RDA working group ‘Discipline-specific Guidance for Data Management Plans’ (https://rd-alliance.org/groups/discipline-specific-guidance-data-management-plans-wg). It will also implement the envisioned community-driven process for collecting guidance patterns, e.g., by guiding RDM support teams and researchers in collecting further patterns and by providing guidance on how to use the pattern collection. On a practical level, it aims to provide a basic set of patterns for the RDMO community to use in new and existing DMP templates. However, the group envisions the patterns being applicable across disciplines and tools, not only within RDMO.

Sub-Working Group Editorial Processes

The sub-working group called Editorial Processes is responsible for the development, curation and harmonisation of the content that is necessary for the local usage of an RDMO instance: attributes, catalogs, conditions, option sets and views.

External authors can make their questionnaires available to the general public in the ‘shared’ area of the RDMO content repository (https://github.com/rdmorganiser/rdmo-catalog). Editorial Processes also supports content development by external authors, takes care of its harmonisation and adds newly created attributes and questions whenever they are of general relevance. In addition, this sub-working group has coordinated the localisation of the RDMO software and content into French, Spanish and Italian, bringing the total to five languages.

Sub-Working Group Website

The transition of RDMO to a community-based project required the website (https://rdmorganiser.github.io/) to reflect this change as well. This sub-working group works on improving the online presence of RDMO, tailoring information and aids to its different audiences, including end users (researchers), RDM managers/coordinators and system administrators. The focus is on providing informational material that is relevant to each audience's needs.

The website is intended to be the first point of contact for RDMO users and interested parties and to bring together all available information about RDMO.

Sub-Working Group DFG Checklists

This sub-working group is implementing the Deutsche Forschungsgemeinschaft (DFG) guidelines for research data management in RDMO. These guidelines must be considered when drafting project proposals and are available as a checklist (http://www.dfg.de/research_data/checklist). Since spring 2022, many German universities have developed guidelines, annotated versions of the DFG checklist or specific RDMO questionnaires to support their local researchers. The sub-working group was established in October 2022 to harmonise and map these local solutions, with the aim of creating a single community questionnaire and export template.

Conclusion and Outlook

The overall goals of the RDMO consortium are to further simplify RDM and DMP planning for users, improve their experience and build a sustainable open source community. With the user perspective in mind, the focus is particularly on motivating researchers to use RDMO for their own purposes. One way the consortium intends to achieve this is by expanding RDMO catalogs for various purposes, so that using DMPs brings additional benefits such as project management functions and exchange among the researchers in a project. Researchers can be motivated not only by familiarising them with RDMO but also by involving them in developing questionnaires tailored to their discipline and/or the needs of their community.

The development of several RDM initiatives, including the German National Research Data Infrastructure (NFDI, https://www.nfdi.de/consortia/), gives great momentum to the discussion around DMPs and facilitates the harmonisation and establishment of common infrastructures. In the coming years, the importance of research data and its management is expected to keep growing considerably. This will also give rise to further environments and tools that facilitate RDM. With its strong community, RDMO is well placed to make a significant contribution to innovative and demand-oriented research data management.

Acknowledgements

The authors express their gratitude to the entire RDMO community for all the work, the discussions and the results reached.

Funding Information

The authors would like to thank the Federal Government of Germany and the Heads of Government of the Länder, as well as the Joint Science Conference (GWK) and the German Research Foundation (DFG), for funding through the projects NFDI4Ing (project number 442146713) and NFDI4Earth (project number 460036893).

Competing Interests

The authors have no competing interests to declare.

Fiedler, N, et al. 2013. Leitfaden zum Forschungsdaten-Management: Handreichungen aus dem WissGrid-Projekt. Verlag Werner Hülsbusch. https://publications.goettingen-research-online.de/handle/2/14366.

Gamma, E, et al. 2014. Design patterns: elements of reusable object-oriented software. Boston, MA: Addison-Wesley. https://openlibrary.telkomuniversity.ac.id/pustaka/37782/design-patterns-elements-of-reusable-object-oriented-software.html.

Henzen, C, et al. 2022. A Community-driven collection and template for DMP guidance facilitating data management across disciplines and funding. Zenodo. DOI: https://doi.org/10.5281/zenodo.6966878

Miksa, T, Walk, P and Neish, P. 2020. RDA DMP common standard for machine-actionable data management plans. Zenodo. DOI: https://doi.org/10.15497/rda00039

Netscher, S, et al. 2022. Stamp—Standardisierter Datenmanagementplan für die Bildungsforschung. Zenodo. DOI: https://doi.org/10.5281/zenodo.6782478

Science Europe. 2021. Practical guide to the international alignment of research data management—extended edition. Zenodo. DOI: https://doi.org/10.5281/zenodo.4915862

https://dini.de/ag/dininestor-ag-forschungsdaten/ .

https://gepris.dfg.de/gepris/projekt/270561467 .

Call for Reviewers for 3rd IEEE CVMI 2024 Conference

Welcome to the 3rd IEEE CVMI 2024.

- The CVMI 2022 proceedings are published online.

- The CVMI 2022 program schedule is available.

- IAPR Best Paper Award (Student): Manali Roy (Indian Institute of Technology (ISM), Dhanbad, India) [Paper ID: 176]

- IAPR Best Paper Award (Professional): Akhilesh Kumar (Defence Institute of Psychological Research (DIPR), DRDO, India) [Paper ID: 200]

- CVMI Best Paper Award (Computer Vision): Anmol Gautam (National Institute of Technology, Meghalaya, India) [Paper ID: 61]

- CVMI Best Paper Award (Machine Intelligence): Shajahan Aboobacker (National Institute of Technology, Karnataka, India) [Paper ID: 129]

- CVMI Best PhD Thesis Award: Dr. Koyel Mandal (Tezpur University, India)

The CVMI 2022 conference proceedings will be published by Springer.

The CVMI 2022 conference is endorsed by the International Association for Pattern Recognition (IAPR).

The CVMI 2022 conference is technically sponsored by the IEEE Signal Processing Society UP Chapter.

About IEEE CVMI 2024

The IEEE CVMI 2024 conference is financially and technically sponsored by IEEE Uttar Pradesh Section. The CVMI 2024 conference is "Endorsed by International Association for Pattern Recognition (IAPR)" and "Technically Sponsored by IEEE SPS UP Chapter".

The conference programme will include regular paper presentations, along with keynote talks by prominent expert speakers in the field. All submitted papers will be double-blind peer-reviewed. Paper acceptance will be based on originality, significance, technical soundness, and clarity of presentation. The IAPR Best Paper Award and the CVMI-2024 Best Paper Awards will be given to outstanding papers. Best PhD Dissertation Awards will also be presented at the PhD Symposium during IEEE CVMI 2024.

- Successfully presented papers will be submitted to IEEE Xplore for publication.

- Sponsored by IEEE Uttar Pradesh Section.

- Endorsed by IAPR.

- Technically Sponsored by IEEE Signal Processing Society Uttar Pradesh Chapter.

- Indexed by Scopus and DBLP.

- IAPR Best Paper Award and CVMI-2024 Best Paper Awards.

- Best PhD Dissertation Awards.

IIIT Allahabad, Jhalwa, Prayagraj, Uttar Pradesh, India

Cabs are available from both the railway station and the airport, subject to availability.

Accommodation can be arranged in the Institute's visitors' hostels, subject to availability.

Food will be available throughout the event.

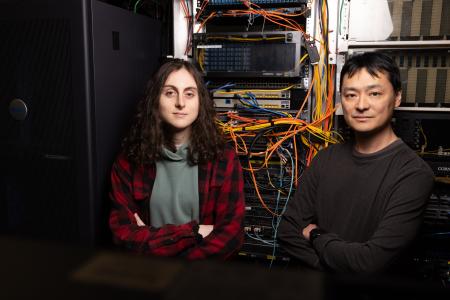

Computer Science Student and Professor at University of Puget Sound Win Best Paper at Big Data Conference

Julia Kaeppel ’24 and Prof. David Chiu published their research on database cache management.

University of Puget Sound student Julia Kaeppel ’24 has always been interested in computer programming. As a kid, she was a member of her elementary school robotics team, and she got hooked on programming in middle school as a pathway to making video games. Kaeppel’s lifelong interest in operating systems and programming later led to an exciting research opportunity at Puget Sound. As a rising junior, Kaeppel approached Professor of Computer Science David Chiu about working on a summer research project. He immediately had an idea for an impactful project they could tackle.

“I’ve been working on this database project for over a decade and I had an idea of where I wanted to go next with the research. It was just a matter of finding the right student because it required a unique skill set,” says Chiu. “That Julia is such a strong C programmer with the right skill set and an interest in operating systems and performance was pretty fortuitous.”

Databases often contain immense amounts of raw data. It takes a long time to search through all that data to find a given piece of information, so computers use caches to store previous results for reuse. Caches serve as shortcuts to get at relevant information quickly, but they have limited space. Chiu wanted to find the optimal sequence in which to dispatch the queries as well as the order in which to evict older cached results in an effort to improve query performance. That’s where Kaeppel’s research came in. With funding from a McCormick Summer Research grant, she was able to dive into the problem and spend 10 weeks trying to find a solution.

“Over the summer, we developed a couple of algorithms for reordering bitmap queries and we found that a lot of them didn’t work,” Kaeppel says. “However, we found that ordering queries by size from shortest to longest provided the greatest optimization. There’s an elegant simplicity to it.”

The result is deceptively simple, but it can be proven mathematically to maximize the number of times cached query results are reused over time. Chiu and Kaeppel described their research in a paper that was accepted for publication at the 10th International Conference on Big Data Computing, Applications, and Technologies, organized by the Association for Computing Machinery (ACM) and the Institute of Electrical and Electronics Engineers (IEEE), where it won the best paper award.
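The article does not spell out the algorithm, so the following is only a toy illustration of why dispatching shorter queries first can increase cache reuse. It assumes, hypothetically, that a bitmap query is a set of bitmap IDs to be ANDed together, that results are kept in a small least-recently-used (LRU) cache, and that a query can start from the largest cached result whose IDs are a subset of its own. The names `ResultCache` and `run_queries` are invented for this sketch; it is not Kaeppel and Chiu's published method.

```python
from collections import OrderedDict


class ResultCache:
    """Hypothetical LRU cache mapping a frozenset of bitmap IDs to their ANDed result."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._store = OrderedDict()

    def best_subset(self, query: frozenset):
        """Return the largest cached key that is a subset of query, or None."""
        best = None
        for key in self._store:
            if key <= query and (best is None or len(key) > len(best)):
                best = key
        if best is not None:
            self._store.move_to_end(best)  # mark as most recently used
        return best

    def get(self, key: frozenset) -> int:
        return self._store[key]

    def put(self, key: frozenset, value: int) -> None:
        self._store[key] = value
        self._store.move_to_end(key)
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry


def run_queries(queries, bitmaps, capacity=8, sort_by_size=True):
    """Evaluate AND-queries, reusing cached sub-results; return (results, reuse_count)."""
    cache = ResultCache(capacity)
    # Assumed ordering rule for this sketch: fewest bitmaps first.
    order = sorted(queries, key=len) if sort_by_size else list(queries)
    results, reuses = {}, 0
    for q in order:
        q = frozenset(q)
        base = cache.best_subset(q)
        if base is not None:
            acc, remaining = cache.get(base), q - base
            reuses += 1
        else:
            acc, remaining = -1, q  # -1 has every bit set: the identity for AND
        for bid in sorted(remaining):
            acc &= bitmaps[bid]
        cache.put(q, acc)
        results[q] = acc
    return results, reuses


bitmaps = {1: 0b1110, 2: 0b0111, 3: 0b0101}
queries = [{1, 2, 3}, {1, 2}]
print(run_queries(queries, bitmaps, sort_by_size=False)[1])  # 0
print(run_queries(queries, bitmaps, sort_by_size=True)[1])   # 1
```

With the queries sorted shortest first, the result for {1, 2} is cached before {1, 2, 3} arrives and can be reused; in the original order, the larger result is cached first and cannot help the smaller query.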

“This conference only accepts 25 to 30% of all papers submitted. So, to be accepted and then to win a best paper award is a major accomplishment,” Chiu says. “Julia isn’t a Ph.D. student. She’s an undergrad—and yet her work beat out every other paper at the conference. It’s unprecedented in my research group.”

Kaeppel credits Professor Chiu’s mentorship for helping her develop the tools she needed to tackle the research project—and for opening her eyes to the possibility of doing more research after graduating from Puget Sound.

“It was a great experience and definitely broadened my horizons. Even if I don’t go into academic research, I could see myself pursuing a career working with algorithms and optimizations,” Kaeppel says.

“Julia was a joy to work with. She is dedicated and has an intuition on problem solving that makes her a very natural researcher,” Chiu adds. “When we got a result that didn’t look right, she knew where to dig for answers. That’s not something I would typically expect from an undergrad. Julia was already at that level—and that made all the difference in making this publication possible.”

© 2024 University of Puget Sound

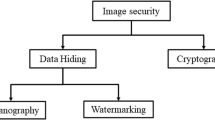

Evaluating the merits and constraints of cryptography-steganography fusion: a systematic analysis

- Regular Contribution

- Open access

- Published: 05 May 2024

- Indy Haverkamp

- Dipti K. Sarmah
