The Strategic Value of Regression Analysis in Marketing Research

by Michael Lieberman, on December 14, 2023

Regression analysis offers significant value in modern business and research contexts. This article explores the strategic importance of regression analysis to shed light on its diverse applications and benefits. Included are several different case studies to help bring the concept to life.

Understanding Regression Analysis in Marketing

Regression analysis in marketing is used to examine how independent variables—such as advertising spend, demographics, pricing, and product features—influence a dependent variable, typically a measure of consumer behavior or business performance. The goal is to create models that capture these relationships accurately, allowing marketers to make informed decisions.

Benefits of Regression Analysis in Marketing

- Data-driven decisions: Regression analysis empowers marketers to make data-driven decisions, reducing reliance on intuition and guesswork. This approach leads to more accurate and strategic marketing efforts.

- Efficiency and cost savings: By optimizing marketing campaigns and resource allocation, regression analysis can significantly improve efficiency and cost-effectiveness. Companies can achieve better results with the same or fewer resources.

- Personalization: Understanding consumer behavior through regression analysis allows for personalized marketing efforts. Tailored messages and offers can lead to higher engagement and conversion rates.

- Competitive advantage: Marketers who employ regression analysis are better equipped to adapt to changing market conditions, outperform competitors, and stay ahead of industry trends.

- Continuous improvement: Regression analysis is an iterative process. As new data becomes available, models can be updated and refined, ensuring that marketing strategies remain effective over time.

Strategic Applications

- Consumer behavior prediction: Regression analysis helps marketers predict consumer behavior. By analyzing historical data and considering various factors, such as past purchases, online behavior, and demographic information, companies can build models to anticipate customer preferences, buying patterns, and churn rates.

- Marketing campaign optimization: Businesses invest heavily in marketing campaigns. Regression analysis aids in optimizing these efforts by identifying which marketing channels, messages, or strategies have the greatest impact on key performance indicators (KPIs) like sales, click-through rates, or conversion rates.

- Pricing strategy: Pricing is a critical aspect of marketing. Regression analysis can reveal the relationship between pricing strategies and sales volume, helping companies determine the optimal price points for their products or services.

- Product development: In product development, regression analysis can be used to understand the relationship between product features and consumer satisfaction. Companies can then prioritize product enhancements based on customer preferences.

Case Study – Regression Analysis for Ranking Key Attributes

Let’s explore a specific example in a category known as Casual Dining Restaurants (CDR). In a survey, respondents are asked to rate several casual dining restaurants on a variety of attributes. For the purposes of this article, we will keep the number of demonstrated attributes to the top eight. The data for each restaurant is stacked into one regression. We are seeking to rank the attributes based on a regression analysis against an industry standard overall measurement: Net Promoter Score.

Table 1 lists the leading Casual Dining Restaurant chains in the United States. Their combined data is used to rank the key reasons that patrons visit this restaurant category as a whole, not any one restaurant brand.

Table 1 - List of Leading Casual Dining Restaurant Chains in the United States

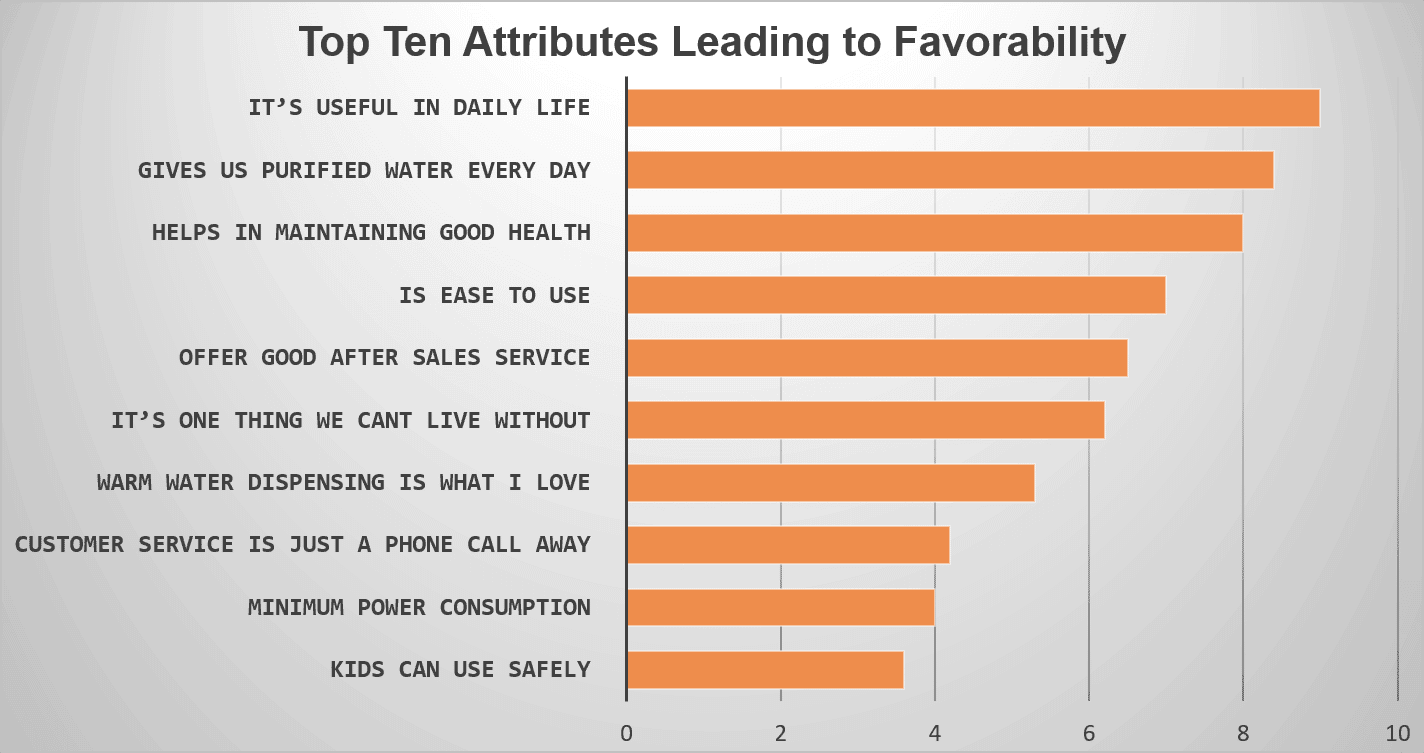

In Figure 1 we see a graphic example of key drivers across the CDR category.

The category-wide drivers of CDR visits are not particularly surprising. Good food. Good value. Cleanliness. Staff energy. There is one attribute, however, that restaurant executives might not intuitively expect to matter as much as it does: make sure your servers thank departing customers. Diners seek not just delicious cuisine at a reasonable price; they also want to feel appreciated.

Case Study – Regression and Brand Response to Crisis

A major automobile company has a public relations disaster. In order to regain trust in their brand equity, the company commissions a series of regression analyses to gauge how buyers are viewing their brand image. However, what they really want to know is how American auto buyers view trust—the most valuable brand perception of this company’s automotive product.

The disaster is fresh (a nationwide recall of thousands of cars over airbag safety issues), so the company would like a composite of which values go into “Is this a Company I Trust.” To build it, the company surveys decision makers, stakeholders, owners, and prospects. We then stack the data into one dataset and run a strategic regression. Once the regression is run, the beta values are summed, and each is reported as a percentage of that total, representing its influence on the dependent variable. What we see are the major components of “Trust.”
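The rescaling step described above can be sketched in a few lines of Python. This is only an illustration: apart from family safety, the attribute names are hypothetical, and every coefficient value is invented, since the article does not publish the underlying betas. It also shows only the simple sum-and-rescale approach, not a full Shapley value calculation.

```python
# Hypothetical standardized beta coefficients from the stacked regression.
# Only "Family safety" is named in the article; the rest are placeholders,
# and all numeric values are invented for illustration.
betas = {
    "Family safety": 0.42,
    "Reliability": 0.28,
    "Honest communication": 0.18,
    "Customer service": 0.12,
}


def influence_percentages(coefficients):
    """Rescale coefficients so their absolute values sum to 100 percent."""
    total = sum(abs(b) for b in coefficients.values())
    return {name: 100 * abs(b) / total for name, b in coefficients.items()}


shares = influence_percentages(betas)

# Report the drivers from most to least influential.
for name, pct in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {pct:.1f}%")
```

Taking absolute values is a design choice in this sketch: it keeps a negatively signed driver from cancelling out a positive one when the shares are added up.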

Figure 2 - Percentage Influence of "A Company I Trust"

Not surprisingly, family safety is the leading driver of Trust. However, we now have Shapley Values of the major components. These findings would normally be handed over to the public relations team to begin damage control. Within days the company began to run advertisements in major markets to reverse the negative narrative of the recall.

Case Study - Regression Analysis/Maximizing Product Lines

SparkleSquad Studios is a fictional startup hoping to find a niche among tween and teen girls to help reverse the tide of social media addiction. Though funded through venture capital investment, they found that of their 40 potential product areas, they only have the capacity to produce eight. In order to determine the top eight hobby products in demand, they fielded a study.

Table 2 - List of Potential Product Area Development

SparkleSquad Studios then conducted a large study gathering data from thousands of web-based surveys conducted among girls aged 10 to 16 across the United States. The key construct of the study is simple—not more than 5 minutes—and concise to cater to respondents' shorter attention spans. Below are the key questions.

- How much money do you typically allocate to hobbies unrelated to social media in a given month?

- Please check off the hobbies that interest you from the list of 40 potential options below.

Question 1 serves as the dependent variable in the regression. Question 2 responses are coded into binary indicator (dummy) variables, one per hobby: 1 = Checked, 0 = Not Checked. These are the independent variables.
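To make the coding scheme concrete, here is a minimal Python sketch, assuming a tiny invented dataset with three stand-in hobbies instead of the study's 40. The hobby names, the spending figures, and the helper functions `solve` and `ols` are all hypothetical; a real study would use a statistical package rather than hand-rolled linear algebra.

```python
def solve(a, b):
    """Solve the linear system a * x = b by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(rows, y):
    """Least-squares coefficients (intercept first) via the normal equations."""
    x = [[1.0] + list(r) for r in rows]
    k = len(x[0])
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(x, y)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical stand-ins for the 40 hobby options.
hobbies = ["painting", "skateboarding", "baking"]

# Invented responses: (monthly hobby spend in dollars, hobbies checked).
responses = [
    (10, []),
    (30, ["painting"]),
    (15, ["baking"]),
    (35, ["painting", "baking"]),
    (25, ["skateboarding", "baking"]),
    (40, ["painting", "skateboarding"]),
    (20, ["skateboarding"]),
    (45, ["painting", "skateboarding", "baking"]),
]

# Question 1 is the dependent variable; Question 2 becomes 1/0 dummy variables.
spend = [float(s) for s, _ in responses]
dummies = [[1 if h in checked else 0 for h in hobbies] for _, checked in responses]

coefs = ols(dummies, spend)
for name, beta in zip(["intercept"] + hobbies, coefs):
    print(f"{name}: {beta:.2f}")
```

Each hobby's coefficient estimates how much more a respondent who checked that hobby spends per month, holding the other hobbies constant. Ranking hobbies by these coefficients (and their statistical significance, which this sketch does not compute) is the idea behind the table that follows.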

Results are shown below in Table 3.

Table 3 - Top 10 Hobby Products for Production Determined Through Regression Analysis

Based on the resulting regression analysis, SparkleSquad will commence production of ten statistically significant products. The data-driven approach helps ensure these offerings match the strongest measured market demand.

Regression analysis gives businesses the ability to predict consumer behavior, optimize marketing efforts, and drive results through data-driven decision-making. By leveraging regression analysis, businesses can gain a competitive advantage and increase their efficiency and effectiveness. In an era where consumer preferences and market conditions are in constant flux, regression analysis remains an essential tool for marketers looking to stay ahead of the curve.

Michael Lieberman is the Founder and President of Multivariate Solutions, a statistical and market research consulting firm that works with major advertising, public relations, and political strategy firms. He can be reached at +1 646 257 3794, or [email protected].

What Is Regression Analysis in Business Analytics?

- 14 Dec 2021

Countless factors impact every facet of business. How can you consider those factors and know their true impact?

Imagine you seek to understand the factors that influence people’s decision to buy your company’s product. They range from customers’ physical locations to satisfaction levels among sales representatives to your competitors' Black Friday sales.

Understanding the relationships between each factor and product sales can enable you to pinpoint areas for improvement, helping you drive more sales.

To learn how each factor influences sales, you need to use a statistical analysis method called regression analysis.

If you aren’t a business or data analyst, you may not run regressions yourself, but knowing how analysis works can provide important insight into which factors impact product sales and, thus, which are worth improving.


Foundational Concepts for Regression Analysis

Before diving into regression analysis, you need to build foundational knowledge of statistical concepts and relationships.

Independent and Dependent Variables

Start with the basics. What relationship are you aiming to explore? Try formatting your answer like this: “I want to understand the impact of [the independent variable] on [the dependent variable].”

The independent variable is the factor that could impact the dependent variable. For example, “I want to understand the impact of employee satisfaction on product sales.”

In this case, employee satisfaction is the independent variable, and product sales is the dependent variable. Identifying the dependent and independent variables is the first step toward regression analysis.

Correlation vs. Causation

One of the cardinal rules of statistically exploring relationships is to never assume correlation implies causation. In other words, just because two variables move in the same direction doesn’t mean one caused the other to occur.

If two or more variables are correlated, their directional movements are related. If two variables are positively correlated, it means that as one goes up or down, so does the other. Alternatively, if two variables are negatively correlated, one goes up while the other goes down.

A correlation’s strength can be quantified by calculating the correlation coefficient, sometimes represented by r. The correlation coefficient falls between negative one and positive one.

r = -1 indicates a perfect negative correlation.

r = 1 indicates a perfect positive correlation.

r = 0 indicates no correlation.
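As a rough illustration, the correlation coefficient can be computed directly from its definition. The sketch below is plain Python with invented data; the function name `pearson_r` and the variable names are assumptions made for this example.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariation / (spread_x * spread_y)


satisfaction = [1, 2, 3, 4, 5]  # invented scores

r_positive = pearson_r(satisfaction, [2, 4, 6, 8, 10])  # rises in lockstep
r_negative = pearson_r(satisfaction, [10, 8, 6, 4, 2])  # falls in lockstep
r_none = pearson_r(satisfaction, [4, 1, 0, 1, 4])       # U-shaped, no linear trend

print(r_positive, r_negative, r_none)
```

The third case is worth noting: the two variables are clearly related, but because the pattern is U-shaped rather than a straight line, r comes out at zero. The coefficient only measures linear association.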

Causation means that one variable caused the other to occur. Proving a causal relationship between variables requires a true experiment with a control group (which doesn’t receive the independent variable) and an experimental group (which receives the independent variable).

While regression analysis provides insights into relationships between variables, it doesn’t prove causation. It can be tempting to assume that one variable caused the other—especially if you want it to be true—which is why you need to keep this in mind any time you run regressions or analyze relationships between variables.

With the basics under your belt, here’s a deeper explanation of regression analysis so you can leverage it to drive strategic planning and decision-making.


What Is Regression Analysis?

Regression analysis is the statistical method used to determine the structure of a relationship between two variables (single variable linear regression) or three or more variables (multiple regression).

According to the Harvard Business School Online course Business Analytics, regression is used for two primary purposes:

- To study the magnitude and structure of the relationship between variables

- To forecast a variable based on its relationship with another variable

Both of these insights can inform strategic business decisions.

“Regression allows us to gain insights into the structure of that relationship and provides measures of how well the data fit that relationship,” says HBS Professor Jan Hammond, who teaches Business Analytics, one of three courses that comprise the Credential of Readiness (CORe) program. “Such insights can prove extremely valuable for analyzing historical trends and developing forecasts.”

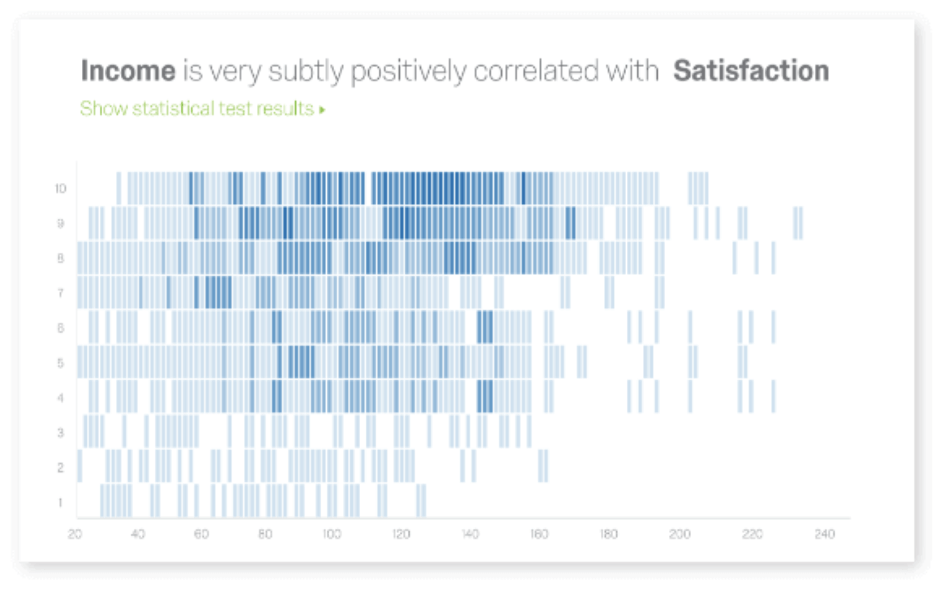

One way to think of regression is by visualizing a scatter plot of your data with the independent variable on the X-axis and the dependent variable on the Y-axis. The regression line is the line that best fits the scatter plot data. The regression equation represents the line’s slope and the relationship between the two variables, along with an estimation of error.

Physically creating this scatter plot can be a natural starting point for parsing out the relationships between variables.

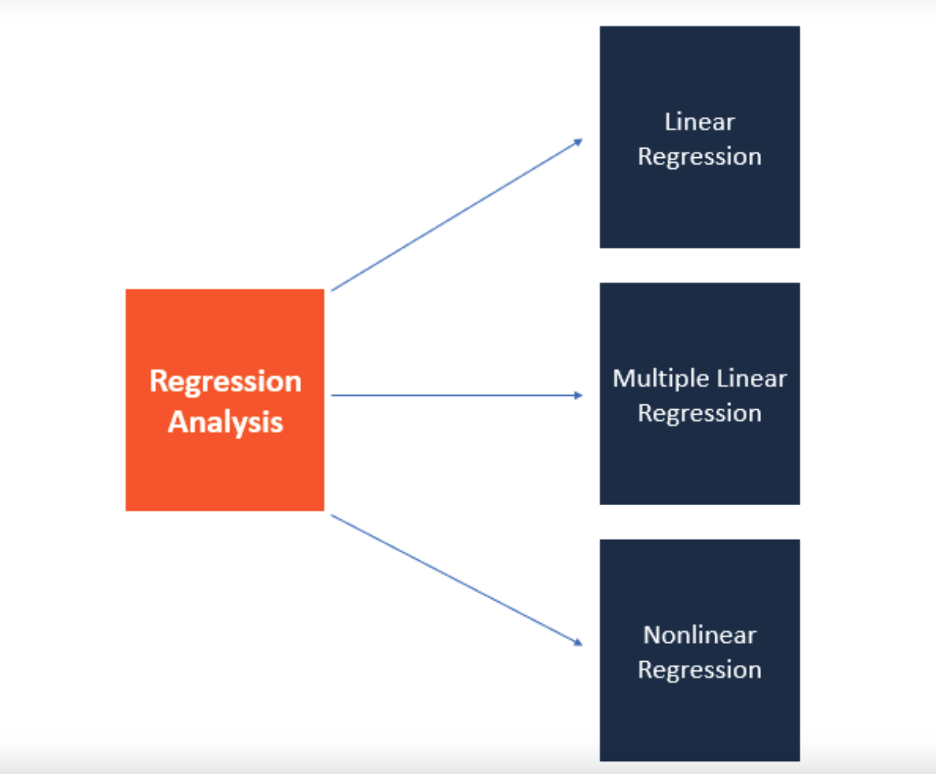

Types of Regression Analysis

There are two types of regression analysis: single variable linear regression and multiple regression.

Single variable linear regression is used to determine the relationship between two variables: the independent and dependent. The equation for a single variable linear regression looks like this:

$$Y = \alpha + \beta x + \varepsilon, \qquad \hat{y} = \alpha + \beta x$$

In the equation:

- ŷ is the expected value of Y (the dependent variable) for a given value of X (the independent variable).

- x is the independent variable.

- α is the Y-intercept, the point at which the regression line intersects with the vertical axis.

- β is the slope of the regression line, or the average change in the dependent variable as the independent variable increases by one.

- ε is the error term, equal to Y – ŷ, or the difference between the actual value of the dependent variable and its expected value.
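The terms defined above can be estimated from data with ordinary least squares. The following is a minimal sketch in plain Python, assuming invented employee satisfaction and sales figures; `fit_line` is a hypothetical helper written for this example, not a function from any particular statistics package.

```python
def fit_line(xs, ys):
    """Return (alpha, beta) for the least-squares line y_hat = alpha + beta * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    beta = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    alpha = mean_y - beta * mean_x  # the fitted line passes through the means
    return alpha, beta


# Invented data: employee satisfaction score (x) and product sales (y).
satisfaction = [1, 2, 3, 4, 5]
sales = [12, 15, 21, 24, 28]

alpha, beta = fit_line(satisfaction, sales)

# Expected values (y_hat) and error terms (Y - y_hat) for each observation.
y_hat = [alpha + beta * x for x in satisfaction]
residuals = [y - yh for y, yh in zip(sales, y_hat)]

print(f"intercept alpha = {alpha:.2f}, slope beta = {beta:.2f}")
print("residuals:", [round(e, 2) for e in residuals])
```

With these made-up numbers the slope works out to 4.1, meaning each one-point rise in satisfaction is associated with about 4.1 more units of sales on average. The residuals always sum to zero for a least-squares line that includes an intercept.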

Multiple regression, on the other hand, is used to determine the relationship between three or more variables: the dependent variable and at least two independent variables. The multiple regression equation looks complex but is similar to the single variable linear regression equation:

$$\hat{y} = \alpha + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_k x_k, \qquad Y = \hat{y} + \varepsilon$$

Each component of this equation represents the same thing as in the previous equation, with the addition of the subscript k, which is the total number of independent variables being examined. For each independent variable you include in the regression, multiply the slope of the regression line by the value of the independent variable, and add it to the rest of the equation.
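The "multiply each slope by its variable and add it up" rule described above translates directly into code. In this sketch the function `predict` and all coefficient values are hypothetical; in practice the coefficients would come from the output of your statistical program.

```python
def predict(alpha, betas, xs):
    """Expected value y_hat = alpha + beta_1 * x_1 + ... + beta_k * x_k."""
    if len(betas) != len(xs):
        raise ValueError("need exactly one value per independent variable")
    return alpha + sum(b * x for b, x in zip(betas, xs))


# Hypothetical fitted model with k = 3 independent variables.
alpha = 50.0
betas = [2.0, -1.5, 0.5]

# One observation: a value for each of the three independent variables.
observation = [10, 4, 20]

y_hat = predict(alpha, betas, observation)
print(y_hat)  # 50 + 2.0*10 - 1.5*4 + 0.5*20
```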

How to Run Regressions

You can use a host of statistical programs—such as Microsoft Excel, SPSS, and Stata—to run both single variable linear and multiple regressions. If you’re interested in hands-on practice with this skill, Business Analytics teaches learners how to create scatter plots and run regressions in Microsoft Excel, as well as make sense of the output and use it to drive business decisions.

Calculating Confidence and Accounting for Error

It’s important to note: This overview of regression analysis is introductory and doesn’t delve into calculations of confidence level, significance, variance, and error. When working in a statistical program, these calculations may be provided or require that you implement a function. When conducting regression analysis, these metrics are important for gauging how significant your results are and how much importance to place on them.

Why Use Regression Analysis?

Once you’ve generated a regression equation for a set of variables, you effectively have a roadmap for the relationship between your independent and dependent variables. If you input a specific X value into the equation, you can see the expected Y value.

This can be critical for predicting the outcome of potential changes, allowing you to ask, “What would happen if this factor changed by a specific amount?”

Returning to the earlier example, running a regression analysis could allow you to find the equation representing the relationship between employee satisfaction and product sales. You could input a higher level of employee satisfaction and see how sales might change accordingly. This information could lead to improved working conditions for employees, backed by data that shows the tie between high employee satisfaction and sales.

Whether predicting future outcomes, determining areas for improvement, or identifying relationships between seemingly unconnected variables, understanding regression analysis can enable you to craft data-driven strategies and determine the best course of action with all factors in mind.

Do you want to become a data-driven professional? Explore our eight-week Business Analytics course and our three-course Credential of Readiness (CORe) program to deepen your analytical skills and apply them to real-world business problems.

The complete guide to regression analysis.

What is regression analysis and why is it useful? While most of us have heard the term, understanding regression analysis in detail may be something you need to brush up on. Here’s what you need to know about this popular method of analysis.

When you rely on data to drive and guide business decisions, as well as predict market trends, just gathering and analyzing what you find isn’t enough — you need to ensure it’s relevant and valuable.

The challenge, however, is that so many variables can influence business data: market conditions, economic disruption, even the weather! As such, it’s essential you know which variables are affecting your data and forecasts, and what data you can discard.

And one of the most effective ways to determine data value and monitor trends (and the relationships between them) is to use regression analysis, a set of statistical methods used for the estimation of relationships between independent and dependent variables.

In this guide, we’ll cover the fundamentals of regression analysis, from what it is and how it works to its benefits and practical applications.


What is regression analysis?

Regression analysis is a statistical method. It’s used for analyzing different factors that might influence an objective – such as the success of a product launch, business growth, a new marketing campaign – and determining which factors are important and which ones can be ignored.

Regression analysis can also help leaders understand how different variables impact each other and what the outcomes are. For example, when forecasting financial performance, regression analysis can help leaders determine how changes in the business can influence revenue or expenses in the future.

Running an analysis of this kind, you might find that there’s a high correlation between the number of marketers employed by the company, the leads generated, and the opportunities closed.

This seems to suggest that a high number of marketers and a high number of leads generated influences sales success. But do you need both factors to close those sales? By analyzing the effects of these variables on your outcome, you might learn that when leads increase but the number of marketers employed stays constant, there is no impact on the number of opportunities closed, but if the number of marketers increases, leads and closed opportunities both rise.

Regression analysis can help you tease out these complex relationships so you can determine which areas you need to focus on in order to get your desired results, and avoid wasting time with those that have little or no impact. In this example, that might mean hiring more marketers rather than trying to increase leads generated.

How does regression analysis work?

Regression analysis starts with variables that are categorized into two types: dependent and independent variables. The variables you select depend on the outcomes you’re analyzing.

Understanding variables:

1. Dependent variable

This is the main variable that you want to analyze and predict. For example, operational (O) data such as your quarterly or annual sales, or experience (X) data such as your net promoter score (NPS) or customer satisfaction score (CSAT).

These variables are also called response variables, outcome variables, or left-hand-side variables (because they appear on the left-hand side of a regression equation).

There are three easy ways to identify them:

- Is the variable measured as an outcome of the study?

- Does the variable depend on another in the study?

- Do you measure the variable only after other variables are altered?

2. Independent variable

Independent variables are the factors that could affect your dependent variables. For example, a price rise in the second quarter could make an impact on your sales figures.

You can identify independent variables with the following list of questions:

- Is the variable manipulated, controlled, or used as a subject grouping method by the researcher?

- Does this variable come before the other variable in time?

- Are you trying to understand whether or how this variable affects another?

Independent variables are often referred to differently in regression depending on the purpose of the analysis. You might hear them called:

Explanatory variables

Explanatory variables are those which explain an event or an outcome in your study. For example, explaining why your sales dropped or increased.

Predictor variables

Predictor variables are used to predict the value of the dependent variable. For example, predicting how much sales will increase when new product features are rolled out.

Experimental variables

These are variables that can be manipulated or changed directly by researchers to assess the impact. For example, assessing how different product pricing ($10 vs $15 vs $20) will impact the likelihood to purchase.

Subject variables (also called fixed effects)

Subject variables can’t be changed directly, but vary across the sample. For example, age, gender, or income of consumers.

Unlike experimental variables, you can’t randomly assign or change subject variables, but you can design your regression analysis to determine the different outcomes of groups of participants with the same characteristics. For example, ‘how do price rises impact sales based on income?’

Carrying out regression analysis

So regression is about the relationships between dependent and independent variables. But how exactly do you do it?

Assuming you have your data collection done already, the first and foremost thing you need to do is plot your results on a graph. Doing this makes interpreting regression analysis results much easier as you can clearly see the correlations between dependent and independent variables.

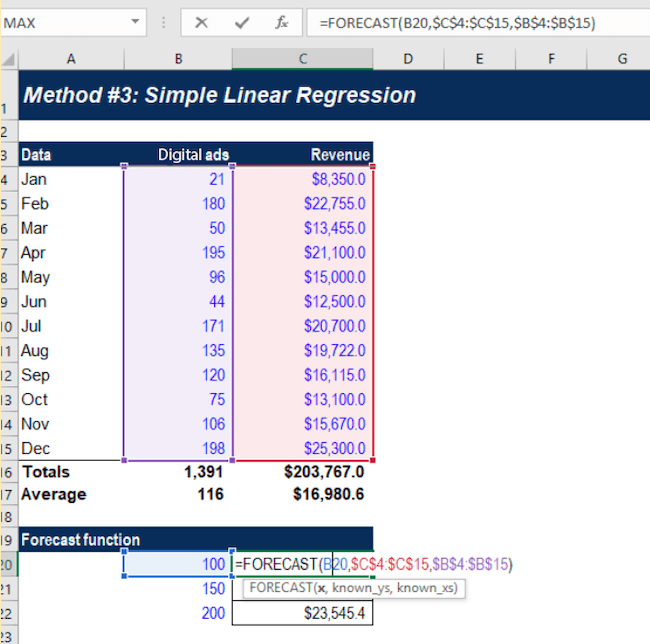

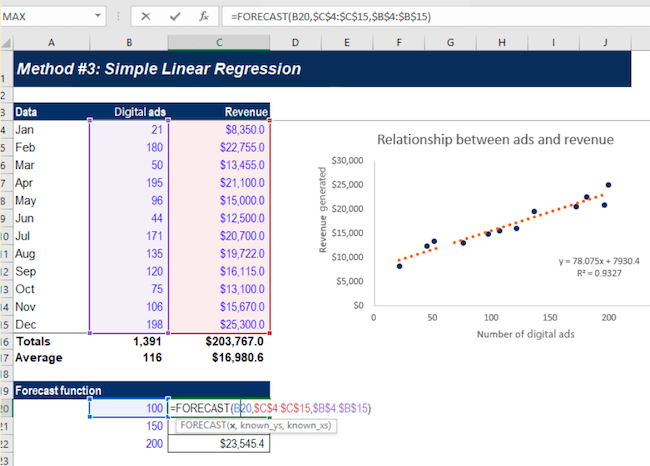

Let’s say you want to carry out a regression analysis to understand the relationship between the number of ads placed and revenue generated.

On the Y-axis, you place the revenue generated. On the X-axis, the number of digital ads. By plotting the information on the graph, and drawing a line (called the regression line) through the middle of the data, you can see the relationship between the number of digital ads placed and revenue generated.

This regression line is the line that provides the best description of the relationship between your independent variables and your dependent variable. In this example, we’ve used a simple linear regression model.

Statistical analysis software can draw this line for you and precisely calculate the regression line. The software then provides a formula for the slope of the line, adding further context to the relationship between your dependent and independent variables.

Simple linear regression analysis

A simple linear model uses a single straight line to determine the relationship between a single independent variable and a dependent variable.

This regression model is mostly used when you want to determine the relationship between two variables (like price increases and sales) or the value of the dependent variable at certain points of the independent variable (for example the sales levels at a certain price rise).

While linear regression is useful, it does require you to make some assumptions.

For example, it requires you to assume that:

- the data was collected using a statistically valid sample collection method that is representative of the target population

- the observed relationship between the variables can’t be explained by a ‘hidden’ third variable – in other words, there are no spurious correlations

- the relationship between the independent variable and dependent variable is linear – meaning that the best fit along the data points is a straight line and not a curved one

Multiple regression analysis

As the name suggests, multiple regression analysis is a type of regression that uses multiple variables. It uses multiple independent variables to predict the outcome of a single dependent variable. Of the various kinds of multiple regression, multiple linear regression is one of the best-known.

Multiple linear regression is a close relative of the simple linear regression model; the difference is that it looks at the impact of several independent variables on one dependent variable. Like simple linear regression, multiple regression analysis also requires you to make some basic assumptions.

For example, you will be assuming that:

- there is a linear relationship between the dependent and independent variables (it creates a straight line and not a curve through the data points)

- the independent variables aren’t highly correlated in their own right

An example of multiple linear regression would be an analysis of how marketing spend, revenue growth, and general market sentiment affect the share price of a company.

With multiple linear regression models you can estimate how these variables will influence the share price, and to what extent.

Multivariate linear regression

Multivariate linear regression involves more than one dependent variable as well as multiple independent variables, making it more complicated than linear or multiple linear regressions. However, this also makes it much more powerful and capable of making predictions about complex real-world situations.

For example, if an organization wants to establish or estimate how the COVID-19 pandemic has affected employees in its different markets, it can use multivariate linear regression, with the different geographical regions as dependent variables and the different facets of the pandemic as independent variables (such as mental health self-rating scores, proportion of employees working at home, lockdown durations and employee sick days).

Through multivariate linear regression, you can look at relationships between variables in a holistic way and quantify the relationships between them. As you can clearly visualize those relationships, you can make adjustments to dependent and independent variables to see which conditions influence them. Overall, multivariate linear regression provides a more realistic picture than looking at a single variable.

However, multivariate techniques are complex and involve high-level mathematics, so in practice you need a statistical program to analyze the data.
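To make "more than one dependent variable" concrete, here is a minimal sketch that fits two outcomes at once. The variable roles (two workforce outcomes explained by two pandemic-related predictors) and all numbers are illustrative assumptions chosen for this example; the data is synthetic.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 300

# Hypothetical independent variables
home_share = rng.uniform(0, 1, n)       # proportion of time worked at home
lockdown_weeks = rng.uniform(0, 20, n)  # weeks spent under lockdown
X = np.column_stack([np.ones(n), home_share, lockdown_weeks])

# Assumed coefficient matrix: one column per dependent variable
# column 0: wellbeing score, column 1: sick days (both made up)
B_true = np.array([[7.0, 3.0],
                   [1.0, -1.0],
                   [-0.1, 0.2]])
Y = X @ B_true + rng.normal(0, 0.3, (n, 2))

# lstsq accepts a 2-D target, so both outcomes are estimated in one call
B_hat, *_ = np.linalg.lstsq(X, Y, rcond=None)
print(np.round(B_hat, 2))
```

With plain least squares this gives the same coefficients as fitting each outcome separately. Dedicated multivariate methods go further by also modelling how the outcomes' errors relate to each other.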

Logistic regression

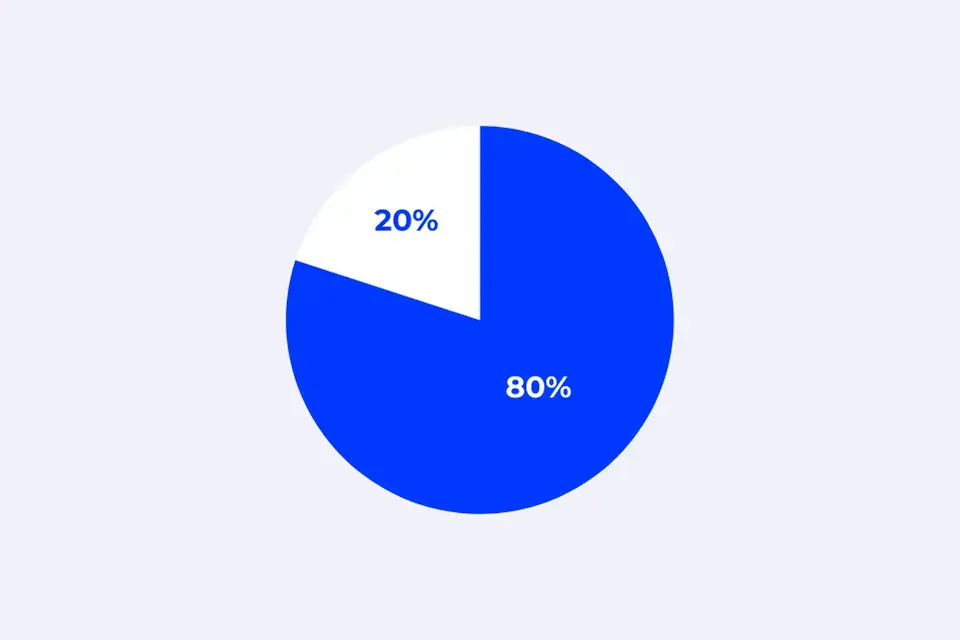

Logistic regression models the probability of a binary outcome based on independent variables.

So, what is a binary outcome? It’s when there are only two possible scenarios: either the event happens (1) or it doesn’t (0). Yes/no and pass/fail outcomes are typical examples. In other words, the outcome can be described as falling into one of two categories.

Logistic regression makes predictions based on independent variables that are assumed or known to have an influence on the outcome. For example, the probability of a sports team winning their game might be affected by independent variables like weather, day of the week, whether they are playing at home or away and how they fared in previous matches.
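The sports example can be sketched as follows. This is a toy fit on simulated matches, using Newton's method to maximize the likelihood; the two predictors and the coefficients used to simulate the data are assumptions for illustration only.

```python
import numpy as np

def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(2)
n = 1000

# Hypothetical predictors for each simulated match
home = rng.integers(0, 2, n)        # 1 = playing at home, 0 = away
recent_form = rng.uniform(0, 1, n)  # share of recent matches won
X = np.column_stack([np.ones(n), home, recent_form])

# Assumed coefficients, used only to simulate win/loss outcomes
true_beta = np.array([-1.5, 1.0, 2.0])
won = (rng.uniform(0, 1, n) < sigmoid(X @ true_beta)).astype(float)

# Newton's method: repeatedly solve for the step that improves the likelihood
beta = np.zeros(3)
for _ in range(25):
    p = sigmoid(X @ beta)
    gradient = X.T @ (won - p)
    hessian = X.T @ (X * (p * (1 - p))[:, None])
    beta = beta + np.linalg.solve(hessian, gradient)

# Predicted win probability for average recent form, at home vs. away
p_home = float(sigmoid(beta @ np.array([1.0, 1.0, 0.5])))
p_away = float(sigmoid(beta @ np.array([1.0, 0.0, 0.5])))
print("home:", round(p_home, 2), "away:", round(p_away, 2))
```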

What are some common mistakes with regression analysis?

Across the globe, businesses are increasingly relying on quality data and insights to drive decision-making — but to make accurate decisions, it’s important that the data collected and statistical methods used to analyze it are reliable and accurate.

Using the wrong data or the wrong assumptions can result in poor decision-making, lead to missed opportunities to improve efficiency and savings, and — ultimately — damage your business long term.

- Assumptions

When running regression analysis, be it a simple linear or multiple regression, it’s really important to check that the assumptions your chosen method requires have been met. If your data points don’t conform to a straight line of best fit, for example, you need to transform the data or choose a model that accommodates the non-linear relationship. For example, income data is typically heavily right-skewed, so you can use the natural log of income as your variable and then convert the model’s output back to the original scale after the model is created.
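The income example can be sketched like this: fit a straight line to the natural log of income, then exponentiate predictions to return to the original scale. The data is simulated, and the predictor (years of experience) and growth rate are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)

# Hypothetical data: income grows multiplicatively with experience
years = rng.uniform(0, 30, 200)
income = 30000 * np.exp(0.04 * years) * np.exp(rng.normal(0, 0.1, 200))

# Fit a line to log(income) instead of income
slope, intercept = np.polyfit(years, np.log(income), 1)

# Convert a prediction back to the original scale with exp()
predicted_income_10y = float(np.exp(intercept + slope * 10))

print("estimated growth per year:", round(float(slope), 4))
print("predicted income at 10 years:", round(predicted_income_10y))
```

One caveat on the back-transformation: exponentiating a prediction made on the log scale gives something closer to a typical (median) income than an average, so some analysts apply a further correction.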

- Correlation vs. causation

It’s a well-worn phrase that bears repeating – correlation does not equal causation. While variables that are linked by causality will usually show correlation, the reverse is not always true. Moreover, there is no statistic that can determine causality (although the design of your study overall can).

If you observe a correlation in your results, such as in the first example we gave in this article where there was a correlation between leads and sales, you can’t assume that one thing has influenced the other. Instead, you should use it as a starting point for investigating the relationship between the variables in more depth.

- Choosing the wrong variables to analyze

Before you use any kind of statistical method, it’s important to understand the subject you’re researching in detail. Doing so means you’re making informed choices of variables and you’re not overlooking something important that might have a significant bearing on your dependent variable.

- Model building

The variables you include in your analysis are just as important as the variables you choose to exclude. That’s because the strength of each independent variable is influenced by the other variables in the model. Other techniques, such as Key Drivers Analysis, are able to account for these variable interdependencies.
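A small simulation shows why the choice of variables matters. When two predictors are correlated, leaving one out changes the estimated coefficient of the other. The variable names and numbers are hypothetical, and the data is synthetic.

```python
import numpy as np

rng = np.random.default_rng(8)
n = 2000

# Two correlated hypothetical predictors
ad_spend = rng.normal(0, 1, n)
seasonal_demand = 0.8 * ad_spend + rng.normal(0, 0.6, n)

# Assumed relationship: both predictors drive conversions
conversions = 1.0 * ad_spend + 2.0 * seasonal_demand + rng.normal(0, 0.5, n)

def fit(columns, y):
    """Least-squares fit with an intercept; returns the slope coefficients."""
    design = np.column_stack([np.ones(len(y))] + columns)
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1:]

b_full = fit([ad_spend, seasonal_demand], conversions)
b_alone = fit([ad_spend], conversions)

print("ad spend coefficient, both variables included:", round(float(b_full[0]), 2))
print("ad spend coefficient, seasonality left out:   ", round(float(b_alone[0]), 2))
```

In the second model, ad spend absorbs the effect of the omitted seasonal variable, so its coefficient overstates what ad spend contributes on its own.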

Benefits of using regression analysis

There are several benefits to using regression analysis to judge how changing variables will affect your business and to ensure you focus on the right things when forecasting.

Here are just a few of those benefits:

Make accurate predictions

Regression analysis is commonly used when forecasting and forward planning for a business. For example, when predicting sales for the year ahead, a number of different variables will come into play to determine the eventual result.

Regression analysis can help you determine which of these variables are likely to have the biggest impact based on previous events and help you make more accurate forecasts and predictions.

Identify inefficiencies

Using a regression equation a business can identify areas for improvement when it comes to efficiency, either in terms of people, processes, or equipment.

For example, regression analysis can help a car manufacturer determine order numbers based on external factors like the economy or environment.

Using that regression equation, they can then determine how many members of staff and how much equipment they need to meet orders.

Drive better decisions

Improving processes or business outcomes is always on the minds of owners and business leaders, but without actionable data, they’re simply relying on instinct, and this doesn’t always work out.

This is particularly true when it comes to issues of price. For example, to what extent will raising the price (and to what level) affect next quarter’s sales?

There’s no way to know this without data analysis. Regression analysis can help provide insights into the correlation between price rises and sales based on historical data.

How do businesses use regression? A real-life example

Marketing and advertising spending are common topics for regression analysis. Companies use regression when trying to assess the value of ad spend and marketing spend on revenue.

A typical example is using a regression equation to assess the correlation between ad costs and conversions of new customers. In this instance,

- our dependent variable (the factor we’re trying to assess the outcomes of) will be our conversions

- the independent variable (the factor we’ll change to assess how it changes the outcome) will be the daily ad spend

- the regression equation will try to determine whether an increase in ad spend has a direct correlation with the number of conversions we have

The analysis is relatively straightforward — using historical data from an ad account, we can use daily data to judge ad spend vs conversions and how changes to the spend alter the conversions.

By assessing this data over time, we can make predictions not only on whether increasing ad spend will lead to increased conversions but also what level of spending will lead to what increase in conversions. This can help to optimize campaign spend and ensure marketing delivers good ROI.

This is an example of a simple linear model. If we wanted to build a more complex regression equation, we could also factor in other independent variables such as seasonality, GDP, and the current reach of our chosen advertising networks.

By increasing the number of independent variables, we can get a better understanding of whether ad spend is resulting in an increase in conversions, whether it’s exerting an influence in combination with another set of variables, or if we’re dealing with a correlation with no causal impact – which might be useful for predictions anyway, but isn’t a lever we can use to increase sales.

Using the estimated coefficient of each independent variable, we can more accurately predict how spend will change the conversion rate of advertising.

Regression analysis tools

Regression analysis is an important tool when it comes to better decision-making and improved business outcomes. To get the best out of it, you need to invest in the right kind of statistical analysis software.

The best option is likely to be one that sits at the intersection of powerful statistical analysis and intuitive ease of use, as this will empower everyone from beginners to expert analysts to uncover meaning from data, identify hidden trends and produce predictive models without statistical training being required.

To help prevent costly errors, choose a tool that automatically runs the right statistical tests and visualizations and then translates the results into simple language that anyone can put into action.

With software that’s both powerful and user-friendly, you can isolate key experience drivers, understand what influences the business, apply the most appropriate regression methods, identify data issues, and much more.

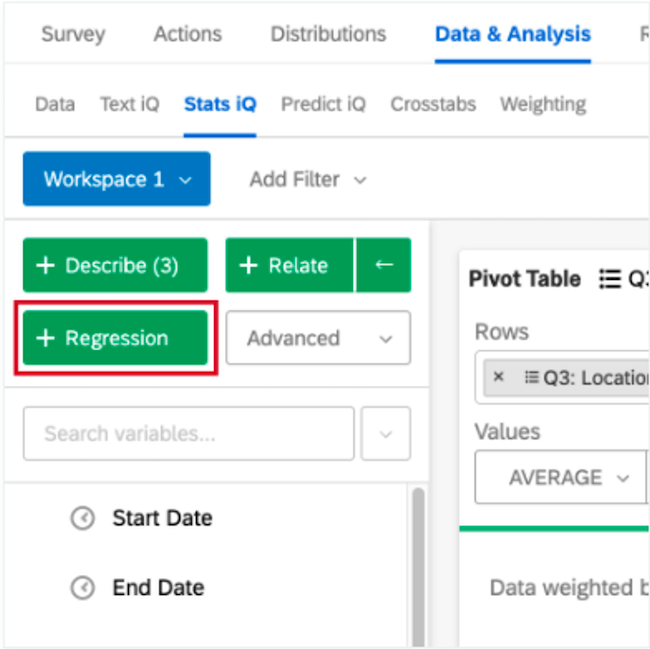

With Qualtrics’ Stats iQ™, you don’t have to worry about the regression equation because our statistical software will run the appropriate equation for you automatically based on the variable type you want to monitor. You can also use several equations, including linear regression and logistic regression, to gain deeper insights into business outcomes and make more accurate, data-driven decisions.

A Refresher on Regression Analysis

Understanding one of the most important types of data analysis.

You probably know by now that whenever possible you should be making data-driven decisions at work . But do you know how to parse through all the data available to you? The good news is that you probably don’t need to do the number crunching yourself (hallelujah!) but you do need to correctly understand and interpret the analysis created by your colleagues. One of the most important types of data analysis is called regression analysis.

- Amy Gallo is a contributing editor at Harvard Business Review, cohost of the Women at Work podcast, and the author of two books: Getting Along: How to Work with Anyone (Even Difficult People) and the HBR Guide to Dealing with Conflict. She writes and speaks about workplace dynamics. Watch her TEDx talk on conflict and follow her on LinkedIn.

Regression Analysis in Market Research

by Richard Nehrboss SR | Mar 14, 2023 | Customer Experience Management , Financial Services , Research Methodology

What is Regression Analysis & How Is It Used?

Regression analysis helps organizations make sense of priority areas and what factors have the most impact and influence on their customer relationships. It allows researchers and brands to read between the lines of the survey data. This article will help you understand the definition of regression analysis, how it is commonly used, and the benefits of using regression research.

Regression Analysis: Definition

Regression analysis is a common statistical method that helps organizations understand the relationship between independent variables and dependent variables.

- Dependent variable: The main factor you want to measure or understand.

- Independent variables: The secondary factors you believe to have an influence on your dependent variable.

More specifically, regression analysis tells you which factors are most important, which to disregard, and how the factors affect one another.

Importance of Regression Analysis

There are several benefits of regression analysis, most of which center around using it to achieve data-driven decision-making.

The advantages of using regression analysis in research include:

- Great tool for forecasting: While there is no such thing as a magic crystal ball, regression research is a great approach to measuring predictive analytics and forecasting.

- Focus attention on priority areas of improvement: Regression statistical analysis helps businesses and organizations prioritize efforts to improve customer satisfaction metrics such as net promoter score, customer effort score, and customer loyalty. Using regression analysis in quantitative research provides the opportunity to take corrective actions on the items that will most positively improve overall satisfaction.

When to Use Regression Analysis

A common use of regression analysis is understanding how the likelihood to recommend a product or service (dependent variable) is impacted by changes in wait time, price, and quantity purchased (presumably independent variables). A popular way to measure this is with net promoter score (NPS) as it is one of the most commonly used metrics in market research.

Net promoter score formula

The score is very telling to help your business understand how many raving fans your brand has in comparison to your key competitors and industry benchmarks. While our online survey company always recommends using an open-ended question after NPS to gather context to help understand the driving forces behind the score, sometimes it does not tell the whole story.
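For reference, NPS is conventionally calculated from a 0 to 10 "likelihood to recommend" question as the percentage of promoters (ratings of 9 or 10) minus the percentage of detractors (ratings of 0 to 6). A minimal sketch with made-up ratings:

```python
def net_promoter_score(ratings):
    """Return NPS on a -100..100 scale from 0-10 likelihood-to-recommend ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 to 6
    return 100.0 * (promoters - detractors) / len(ratings)

# Hypothetical survey responses
sample_ratings = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
nps = net_promoter_score(sample_ratings)
print(nps)  # 5 promoters, 3 detractors, 10 responses -> 20.0
```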

Regression Analysis Example in Business

Keeping with the bank survey from above, let’s say in the same survey you ask a series of customer satisfaction questions related to respondents’ experience with the bank. You believe the interest rates and customer service are good at your bank but you think there might be some underlying drivers really pushing your high NPS. In this example, likelihood to recommend, or NPS, is your dependent variable A. Your more specific follow-up satisfaction questions are independent variables B, C, D, E, F, G.

Through your regression analysis, you find out that independent variable C (friendliness of the staff) has the most significant effect on NPS. This means how the customer rates the friendliness of the staff members will have the largest overall impact on how likely they would be to recommend your bank. This is very different from what customers said in the open-ended comment about interest rates and customer service. However, as the regression analysis shows, staff friendliness is the strongest driver.
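One common way to compare drivers like these is to standardize every variable and rank the resulting coefficients by size. The sketch below does that on simulated survey data. The driver names and effect sizes are assumptions for illustration, and this is not necessarily the method any particular research firm uses.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 500

# Hypothetical satisfaction ratings for three survey questions
driver_names = ["interest_rates", "staff_friendliness", "branch_wait_time"]
ratings = rng.normal(0, 1, (n, 3))

# Assumed effects: staff friendliness matters most in this toy data
recommend = ratings @ np.array([0.2, 0.8, 0.3]) + rng.normal(0, 1, n)

def zscore(a):
    """Rescale to mean 0 and standard deviation 1."""
    return (a - a.mean(axis=0)) / a.std(axis=0)

# With standardized variables no intercept is needed and coefficients are comparable
beta, *_ = np.linalg.lstsq(zscore(ratings), zscore(recommend), rcond=None)

ranking = sorted(zip(driver_names, beta), key=lambda pair: abs(pair[1]), reverse=True)
for name, coefficient in ranking:
    print(f"{name}: {coefficient:.2f}")
```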

Regression analysis is another tool market research firms use on a daily basis with their clients to help brands understand survey data from customers. The benefit of using a third-party market research firm is that you can leverage their expertise to tell you the “so what” of your customer survey data.

At The MSR Group, we use regression analysis to help our clients understand the relationship between independent variables and dependent variables. We have worked with banks to understand the impact that key index scores from the markets had on sales projections. We also help our clients prioritize efforts to improve customer satisfaction metrics such as net promoter score, customer effort score, and customer loyalty.

If you are interested in using regression analysis to help your business make data-driven decisions, contact The MSR Group by filling out an online contact form or emailing [email protected]. Regression analysis is a powerful tool that can help executives and management make data-driven decisions. It can help them understand the relationship between independent variables and dependent variables, and how each factor affects one another. It can also help them focus their attention on priority areas of improvement, and use predictive analytics and forecasting to understand how their revenue might be impacted in future quarters.

At The MSR Group, we use regression analysis to help our clients understand the relationship between independent variables and dependent variables, and prioritize efforts to improve customer satisfaction metrics. We have worked with banks to understand the impact that key index scores from the markets had on sales projections, and whether increasing prices would have any impact on repeat customer purchases. Using regression analysis in quantitative research provides the opportunity to take corrective actions on the items that will most positively improve overall satisfaction.

Regression analysis: Precise Forecasts and Predictions

Appinio Research · 03.07.2023 · 8min read

Regression analysis plays a vital role in contemporary market research, offering a powerful tool for making accurate forecasts and addressing intricate interdependencies within challenges and decisions. It enables us to predict user behavior and gain valuable insights for optimizing business strategies. This article aims to elucidate the concept of regression analysis, delve into its working principles, and explore its applications in the field of market research.

What is regression analysis?

Regression analysis serves as a statistical method and acts as a translator within the realm of market research, enabling the conversion of ambiguous or complex data into concise and understandable information.

By investigating the relationship between two or more variables, regression analysis sheds light on crucial interactions, such as the correlation between user behavior and screen time in smartphone applications.

What does regression analysis do?

Regression analysis serves multiple purposes.

- It identifies correlations between two or more variables, allowing us to understand and visualize their interrelationship.

- It has the capability to forecast potential changes when variables are altered.

- It can capture values at specific time points, enabling us to examine the impact of fluctuating parameters on the overall outcomes.

Origins of Regression Analysis

Regression analysis traces its roots back to the late 19th century when it was pioneered by the renowned British statistician, Sir Francis Galton. Galton explored variables within human genetics and introduced the concept of regression.

By examining the relationship between parental height and the height of their offspring, Galton laid the foundation for linear regression analysis. Since then, this methodology has found extensive applications not only in market research but also in diverse fields such as psychology, sociology, medicine, and economics.

Precise market analyses with Appinio

Appinio leverages a variety of market research methods to get you the best results for your market research needs. Do you want to determine the potential of a new product or service before launching it onto the market? Then the TURF analysis can help.

Conjoint analysis, on the other hand, collects consumer feedback during the development phase to optimize an idea.

Contact Appinio now and together we will find the optimal approach to your challenge!

What types of regression analysis are there?

Regression analysis encompasses various regression models, each serving specific purposes depending on the research objectives and data availability.

Employing a combination of these techniques allows for in-depth insights into complex phenomena. Here are the key regression models:

Simple linear regression

The classic model examines the relationship between a dependent variable and a single independent variable, revealing their association. For instance, it can explore how daily coffee consumption (independent variable) impacts daily energy levels (dependent variable).

Multiple linear regression

Expanding upon simple linear regression, this model incorporates multiple independent variables, such as price, advertising, competition, or sales figures. In the context of energy levels, variables like sleep duration and exercise can be added alongside coffee consumption.

Non-linear regression

When the relationship between variables deviates from a straight line, non-linear regression comes into play. This is particularly useful for phenomena like exponential growth in app downloads or user numbers, where traditional linear models may not be suitable.

Quadratic regression

For complex correlations or patterns characterized by ups and downs, quadratic regression is utilized.

It fits data that follows non-linear trends, such as seasonal sales fluctuations. For instance, it can help determine market saturation points, where growth typically plateaus after an initial rapid expansion.
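As a sketch of the saturation idea, a quadratic can be fitted with `numpy.polyfit` and its turning point read off from the coefficients. The sales figures below are generated from an assumed curve purely for illustration.

```python
import numpy as np

# Hypothetical months since launch and sales that rise, flatten, then fall
months = np.arange(0, 21)
sales = -2.0 * months**2 + 40.0 * months + 100.0

# Fit sales = a*months^2 + b*months + c
a, b, c = np.polyfit(months, sales, 2)

# For a downward-opening parabola (a < 0), the peak is at -b / (2a)
peak_month = -b / (2 * a)
peak_sales = a * peak_month**2 + b * peak_month + c

print("estimated peak month:", round(float(peak_month), 2))
print("estimated sales at peak:", round(float(peak_sales), 1))
```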

Hierarchical regression

Hierarchical regression allows the researcher to control the order of variables in a model, enabling the assessment of each independent variable's contribution to predicting the dependent variable.

For example, in demographic-based analyses, variables like age, gender or education levels may be weighted differently.
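A typical way to run a hierarchical regression is to enter variables in blocks and track how much R-squared improves at each step. The sketch below enters a demographic variable first and a marketing variable second. The variable names and effects are hypothetical, and the data is simulated.

```python
import numpy as np

def r_squared(predictors, y):
    """R-squared of an OLS fit with an intercept."""
    design = np.column_stack([np.ones(len(y)), predictors])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    centered = y - y.mean()
    return 1.0 - (residuals @ residuals) / (centered @ centered)

rng = np.random.default_rng(4)
n = 400

# Hypothetical standardized variables
age = rng.normal(0, 1, n)
ad_exposure = rng.normal(0, 1, n)
purchase_intent = 0.5 * age + 1.0 * ad_exposure + rng.normal(0, 1, n)

# Step 1: demographics only. Step 2: demographics plus ad exposure.
r2_step1 = r_squared(age, purchase_intent)
r2_step2 = r_squared(np.column_stack([age, ad_exposure]), purchase_intent)
r2_change = r2_step2 - r2_step1

print("R-squared, step 1:", round(float(r2_step1), 3))
print("R-squared, step 2:", round(float(r2_step2), 3))
print("added by ad exposure:", round(float(r2_change), 3))
```

The change in R-squared is the share of variation explained by the second block beyond what the first block already explains.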

Multinomial logistic regression

This model examines the probabilities of outcomes when the dependent variable has more than two possible categories, making it valuable for complex questions.

For instance, a music app may predict users' favorite genres based on their previous preferences, listening habits, and other factors like age, gender, or listening time, enabling personalized recommendations.

Multivariate regression analysis

When multiple dependent variables and their interactions with independent variables need to be explored, multivariate regression analysis is employed.

For instance, in the context of fitness data, it can assess how factors such as diet, sleep, or exercise intensity influence variables like weight and health status.

Binary logistic regression

This model comes into play when the dependent variable has only two possible outcomes, such as yes or no. Binary logistic regression can be utilized to predict whether a specific product will be purchased by a target group. Factors like age, income, or gender can further segment the buyer groups.

How is regression analysis used in market research?

The versatility of regression analysis is reflected in its diverse applications within the field of market research . Here are selected examples of how regression analysis is utilized:

- Predicting market trends: Regression analysis enables the exploration of future market trends. For instance, a real estate company can forecast future home prices by considering factors such as property location, size, and age of the property. Similarly, a food company may employ regression analysis to identify the ice cream flavor with the highest sales potential.

- Customer satisfaction: Companies can employ regression analysis to investigate the factors influencing customer satisfaction. By conducting customer surveys and analyzing the data through regression analysis, a customer service company can identify the aspects of their service that have the greatest impact on customer satisfaction.

- Usage behavior: Regression analysis provides insights into the factors influencing the usage of smartphone apps. It allows for differentiation based on variables such as age, gender, or education level, shedding light on the drivers of app usage.

- Advertising impact: Regression analysis measures the effectiveness of advertising campaigns. By analyzing advertising expenditure in relation to product sales, it enables the classification of advertising effectiveness and informs decision-making regarding advertising strategies.

- Measuring market maturity: Regression analysis helps evaluate the reception of a product or service among the target audience. It identifies positive and negative evaluations, as well as determining which features should be emphasized. Through regression analyses, insights can be gained into products and services even before their market launch.

How does a simple linear regression analysis work?

Suppose a company aims to determine the relationship between advertising spending and product sales, requiring a simple linear regression analysis. Here are five possible steps to conduct this analysis:

- Data collection: To commence the analysis, data on advertising spending and product sales needs to be collected.

- Chart generation: The data is plotted on a scatter plot where one axis represents advertising spending and the other represents product sales.

- Determine the regression line: A straight line is fitted so that the sum of the squared vertical distances between the data points and the line is as small as possible. This regression line illustrates the average relationship between the two variables.

- Predicting developments: The regression line serves as the foundation for making future predictions. By changing the value of one variable, you can examine its expected influence on the other variable.

- Interpretation of the results: Valuable insights can be derived from the results. For instance, the analysis may reveal that an additional $10,000 in advertising spending could lead to an average increase in sales of 500 units.
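The steps above can be sketched in a few lines of plain Python using the closed-form least-squares formulas. The five data points are invented so that the result matches the interpretation in the last step. They are not real campaign data.

```python
# Hypothetical data: advertising spend in $1,000s and units sold
ad_spend_k = [10, 20, 30, 40, 50]
units_sold = [1510, 1980, 2500, 3020, 3490]

def fit_line(x, y):
    """Least-squares slope and intercept for a single predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

slope, intercept = fit_line(ad_spend_k, units_sold)

# Interpretation: expected extra units for an additional $10,000 of spend
extra_units_per_10k = slope * 10

# Prediction: expected units at a spend level of $60,000
predicted_units_at_60k = intercept + slope * 60

print(slope, intercept)        # 50.0 units per $1,000, baseline of 1000.0 units
print(extra_units_per_10k)     # 500.0
print(predicted_units_at_60k)  # 4000.0
```

Note that the $60,000 prediction lies outside the observed range of spend, so it should be treated with more caution than predictions inside it.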

Regression analysis: All-rounder in market research

Regression analysis stands as a powerful and versatile tool in the realm of market research. It offers a range of regression models, varying in complexity depending on the research question or objective at hand. Whether investigating the relationship between advertising spend and sales, analyzing usage behavior, or identifying market trends, regression analysis provides data-driven insights that empower informed and sound decision-making.

Interested in running your own regression analysis?

Then register directly on our platform and get in touch with our experts.

Regression Analysis – Methods, Types and Examples

Regression Analysis

Regression analysis is a set of statistical processes for estimating the relationships among variables . It includes many techniques for modeling and analyzing several variables when the focus is on the relationship between a dependent variable and one or more independent variables (or ‘predictors’).

Regression Analysis Methodology

Here is a general methodology for performing regression analysis:

- Define the research question: Clearly state the research question or hypothesis you want to investigate. Identify the dependent variable (also called the response variable or outcome variable) and the independent variables (also called predictor variables or explanatory variables) that you believe are related to the dependent variable.

- Collect data: Gather the data for the dependent variable and independent variables. Ensure that the data is relevant, accurate, and representative of the population or phenomenon you are studying.

- Explore the data: Perform exploratory data analysis to understand the characteristics of the data, identify any missing values or outliers, and assess the relationships between variables through scatter plots, histograms, or summary statistics.

- Choose the regression model: Select an appropriate regression model based on the nature of the variables and the research question. Common regression models include linear regression, multiple regression, logistic regression, polynomial regression, and time series regression, among others.

- Assess assumptions: Check the assumptions of the regression model. Some common assumptions include linearity (the relationship between variables is linear), independence of errors, homoscedasticity (constant variance of errors), and normality of errors. Violation of these assumptions may require additional steps or alternative models.

- Estimate the model: Use a suitable method to estimate the parameters of the regression model. The most common method is ordinary least squares (OLS), which minimizes the sum of squared differences between the observed and predicted values of the dependent variable.

- Interpret the results: Analyze the estimated coefficients, p-values, confidence intervals, and goodness-of-fit measures (e.g., R-squared) to interpret the results. Determine the significance and direction of the relationships between the independent variables and the dependent variable.

- Evaluate model performance: Assess the overall performance of the regression model using appropriate measures, such as R-squared, adjusted R-squared, and root mean squared error (RMSE). These measures indicate how well the model fits the data and how much of the variation in the dependent variable is explained by the independent variables.

- Test assumptions and diagnose problems: Check the residuals (the differences between observed and predicted values) for any patterns or deviations from assumptions. Conduct diagnostic tests, such as examining residual plots, testing for multicollinearity among independent variables, and assessing heteroscedasticity or autocorrelation, if applicable.

- Make predictions and draw conclusions: Once you have a satisfactory model, use it to make predictions on new or unseen data. Draw conclusions based on the results of the analysis, considering the limitations and potential implications of the findings.
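The estimation and evaluation steps of this methodology can be sketched as follows: an ordinary least squares fit plus the fit measures named above (R-squared, adjusted R-squared, RMSE) and the residuals used for diagnostics. The helper name `fit_and_evaluate` and the synthetic data are assumptions made for this example.

```python
import numpy as np

def fit_and_evaluate(X, y):
    """Fit OLS with an intercept and return coefficients plus common fit measures."""
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    ss_res = float(residuals @ residuals)        # unexplained variation
    ss_tot = float(((y - y.mean()) ** 2).sum())  # total variation
    r2 = 1.0 - ss_res / ss_tot
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)
    rmse = float(np.sqrt(ss_res / n))
    return {"coef": coef, "r2": r2, "adj_r2": adj_r2,
            "rmse": rmse, "residuals": residuals}

# Synthetic data generated from an assumed linear relationship
rng = np.random.default_rng(6)
X = rng.normal(0, 1, (150, 2))
y = 4.0 + 2.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(0, 0.5, 150)

result = fit_and_evaluate(X, y)
print("coefficients:", np.round(result["coef"], 2))
print("R-squared:", round(result["r2"], 3), "adjusted:", round(result["adj_r2"], 3))
print("RMSE:", round(result["rmse"], 3))
```

Plotting `result["residuals"]` against the fitted values is a common next step for spotting non-linearity or non-constant variance.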

Types of Regression Analysis

Types of Regression Analysis are as follows:

Linear Regression

Linear regression is the most basic and widely used form of regression analysis. It models the linear relationship between a dependent variable and one or more independent variables. The goal is to find the best-fitting line that minimizes the sum of squared differences between observed and predicted values.

Multiple Regression

Multiple regression extends linear regression by incorporating two or more independent variables to predict the dependent variable. It allows for examining the simultaneous effects of multiple predictors on the outcome variable.

Polynomial Regression

Polynomial regression models non-linear relationships between variables by adding polynomial terms (e.g., squared or cubic terms) to the regression equation. It can capture curved or nonlinear patterns in the data.

Logistic Regression

Logistic regression is used when the dependent variable is binary or categorical. It models the probability of the occurrence of a certain event or outcome based on the independent variables. Logistic regression estimates the coefficients using the logistic function, which transforms the linear combination of predictors into a probability.

Ridge Regression and Lasso Regression

Ridge regression and Lasso regression are techniques used for addressing multicollinearity (high correlation between independent variables) and, in the case of Lasso, variable selection. Both methods add a penalty term to the regression objective that shrinks the coefficients. Ridge regression uses L2 regularization, which shrinks coefficients toward zero without removing them, while Lasso regression uses L1 regularization, which can set the coefficients of less important variables exactly to zero.
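The effect of the L2 penalty can be illustrated with the closed-form ridge solution on two nearly identical predictors. This is a minimal sketch on synthetic data, and the penalty strength of 10 is an arbitrary assumption. Lasso has no closed form and is not shown here.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 100

# Two almost perfectly correlated hypothetical predictors
x1 = rng.normal(0, 1, n)
x2 = x1 + rng.normal(0, 0.05, n)
y = 3.0 * x1 + rng.normal(0, 1, n)

# Standardize predictors and center the target so no intercept is needed
X = np.column_stack([x1, x2])
X = (X - X.mean(axis=0)) / X.std(axis=0)
y_centered = y - y.mean()

def ridge(X, y, penalty):
    """Closed-form ridge estimate; penalty = 0 gives ordinary least squares."""
    k = X.shape[1]
    return np.linalg.solve(X.T @ X + penalty * np.eye(k), X.T @ y)

beta_ols = ridge(X, y_centered, 0.0)
beta_ridge = ridge(X, y_centered, 10.0)

print("OLS coefficients:  ", np.round(beta_ols, 2))
print("ridge coefficients:", np.round(beta_ridge, 2))
```

With collinear predictors the unpenalized coefficients can be large and unstable. The penalty pulls them toward smaller, more similar values.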

Time Series Regression

Time series regression analyzes the relationship between a dependent variable and independent variables when the data is collected over time. It accounts for autocorrelation and trends in the data and is used in forecasting and studying temporal relationships.

Nonlinear Regression

Nonlinear regression models are used when the relationship between the dependent variable and independent variables is not linear. These models can take various functional forms and require estimation techniques different from those used in linear regression.

Poisson Regression

Poisson regression is employed when the dependent variable represents count data. It models the relationship between the independent variables and the expected count, assuming a Poisson distribution for the dependent variable.

Generalized Linear Models (GLM)

GLMs are a flexible class of regression models that extend the linear regression framework to handle different types of dependent variables, including binary, count, and continuous variables. GLMs incorporate various probability distributions and link functions.

Regression Analysis Formulas

Regression analysis involves estimating the parameters of a regression model to describe the relationship between the dependent variable (Y) and one or more independent variables (X). Here are the basic formulas for linear regression, multiple regression, and logistic regression:

Linear Regression:

Simple Linear Regression Model: Y = β0 + β1X + ε

Multiple Linear Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

In both formulas:

- Y represents the dependent variable (response variable).

- X represents the independent variable(s) (predictor variable(s)).

- β0, β1, β2, …, βn are the regression coefficients or parameters that need to be estimated.

- ε represents the error term (the part of Y that the independent variables do not explain). Its sample counterpart, the residual, is the difference between the observed and predicted values.
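
For the simple linear regression model, the least-squares estimates of β0 and β1 have a closed form. The sketch below computes them in plain Python; the spend and sales figures are made up for illustration.

```python
# Hypothetical data: advertising spend (X) and sales (Y), made-up values.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.0]

n = len(x)
x_mean = sum(x) / n
y_mean = sum(y) / n

# Least-squares estimates: b1 = Sxy / Sxx and b0 = mean(Y) - b1 * mean(X).
s_xy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
s_xx = sum((xi - x_mean) ** 2 for xi in x)
b1 = s_xy / s_xx
b0 = y_mean - b1 * x_mean

# Residuals: observed Y minus the value predicted by the fitted line.
residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
```

For these values the fitted line is approximately Y = 0.09 + 1.97X, and the residuals sum to zero up to rounding, as they always do when the model includes an intercept.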

Multiple Regression:

Multiple regression extends the concept of simple linear regression by including multiple independent variables.

Multiple Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

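This is the same equation as the multiple linear regression model given earlier. Each coefficient βi measures the expected change in Y for a one-unit change in Xi, holding the other independent variables constant.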
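
With more than one independent variable, the coefficients are usually estimated with matrix methods. The sketch below uses NumPy's least-squares solver on made-up data with two hypothetical predictors.

```python
import numpy as np

# Hypothetical predictors: X1 = ad spend, X2 = price (made-up values).
X1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
X2 = np.array([9.0, 7.0, 8.0, 5.0, 6.0, 4.0])
Y = 4.0 + 1.5 * X1 - 0.5 * X2  # generated without noise so the fit is exact

# Prepend a column of ones so that the first coefficient is the intercept b0.
X = np.column_stack([np.ones_like(X1), X1, X2])
beta, *_ = np.linalg.lstsq(X, Y, rcond=None)
b0, b1, b2 = beta
```

Here `b1` is the estimated effect of X1 with X2 held fixed, and `b2` is the estimated effect of X2 with X1 held fixed.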

Logistic Regression:

Logistic regression is used when the dependent variable is binary or categorical. The logistic regression model applies a logistic or sigmoid function to the linear combination of the independent variables.

Logistic Regression Model: p = 1 / (1 + e^-(β0 + β1X1 + β2X2 + … + βnXn))

In the formula:

- p represents the probability of the event occurring (e.g., the probability of success or belonging to a certain category).

- X1, X2, …, Xn represent the independent variables.

- e is the base of the natural logarithm.

The logistic function ensures that the predicted probabilities lie between 0 and 1, allowing for binary classification.
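
The logistic formula can be evaluated directly. In the sketch below, the function name `predict_probability`, the coefficient values, and the 0.5 classification threshold are all assumptions chosen for illustration; the coefficients are not estimated from data.

```python
import math

def predict_probability(coefs, xs):
    """Return p = 1 / (1 + e^-(b0 + b1*x1 + ... + bn*xn))."""
    b0, *bs = coefs
    z = b0 + sum(b * x for b, x in zip(bs, xs))
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical coefficients b0, b1, b2 (illustrative only).
coefs = [-1.0, 0.8, 0.5]

p = predict_probability(coefs, [1.0, 0.4])  # z = -1.0 + 0.8 + 0.2 = 0.0, so p = 0.5
label = 1 if p >= 0.5 else 0                # classify with a 0.5 threshold
```

Larger values of the linear combination push p toward 1 and smaller values push it toward 0, but p never leaves the interval between them.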

Regression Analysis Examples

Examples of regression analysis include the following:

- Stock Market Prediction: Regression analysis can be used to predict stock prices based on various factors such as historical prices, trading volume, news sentiment, and economic indicators. Traders and investors can use this analysis to make informed decisions about buying or selling stocks.

- Demand Forecasting: In retail and e-commerce, real-time regression analysis can help forecast demand for products. By analyzing historical sales data along with real-time data such as website traffic, promotional activities, and market trends, businesses can adjust their inventory levels and production schedules to meet customer demand more effectively.

- Energy Load Forecasting: Utility companies often use real-time regression analysis to forecast electricity demand. By analyzing historical energy consumption data, weather conditions, and other relevant factors, they can predict future energy loads. This information helps them optimize power generation and distribution, ensuring a stable and efficient energy supply.

- Online Advertising Performance: Regression analysis can be used to assess the performance of online advertising campaigns. By analyzing real-time data on ad impressions, click-through rates, conversion rates, and other metrics, advertisers can adjust their targeting, messaging, and ad placement strategies to maximize their return on investment.

- Predictive Maintenance: Regression analysis can be applied to predict equipment failures or maintenance needs. By continuously monitoring sensor data from machines or vehicles, regression models can identify patterns or anomalies that indicate potential failures. This enables proactive maintenance, reducing downtime and optimizing maintenance schedules.

- Financial Risk Assessment: Real-time regression analysis can help financial institutions assess the risk associated with lending or investment decisions. By analyzing real-time data on factors such as borrower financials, market conditions, and macroeconomic indicators, regression models can estimate the likelihood of default or assess the risk-return tradeoff for investment portfolios.

Importance of Regression Analysis

Regression analysis is important for the following reasons:

- Relationship Identification: Regression analysis helps in identifying and quantifying the relationship between a dependent variable and one or more independent variables. It allows us to determine how changes in independent variables impact the dependent variable. This information is crucial for decision-making, planning, and forecasting.

- Prediction and Forecasting: Regression analysis enables us to make predictions and forecasts based on the relationships identified. By estimating the values of the dependent variable using known values of independent variables, regression models can provide valuable insights into future outcomes. This is particularly useful in business, economics, finance, and other fields where forecasting is vital for planning and strategy development.

- Causality Assessment: Correlation does not imply causation, and a regression coefficient on its own does not prove a causal effect. Regression analysis does, however, provide a framework for assessing causality: it lets researchers control for other factors and estimate the direction and strength of the relationship between a specific independent variable and the dependent variable. Combined with a sound study design, this helps in estimating causal effects and identifying significant factors that influence outcomes.

- Model Building and Variable Selection: Regression analysis aids in model building by determining the most appropriate functional form of the relationship between variables. It helps researchers select relevant independent variables and eliminate irrelevant ones, reducing complexity and improving model accuracy. This process is crucial for creating robust and interpretable models.

- Hypothesis Testing: Regression analysis provides a statistical framework for hypothesis testing. Researchers can test the significance of individual coefficients, assess the overall model fit, and determine if the relationship between variables is statistically significant. This allows for rigorous analysis and validation of research hypotheses.

- Policy Evaluation and Decision-Making: Regression analysis plays a vital role in policy evaluation and decision-making processes. By analyzing historical data, researchers can evaluate the effectiveness of policy interventions and identify the key factors contributing to certain outcomes. This information helps policymakers make informed decisions, allocate resources effectively, and optimize policy implementation.

- Risk Assessment and Control: Regression analysis can be used for risk assessment and control purposes. By analyzing historical data, organizations can identify risk factors and develop models that predict the likelihood of certain outcomes, such as defaults, accidents, or failures. This enables proactive risk management, allowing organizations to take preventive measures and mitigate potential risks.
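
The hypothesis-testing item above can be made concrete with the usual t statistic for each coefficient. The sketch below computes standard errors and t statistics for a simple linear regression on made-up data; turning a t statistic into a p-value requires the t distribution with n - k degrees of freedom, which is not computed here.

```python
import numpy as np

# Hypothetical data (made-up values): one predictor and one response.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.0])

# Fit Y = b0 + b1*X by least squares.
X = np.column_stack([np.ones_like(x), x])
beta, *_ = np.linalg.lstsq(X, y, rcond=None)

residuals = y - X @ beta
n, k = X.shape
sigma2 = residuals @ residuals / (n - k)    # unbiased estimate of the error variance
cov_beta = sigma2 * np.linalg.inv(X.T @ X)  # estimated covariance of the coefficients
std_err = np.sqrt(np.diag(cov_beta))
t_stats = beta / std_err                    # test statistic for H0: coefficient = 0
```

A t statistic that is large in absolute value is evidence against the hypothesis that the corresponding coefficient is zero.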

When to Use Regression Analysis

- Prediction: Regression analysis is often employed to predict the value of the dependent variable based on the values of independent variables. For example, you might use regression to predict sales based on advertising expenditure, or to predict a student’s academic performance based on variables like study time, attendance, and previous grades.

- Relationship analysis: Regression can help determine the strength and direction of the relationship between variables. It can be used to examine whether there is a linear association between variables, identify which independent variables have a significant impact on the dependent variable, and quantify the magnitude of those effects.

- Causal inference: Regression analysis can be used to explore cause-and-effect relationships by controlling for other variables. For example, in a medical study, you might use regression to determine the impact of a specific treatment while accounting for other factors like age, gender, and lifestyle.

- Forecasting: Regression models can be utilized to forecast future trends or outcomes. By fitting a regression model to historical data, you can make predictions about future values of the dependent variable based on changes in the independent variables.

- Model evaluation: Regression analysis can be used to evaluate the performance of a model or test the significance of variables. You can assess how well the model fits the data, determine if additional variables improve the model’s predictive power, or test the statistical significance of coefficients.

- Data exploration: Regression analysis can help uncover patterns and insights in the data. By examining the relationships between variables, you can gain a deeper understanding of the data set and identify potential patterns, outliers, or influential observations.
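
For the model evaluation item above, one widely used measure of fit is the coefficient of determination, R². The sketch below computes it in plain Python; the function name `r_squared` and the observed and predicted values are made up for illustration.

```python
def r_squared(observed, predicted):
    """Share of the variation in the observed values explained by the predictions."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))  # unexplained variation
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)              # total variation
    return 1.0 - ss_res / ss_tot

# Hypothetical observed values and the predictions of some fitted model.
observed = [2.1, 3.9, 6.2, 7.8, 10.0]
predicted = [2.06, 4.03, 6.00, 7.97, 9.94]

r2 = r_squared(observed, predicted)
```

A value close to 1 means the predictions track the observed values closely. R² on its own does not show whether added variables genuinely improve the model, so it is usually read alongside significance tests or out-of-sample checks.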

Applications of Regression Analysis

Here are some common applications of regression analysis:

- Economic Forecasting: Regression analysis is frequently employed in economics to forecast variables such as GDP growth, inflation rates, or stock market performance. By analyzing historical data and identifying the underlying relationships, economists can make predictions about future economic conditions.

- Financial Analysis: Regression analysis plays a crucial role in financial analysis, such as predicting stock prices or evaluating the impact of financial factors on company performance. It helps analysts understand how variables like interest rates, company earnings, or market indices influence financial outcomes.

- Marketing Research: Regression analysis helps marketers understand consumer behavior and make data-driven decisions. It can be used to predict sales based on advertising expenditures, pricing strategies, or demographic variables. Regression models provide insights into which marketing efforts are most effective and help optimize marketing campaigns.

- Health Sciences: Regression analysis is extensively used in medical research and public health studies. It helps examine the relationship between risk factors and health outcomes, such as the impact of smoking on lung cancer or the relationship between diet and heart disease. Regression analysis also helps in predicting health outcomes based on various factors like age, genetic markers, or lifestyle choices.

- Social Sciences: Regression analysis is widely used in social sciences like sociology, psychology, and education research. Researchers can investigate the impact of variables like income, education level, or social factors on various outcomes such as crime rates, academic performance, or job satisfaction.

- Operations Research: Regression analysis is applied in operations research to optimize processes and improve efficiency. For example, it can be used to predict demand based on historical sales data, determine the factors influencing production output, or optimize supply chain logistics.

- Environmental Studies: Regression analysis helps in understanding and predicting environmental phenomena. It can be used to analyze the impact of factors like temperature, pollution levels, or land use patterns on phenomena such as species diversity, water quality, or climate change.

- Sports Analytics: Regression analysis is increasingly used in sports analytics to gain insights into player performance, team strategies, and game outcomes. It helps analyze the relationship between various factors like player statistics, coaching strategies, or environmental conditions and their impact on game outcomes.

Advantages and Disadvantages of Regression Analysis

About the author.

Muhammad Hassan

Researcher, Academic Writer, Web developer


Regression Analysis: Definition, Types, Usage & Advantages

Regression analysis is one of the most widely used statistical methods for investigating or estimating the relationship between a dependent variable and a set of independent variables. In statistical analysis, distinguishing between categorical data and numerical data is essential, as categorical data involves distinct categories or labels, while numerical data consists of measurable quantities.